Burp Suite Extension: AWS Signer 2.0 Release

Update April 20, 2022: The updated version of the AWS Signer extension is now available on the BApp Store. It can be installed or updated within Burp Suite through the Extender tab. Alternatively, the extension can be downloaded from the BApp Store here and installed manually.

+ + +

The AWS Signer extension enhances Burp Suite’s functionality for manipulating API requests sent to AWS services. As requests pass through the proxy, the extension signs (or re-signs) them using user-supplied credentials and the AWS SigV4 algorithm. This allows the user to modify or replay a request in Burp Suite and still have the AWS service accept it. Eligible requests are automatically identified by the presence of both the X-Amz-Date and Authorization headers.
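To make the eligibility rule concrete, here is a minimal Python sketch of that check. It is an illustration only and not the extension's code; the function name `is_eligible_for_signing` is hypothetical, and header names are compared case-insensitively as an assumption.

```python
from typing import Mapping


def is_eligible_for_signing(headers: Mapping[str, str]) -> bool:
    """Return True when both headers used to spot AWS requests are present.

    Hypothetical helper: header names are compared case-insensitively,
    which is an assumption made for this sketch.
    """
    names = {name.lower() for name in headers}
    return {"x-amz-date", "authorization"} <= names
```

A request carrying only one of the two headers would be passed through unsigned under this rule.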

The extension was initially released by NetSPI’s Eric Gruber in October 2017 and has been maintained by NetSPI’s Andrey Rainchik. The extension has served as a valuable tool on hundreds of penetration tests over the years.

Today, I’m releasing version 2.0 which brings functional and usability improvements to the extension. An introduction to the enhancements is provided below. For more detailed information on how to use the extension, please see the updated documentation on GitHub.

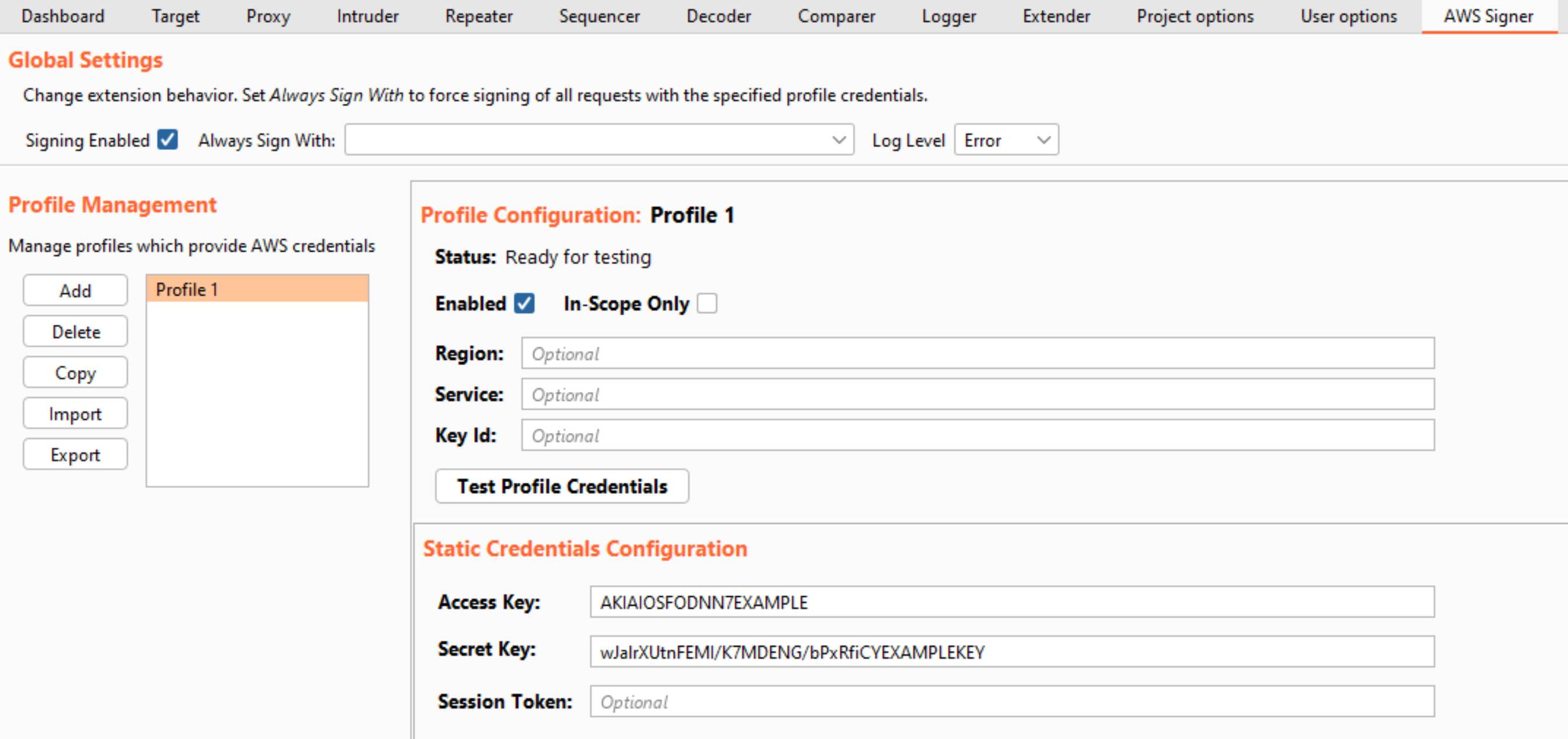

The New Burp Suite Extension Interface

The most obvious difference upon loading the new version is that the extension’s UI tab in Burp Suite looks very different. All the key functionality from the original version of the extension remains.

At the top of the tab, we have “Global Settings,” which controls extension-wide behavior. The user can enable or disable the extension entirely through a checkbox. The user can also select a profile in the “Always Sign With” dropdown menu. If set, all eligible requests will be signed with the selected profile’s credentials. Speaking of profiles…

Introducing Profile Management

A profile represents a collection of settings for signing requests. As with the previous version of AWS Signer, a profile can specify which region and service should be used when signing the request. Alternatively, that information can be extracted from the request itself via the Authorization header. The new version of the extension introduces import and export functionality for profiles.
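For readers unfamiliar with how the region and service appear in a SigV4 Authorization header, the sketch below pulls them out of the `Credential=` scope. It is a rough illustration rather than the extension's parser; `CredentialScope` and `parse_credential_scope` are hypothetical names, and the header value in the usage note is a made-up example.

```python
import re
from typing import NamedTuple, Optional


class CredentialScope(NamedTuple):
    access_key_id: str
    date: str
    region: str
    service: str


# SigV4 scope layout: <access key>/<YYYYMMDD>/<region>/<service>/aws4_request
_CREDENTIAL_RE = re.compile(
    r"Credential=(?P<akid>[^/\s,]+)/(?P<date>\d{8})/"
    r"(?P<region>[^/\s,]+)/(?P<service>[^/\s,]+)/aws4_request"
)


def parse_credential_scope(authorization: str) -> Optional[CredentialScope]:
    """Extract the credential scope, or return None if it is not found."""
    match = _CREDENTIAL_RE.search(authorization)
    if match is None:
        return None
    return CredentialScope(
        match["akid"], match["date"], match["region"], match["service"]
    )
```

For example, a header containing `Credential=AKIDEXAMPLE/20150830/us-east-1/iam/aws4_request` would yield the region `us-east-1` and the service `iam`.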

You Can Now Import Profiles from Multiple Sources

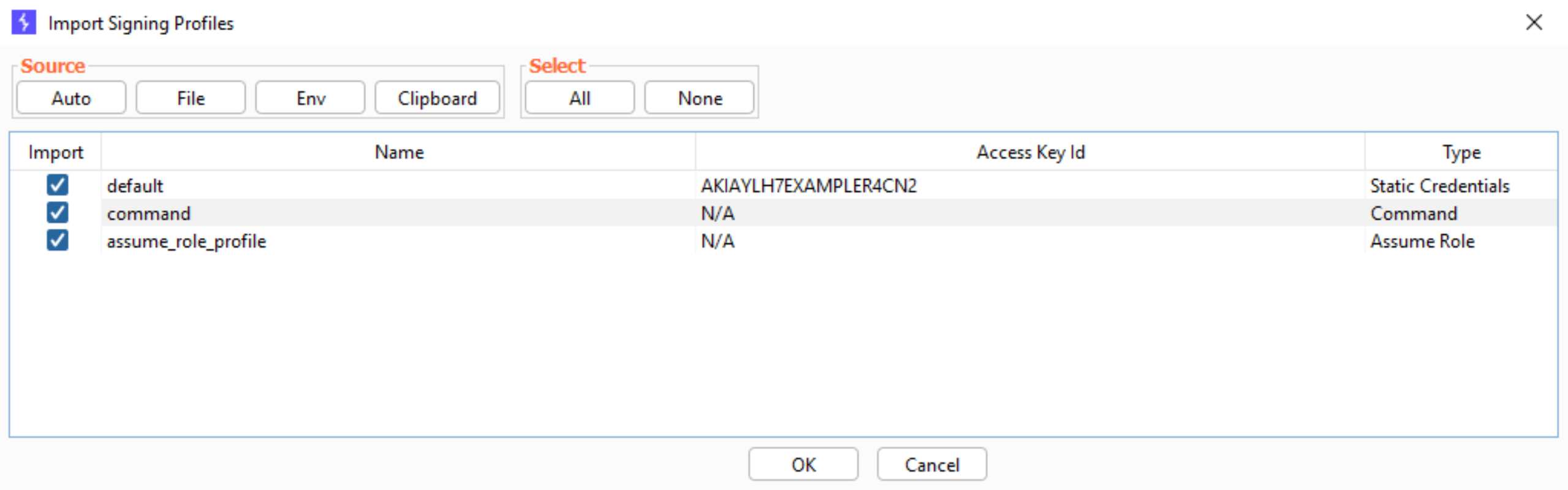

Upon clicking the Import button, a pop-up window will appear to guide the user through the import process.

Profiles can be imported from a variety of sources using the buttons at the top of the pop-up window:

- Auto: Automatically sources profiles from default credential files (as used by the AWS CLI), the clipboard, and the following environment variables:

  - AWS_ACCESS_KEY_ID

  - AWS_SECRET_ACCESS_KEY

  - AWS_SESSION_TOKEN

- File: Allows the user to specify which file to load profiles from. This is useful for importing previously exported profiles.

- Env: Attempts to import a profile based on the standardized AWS CLI environment variables listed above.

- Clipboard: Attempts to automatically recognize and import a profile based on credentials currently copied and held in the user’s clipboard.

After sourcing the profiles, the user can select which ones to bring into the extension using the checkboxes beside each profile.
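As a rough idea of what the Env and File sources involve, here is a small Python sketch that builds profiles from the environment variables listed above and from text in the INI format used by the AWS CLI credentials file. This is not the extension's import code; the function names and the dictionary layout are assumptions made for illustration.

```python
import configparser
import os
from typing import Dict, List, Mapping, Optional

Profile = Dict[str, Optional[str]]


def profile_from_env(env: Mapping[str, str] = os.environ) -> Optional[Profile]:
    """Build a profile from the standard AWS environment variables."""
    access_key = env.get("AWS_ACCESS_KEY_ID")
    secret_key = env.get("AWS_SECRET_ACCESS_KEY")
    if not access_key or not secret_key:
        return None  # nothing usable to import
    return {
        "name": "env",  # hypothetical profile name
        "access_key_id": access_key,
        "secret_access_key": secret_key,
        "session_token": env.get("AWS_SESSION_TOKEN"),  # optional
    }


def profiles_from_credentials_text(text: str) -> List[Profile]:
    """Parse AWS CLI style credentials (INI sections, one per profile)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    profiles: List[Profile] = []
    for section in parser.sections():
        access_key = parser.get(section, "aws_access_key_id", fallback=None)
        secret_key = parser.get(section, "aws_secret_access_key", fallback=None)
        if not access_key or not secret_key:
            continue  # skip sections without a complete key pair
        profiles.append({
            "name": section,
            "access_key_id": access_key,
            "secret_access_key": secret_key,
            "session_token": parser.get(section, "aws_session_token", fallback=None),
        })
    return profiles
```

Interpolation is disabled so that any `%` characters in a value are read literally instead of being treated as `configparser` placeholders.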

Once the profiles are imported and configured by the user, all of the profiles can be easily exported to a file via the Export button. If working with a teammate, the exported profiles could be shared between multiple testers to reduce configuration time. This also allows for easily storing and restoring the extension’s configuration without including sensitive credentials within the project’s Burp Suite configuration.

New: Profile Types

A key improvement in the latest version of the extension is the introduction of multiple profile types. Each profile type uses a different method for sourcing the credentials used to sign requests. There are currently three profile types, each of which is described below.

Static Credentials Profile

This is the profile type that previous users of the extension will be most familiar with. The user simply provides an access key, a secret key and (optionally) a session token. When this profile is used to sign a request, these credentials are used to generate the signature.
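For context on how a static secret key turns into a signature, below is a minimal Python sketch of the publicly documented SigV4 key-derivation chain. It is not taken from the extension, and the function names are hypothetical. Building the string to sign (the canonical request and its hash) is omitted here.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date_stamp: str,
                       region: str, service: str) -> bytes:
    """Derive the SigV4 signing key; date_stamp is YYYYMMDD."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(string_to_sign: str, signing_key: bytes) -> str:
    """Return the hex signature placed in the Authorization header."""
    return hmac.new(
        signing_key, string_to_sign.encode("utf-8"), hashlib.sha256
    ).hexdigest()
```

Because the date, region, and service are all mixed into the key, a signature produced for one region or service will not validate for another. When a session token is supplied, it travels in a separate `X-Amz-Security-Token` header and is not part of the key derivation.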

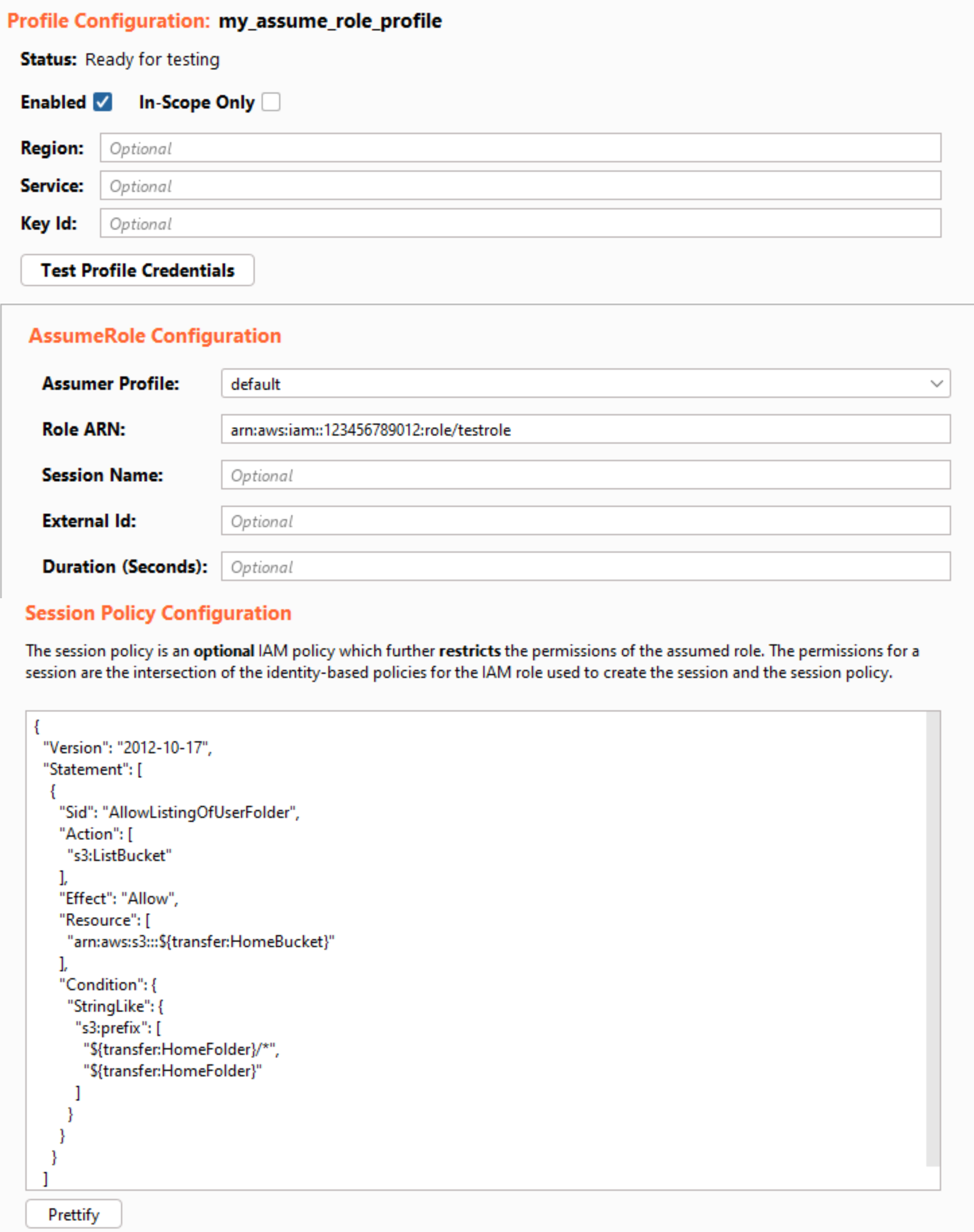

AssumeRole Profile

The AssumeRole profile type allows the user to sign requests with the credentials that were returned after assuming an IAM role. The user must provide at least the following:

- The role ARN of the IAM role to assume.

- A profile that provides credentials to assume the role. This is referred to as the “Assumer Profile.”

When signing a request with an AssumeRole profile, the extension will first call the AssumeRole API using the Assumer profile to obtain an access key, secret key, and session token for the role. Using a profile (rather than static credentials) allows the extension to handle complex chaining of multiple profiles to fetch the necessary credentials.

After retrieving the credentials, the extension will cache and reuse them to avoid continuously invoking the AssumeRole API. The lifetime of the cached credentials is configurable through the Duration setting.

The user may also provide a session policy to be applied when assuming the role. A session policy is an IAM policy that further restricts the IAM permissions granted to a temporary session for a role. Session policies are useful for testing and confirming intended behavior with a specific policy because they are applied immediately upon the AssumeRole call, with zero propagation delay, and can be quickly modified. This eliminates the frustrating wait for the eventual consistency of IAM permissions.
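The caching behavior described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the extension's implementation: `CachedCredentials`, the `fetch` callback, and the sample policy are all hypothetical. In a real setup, `fetch` would wrap a call to the STS AssumeRole API, passing the session policy as a JSON string.

```python
import json
import time
from typing import Callable, Dict, Optional

Credentials = Dict[str, str]

# Hypothetical session policy: narrows the session to reading S3 objects,
# regardless of what else the role itself is allowed to do.
SESSION_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "*"}
    ],
}
SESSION_POLICY_JSON = json.dumps(SESSION_POLICY)


class CachedCredentials:
    """Reuse fetched credentials until the configured duration elapses."""

    def __init__(self, fetch: Callable[[], Credentials],
                 duration_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._duration = duration_seconds
        self._clock = clock
        self._cached: Optional[Credentials] = None
        self._expires_at = 0.0

    def get(self) -> Credentials:
        now = self._clock()
        if self._cached is None or now >= self._expires_at:
            # Cache miss or expired: call the (assumed) AssumeRole wrapper.
            self._cached = self._fetch()
            self._expires_at = now + self._duration
        return self._cached
```

Taking the clock as a parameter keeps the cache easy to exercise without waiting for real time to pass.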

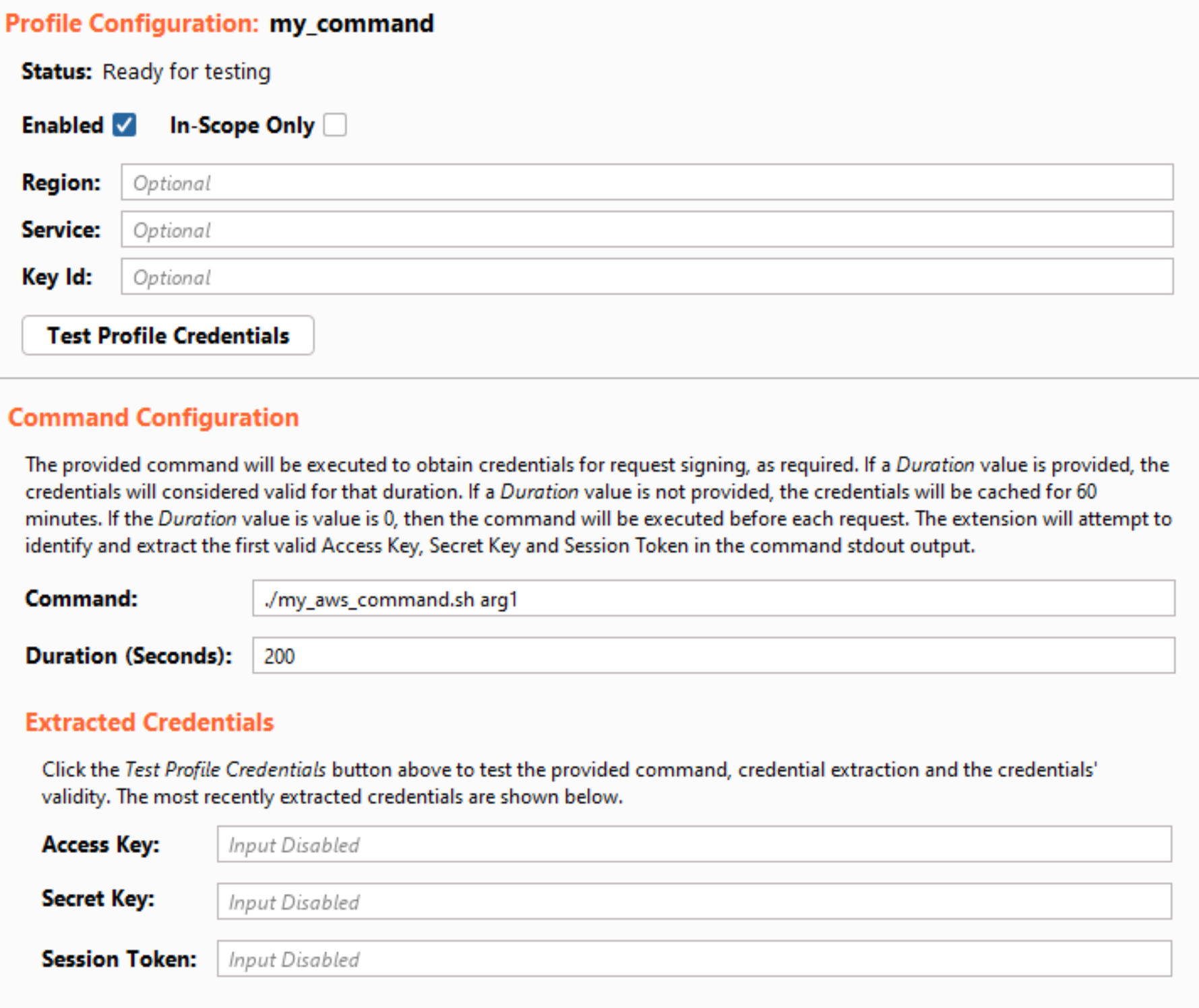

Command Profile

The final profile type allows the user to supply an OS command or external program that provides the signing credentials. The command will be executed using either cmd (Windows) or sh (non-Windows), and the extension will attempt to parse the credentials from the command’s standard output. The output does not have a set format: credential extraction is based on pattern matching, and the extension is designed to recognize valid credentials in a variety of output formats.

Similar to the AssumeRole profile, the returned credentials will be cached and reused where possible. The user can configure the lifetime of the credentials through the Duration field. For ease of testing, the UI also displays the latest credentials returned after clicking the “Test Profile Credentials” button.
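To give a feel for pattern-based extraction, here is a small Python sketch that runs a command through the platform shell and searches its output for credentials. The regular expressions are assumptions chosen for this example and are not the patterns the extension uses; `run_credentials_command` and `parse_credentials` are hypothetical names.

```python
import re
import subprocess
from typing import Dict, Optional

_SEP = r"""[\s"':=]+"""  # separators seen in JSON, INI, and shell exports
_PATTERNS = {
    "access_key_id": re.compile(
        r"(?:aws_)?access_?key_?id" + _SEP + r"([A-Z0-9]{16,128})",
        re.IGNORECASE),
    "secret_access_key": re.compile(
        r"(?:aws_)?secret_?access_?key" + _SEP
        + r"([A-Za-z0-9/+=]{40})(?![A-Za-z0-9/+=])",
        re.IGNORECASE),
    "session_token": re.compile(
        r"(?:aws_)?session_?token" + _SEP + r"([A-Za-z0-9/+=]{16,})",
        re.IGNORECASE),
}


def parse_credentials(output: str) -> Optional[Dict[str, Optional[str]]]:
    """Find credentials in free-form text; None if no key pair is found."""
    found: Dict[str, Optional[str]] = {}
    for name, pattern in _PATTERNS.items():
        match = pattern.search(output)
        found[name] = match.group(1) if match else None
    if not found["access_key_id"] or not found["secret_access_key"]:
        return None
    return found


def run_credentials_command(command: str, timeout: float = 30.0):
    """Run the command via the platform shell and parse its stdout."""
    # shell=True uses sh on POSIX systems and cmd.exe on Windows.
    result = subprocess.run(command, shell=True, capture_output=True,
                            text=True, timeout=timeout)
    return parse_credentials(result.stdout)
```

With patterns like these, the same parser can handle JSON such as the output of an STS call, INI-style lines, or `export NAME=value` statements, with the session token treated as optional.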

Signing Improvements

The AWS Signer extension should properly sign well-formatted requests to any AWS service using SigV4. In older versions of the extension, some S3 API requests were not handled properly and would be rejected by the S3 service. The actual signing process is now delegated to the AWS Java SDK, which should provide robust signing support for a wide variety of services and inputs.

The extension also provides experimental support for SigV4a. At the time of writing, there is minimal published information about the SigV4a signing process. However, this functionality is available in the AWS Java SDK. As such, the AWS Signer extension attempts to recognize SigV4a requests and perform the proper signing process via the SDK.

This is functional for the currently available multi-region access points in S3, which require SigV4a. However, as more information becomes available and as more services adopt this signing process, the extension may not handle all use cases.
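One way to tell the two signing schemes apart is the algorithm identifier at the start of the Authorization header: `AWS4-HMAC-SHA256` for SigV4 and `AWS4-ECDSA-P256-SHA256` for SigV4a. The Python sketch below shows that check. Whether the extension detects SigV4a this way is an assumption, and `detect_signing_algorithm` is a hypothetical name.

```python
from typing import Optional

_ALGORITHMS = {
    "AWS4-HMAC-SHA256": "SigV4",
    "AWS4-ECDSA-P256-SHA256": "SigV4a",
}


def detect_signing_algorithm(authorization: str) -> Optional[str]:
    """Classify an Authorization header value by its leading algorithm name."""
    scheme = authorization.strip().split(" ", 1)[0]
    return _ALGORITHMS.get(scheme)
```

SigV4a requests also carry an `X-Amz-Region-Set` header and leave the region out of the credential scope, which is what lets a single signature apply across regions.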

Summary of Changes in Burp Suite Extension: AWS Signer 2.0

I sincerely hope you enjoy the changes in version 2.0 of the AWS Signer extension for Burp Suite. NetSPI will coordinate with PortSwigger to ensure this update is made available in the BApp Store as soon as possible. In the meantime, the update is available from the Releases page of the project’s GitHub repository. Please submit all bug reports and feature requests as issues on GitHub, and we’ll address them as we’re able.
