Month: June 2020

Your Phone Really is Listening to You: The Evolution of Data Privacy, GDPR, and Why/How to Ensure Compliance

My first long-term job was in architecture and software development for BlackBerry, a device that put security first, so everything we did was focused on privacy and security, including the way we developed the operating system. I was there for over 10 years, and by the end of my time there I was running the architecture team for the handheld software. This privacy-first notion was foundational in my move into privacy and security, and in helping other people understand why it is so critical.

Our Limited Understanding of Data Privacy

The concept of data privacy has been developed over the last decade or so. Most people are not as tech savvy about what they’re putting online as they think they are. Even most people in the technical fields, including developers, haven’t read the terms and conditions on any of the software platforms that they’re using on a daily basis.

I’ve read these terms and conditions for several apps – they’re boring and long – and people generally don’t realize what they’re agreeing to give away. For example, APIs, which are the way that coders get access to information inside the systems, aren’t just sharing one particular piece of information; they’re also sharing all of this other data about you.

Data privacy is similar to how we have faith in the safety of a microwave, but very few people actually know how it works. You just know it heats your food.

I find it’s the same with things like Facebook and Google. Google is going to return your search results, but you don’t stop to think about how much data Google is collecting on you in order to get you the results you need, or what Facebook might be doing in order to set you up with the right people online so you can share photos. We don’t question the technology that comes into our lives. And because regulations and laws didn’t exist when these platforms were built, the companies behind them were able to take all sorts of liberties with data that they should never have been able to take.

Google search results are very tailored to you because of the amount of data that they’re gathering about you to be able to serve you content in a way that’s most relevant to you. For example, if two people search the same keywords on Google or another search engine, the results and order of those results tend to vary significantly based on web browsing habits, email content, things they’ve clicked on in the past in Google, etc.

While we are accustomed to this customization, we also need to recognize that it’s based on so much information. It’s not necessarily a bad thing, but it is a trade-off to be aware of.

What GDPR Means for Data Privacy

GDPR stands for the General Data Protection Regulation and comes from the European Union. It’s essentially a set of rules that should have been in place a long time ago. As I mentioned earlier, software products have, in a way, advanced without regulations and rules. While Europe has always had privacy laws, they finally put their foot down and said to companies, “You can’t use and abuse individuals’ data without their knowledge and consent of what you’re doing.”

GDPR puts the individual first.

Pieces of GDPR include:

- Companies have to have consent to collect data from people.

- Users have to have a clear understanding of what the company is doing with their data; it cannot be all legalese.

- Companies have to have a retention period, so if you’re collecting personal data about someone and it’s only valid for a certain number of days, you need to get rid of that data at that point. If you don’t have a legal or business reason to keep the data, you should be deleting it.

- Users also have individual rights, so they’re able to go back to a company and ask to see all the data the company has about them. Users can also ask to be removed from the company’s system, which is called the right to erasure.
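The retention requirement in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PersonalRecord` fields, the `legal_hold` flag, and the 90-day period are hypothetical names and values, not anything GDPR prescribes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class PersonalRecord:
    subject_id: str            # hypothetical identifier for the individual
    collected_at: datetime     # when the personal data was collected
    retention_days: int        # retention period chosen by the company (assumption)
    legal_hold: bool = False   # True if a legal or business reason requires keeping it


def is_expired(record: PersonalRecord, now: datetime) -> bool:
    """Expired means the retention period has passed and nothing requires keeping the data."""
    deadline = record.collected_at + timedelta(days=record.retention_days)
    return now >= deadline and not record.legal_hold


def purge_expired(records, now):
    """Return only the records that may still be kept."""
    return [r for r in records if not is_expired(r, now)]


now = datetime(2020, 6, 30, tzinfo=timezone.utc)
records = [
    PersonalRecord("alice", datetime(2020, 1, 1, tzinfo=timezone.utc), 90),
    PersonalRecord("bob", datetime(2020, 6, 1, tzinfo=timezone.utc), 90),
    PersonalRecord("carol", datetime(2020, 1, 1, tzinfo=timezone.utc), 90, legal_hold=True),
]
kept = purge_expired(records, now)
print([r.subject_id for r in kept])  # ['bob', 'carol']
```

In a real system the purge would likely run as a scheduled job against the data store, and the deletion itself would need to reach backups and downstream copies as well.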

While this is a European-based regulation, it affects any customers who are European and any business that’s based out of Europe, so it ends up being fairly global. For example, if your company is out of North America or India or Africa and you serve European customers, you have to comply with GDPR.

In addition, other countries are looking at similar regulations. Brazil is supposed to be launching its LGPD, which is its counterpart to GDPR, and eventually Canada will adopt GDPR-like regulation. CCPA, the California Consumer Privacy Act, is more targeted at preventing your data from being resold, in response to the Facebooks and Googles of the world taking individuals’ data and reselling it without their knowledge. It’s only a matter of time before other countries and states get on board with more of these regulations to protect individuals.

Consequences of an Organization Failing to Achieve GDPR Compliance

GDPR carries significant fines, and data protection authorities are already imposing them. There are several websites that track the fines being applied. One example I’ve seen is a company in Ireland where a user asked to see their information and the company said no. They asked again several times and the company still said no. That individual then contacted the data protection authority and the company was fined $200,000 for not answering the individual.

The fines are based on due diligence. Every company is going to be breached at some point. The question is, as a company, have you done the best job you can to protect an individual and their personal information? Have you limited what you’re collecting? Are you storing it safely?

If an individual asks to see the data you have about them, you have to let them see it. If you do these things and you are ever breached, you will face a small fine or none at all. If you haven’t done them, you haven’t even tried, and that’s where these large fines are coming from.

How Can Companies Make Sure They Are GDPR Compliant?

The regulations themselves are a legal framework, and online you can find checklists from a legal perspective asking if you’ve taken certain action – Do you have a privacy policy that covers the following things? Do you have an incident response plan?

What I find the checklists miss, though, is the proper technical implementation of a lot of the requirements, which is something I’m helping companies put into place. For example, privacy by design is a set of seven principles, adopted globally, for how best to set up individual-first privacy over innovation. It means creating a foundation of privacy and ensuring that you’ve evaluated the data you’re collecting, whether you need it, how you’re storing it, etc. But then companies need to optimize some of these things. If the checkbox on GDPR is “Can a user access their data?”, then from a foundational and technical level, how are you authenticating to ensure that the user is who they say they are? How are you optimizing it so that if 100 people request their data on the same day, you can handle that in your company? Setting up the support at the bottom will help everything else fall into place at the top.
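To make the two questions above concrete, here is a minimal Python sketch of a data access request flow: the requester first proves they control the contact details already on file, and confirmed requests go into a queue so that a burst of requests can be worked through in order. The in-memory `USER_DATA`, `PENDING_TOKENS`, and `REQUEST_QUEUE` structures and all function names are hypothetical stand-ins, not a recommended production design.

```python
import hmac
import json
import secrets
from collections import deque

# Hypothetical in-memory stand-ins for a real user database and job queue.
USER_DATA = {"user-1": {"email": "a@example.com", "country": "IE"}}
PENDING_TOKENS = {}
REQUEST_QUEUE = deque()


def start_access_request(user_id: str) -> str:
    """Issue a one-time token that would be sent to the contact details already on file."""
    token = secrets.token_urlsafe(16)
    PENDING_TOKENS[user_id] = token
    return token


def confirm_access_request(user_id: str, presented_token: str) -> bool:
    """Queue the request only if the token matches. The token is consumed either way."""
    expected = PENDING_TOKENS.pop(user_id, None)
    if expected is None:
        return False
    # Constant-time comparison avoids leaking how much of the token matched.
    if not hmac.compare_digest(expected.encode(), presented_token.encode()):
        return False
    REQUEST_QUEUE.append(user_id)
    return True


def process_queue() -> list:
    """Drain the queue so many same-day requests are handled one at a time."""
    exports = []
    while REQUEST_QUEUE:
        user_id = REQUEST_QUEUE.popleft()
        exports.append(json.dumps({"user_id": user_id, "data": USER_DATA.get(user_id, {})}))
    return exports
```

A caller would run `start_access_request`, deliver the token out of band, call `confirm_access_request` with what the user submits, and let a background worker call `process_queue`. Separating confirmation from export is what lets the export step scale independently.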

Why Your Company Should Prioritize Data Privacy

Many companies, especially startups, say they don’t have the budget for data privacy because they want to focus that money on development. But data privacy is like an insurance policy. For example, simply having an incident response plan will save you $500,000 when you have a breach. If you don’t have a plan, then when you’re breached, you are scrambling to find your stakeholders, to get on a phone bridge, to figure out who you need to contact to do your forensics, etc.

Creating a plan should take your company less than a week, and you could either do it on your own or hire a consultant to do it. It should cost less than $10,000 from start to finish, and it saves you $500,000.

Putting privacy in place reduces your risk of a breach. If you take these steps now to set your company up right, you won’t face these large fines and reputation costs down the line.

How Individuals Can Protect Their Data

The number one thing people can do is set their privacy settings. Go into your privacy settings, go through each individual setting, and turn off your microphone, your camera, and your location. If you haven’t explicitly turned off microphone use, your phone is listening to you at all times. This is why people say, for example, “I was talking about going to New York, and now I’m getting all these ads for New York.” You want apps to use the microphone, camera, or location tracking only when that app is in use.

Recent Posts

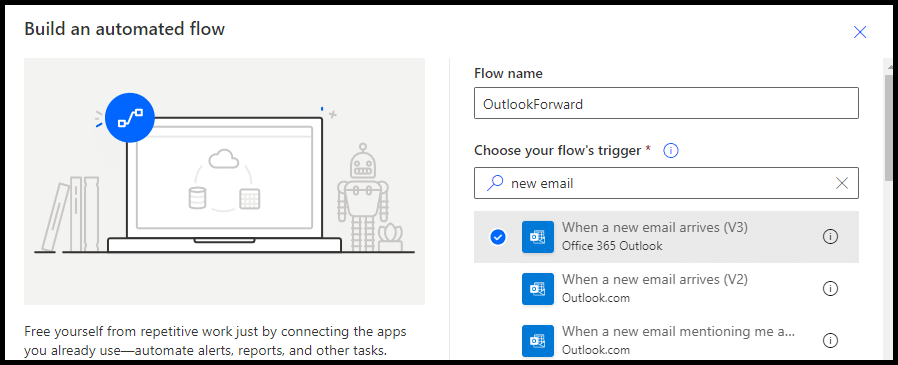

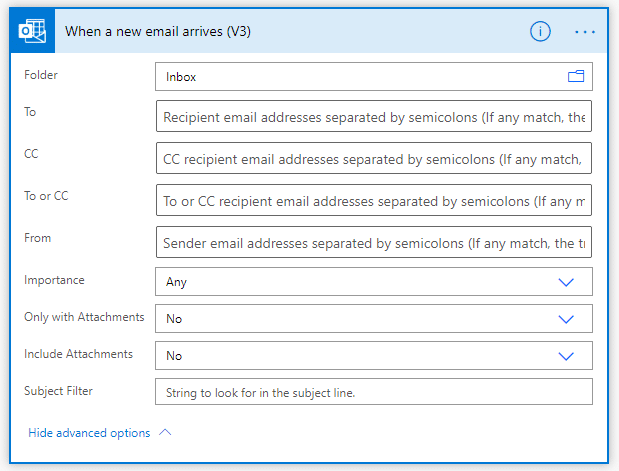

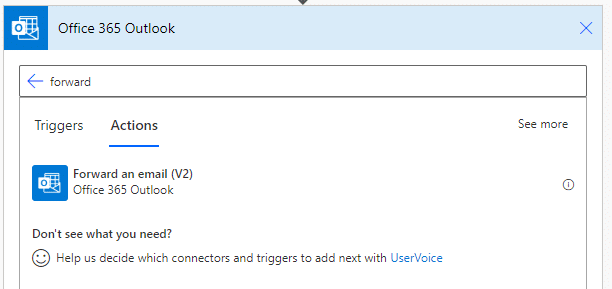

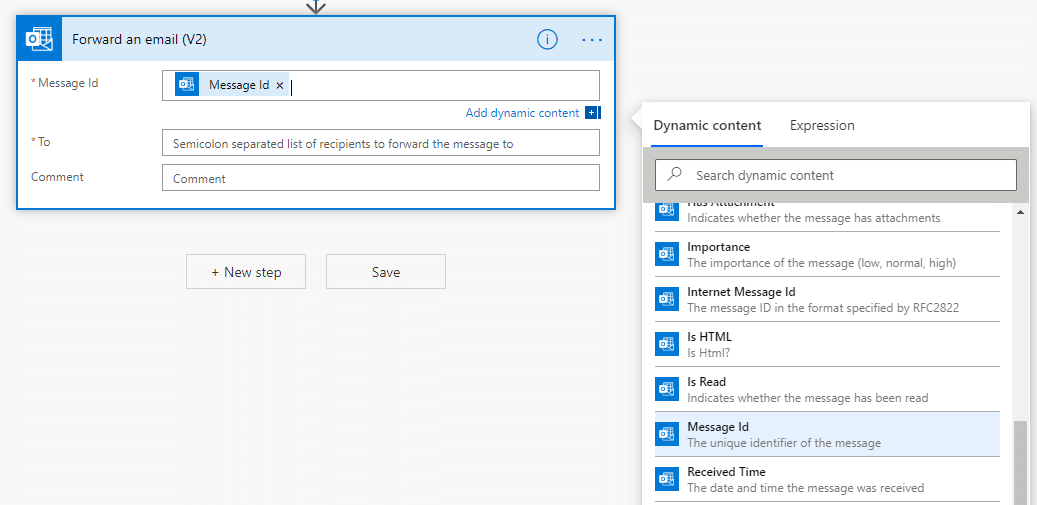

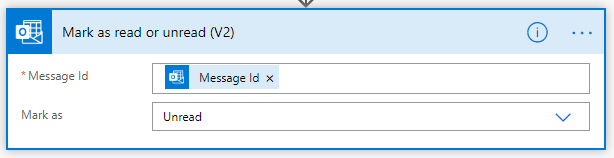

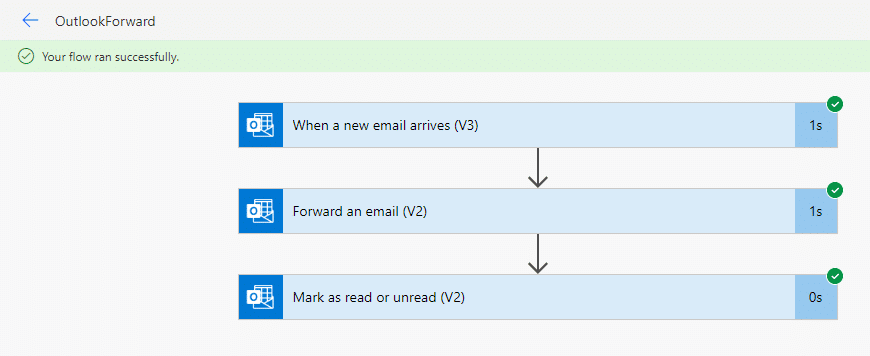

Bypassing External Mail Forwarding Restrictions with Power Automate

COVID-19: Evaluating Security Implications of the Decisions Made to Enable a Remote Workforce

I was recently a guest on the CU 2.0 podcast, where I talked with host Robert McGarvey about enabling a remote workforce while staying secure during the COVID-19 pandemic and how the pandemic has actually inspired digital transformation in some organizations.

I wanted to share some highlights in a blog post, but you can also listen to the full interview here.

Security Implications of Decisions Made to Enable a Remote Workforce

In spring 2020, when the COVID-19 pandemic first started, a lot of organizations weren’t quite ready to enable their employees to work from home. It became a race to get people functional while working remotely, and security was an afterthought – if that. During this process, a lot of companies started to realize they had limitations in terms of how much VPN licensing they had, or that many employees who had been required to work in the office didn’t have laptops they could take home.

For many companies, when they started working remotely in early spring, they thought it would be for a relatively short time. As such, many are just now starting to realize they haven’t truly assessed the risk they’re exposing themselves to. Only now, two to three months later, are many starting to focus their efforts on understanding the security implications of the decisions they made this past spring to enable a remote workforce.

For example, companies have limited licenses for virtual desktops, virtual images, or even the operating systems and operating system versions that they’re using. To quickly get employees access to their work systems and assets from home, a lot of companies ended up re-enabling operating systems that they had previously disabled, including bringing back Windows XP, Windows 7, and Windows 8 machines that they had stopped using. There are challenges to this, including not having proper patches and updates for issues that are discovered. Outdated operating systems have known vulnerabilities that could be exploited. Many companies made certain decisions from a business perspective, but are now asking what type of security risk they’ve exposed themselves to.

It’s important to understand the basic principles of security, and make sure you’re thinking through those as you enable your workforce to be more effective while working from home. Organizations have to strike the balance between their business objectives, the function they’re trying to accomplish, and the security risks they’re exposing themselves to.

There’s also a lack of education around remote access technologies. For example, when you’re working from home, you need to ensure you’re always connected to your organization’s VPN when you’re browsing the internet or doing work-related activities, because that traffic is then encrypted between your device and your organization’s network, which makes it far harder for anyone else on the internet to intercept and view.

It’s critical to use the right technologies correctly and enable things like multi-factor authentication. With multi-factor authentication, if a hacker has your password, at least they don’t have that second factor that comes to you via email, text message, phone call, or an authenticator application.
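As an illustration of the authenticator application case, the sketch below computes a time-based one-time password in the style of RFC 6238 using only the Python standard library, and shows that a stolen password alone does not pass the login check. The `verify_login` helper and its parameters are hypothetical; a real deployment should rely on a vetted library and also handle clock drift, rate limiting, and secure secret storage.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (HMAC-SHA1 variant, RFC 6238 style)."""
    counter = unix_time // step                       # number of 30-second steps since the epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                           # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_login(password_ok: bool, secret: bytes, submitted_code: str, unix_time: int) -> bool:
    """Hypothetical check: both the password and the second factor must be correct."""
    expected = totp(secret, unix_time)
    code_ok = hmac.compare_digest(expected.encode(), submitted_code.encode())
    return password_ok and code_ok


# The shared secret below is the well-known RFC 6238 test secret, used only for illustration.
secret = b"12345678901234567890"
print(totp(secret, 59, digits=8))                    # 94287082 (RFC 6238 test vector)
print(verify_login(True, secret, "000000", 59))      # False: password alone is not enough
```

Because the code depends on a secret the attacker does not hold and changes every 30 seconds, a leaked password by itself fails `verify_login`.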

I strongly believe that today we must have multi-factor authentication enabled on everything. It’s almost negligent of an organization not to enable multi-factor authentication, especially given how prevalent password breaches have become, including database breaches where employees’ or clients’ usernames and passwords have been exposed.

The Weakest Link in Today’s Technology Ecosystem: People

The weakest link in today’s technology ecosystem is the human element.

As soon as the COVID-19 pandemic started, we noticed there was a significant increase in phishing emails and scams that attackers started deploying and that they were very specifically geared towards the COVID-19 pandemic itself. Some examples include:

- Emails pretending to be from your doctor’s office with attachments describing steps you need to take to avoid getting the virus or to support your immune system.

- Emails supposedly from your business partners with FAQ attachments containing details around what they were doing to protect their business from a business continuity perspective during the pandemic.

- Emails from fake employees claiming they had contracted the virus and the attachment contained lists of people they had come in contact with.

- Emails pretending to be from HR, letting people know that their employment had been terminated, and they needed to click on a link to claim their severance check.

Spam filters are only updated once they see the new techniques attackers are using. Much of the wording in these phishing emails was COVID-19 language that the filters hadn’t seen before, so the emails weren’t being caught – and many people fell victim to these attacks. Once this happens, you’re exposed to potential ransomware. Once one employee downloads a file containing malware onto their machine, it can eventually propagate across your whole network to other connected machines. And, like the real virus, it can propagate very fast and hide its symptoms until a certain time or a particular event triggers a payload.

There are a lot of similarities between a medical virus and a computer virus, but the biggest difference in the digital world is that the spreading of the virus can happen exponentially faster, because everything moves faster on the internet.

COVID-19: Inspiring Digital Transformation

I believe there needs to be an increased focus on education around the importance of cyber security going beyond the typical targeted groups. Everyone within an organization is responsible for cyber security – and getting that broad understanding and education to all employees is key.

We’re seeing a lot of transformation today in terms of how we work and how the norm is going to change given this situation. As such, there needs to be increased awareness around security across the board. People have to be the first line of defense, making good and sensible decisions before they take any specific action online. There are a lot of very simple hygiene-related things that are missing today and need to be done better – and people just need better education around these items.

Additionally, I believe organizations are going to take steps to make sure that they’re doing things like enabling multi-factor authentication for their employees to connect remotely and making sure that VPN access is required for you to work on your machine if you’re remote.

Cloud-based software is also going to be key and in fact, I can’t imagine organizations being very successful at sending people to work from home if they weren’t leveraging the cloud to quickly scale their ability to serve their employees and their customers in a different format.

In many ways, COVID-19 has inspired a lot of transformation and innovation in how we approach the work culture. People are also becoming more aware of what actions they’re taking online and thinking about security implications of the actions that they’re taking.

Recent Posts

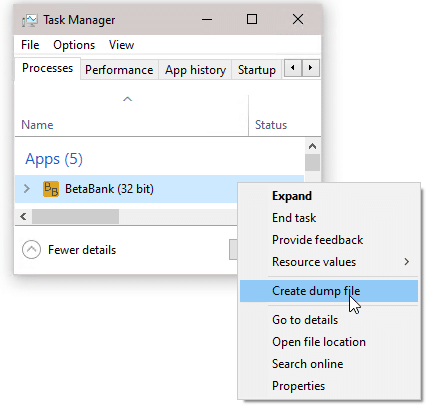

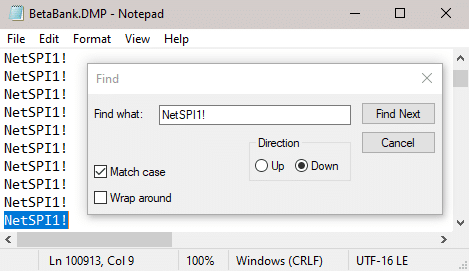

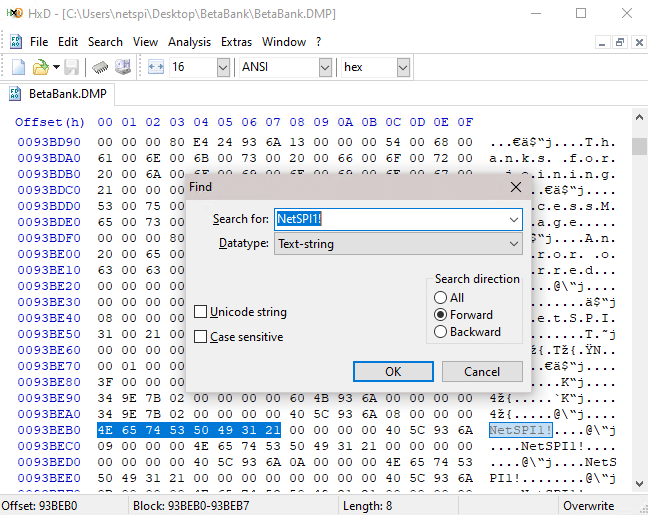

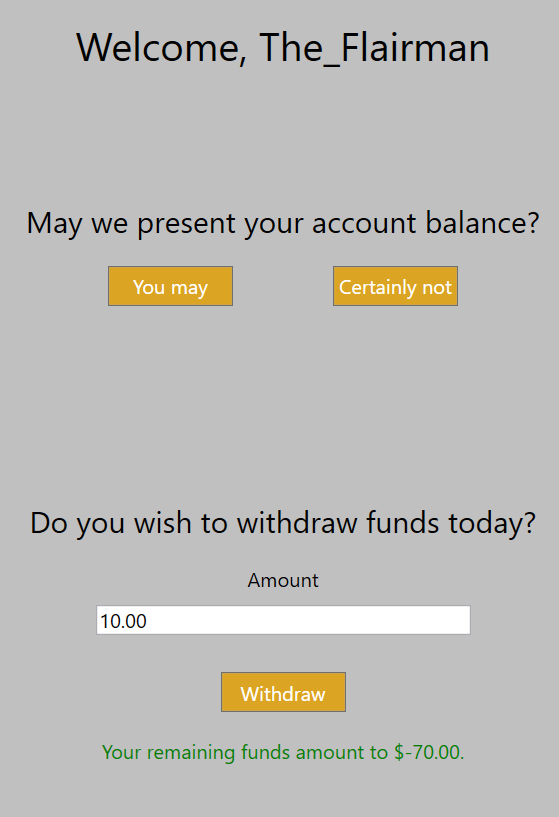

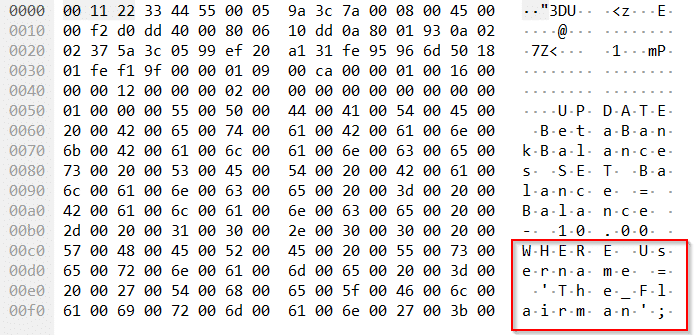

Introduction to Hacking Thick Clients: Part 6 – The Memory

TechTarget: Invest in new security talent with cybersecurity mentorships

On June 16, 2020, NetSPI Managing Director Nabil Hannan was featured in TechTarget.

What do NVIDIA’s Jensen Huang, Salesforce’s Marc Benioff and Microsoft’s Satya Nadella have in common? They were all deemed the greatest business leaders of 2019, according to Harvard Business Review’s “The CEO 100” list. But another commonality they share is that each has had mentors to help guide them through their careers in technology and get them to where they are today.

Mentorship is critical in every industry but given the immense opportunity for career growth in the cybersecurity industry today, having the right guidance is a must. The industry faces many challenges from a staffing perspective — from the skills shortage to employee burnout — making the role of a mentor that much more important as others navigate these challenges. While mentorship is often considered subjective, there are a few best practices to follow to ensure you’re establishing a solid foundation in the mutually beneficial relationship, not only to help new talent navigate the industry, but also to help strengthen the industry as a whole. First, let’s explore what to look for when hiring new cybersecurity talent.

Read the full article here.

Getting Started on Your Application Security Journey

Common Myths Around Application Security Programs

In order for an organization to have a successful Application Security Program, there needs to be a centralized governing Application Security team that’s responsible for Application Security efforts. In practice, we hear many reasons why organizations struggle with application security, and here are four of the most common myths that need to be dispelled:

1. An Application Security Team is Optional

Just like everything else, there needs to be dedicated effort and responsibility assigned for Application Security in order for an Application Security Program to be successful. Based on our experience and evidence of successful Application Security Programs, all of them have a dedicated Application Security team focused on managing Application Security efforts based on the organization’s business needs.

2. My Organization is Too Small to Have an Application Security Team

Being a small organization is no excuse to avoid doing Application Security activities. Application security cannot be an afterthought or something that’s bolted on when needed. It needs to be an inherent property of your software, and having focus and responsibility for Application Security in the organization will help prevent and remediate security vulnerabilities.

3. I Cannot Have an Application Security Team Because We Are a DevOps/Agile/Special Snowflake Shop

Just because your business or your development processes are different from others doesn’t mean that you don’t have a need for Application Security, nor does it mean that you cannot adopt certain application security practices. There are many opportunities in any type of SDLC to inject application security touchpoints to ensure that business objectives or development efforts are not hindered by security, but rather are enhanced by security practices.

4. An Application Security Team will Hinder Our Ability to Deliver/Conduct Business

In our experience, we have seen that more secure applications are typically better in all respects – performance, quality, scalability, etc. Application Security activities, if adopted correctly, will not hinder your organization or team’s ability to conduct business but will in fact provide a competitive advantage within your business vertical.

Why Do You Need an Application Security Program?

Defect Discovery – Organizations typically start their application security journey with defect discovery efforts. The two most common discovery techniques used to get started are Penetration Testing and Secure Code Review, which uncover security vulnerabilities so they can be remediated appropriately.

Defect Prevention – An Application Security Program’s goal is not only to help proactively identify and remediate security issues, but also to prevent security issues from being introduced.

Understanding Risk – In order to identify an organization’s risk posture, it’s necessary to identify what defects exist, and then determine the likelihood of these defects being exploited and the resulting business impact from successful exploitation. Organizations need to understand how the defects identified actually work and determine what components of the organization and business are affected by the identified defects.
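One simple way to picture the Understanding Risk step is to score each defect by likelihood and business impact and rank the results. The sketch below assumes a hypothetical 1 to 5 scale for both factors and a plain product as the score; the defect names and affected components are invented for illustration, and real programs often use richer scoring models.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    name: str
    likelihood: int   # hypothetical scale: 1 (unlikely to be exploited) to 5 (very likely)
    impact: int       # hypothetical scale: 1 (minor) to 5 (severe business impact)
    affected: tuple   # components of the organization or business affected


def risk_score(defect: Defect) -> int:
    """Simple likelihood x impact product (an assumption, not a standard formula)."""
    return defect.likelihood * defect.impact


def rank_by_risk(defects):
    """Highest-risk defects first."""
    return sorted(defects, key=risk_score, reverse=True)


# Invented example defects.
defects = [
    Defect("Verbose error messages", 3, 2, ("public website",)),
    Defect("SQL injection in checkout", 4, 5, ("checkout", "customer database")),
    Defect("Hardcoded password in batch job", 2, 4, ("reporting",)),
]
for d in rank_by_risk(defects):
    print(risk_score(d), d.name, d.affected)
```

Keeping the affected components alongside the score ties each defect back to the part of the business it touches, which is what makes the ranking useful for prioritization.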

Getting Started with Defect Discovery

There are many different techniques of defect discovery, and each has its own set of strengths, weaknesses, and limitations in what it can identify. Certain techniques are also prone to higher levels of false positives than others. There are also factors to consider when implementing a particular defect discovery technique in an organization, such as the speed at which it can be implemented and how quickly results can be made available to the appropriate stakeholders. Ultimately, all of the techniques have certain areas of overlap in terms of the types of defects that they can identify, and all the techniques complement each other.

Discovery Technique #1 – Penetration Testing

Penetration Testing is the most popular defect discovery technique used by organizations and is a great way to get started if you have had no focus towards Application Security in the past. Pentesting allows an organization to get a baseline of the types of vulnerabilities that their applications are most likely to contain. There’s a plethora of published materials on known attacks that work and it’s easy to determine what to try. When performing penetration testing, the type of testing varies significantly based on the attributes of the system being tested (web application, thick client, mobile application, embedded application, etc.).

Execution Methods:

- Technology/Tool Driven

- Outsourced Manual Penetration Testing (Third-Party Vendor)

- In-House Manual Penetration Testing

Discovery Technique #2 – Secure Code Review

Secure Code Review is often mistaken for Code Review that development teams typically do in a peer review process. Secure Code Review is an activity where source code is reviewed in an effort to identify security defects that may be exploitable. There are plenty of checklists on common patterns to look for or certain coding practices to avoid (hardcoded passwords, usage of dangerous APIs, buffer overflow, etc.). There are also various development frameworks that publish secure coding guidelines that are readily available. Some organizations with more mature Secure Code Review practices have implemented secure by design frameworks or adopted hardened libraries to ensure that their developers are able to avoid common security defects by enforcing the usage of the organization’s pre-approved frameworks and libraries in their development efforts.
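The checklist-style patterns mentioned above (hardcoded passwords, dangerous APIs) can be illustrated with a very small pattern scanner. This is a toy sketch, not how commercial static analysis tools work: the two regular expressions and the sample C snippet are assumptions chosen for the example, and pattern matching like this will produce both false positives and false negatives.

```python
import re

# Hypothetical checks; a real review uses far richer rules and human judgment.
CHECKS = {
    "hardcoded password": re.compile(r"""(?i)\b(password|passwd|pwd)\s*=\s*["'][^"']+["']"""),
    "dangerous API (strcpy)": re.compile(r"\bstrcpy\s*\("),
}


def review(source: str):
    """Return (line number, finding label) pairs for every pattern match."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for label, pattern in CHECKS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


# Invented sample source to scan.
sample = '''char buf[8];
strcpy(buf, user_input);
const char *password = "hunter2";
'''
print(review(sample))
# [(2, 'dangerous API (strcpy)'), (3, 'hardcoded password')]
```

The unbounded `strcpy` into an 8-byte buffer is the kind of buffer overflow risk a reviewer would flag, and the string literal assigned to `password` is the hardcoded credential pattern.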

Execution Methods:

- Technology/Tool Driven

- Outsourced Manual Secure Code Review (Third-Party Vendor)

- In-House Manual Secure Code Review

Defect Discovery is Just the Beginning

It’s important to remember that defect discovery is more than just the two techniques discussed here, and that defect discovery is itself just one part of your Application Security Program. In addition to defect discovery, you need to consider the following (and much more):

- What does it mean for your organization to have a Secure SDLC from a governance perspective?

- How are you going to create awareness and outreach for your SDLC to ensure the appropriate stakeholders know what their roles and responsibilities are towards application security?

- What key processes and technology do you need to put in place to ensure everyone is capable of performing the application security activity that they’re responsible for?

- How are you going to manage software that’s developed (and/or managed) by a third party (augmenting vendor management to reduce risk)?

Application Security Governance and Strategy

Application security governance is a blueprint that is comprised of standards and policies layered on processes that an organization can leverage in their decision-making processes in their application security journey.

Organizations have started adopting a Secure SDLC (S-SDLC) process as part of their software development efforts, and this tends to vary greatly between organizations. Ultimately, the focus of the S-SDLC is to ensure that vulnerabilities are detected and remediated (or prevented) as early as possible.

Many organizations unfortunately have not defined their application security governance model and, as a result, lack a proper S-SDLC. Without the proper processes in place, it’s challenging, if not impossible, to have oversight of the application security risks posed to all the applications in an organization’s application inventory.

Ultimately, we’ve observed that regardless of where the governance function is implemented (software engineering, centralized application security team, or somewhere else), there needs to be dedicated focus on application security to get started on the journey to reducing risk faced from an application security perspective.

The Trifecta of People, Process and Technology

1. Application Security Team (People)

Organizations need to assign responsibility for application security. In order to do this, it’s important to establish an application security team that is a dedicated group of people who are focused on making constant improvements to an organization’s overall application security posture and as a result, protect against any potential attacks. Organizations that have a dedicated application security team are known to have a better application security posture overall.

2. Secure SDLC/Governance (Process)

Clear definitions of standards, policies, and business processes are key to having a successful application security strategy. The S-SDLC ensures that applications aren’t created with vulnerabilities or risk areas that are unacceptable to the organization’s business objectives.

3. Application Security Tools and Technology

There’s a plethora of open source and commercial technologies available today that all leverage different defect discovery techniques to identify vulnerabilities in applications. DAST, SAST, IAST, SCA, and RASP are some of the more common types of technologies available today. Based on the business goals, objectives, and the software development culture, the appropriate tool (or combination of tools) needs to be implemented to automate and expedite detection of vulnerabilities as accurately and early as possible in the SDLC.

Taking a Strategic Approach to Application Security

In order to grow and improve, organizations need to have an objective way to measure their current state, and then work on defining a path forward. Leveraging the appropriate application security framework to benchmark the current state of the application security program allows organizations to use real data and drive their application security efforts more strategically towards realistic application security goals.

Standard frameworks also allow for re-measurements over time to objectively measure progress of the application security program and determine how effective the time, effort, and budget being put towards the application security program are.

As the application security capabilities mature, so does the amount and quality of data that is at the organization’s disposal. It’s important to ensure that the data collection is automated and proper application security metrics are captured to determine the effectiveness of different application security efforts, and also measure progress while being able to intelligently answer the appropriate questions from executive leadership and board members.
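As a small illustration of capturing a metric automatically, the sketch below computes mean time to remediate and the number of open findings from a list of records. The record layout, the sample dates, and the choice of these two metrics are assumptions for the example; which metrics matter depends on the questions leadership is asking.

```python
from datetime import date
from statistics import mean

# Invented findings; in practice these would be pulled automatically from tracking tools.
findings = [
    {"severity": "high", "found": date(2020, 1, 6), "fixed": date(2020, 1, 16)},
    {"severity": "high", "found": date(2020, 2, 3), "fixed": date(2020, 2, 23)},
    {"severity": "low", "found": date(2020, 3, 2), "fixed": None},
]


def mean_days_to_remediate(findings, severity):
    """Average days from discovery to fix for remediated findings of one severity."""
    durations = [
        (f["fixed"] - f["found"]).days
        for f in findings
        if f["severity"] == severity and f["fixed"] is not None
    ]
    return mean(durations) if durations else None


def open_findings(findings):
    """Count findings that have not been fixed yet."""
    return sum(1 for f in findings if f["fixed"] is None)


print(mean_days_to_remediate(findings, "high"))  # 15
print(open_findings(findings))                   # 1
```

Re-running the same calculation on a schedule gives the re-measurement over time that the frameworks above rely on.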

NetSPI’s Strategic Advisory Services

NetSPI offers a range of Strategic Advisory Services to help organizations in their application security journey.

Regardless of where you are in your application security goals and aspirations, NetSPI provides:

- Application Security Benchmarking – Measure the current state of your application security program and understand how your organization compares to other similar organizations within the same business vertical.

- Application Security Roadmap – Understand the organization’s application security goals and build a realistic roadmap with key timely milestones.

- Application Security Metrics – Based on the organization’s application security program, understand what data is available for collection and automation, allowing for definition of metrics that allow the application security team to answer the appropriate questions to help drive their application security efforts.

Introduction to Hacking Thick Clients: Part 5 – The API

Making the Case for Investing in Proactive Cyber Security Testing

Proactive or preventative cyber security testing continues to be an afterthought in today’s conversations around breach preparedness. In this Forbes article, for example, the author suggests establishing an incident response plan, defining recovery objectives and more, all of which are necessary – but there’s no mention of investing in enterprise security testing tools and penetration testing services that boost your cyber security posture in the first place.

Sure, it can be difficult to make a business case for the C-suite to invest in an intangible that doesn’t directly result in new revenue streams. Historically, though, breaches cost companies millions and sometimes billions of dollars, proving that a ‘dollars and sense’ case can be made for preventative cyber security testing.

Even when a case is made to the board and funding is available, enterprise security teams struggle to be proactive because they are constantly reacting to the threats already looming in their network, they lack adequate staffing, and the pace of new vulnerabilities continues to outstrip the business. So, how can the C-suite and security teams come together to prioritize the urgency of implementing a proactive cyber security testing program? How can we communicate that the upfront planning and setup is a proactive investment that will help eliminate the financial and time strain of a reactive cyber security program?

The reality is that cyber security breaches today are inevitable and put organizations at grave risk. To help your security team make the case for prevention-based security investments, such as penetration testing and adversarial simulation, here are three recommendations that will get the attention of C-suite executives and help your security team remain proactive:

Translate the Impact of a Breach into Dollars and Sense that the C-Suite Understands

In today’s digital world, data is more valuable than ever and more vulnerable. So, how can you best communicate this heightened value of data security and risk to your leadership team? By speaking a language they understand. First, shift your mindset from talking about “cyber security and compliance” to “customer safety and quality services;” these terms will resonate better with the C-suite.

Next, be prepared to talk financial risk. Annually, IBM and Ponemon Institute release the Cost of a Data Breach Report, which includes a calculator based on industry and cost factors – such as board-level involvement, compliance failures, and insurance – to determine the potential financial impact of a breach. Use this resource to calculate your own organization’s estimated cost of a data breach.

A simple calculation case study: In the United States, if an attacker compromises just 5,000 records, it could cost your organization over $1 million (based on the average cost of $242 per lost record). This case demonstrates the cost of a smaller-scale breach – in fact, the average size of a data breach in the United States in 2019 is 25,575 records, resulting in an average cost of $8.2 million per breach. Compare that to the average cost of a vulnerability management or penetration testing program, and your case to the executive team is pretty simple. Notably, loss of customer trust and loss of business are the largest of the major cost categories, according to the report. The study finds that breaches caused a customer turnover of 3.9 percent – and heaps of reputational damage.
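To make the arithmetic above easy to rerun with your own numbers, here is a minimal sketch in Python. It assumes a simple linear model (records compromised multiplied by the average cost per record), which is a simplification; the function name `estimated_breach_cost` is hypothetical, and the $242 figure is the per-record average quoted above.

```python
# Average cost per lost record in the United States, as quoted above (USD).
COST_PER_RECORD_USD = 242


def estimated_breach_cost(records: int, cost_per_record: int = COST_PER_RECORD_USD) -> int:
    """Rough linear estimate: compromised records times average cost per record.

    This is an illustrative simplification, not the report's own calculator.
    """
    if records < 0:
        raise ValueError("records must be non-negative")
    return records * cost_per_record


small_breach = estimated_breach_cost(5_000)
print(f"Estimated cost for 5,000 records: ${small_breach:,}")
# prints: Estimated cost for 5,000 records: $1,210,000
```

Swapping in your own record counts and an industry-specific per-record cost gives a quick first number to bring to the executive team before using the full calculator from the report.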

Lastly, use examples in your respective industry as proof points. For example, if you’re in the financial services industry, reference other breaches in the sector and their associated cost. It’s important to clearly communicate the reality of what happens when your organization is breached to get the C-suite on board for more cyber security spend. Sharing concerning results of reactive cyber security strategies helps executives see the benefit of investing in proactive security measures to prevent a breach from happening in the first place.

Help Leaders Understand Cyber Security Testing’s Role in a Crisis Preparedness Plan

A data breach is a common crisis scenario for which every business should plan. It should be discussed in tandem with other risk scenarios like natural disasters, product recalls, employee misconduct, and conflict with interest groups, to name a few. As with any disaster preparedness program, documentation and reporting are critical. Specifically, documentation of your vulnerability testing results and remediation efforts should be viewed as a tool to inform leaders about the organization’s exposure to risk, as well as its ability to prevent breach attempts from being successful. Cyber security weaknesses to look for from an organizational standpoint include lack of continuous vulnerability testing and patching, untested incident response plans, and limited training and security awareness programs. These three key areas can turn into the “Achilles heel” of any organization’s security posture if not addressed and implemented properly.

Position Your Pentest Team as an Extension of Your Own Security/IT Team

According to a survey we conducted earlier this year, over 80 percent of security leaders say lack of resources keeps them up at night. And for some time now, the cyber security industry has suffered a skills shortage. While companies are eager to hire cyber security experts to address the ever-evolving threat landscape and avoid the high costs of a breach, there aren’t enough people who can fill these roles. According to the latest data from non-profit (ISC)², the shortage of skilled security professionals in the U.S. is nearly 500,000.

Hiring outside cyber security resources is one solution to this demand conundrum. Time is invaluable, so if you propose to hire new vendors, it’s important from the start to position the white hat testers to your executives as an extension of your own team. It is the responsibility of both corporate security practitioners and vendors to find ways to work collaboratively as one team.

Pentesting is a great example of the importance of collaboration in cyber security. Traditionally, pentesters complete their engagement, hand off a PDF, and send the internal team off to remediate. With the emergence of Penetration Testing as a Service (PTaaS), pentesters do more than perform an engagement. They conduct more deep-dive manual tests and continuously scan for vulnerabilities, delivering ongoing pentest reports in an interactive digital platform that separates critical vulnerabilities from false positives (a time-consuming activity for your in-house team). They also serve as remediation consultants for your organization. Make it clear to your C-suite that vendor relationships are changing and cyber security testing vendors can serve as a solution for current cyber security skills gaps within the business.

When the C-suite and IT and security departments are disconnected on priorities, the risk of a data breach increases. Learn to speak the language of your executive leaders and communicate the true value of proactive security measures. Effective communication around the potential financial impact of a breach, where vulnerability testing fits in a crisis preparedness plan, and ways to solve cyber security talent shortages, can result in additional budget for proactive cyber security testing and other security initiatives.

E-Commerce Trends During COVID-19 and Achieving PCI Compliance

Online Shopping Behavior and E-Commerce Transformation

By this point it’s clear that every organization and individual has had to make changes and adapt their day-to-day activities after the weeks of lockdown that everyone has faced due to the Coronavirus.

All of a sudden, there’s a surge of people using mobile applications and online payments to order food, medicine, groceries, and other essential items through delivery. Due to social distancing requirements that are in place, the interactions between retailers and consumers have shifted drastically. We all know that people are heavily dependent on their mobile devices, even more so today than ever before. New studies show that “72.1% of consumers use mobile devices to help do their shopping” and there’s been a “34.9% increase year over year in share of consumers reporting online retail purchases.”

Businesses have been drastically impacted during the pandemic, and they’re adapting to conduct more business online than ever before. As part of these efforts, online credit card payments have increased, because they are contactless and therefore preferred over cash transactions (which add a higher likelihood of the Coronavirus spreading through touch).

Given the increased use of online credit card payments, organizations need to ensure that they’re compliant with the Payment Card Industry Data Security Standard (PCI DSS). The current version of PCI DSS is version 3.2.1, which was released in May 2018.

The Spirit of PCI DSS

PCI DSS is the global data security standard which all payment card brands have adopted for anyone that is processing payments using credit cards. As stated on their website, “the mission is to enhance global payment account data security by developing standards and supporting services that drive education, awareness, and effective implementation by stakeholders.”

Payment card data is highly sensitive, and if cardholder data is stolen or compromised, dealing with that compromise is quite a hassle. Attackers are constantly scanning the internet to find weaknesses in systems that store and process credit card information.

One well-known credit card breach occurred in late 2013 at a major retailer. This attack brought to the foreground the importance of ensuring proper segregation of systems that process credit card payments from other systems that may be on the same network.

PCI DSS aims to ensure that any entity storing or processing credit card information meets the minimum bar when it comes to protecting and segregating that information.

I am not going to go into detail about each and every PCI DSS requirement, but focus this post around how we at NetSPI help our clients with their journey towards PCI compliance.

NetSPI’s Role in Our Clients’ PCI Compliance Journey

First of all, it’s important to note that at the moment, NetSPI has made the business decision not to be a PCI “Approved Scanning Vendor” (ASV) or a PCI “Qualified Security Assessor” (QSA). You can find the PCI definitions for these at the PCI Glossary. Given NetSPI’s business objectives, our focus is on deep-dive, high-quality technical offerings rather than the audit focus that is required in order to be an ASV or a QSA.

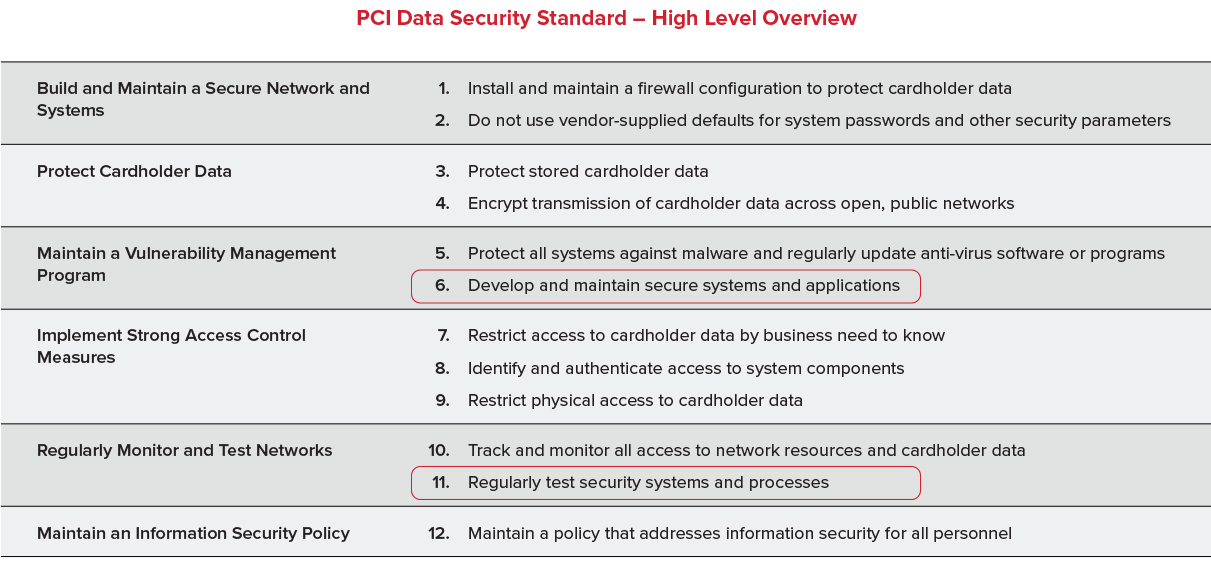

That being said, we are extremely familiar with PCI DSS requirements and work with some of the largest banks and financial institutions to enable them to become PCI compliant (and meet other regulatory pressures) by helping them in their efforts to develop and maintain secure systems and applications, and regularly testing their security systems and processes.

We’ll go deeper into some of the specific PCI requirements that we work closely with our customers on, and describe our service offerings that are leveraged in order to satisfy the PCI requirements. In almost all cases, NetSPI’s service offerings go above and beyond the minimum PCI requirements in terms of technical depth, scope, and thoroughness.

PCI Requirements and Services Mapping

PCI at a high level provides a Security Standard that’s broken down as shown in the table below that’s been published in their PCI DSS version 3.2.1.

PCI DSS Requirement 6.3

Incorporate information security throughout the software-development lifecycle.

NetSPI Offerings Leveraged by Clients

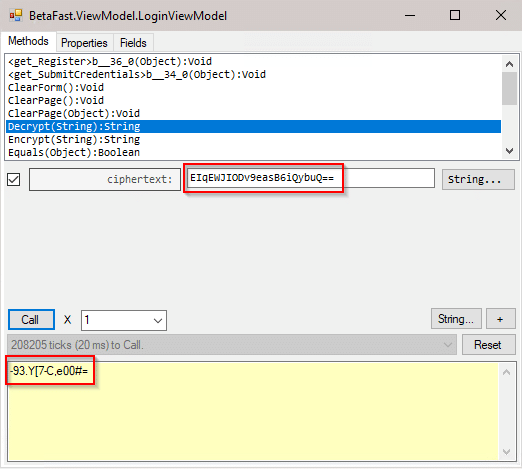

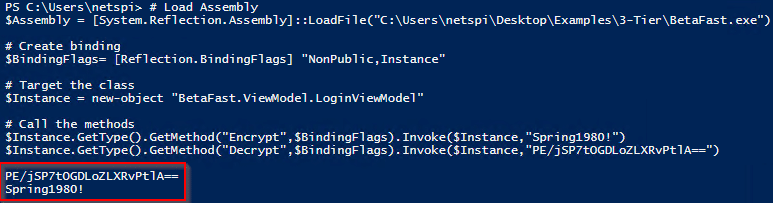

NetSPI is an industry-recognized leader in providing high quality penetration testing offerings. NetSPI’s offerings around Web Application, Mobile, and Thick Client Penetration Testing services are leveraged by clients to not only satisfy PCI DSS Requirement 6.3, but also go beyond the PCI requirements as elaborated on in their requirement 6.5.

PCI DSS Requirement 6.5

Verify that processes are in place to protect applications from, at a minimum, the following vulnerabilities:

- Injection Flaws

- Buffer Overflows

- Insecure Cryptographic Storage

- Insecure Communications

- Improper Error Handling

- Cross-site Scripting

- Cross-site Request Forgery

- Broken Authentication and Session Management
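As one illustration of the first item in the list above, here is a minimal sketch of how an injection flaw is commonly avoided: by binding user input as a query parameter instead of concatenating it into the SQL string. It uses Python’s built-in `sqlite3` module with an in-memory database; the `customers` table and the `find_customer` function are hypothetical names used only for this example, and real applications will need controls well beyond this.

```python
import sqlite3


def find_customer(conn: sqlite3.Connection, email: str) -> list:
    """Look up a customer by email using a parameterized query.

    The "?" placeholder makes the driver treat `email` strictly as data,
    so SQL fragments inside the input are never executed.
    """
    cursor = conn.execute(
        "SELECT id, email FROM customers WHERE email = ?",
        (email,),
    )
    return cursor.fetchall()


# Hypothetical in-memory database for demonstration purposes only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO customers (email) VALUES (?)", ("alice@example.com",))
conn.commit()

print(find_customer(conn, "alice@example.com"))  # [(1, 'alice@example.com')]
print(find_customer(conn, "' OR '1'='1"))        # [] (the payload is matched as a literal string)
```

Had the query been built with string concatenation, the second input would have changed the meaning of the `WHERE` clause and returned every row, which is the kind of finding an application penetration test is designed to surface.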

NetSPI Offerings Leveraged by Clients

NetSPI’s Web Application, Mobile, and Thick Client Penetration Testing services go above and beyond looking for vulnerabilities defined in the list above. The list of vulnerabilities in this particular requirement is heavily driven by the OWASP Top Ten list of vulnerabilities. NetSPI’s Application Security service offerings cover, at a minimum, the OWASP Top Ten issues, but typically go above and beyond that list.

PCI DSS Requirement 6.6

For public-facing web applications, address new threats and vulnerabilities on an ongoing basis and ensure these applications are protected against known attacks by either of the following methods:

- Reviewing public-facing web applications via manual or automated application vulnerability security assessment tools or methods, at least annually and after any changes

- Installing an automated technical solution that detects and prevents web-based attacks (for example, a web application firewall) in front of public-facing web applications, to continually check all traffic.

NetSPI Offerings Leveraged by Clients

NetSPI’s Web Application Penetration Testing offerings are highly sought after by our clients. In particular, at NetSPI we work closely with seven of the top 10 banks in the U.S., and are actively delivering various levels of Web Application Penetration Testing engagements for them. Customers continue to work with us because of the higher level of quality that they see in the work we deliver, which is typically credited to our Penetration Testing as a Service delivery model, enabled by our Resolve™ platform.

PCI DSS Requirement 11.3

Implement a methodology for penetration testing:

- A penetration test must be done every 12 months.

- The penetration testing verifies that segmentation controls/methods are operational and effective, and isolate all out-of-scope systems from systems in the CDE.

- Methodology includes network, server, and application layer testing.

- Includes coverage for the entire CDE perimeter and critical systems. Includes testing from both inside and outside the network.

NetSPI Offerings Leveraged by Clients

NetSPI provides both Internal and External Network Penetration Testing services. When customers request an assessment whose results they want to leverage to satisfy PCI requirements, NetSPI provides deliverables tailored to be shared with the client’s QSA. In cases where the Cardholder Data Environment (CDE) is located in the cloud, NetSPI Cloud Penetration Testing goes above and beyond the minimum testing requirements of the PCI standard.