Month: April 2020

Zoom Vulnerabilities: Making Sense of it All

Physical Distancing, Yet Connecting Virtually

We find ourselves living through the COVID-19 pandemic, abruptly switching to a work-from-home model with virtual meetings becoming the norm. By now, unless you’re living under a rock, you’ve heard about people using the Zoom videoconferencing service.

With everyone sheltering in place and social distancing to stop the spread of the virus, Zoom has become extremely popular for group video calls. Usage of its video conferencing capabilities has spiked for both professional and personal meetings, giving multiple people a way to hold face-to-face calls.

Unfortunately for Zoom, the spike in usage has come with a rise in the number of security vulnerabilities being reported in its software. This has resulted in Zoom focusing all of its development efforts on sorting out security and privacy issues with its software.

Let’s explore some of the most popular vulnerabilities that are being discussed and see if we can make sense of them and the impact that they’re going to have if exploited.

Zoom Security Concerns

Zoombombing

This term has gained a lot of popularity. Derived from the term “photo-bombing,” zoombombing refers to a person or multiple people joining a Zoom meeting that they’re not invited to and interrupting the discussion in some sort of vulgar manner (e.g. sharing obscene videos or photos in the meeting).

There are a few reasons why this is possible:

- Meetings with Personal IDs – Zoom gives each user a personal ID, and you can use those IDs to quickly start a Zoom meeting at any given time. Because this ID is static and doesn’t change, zoombombers will keep iterating through all possible personal IDs until they get one that has an active meeting going on and they can join.

- Meetings not requiring passwords – Users can set up meetings that don’t require a password, so if a zoombomber figures out the meeting ID, and there’s no password for that meeting, then they can join the meeting.

- Lack of rate limiting – Zoom didn’t seem to have any type of rate limiting that would limit a machine from trying to access meetings.
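To make the rate-limiting point concrete, here is a minimal sketch of a sliding-window limiter that caps how many meeting IDs a single client can try in a given period. This is an illustration only, not how Zoom implements anything: the class name `JoinAttemptLimiter`, the default limits, and the idea of keying on a client identifier such as an IP address are all assumptions made for the example.

```python
import time
from collections import defaultdict, deque


class JoinAttemptLimiter:
    """Hypothetical sliding-window limiter for meeting join attempts."""

    def __init__(self, max_attempts=5, window_seconds=60.0, clock=time.monotonic):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be exercised without sleeping
        self._attempts = defaultdict(deque)  # client key -> timestamps of recent attempts

    def allow(self, client_key):
        """Return True if this client may try another meeting ID right now."""
        now = self.clock()
        recent = self._attempts[client_key]
        # Drop attempts that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_attempts:
            return False  # too many guesses recently; reject this attempt
        recent.append(now)
        return True
```

With a limit like this in place, a script iterating through Personal IDs gets a handful of guesses per window instead of an unbounded number, which makes blind enumeration far slower.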

There are steps a user can take to prevent their meetings from getting zoombombed, including:

- Generate a new meeting ID for every meeting instead of using your static Personal ID

- Make sure your meeting has a password (this is now enabled by default)

- Enable the waiting room, so you have to give users permission to join before they can join the meeting

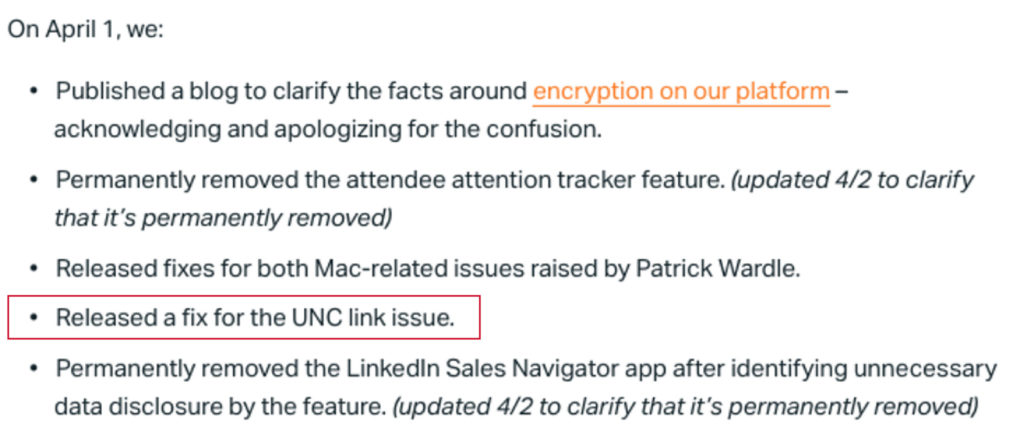

A Lack of True End-to-End (E2E) Encryption

Zoom does offer E2E encryption, but not for the video conference piece itself. If you’re just using Zoom for social interactions and non-business meetings, and there’s nothing sensitive being shared, you probably don’t care about this too much.

There is encryption happening on the transport layer, but it isn’t true E2E encryption because Zoom can still decrypt your video information. What you basically have is the same level of protection you get from any website you interact with over HTTPS (with TLS).

When Zoom refers to E2E encryption, they mean all of their chat functionality is protected with true E2E encryption.

The reason you don’t see true E2E encryption for other platforms either is because it is really challenging to do. If you look at other services available like WhatsApp and FaceTime that allow group video calls, they limit how many participants you can have on a call at a time and don’t scale like Zoom does, where currently in the Zoom Gallery View you can see video from up to 49 participants at the same time.

Details around the use of encryption in Zoom can be found here.

China Being Able to Eavesdrop on Zoom Meetings

There’s been a lot of discussion around the issue that the Chinese government can force Zoom to hand over keys and as a result, they would be able to decrypt and view Zoom conversations because a few of the key servers that were used to generate encryption keys were located in China.

It needs to be noted that Zoom does have employees in China and runs development and research operations from there. That being said, most of Zoom’s key servers are based in the U.S., and if Zoom received a subpoena or warrant from the FBI or another agency (a FISA warrant, for example), it would likewise be required to hand over the keys.

To summarize, if you’re just having regular video calls with your family and friends and there’s nothing that’s sensitive in nature being discussed on these calls, you probably shouldn’t worry too much about this issue.

Your Private Chat Conversation Isn’t Really Private

During a Zoom meeting, a private chat message you send to another participant isn’t exposed to anyone else at the time. After the meeting, however, if the host decides to download the transcript, they will have access to both the public chats that were sent to everyone in the meeting and any private messages that were exchanged between two people.

This isn’t necessarily surprising that a host/admin would have access to all chat transcripts, but to sum this one up, if you’re chatting about something privately with someone during the meeting, don’t talk about something or say something that you wouldn’t want others to see or find out about.

Zoom Mimics the OS X Interface to Gain Additional Privileges

When Zoom is being installed, the app requires some additional privileges to complete the process, so the app installer prompts the user for their OS X password. The message presented to the user is very deceiving, since it says “System needs your privilege to change” while asking for the administrator credentials to be entered.

It’s challenging to determine whether this is truly malicious or not, because we can’t get into the heads of the developers to determine the true intent – but this trick is commonly used by malware to gain additional privileges.

This in itself isn’t as big of an issue since it happens to take place while a user is intentionally installing the software on their own machine. There are scenarios where attackers could somehow convince their target to install Zoom, and then leverage any vulnerabilities in Zoom itself to cause damage. While not impossible, it’s a little far-fetched.

Zoom Can Escalate Privileges to ‘Root’ on Mac OS

I’m not going to spend too much time going over the technical details of how this can be done, but you can find all the details you want on Patrick Wardle’s blog.

What I want to emphasize here is that this requires someone to already have access to your system to be able to exploit it. If an attacker has physical access to your computer, you have other things to worry about because they basically “own” your machine at this point – they can do whatever they want – time and skill permitting of course. And if there’s some malware that’s exploiting this Zoom issue on your computer, guess what, the malware is already on your machine, and probably has root (or close to root) access anyway because you somehow inadvertently gave it additional privileges to install itself on your machine.

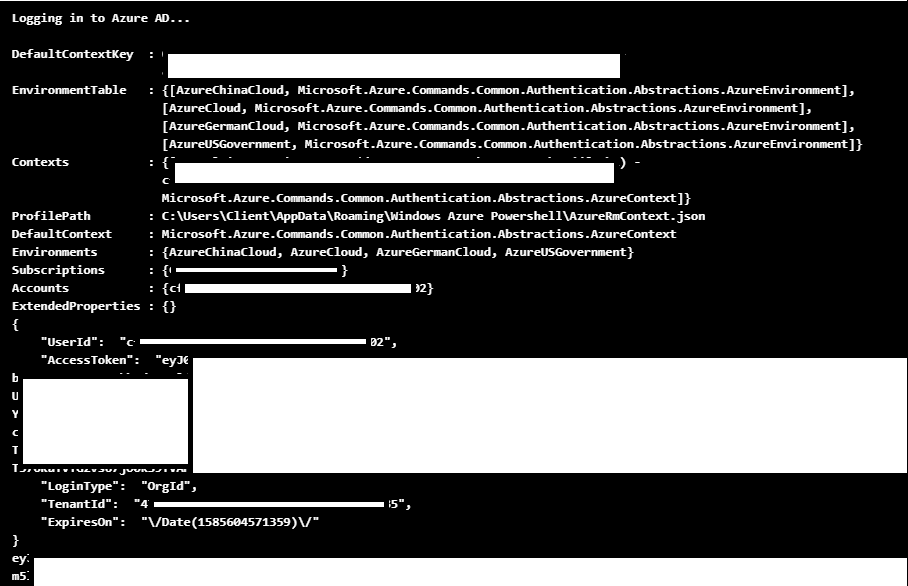

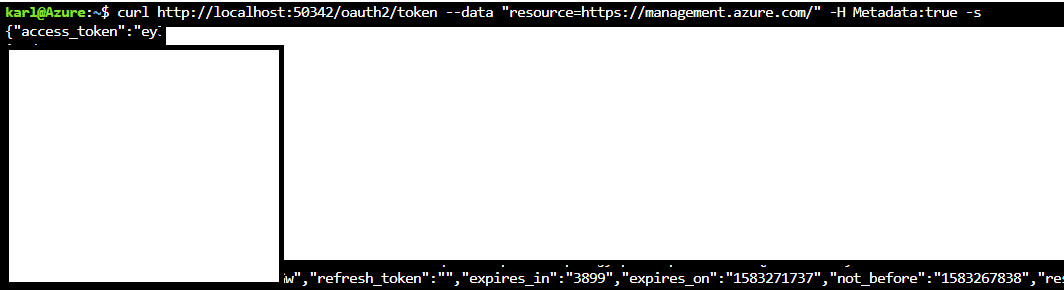

Attackers Can Steal Your Windows Credentials Through the Windows Zoom Application

This is a vulnerability where an attacker can send you a chat message containing a UNC path. The Windows app was converting UNC paths into clickable links, just as it does with web links. So a path like “\\ComputerName\Shared Folder\mysecretfile.txt” would become clickable in the same way that a web link like “www.netspi.com” would. When a user clicks such a link, Windows tries to connect to the remote host, and the user’s Windows credentials (the username and the password hash) are sent to the attacker.

I want to reiterate the importance of not clicking on links that you don’t trust, or when you don’t know where they really lead. It’s important for everyone to be as vigilant about untrusted links in chat as they would be with email phishing. This is no different.
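As a rough illustration of the defensive side, a chat client could check whether a message contains something shaped like a UNC path before turning it into a clickable link. The sketch below is an assumption-laden example, not Zoom’s actual fix: the pattern and the function name `contains_unc_path` are hypothetical, and the check only looks for the basic `\\host\share` shape.

```python
import re

# Two backslashes, a host name, one backslash, then the start of a share name.
# Hypothetical pattern for illustration; it is not an exhaustive UNC parser.
UNC_PATTERN = re.compile(r"\\\\[^\\/\s]+\\[^\\/\s]+")


def contains_unc_path(message: str) -> bool:
    """Return True if the chat message contains something shaped like a UNC path."""
    return UNC_PATTERN.search(message) is not None
```

A client using a check like this could render the path as plain text rather than a link, so a single click can’t trigger an outbound connection to an attacker-controlled host.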

This issue has reportedly been fixed by Zoom on April 1, 2020, as long as you update to the latest version of the Zoom application on Windows.

Source: https://blog.zoom.us/wordpress/2020/04/01/a-message-to-our-users/

What Does This Mean for You?

There’s a lot to digest here, and given Zoom’s popularity in recent times, it’s not surprising that more and more issues are getting reported because researchers are focusing on these issues more, and attackers are trying to take advantage of any little issue that can be exploited on an app that a majority of the population may be using.

The bottom line on how you should use Zoom really depends on your use case. If you’re using it for informal, personal, social purposes and there’s nothing of sensitive nature that you’re worried about, Zoom will serve you just fine. On the other hand, if you need to have sensitive business-related discussions or need to use a communication channel to discuss something that’s top secret, then it’s probably best to avoid Zoom, and use known secure methods of communication that have been approved and vetted by your business/organization.

Recent Posts

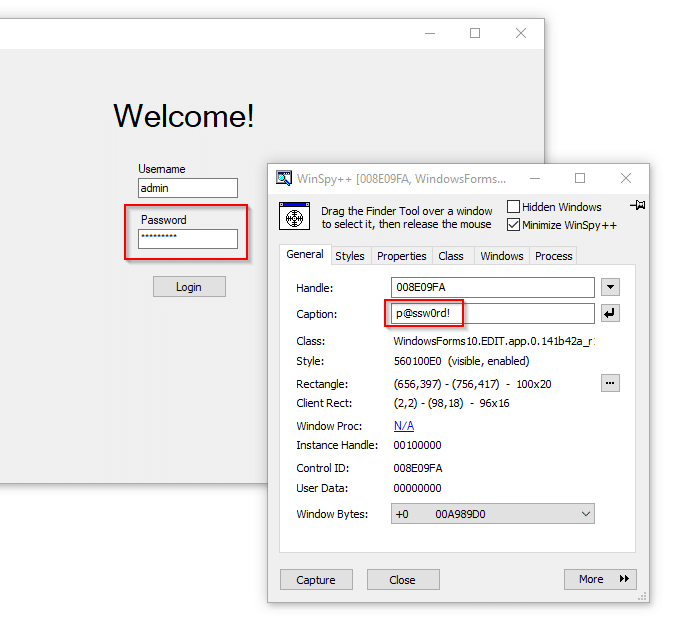

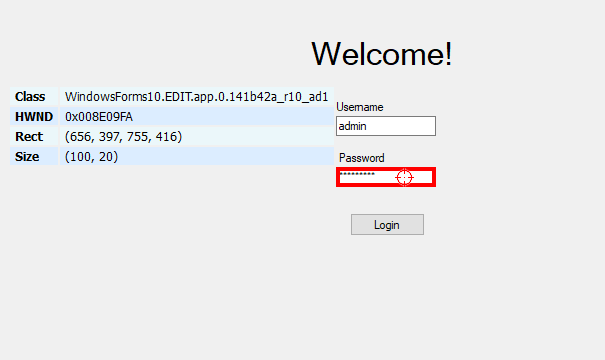

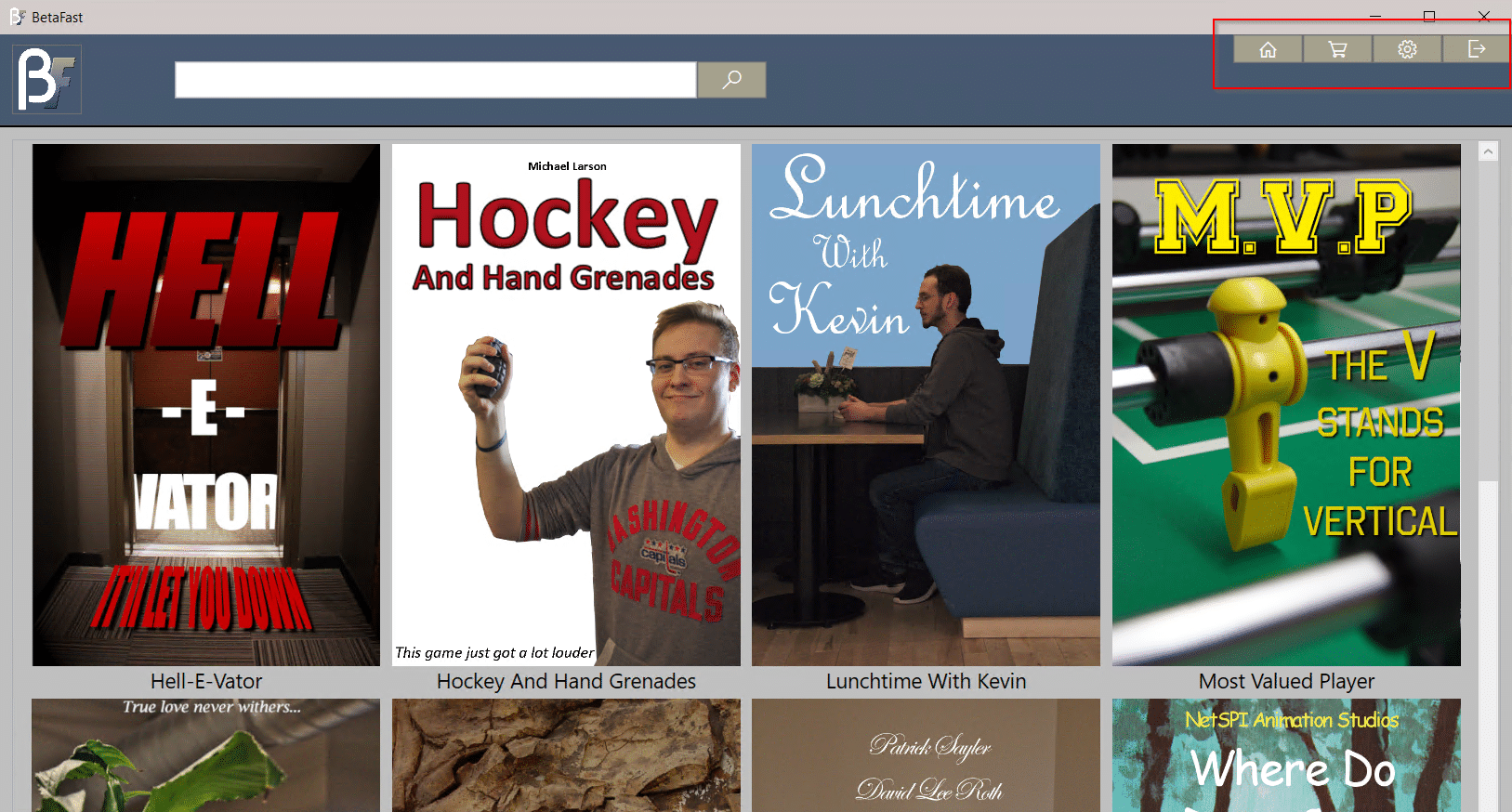

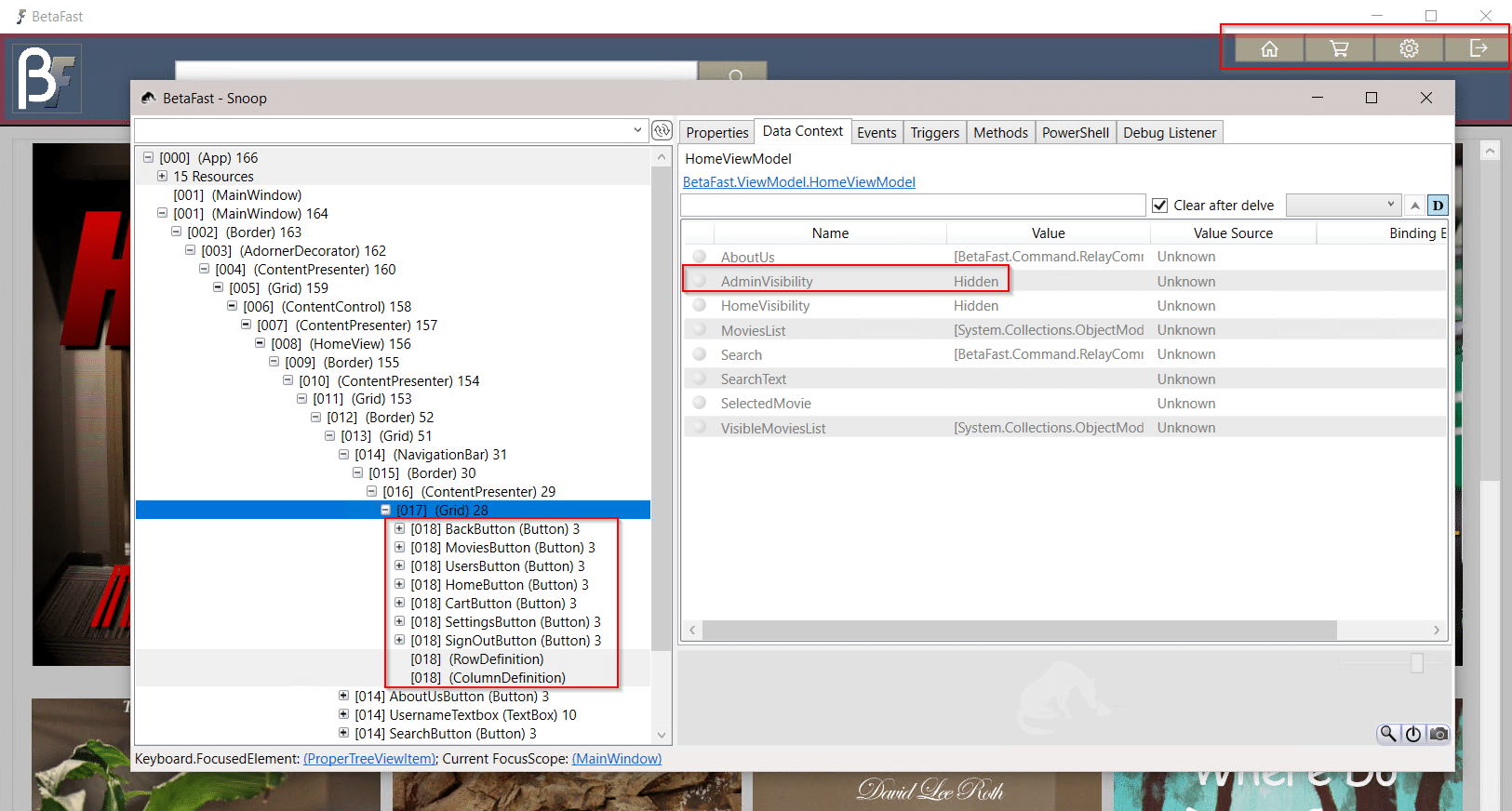

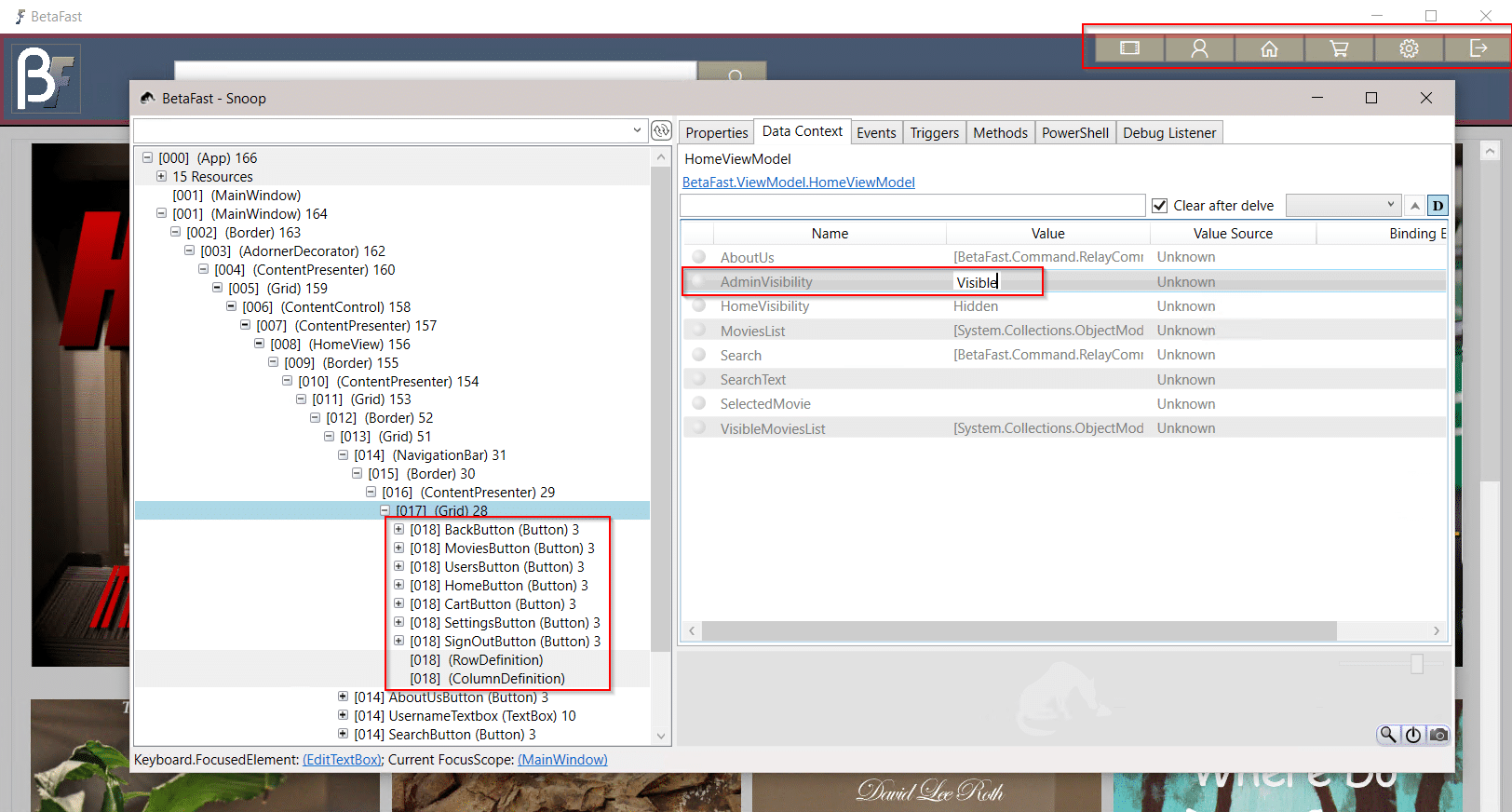

Introducing BetaFast – NetSPI's Vulnerable Thick Client

Recent Posts

Penetration Testing as a Service – Scaling to 50 Million Vulnerabilities

The process of assessing third-party penetration testing vendors is the start of a long-term relationship that is core to your security testing program. It’s critical to find a vendor that can both conduct and operationalize these testing programs to scale across the smallest and largest of security organizations. This can only happen when a testing service provider is technology-enabled and can plug into any environment.

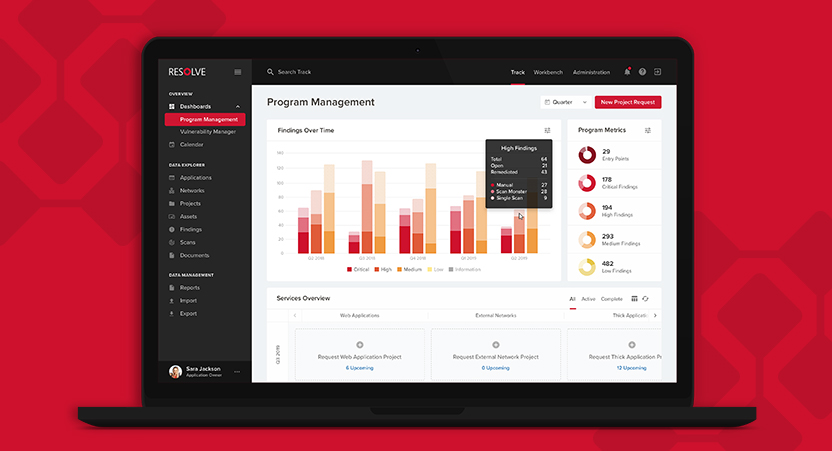

At RSA in February, NetSPI launched Penetration Testing as a Service (PTaaS). PTaaS is our unique delivery model that provides our Threat and Vulnerability Management (TVM) platform, Resolve™, to our customers on every engagement. PTaaS is designed to provide best-in-class TVM solutions, by default, for every test. Starting with the first engagement, all vulnerabilities are correlated, deduplicated, and delivered directly through Resolve™. As the testing grows, the entire suite of product functionality can be added so all of an organization’s internal and third-party testing programs can be viewed in Resolve™.

In this two-part blog, we will first review existing features that come standard with a penetration test through PTaaS. Then, in the second blog in May, we’ll discuss additional and upcoming functionality that exists to scale Resolve™ across even the largest organizations.

Program Management

The entry point into Resolve™ is the Program Management Dashboard, which helps orchestrate all testing activities that are ongoing and have been completed in the platform. At the top, you will see new vulnerabilities trending over time and by hovering over them, you can see the efficacy of each testing method. This helps identify what was found through manual penetration testing versus our proprietary multi-scanner orchestration and correlation tool, Scan Monster™, versus a traditional single network scanner.

On this same Program Management Dashboard screen, you can see the Services Overview, which aggregates all projects in Resolve™ into a matrix via Project Type and timeframe. For example, the top left card in this overview represents all Web Application Penetration Tests performed in Q1 2020. Additional detail such as scoping and vulnerability information can also be found on this card.

Projects

By clicking into one of these cards in the Services Overview, you will be taken to the Projects grid, where each project’s details can be viewed. Selecting a project will bring up all information related to that project at-a-glance, where you can view information including recent activity and comments, users assigned to the project, and project scope and definition. All communication for the project flows through this page. The project entities are also available here, along with important information like the findings discovered during the engagement and the assets that were included in the test. An asset typically relates to a unique IP address or URL.

Findings

The Findings tab will display all vulnerabilities discovered during the engagement. These findings can be searched, sorted, and filtered directly in this grid, as well as globally. Selecting a row will bring up a wealth of information about that finding.

The finding details present everything a developer would need to know to understand this type of vulnerability, including the overall severity, description, business impact, and remediation instructions for that issue, as well as what CVEs and OWASP categories are associated with that vulnerability.

Selecting the instances tab will bring up all the unique locations this vulnerability was discovered on this asset.

Instances

Inside an instance will be all the information needed to identify the specific vulnerability, including the affected URL and port and what parameters were used in the attack, along with step-by-step verification instructions. These instructions detail how to reproduce the vulnerability so developers can quickly understand and remediate it.

Concluding Thoughts

All these features are available at both the project and global levels. Users can filter, search, and globally prioritize all vulnerabilities and assets that exist in Resolve™. NetSPI has performed its penetration testing services in Resolve™ for over a decade and currently hosts 50+ million vulnerabilities for its clients, a number that is rapidly increasing.

Be sure to check back in late May for our part two in this series where we’ll discuss additional and new functionality that exists to scale Resolve™ across even the largest organizations.

To learn more about PTaaS, see the below resources:

Recent Posts

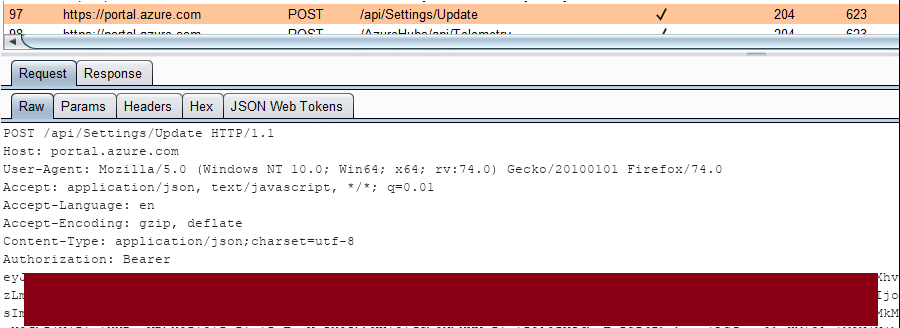

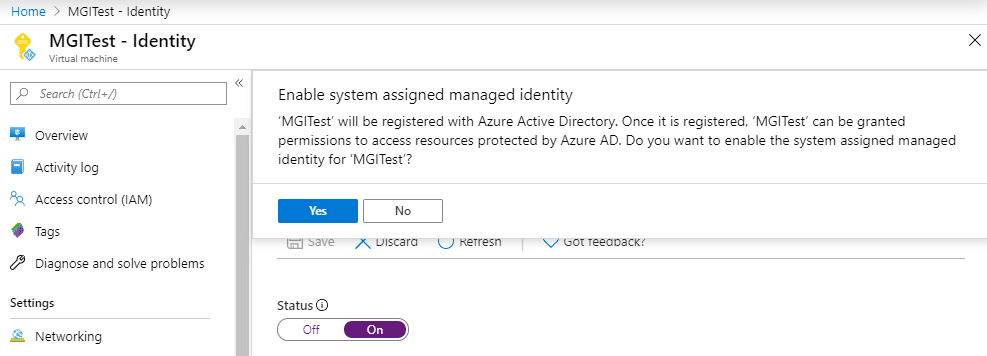

Gathering Bearer Tokens from Azure Services

Recent Posts

BAI Banking Strategies: Work from home presents a data security challenge for banks

On April 15, 2020, NetSPI Managing Director Nabil Hannan was featured in BAI Banking Strategies.

The mass relocation of financial services employees from the office to their couch, dining table or spare room to stop the spread of the deadly novel coronavirus is a significant data security concern, several industry experts tell BAI.

But they add that it is a challenge that can be managed with the right tools, the right training and enduring vigilance.

Read the full article here.


The Evolution of Cyber Security Education and How to Break into the Industry

In the inaugural episode of NetSPI’s podcast, Agent of Influence, Managing Director and podcast host, Nabil Hannan talked with Ming Chow, a professor of Cyber Security and Computer Science at Tufts University about the evolution of cyber security education and how to get started in the industry.

Below is an excerpt of their conversation. To listen to the full podcast, click here, or you can find Agent of Influence on Spotify, Apple Music, or wherever you listen to podcasts.

Nabil Hannan

What are your views and thoughts on how actual education in cyber security and computer science has evolved over the last couple of decades?

Ming Chow

I think one thing that is nice, which we didn’t have, is this: ten or twenty years ago, if we wanted to learn Java, for example, or about databases, or SQL, you had to go buy a book from your local tech bookstore or we had to go to the library. That doesn’t have to happen now. There’s just so much information out there on the web.

I think it’s both a good and a bad thing. Now, with all this information readily available, it feels like that content and information is much more accessible. I don’t care if you’re rich or poor, it really leveled the playing field in terms of the accessibility and the availability of information.

At the same time, there is also the problem of information overload. I’ll give you two good examples. Number one: I’ve had co-workers ask me, “What’s the best book to use for python?” That question, back in the day when we had physical books was a lot easier to answer. Making a recommendation now is a lot harder. Do you want a physical book? Are you looking for a publisher? Are you looking for an indie publisher? Are you looking for a website? Are you looking for an electronic form? Now, there are just way too many options.

Now it’s even worse when it comes to cyber security and information security. There are a lot of people trying to get into cyber security and a common question is how to get started. If you ask 10 experts that question, you’ll get 10 different answers. This is one of the reasons why, especially for newcomers, that it’s hard to understand where to get started. There are way too many options and too many avenues.

Nabil Hannan

Right, so people get confused by what’s trustworthy and what’s not, or what’s useful versus what isn’t.

Ming Chow

And, what makes this worse is social media because a lot of people in cyber security are on Twitter and there’s also a community on Facebook. This has both pros and cons, of course. You have community, which is great, but at the same time, there is just more information and more information overload.

But, there is one thing that hasn’t changed in cyber security education – or lack thereof – and computer science curricula since 2014. I don’t see much changed in computer science curricula at all. I still see a lot of students walking out of four years of computer science classes who don’t know anything about basic security, not to mention about cross site scripting and SQL injection. Here we are in 2020 and there are still many senior developers who don’t know about these topics.

Nabil Hannan

Let’s say you have a student who wants to become a cyber security professional or get into a career in cyber security. What’s your view on making sure they have a strong foundation or strong basics of understanding of computer science? What do you tell them? And how do you emphasize the importance of knowing the basics correctly?

Ming Chow

Get the fundamentals right. Learn basic computer programming and understand the basics. It makes absolutely no sense to talk about cyber security if you don’t have the fundamentals or technical underpinnings right. You must have the basic technical underpinnings first in order to understand cyber security. Because you see a lot of people talk about cyber security – they talk and talk and talk – but half of the stuff they say makes no sense because they don’t have the basic underpinnings.

That’s why I tell brand new people, number one, get the fundamentals right. You must get those because you’re going to look like a fool if you talk about cyber security, but you don’t actually have any knowledge of the basic technical underpinning.

Nabil Hannan

The way I tell people, that is, it’s important for you to know how software is actually built in order for you to learn or figure out how you’re going to break that piece of software. So that’s how I iterate the same thing. But yes, continue please.

Ming Chow

Number two is to educate yourself broadly. Let me explain why that’s important. You want to have the technical underpinnings, but you also want to educate yourself broadly – take courses in calligraphy, psychology, political science, information warfare, nuclear proliferation, and others.

Educate yourself broadly, because cyber security is a very broad field. I think that’s something that many people fail to understand. A lot of people, especially in business, think that cyber security is just targeted toward technology. A lot of people think cyber security is IT’s responsibility. But of course, that’s not true, because things like legal and HR have huge implications for cyber security. You have to educate yourself broadly because sometimes the answer is not technical at all.

Nabil Hannan

I think some of the most successful people that I’ve seen in this space are usually very adaptable – they learn to adapt to different situations, different scenarios, different cultures, different environments. And, technology is always evolving and so are the actual security implications of the evolving technology. Some of the basics and foundations may still be similar, but the way to approach certain problems ends up being different. And the people who are most adaptable to those type of changing and evolving scenarios tend to be the most successful in cyber security, from what I’ve seen.

Ming Chow

I think it’s a huge misnomer for any young person who is studying and trying to get into security. Cyber security is not about the 400-pound hacker in the basement. It’s also not hunting down adversaries or just locking yourself in a room, isolating yourself in a cubicle, writing code that would actually launch attacks.

Nabil Hannan

So, you’re saying it’s not as glamorous as Hollywood makes it seem in their movies like Hackers and Swordfish?

Ming Chow

I think the most legit show is Mr. Robot because they actually vet out real security professionals for that show.

Now, I want to go back into something you said about the software engineering role. Probably one of the best ways to get into cyber security is to follow one of these avenues: software development, software engineering, help desk, network administration, or system administration. And the reason is because when you’re in one of those positions, you will actually be on the front lines and see how things really work.

Nabil Hannan

Things in practice are so different than things in theory, right? So, that’s what you really got to learn hands on.

To listen to the full podcast, click here, or you can find Agent of Influence on Spotify, Apple Music, or wherever you listen to podcasts.


Credit Union Times: Vulnerability Management Considerations for Credit Union M&As

On April 8, 2020, NetSPI Managing Director Nabil Hannan was featured in Credit Union Times.

Given the hundreds of merger and acquisition applications approved each year by the NCUA, M&As remain an appealing strategy for growth. However, in today’s cyberworld, merging with another company also means adopting another company’s network infrastructure, software assets and all the security vulnerabilities that come with it. In fact, consulting firm West Monroe Partners reported that 40% of acquiring businesses discovered a high-risk security problem after an M&A was completed.

A case in point: In the early 2000s, I was part of a team heavily involved during and after the merger of two large financial institutions. We quickly came to the realization that the entities had two completely different approaches to cybersecurity. One had a robust testing program revolving around penetration testing (or pentesting) and leveraged an industry standard framework to benchmark its software security initiative annually. The other did not do as much penetration testing but focused more on architecture and design level reviews as its security benchmarking activity. Trying to unify these divergent approaches quickly brought to the surface myriad vulnerabilities that required immediate remediation. However, the acquired entity didn’t have the business cycle or funding needed for the task, which created a backlog of several hundred thousand issues needing to be addressed. This caused delays in the M&A timing because terms and conditions had to be created. Both parties also had to agree to timelines within which the organizations would address identified vulnerabilities and the approach they would take to prioritize remediation activities accordingly.

Read the full article here.


Through the Attacker's Lens: What Is Visible on Your Perimeter?

The Internet is a hacker’s playground. When a hacker is looking for targets to attack, they typically start with the weakest link they can find on the perimeter of a network – something they can easily exploit. Usually when they find a target that they can try to breach, if the level of effort becomes too high or the target is sufficiently protected, they simply move on to the next target.

The most common type of attacker is the “Script Kiddie.” These are typically inexperienced actors who simply use code or scripts posted online to replicate a hack, or download and use software like Metasploit to run scans against systems to find something that breaks. This underscores the need to make sure that the perimeter of your network, and what’s visible to the outside world, is properly protected so that even inadvertent scanning by Script Kiddies, or tools being run against the network, doesn’t end up causing issues.

Testing the External Network

In most cases, it seems like all the focus and energy goes to an organization’s externally facing network perimeter. Almost all the organizations we work with either focus on the external-facing network or have automated scanners that regularly test it. This is an important starting point because network vulnerabilities are usually easy to detect, and hackers have many tools at their disposal for discovering them. It’s very common for attackers to regularly scan the Internet network space to find vulnerabilities and determine which assets they are going to try to exploit first.

Organizations have processes or automations that regularly scan the external network looking for vulnerabilities. Depending on the industry an organization falls into, there are regulatory pressures that also require a regular cadence of security testing.

The Challenge of Getting an Inventory of All Web Assets

Large organizations that are rapidly creating and deploying software commonly struggle to keep an up-to-date inventory of all web applications that are exposed to the Internet at any given time. This is due to the dynamic way organizations work, with business drivers that require web applications to be regularly deployed and updated. One common example is organizations that heavily use web applications to support their business needs, particularly for marketing purposes, where they deploy new micro-sites on a regular basis. Not only are new pages deployed regularly, but with so many software engineering teams adopting DevOps culture and the Continuous Integration (CI) / Continuous Deployment (CD) methodology, almost all applications are regularly being updated with code changes.

With changes happening on the perimeter where web applications are exposed and updated all the time, organizations need to regularly scan their perimeter to discover what applications are truly exposed, as well as if they have any vulnerabilities that are easily visible on the outside to attackers that may be running scanning tools.
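One simple way to picture the inventory problem is as a comparison between what you believe is exposed and what a perimeter scan actually finds. The sketch below assumes you already have both lists as hostnames; the function name `compare_inventory` and the `example.com` hosts in the usage note are hypothetical.

```python
def compare_inventory(documented, discovered):
    """Compare a documented asset inventory with hosts found by a perimeter scan."""
    # Normalize so that case and stray whitespace do not create false differences.
    documented = {host.strip().lower() for host in documented}
    discovered = {host.strip().lower() for host in discovered}
    return {
        # Exposed on the perimeter but missing from the inventory.
        "unknown_exposed": sorted(discovered - documented),
        # Listed in the inventory but not seen by the scan.
        "documented_not_seen": sorted(documented - discovered),
    }
```

For example, comparing a documented list of `www.example.com` and `shop.example.com` against a scan that found `www.example.com` and `promo.example.com` would flag `promo.example.com` as unknown and exposed, which is typically the kind of forgotten micro-site worth a closer look.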

Typically, organizations do have governance and processes to perform regular testing of applications in non-production or production-like UAT environments, but many times, testing applications in production doesn’t happen. Although doing authenticated security testing in production may not always be feasible depending on the nature and business functionality of a web application, performing unauthenticated security scanning of applications using Dynamic Application Security Testing (DAST) tools can be done easily – after all, the hackers are going to be doing it anyways, so might as well proactively perform these scans and figure out ahead of time what will be visible to the hackers.

The Need for Unauthenticated DAST Scanning Against Web Applications in Production

Given these challenges, it’s important for organizations to seriously consider whether it makes sense to start performing unauthenticated DAST scanning against all their web applications in production on a periodic basis to ensure that vulnerabilities don’t make it through the SDLC to production.

At NetSPI, we typically use multiple DAST scanning tools in our assessments, leveraging both open source and commercial DAST scanners.

What Does NetSPI’s Assessment Data Tell Us?

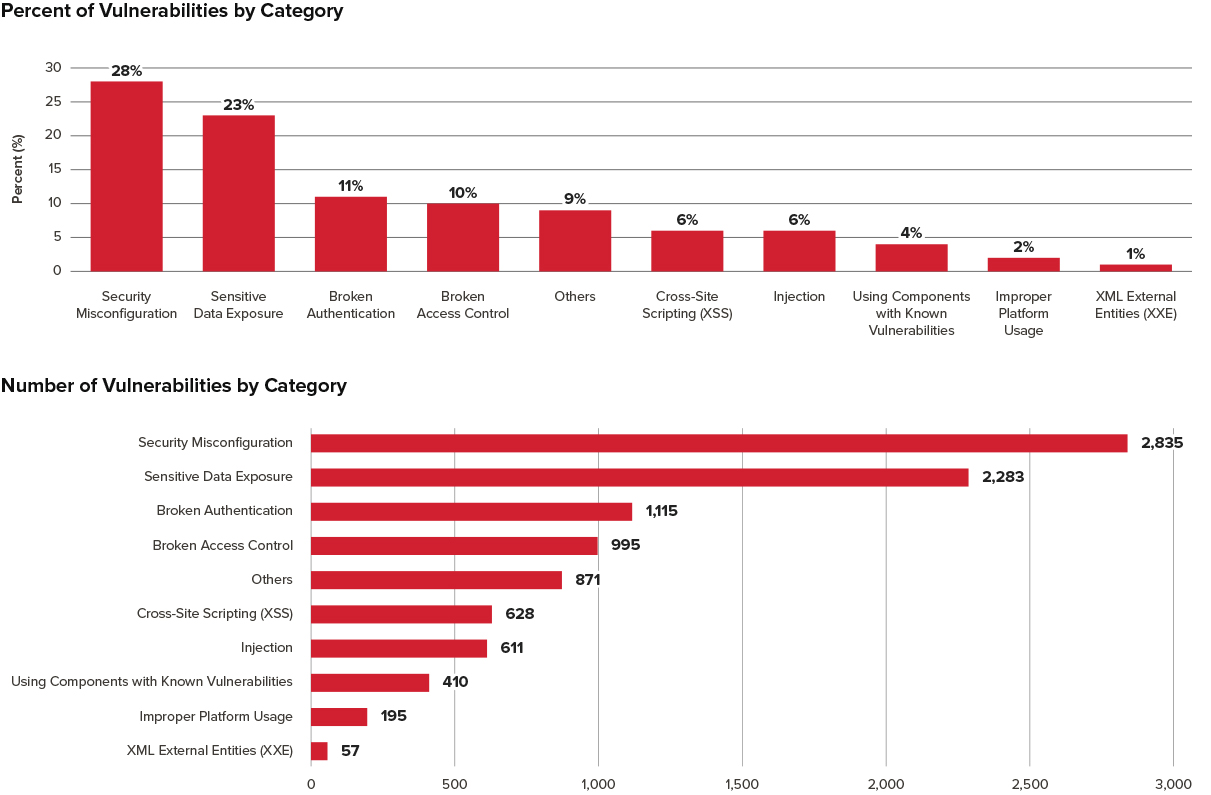

Given our experience in web application assessments, we looked at data from the last 10,000 vulnerabilities identified from web application assessments, and the most common issues are Security Misconfiguration (28%) and Sensitive Data Exposure (23%). Full analysis of the data is in the accompanying graphs.

Given how Security Misconfiguration is the most common vulnerability that we tend to find, it highlights how these misconfigurations could accidentally also be moved into production. This shows why it’s so important to periodically test all web applications in production for common vulnerabilities.
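As a small example of what an unauthenticated check for one kind of misconfiguration can look like, the sketch below reports which common security response headers are absent from a set of HTTP response headers. It is not a DAST scanner and not NetSPI’s tooling; the header list is an assumed baseline, and the function name `missing_security_headers` is hypothetical.

```python
# An assumed baseline of commonly recommended response headers.
EXPECTED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
)


def missing_security_headers(response_headers):
    """Return the expected security headers that are absent from a response."""
    # HTTP header names are case-insensitive, so compare in lowercase.
    present = {name.lower() for name in response_headers}
    return [name for name in EXPECTED_HEADERS if name.lower() not in present]
```

The input is just a mapping of header names to values, so it can be fed from whatever HTTP client you already use against your production applications.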

Key Takeaways

- Testing your external network is a good start and a common practice in most organizations.

- Keeping an up-to-date inventory of all web applications and Internet facing assets is challenging.

- Companies should periodically perform unauthenticated DAST assessments against all web applications in production.

- Data from thousands of web application assessments shows that Security Misconfiguration and Sensitive Data Exposure are the most common types of vulnerabilities found in web applications.


Staying Off the Hamster Wheel of Cloud Security Pain

It all starts innocently enough. You engage with a trusted provider to perform a penetration test of your shiny, new cloud-native application. And the penetration testers find stuff. Of course, they find stuff. They always find stuff because that’s what they do. Sure, you try to make it harder for them to find stuff, and sometimes you’re somewhat successful in that, but they’ll still find stuff.

Sigh. That means you have to fix stuff, right? Now you have a decision, and it can be a pretty tough decision. Do you fix things once and move onto the next thing? Or do you try to be a little more strategic and address the root cause of the issue, which is typically a human error committed by a well-meaning operations person? Our pals at Gartner believe that 99% of all cloud security failures will be the customer’s fault. Ouch.

You probably look at your to-do list and then make the quick fix to move onto the next thing. To be clear, I’m not judging that choice. It’s reality given the number of items on your plate and the amount of time it’ll take to really fix the problem.

Unfortunately, you are now on the cloud security hamster wheel of pain. This concept was coined back in 2005 by Andy Jaquith and highlighted the challenge of doing security correctly. No matter what you did, you ended up in the same place – breached and having to respond to the incident. Yeah, those were fun times. But I guess I shouldn’t speak of that in past tense because far too many folks are still on their hamster wheel.

So, what is a better approach to dealing with these cloud infrastructure security issues that provide the avenues for our trusted penetration testers to gain access to your cloud environment? It’s to implement a security operations platform that both responds to attacks and enforces a set of policy guardrails around your infrastructure.

Let’s start with the guardrails concept, as you may already be familiar with that term – since it’s taken on a life of its own in security marketing circles. We’re referring to the ability to assess against a set of security best practices continually (for instance, the CIS Benchmarks) and automatically remediate if a change is made that violates the policies. The guardrails moniker is apropos, as you don’t want anyone to drive your cloud application off the proverbial road, and the automated security guardrails keep you there without slowing anyone down.

But risks to your systems are not always security policy violations, as there are times where an attacker gains access to your cloud environment and starts making changes. You likely have all sorts of detection technologies and monitors (like AWS CloudTrail and GuardDuty, or Microsoft Security Center) checking on your cloud, but what happens when an alert fires from one of those tools? In some environments, nothing happens. Yeah, that’s the wrong answer. If you had a pre-designed set of playbooks to take actions depending on the attack, you’d already have addressed the attack before too much damage is done. We call this capability Cloud Detection and Response (CDR), but we’re not particular about the name – rather just that you can respond to a cloud attack at the speed of cloud.

Now, we’re all too aware that you may have broken out in hives at the mere mention of automated remediation. We get it, many of us came from operations backgrounds as well, and we know (all too well) the downside of an automation run awry. So, we believe that humans should be in the process where needed. Thus, your cloud security operations platform should have logical points where an administrator can approve an automation before it takes action. Being able to make a “decision” about an automated action goes a long way toward gaining comfort with the tool and the changes required.
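The guardrail and approval ideas can be sketched in a few lines. The example below is a simplified model, not any vendor’s platform: a resource is a plain dictionary, and `Guardrail`, `enforce`, and the `approve` callback are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Guardrail:
    """A policy check paired with the automated fix for a violation."""
    name: str
    is_compliant: Callable[[Dict], bool]
    remediate: Callable[[Dict], None]
    needs_approval: bool = False  # True means a human decides before the fix runs


def enforce(resource: Dict, guardrails: List[Guardrail],
            approve: Callable[[Guardrail, Dict], bool]) -> List[str]:
    """Check a resource against each guardrail and remediate any violations."""
    actions = []
    for guardrail in guardrails:
        if guardrail.is_compliant(resource):
            continue
        # Human-in-the-loop decision point for riskier automations.
        if guardrail.needs_approval and not approve(guardrail, resource):
            actions.append(f"{guardrail.name}: pending approval")
            continue
        guardrail.remediate(resource)
        actions.append(f"{guardrail.name}: remediated")
    return actions
```

Low-risk fixes can run automatically, while anything that might disrupt production waits at the approval step, which is the kind of decision point described above.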

I’d be lying if I told you that cloud security (or any security discipline, for that matter) is easy. There is nothing easy about it. But staying on the cloud security hamster wheel of pain of making tactical fixes over and over again is an even harder path. Building internal cloud security operations capabilities is certainly an option, as we have many friends who carry around their own “suitcase full of scripts and Lambdas” to automate remediation.

But we don’t think DIY (do it yourself) is a long-term answer either. The solution is deploying a cloud security operations platform, as we’ve described above. If you’d like to learn more about this concept and how to gracefully address the issues found by your penetration testing team, check out our webinar on April 16.

And then you’ll have the confidence that you’ll have addressed the issues once and for all, or at least until the next time NetSPI penetration testers go after your application because they’ll find different stuff. That’s what they do…

Learn more about NetSPI’s cloud penetration testing services, including how cloud pentesting helps improve your cloud security posture.