Month: October 2020

A Beginners Guide to Gathering Azure Passwords

Recent Posts

Introducing PTaaS+: Decreasing Your Organization’s Time to Remediation

The PTaaS+ features listed within this blog post are now offered with any NetSPI service that leverages PTaaS. This excludes ticketing integrations, which are available for an additional cost. Contact us to learn more.

NetSPI is focused on creating the next generation of security testing. Our Penetration Testing as a Service (PTaaS) delivers higher-quality vulnerabilities in less time than any other provider, and we are now expanding these benefits into your remediation lifecycle.

This month we’re expanding your options with our PTaaS+ plan, which focuses on vulnerability management and remediation. With our base PTaaS plan, we deliver vulnerabilities the same day they are found. Now, with PTaaS+, you and your team are empowered to act on them and begin remediating immediately, decreasing your time to remediation by up to one month for high-severity issues. A couple of key features contribute to this new functionality:

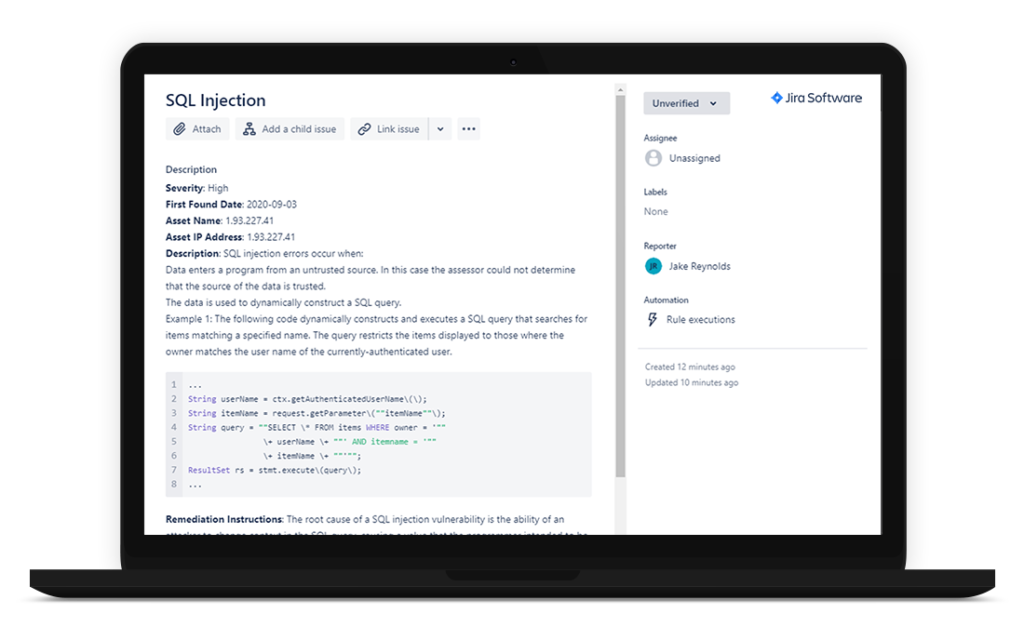

Ticketing Integrations

On average, we report over 50 vulnerabilities on a regular web application test; that number jumps above 700 when we perform external network testing. When you receive so many vulnerabilities, making sense of them all can be a full-time job before you even get to remediation. With PTaaS+, we offer free integration with Jira or ServiceNow to easily get the vulnerabilities into your tools and into the remediator’s hands on day zero.

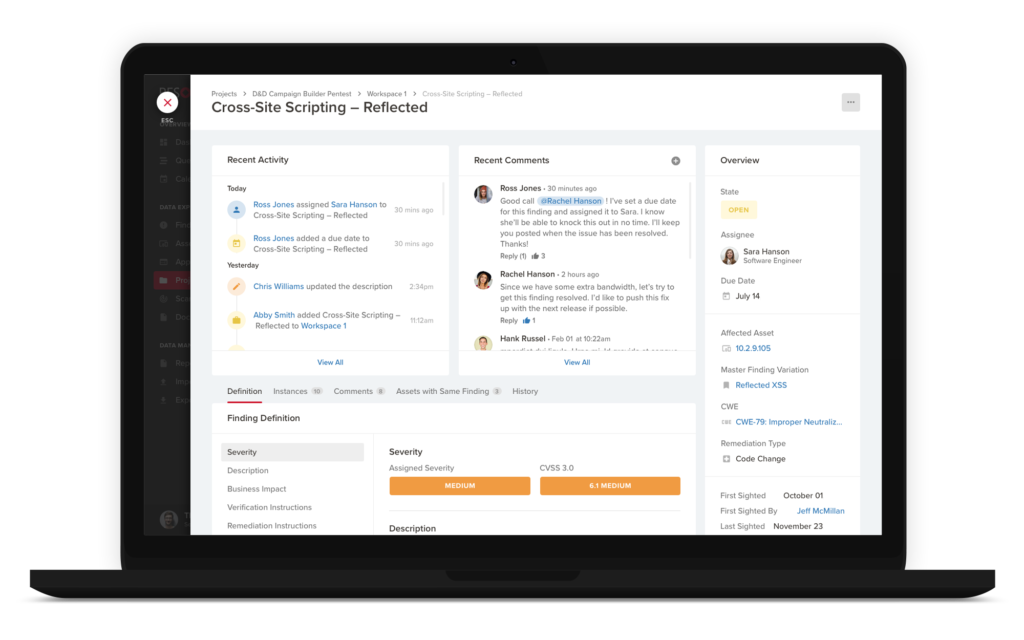

Remediation Assignments & SLAs

After receiving a large number of vulnerabilities, the first step is assigning a due date for remediation based on vulnerability severity. PTaaS+ allows each severity to be assigned a timeframe in which it must be remediated from the delivery date. NetSPI’s standard recommendation is:

- Critical – 30 days

- High – 60 days

- Medium – 90 days

- Low – 365 days

However, these can be customized to fit your organization’s policies. Additionally, with PTaaS+, you can assign vulnerabilities to specific users, letting you track and delegate vulnerabilities throughout the remediation lifecycle.
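The due-date rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `remediation_due_date` and the dictionary layout are assumptions made for this example, not part of the PTaaS+ product. The day counts come from the standard recommendation listed above.

```python
from datetime import date, timedelta

# Remediation windows in days, taken from the standard recommendation above.
DEFAULT_SLA_DAYS = {"critical": 30, "high": 60, "medium": 90, "low": 365}


def remediation_due_date(delivered_on, severity, sla_days=DEFAULT_SLA_DAYS):
    """Return the remediation due date for a finding (hypothetical helper).

    The due date is the delivery date plus the window configured for the
    finding's severity. Pass a different mapping to model a custom policy.
    """
    return delivered_on + timedelta(days=sla_days[severity.lower()])


if __name__ == "__main__":
    delivered = date(2020, 10, 1)
    for level in ("Critical", "High", "Medium", "Low"):
        print(level, remediation_due_date(delivered, level))
```

An organization with a stricter policy could pass its own mapping, for example `remediation_due_date(delivered, "critical", {"critical": 7})`.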

Vulnerability Customization

After vulnerabilities are delivered, one common point of discussion is NetSPI’s severity rating versus an organization’s internal vulnerability rating. Every organization rates vulnerabilities differently. To help with that, PTaaS+ allows you to provide an assigned severity for each vulnerability, from which your remediation due dates can be calculated. Both NetSPI’s severity and yours will be maintained for auditing and future reporting.
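One way to picture keeping both ratings side by side is a record that stores each severity separately and falls back to the original rating when no override is set. The class and field names below are hypothetical and are not taken from the PTaaS+ data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    """A reported vulnerability with two independent severity ratings."""

    title: str
    netspi_severity: str                     # rating assigned by the tester
    assigned_severity: Optional[str] = None  # optional internal override

    @property
    def effective_severity(self) -> str:
        # Use the organization's rating when one exists; the original
        # rating is never overwritten, so both remain available for audit.
        return self.assigned_severity or self.netspi_severity


if __name__ == "__main__":
    finding = Finding("Verbose error messages", netspi_severity="medium")
    finding.assigned_severity = "low"
    print(finding.effective_severity, finding.netspi_severity)
```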

Data Analytics

After you have a handle on your remediation processes, you can start looking for trends to ensure fewer vulnerabilities next year. PTaaS+ grants you access to NetSPI’s Data Lab, which allows you to analyze and trend vulnerabilities across all your assessments with NetSPI. Popular Data Lab queries include:

- Riskiest asset in your environment

- Most common vulnerabilities across your company

- Top OWASP categories
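To give a feel for what such queries compute, here is a small sketch over an in-memory list of findings. It does not use the Data Lab itself: the sample records, the severity weights, and the function names are all invented for illustration.

```python
from collections import Counter

# Assumed weights for scoring assets; a real program would choose its own.
SEVERITY_WEIGHT = {"critical": 4, "high": 3, "medium": 2, "low": 1}

# Made-up sample findings, not real assessment data.
FINDINGS = [
    {"asset": "web-01", "name": "Cross-Site Scripting", "severity": "high"},
    {"asset": "web-01", "name": "SQL Injection", "severity": "critical"},
    {"asset": "api-02", "name": "Cross-Site Scripting", "severity": "medium"},
    {"asset": "api-02", "name": "Weak TLS Configuration", "severity": "low"},
    {"asset": "vpn-03", "name": "Weak TLS Configuration", "severity": "low"},
    {"asset": "vpn-03", "name": "Cross-Site Scripting", "severity": "low"},
]


def most_common_vulnerabilities(findings, n=3):
    """Count findings by name and return the n most frequent."""
    return Counter(f["name"] for f in findings).most_common(n)


def riskiest_asset(findings):
    """Return the asset with the highest summed severity weight."""
    scores = Counter()
    for f in findings:
        scores[f["asset"]] += SEVERITY_WEIGHT[f["severity"]]
    return scores.most_common(1)[0][0]


if __name__ == "__main__":
    print(most_common_vulnerabilities(FINDINGS, 1))
    print(riskiest_asset(FINDINGS))
```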

NetSPI Adds to Leadership Team to Support Continued Focus on Customer Success

TechTarget: How to hold Three Amigos meetings in Agile development

On October 22, NetSPI’s Shyam Jha, SVP of Engineering, was featured in TechTarget:

Agile software development teams often adopt what’s called a Three Amigos approach, which combines the perspectives of business, development and quality assurance for sprint planning and evaluation.

Typically, a Three Amigos meeting includes a business analyst, quality specialist and developer. The product owner or other businessperson clarifies the requirements before development; the development expert lays out the issues that arise in development processes; and the software-quality expert or tester ensures that testing considerations are designed into requirements and development.

Read the full article here.

Cyber Security: How to Provide a Safe, Secure Space for People to Work

Creating a Culture of Education Around Cyber Security

When it comes to cyber security training, less is more. Determine what is necessary and make it mandatory compliance training. The balance can be subtle and served through a variety of media. Ideally, it will not even feel like training. For example, record a brief video, narrate over a few PowerPoint slides, or host Q&A sessions for any cyber security questions.

It is no longer realistic to base cyber security standards around employees keeping their personal and professional activities separate. Educating employees about digital security in their personal lives will carry over into their business activities. Additionally, employees will appreciate the trusted guidance.

Another of my training philosophies is having open communication absent of shaming. When someone is the victim of a cyber security scam, they feel shame. We need to move past this. If an employee reports being tricked by a suspicious email, link, call, etc., thank them and encourage them to share their experience with other employees. This helps others protect themselves and your business.

Lessons Learned from the Unlikeliest of Places

In 2016, I joined the cyber security team after two years of international travel, during which my husband and I bicycled from Seattle to Singapore. The lessons I learned along the way were surprisingly relevant to my work in cyber security.

- Learning to communicate when you don’t share a common language was key for me both in work and in life. Professionally, I was able to translate cyber security or any tech speak to something employees could understand and relate to their daily responsibilities. More broadly, as our environment grows more diverse, we will continue to find ourselves interacting with people from different geographies, cultural backgrounds, and native languages. It is increasingly important for us to effectively communicate with our global citizens whether personally or professionally.

- Change has become the new normal. Being in a different location and needing to find food, water, and shelter each day forced me to live with change and uncertainty. Within a few weeks, it became normal and much less stressful. I became comfortable trusting that things would work out.

Areas of Cyber Security Focus in the Biotech Sector

Great cyber security is boring, and some media have done a disservice to the industry by making it flashy and scary. Cyber security is about doing the preparation to provide a safe, secure space for people to work. Three main areas I would focus on are:

- Equipment Maintenance: Everything runs off software. Ensure the software is current with security patches applied. It can be a difficult balance between business and security when you need to take a money-making instrument offline to do a security upgrade. Having excellent cross-functional relationships so you can have those tough conversations is critical.

- Data Privacy: Ensure your systems are secured from the outside and you have alerting and monitoring mechanisms in place should the worst happen. Prevention only goes so far. You need to be prepared and practiced for what to do in the event of a breach. Speed of recognition and recovery matters more than prevention alone.

- Audit Trails: Ensure that the right information and the right discoveries are attributed to the right people. Audit trails are also key in cyber security investigations. When you are trying to determine whose PC, which server, or what part of the network was infiltrated, that audit trail and an environment with open communication allow you to conduct a successful investigation.

The views presented by Kristin are her own and do not necessarily represent the views of her employer or its subsidiaries.

The Payment Card Industry: Innovation, Security Challenges, and Explosive Growth

In a recent episode of Agent of Influence, I talked with John Markh of the PCI Council. John has over 15 years of experience in information security, encompassing compliance, threat and risk management, security assessments, digital forensics, application security, and emerging technologies such as AI, machine learning, IoT, and blockchain. John currently works for the PCI Council, where his role includes developing and evolving standards for emerging mobile payment technologies, along with technical contributions to efforts surrounding penetration testing, secure applications, the secure application lifecycle, and emerging technologies such as cloud and IoT.

I wanted to share some of his insights in a blog post, but you can also listen to our interview here, on Spotify, Apple Music, or wherever you listen to podcasts.

About the PCI (Payment Card Industry) Council

The PCI Council was established in 2006 by several payment brands to create a single framework, acceptable to all of those brands, for securing the payment or account data of the merchants and service providers in that ecosystem. Since then, the PCI Council has created many additional standards that cover not only the operational environment but also device security, such as the PCI PTS standard, as well as security standards that cover hardware security modules and point-to-point encryption solutions. The Council is in the process of developing security standards for various emerging payment technologies. The mission of the Council is to allow secure payment processing by all stakeholders.

Over the years, a number of the security requirements created by the Council have been enhanced to ensure the standard does not become obsolete but keeps up with the current threats to the payment card industry as a whole. For example, PCI DSS, which was the very first standard created and published by the Council, has evolved and had numerous iterations since its publication to account for evolving threats.

The standards built by the PCI Council are built in a way to address threats that directly impact the payment ecosystem. They are not all-encompassing standards. For example, organizations that operate national infrastructure or electricity grids will find some security requirements that will be applicable to them, but the standards will not address all the risks that are applicable to them. The PCI Council standard is focused on the payment ecosystem.

The Evolution of the Payment Card Industry

John shared how people want convenience – not just in payment, but in every aspect of their life. They want convenience and security. So, payments will evolve to accommodate that.

Even today, there are stores where you put items you want to purchase in your shopping bag and you walk out. Automation, artificial intelligence, machine vision, and biometric systems that are installed in that store will identify the products you have put in your bag and deduct the money from your pre-registered account completely seamlessly.

There are also pilot stores in Asia where you still have to check out at the grocery store, but to pay, you just look at a scanner, which verifies your identity through an iris scan, and then payment is processed from a pre-registered account.

Many appliances are also becoming connected to the internet, so it is possible that in the future, a refrigerator will identify that you have run out of milk, order milk to be delivered to you, and perform the payment on your behalf. You could soon wake up with a fresh gallon of milk on your doorstep that was ordered by your refrigerator.

And of course, mobile is everywhere. More and more people have smartphones – and smartwatches – and with that comes the convenience of paying using your device. Paying by smart device is way simpler and in these times of COVID-19, it’s also contactless. I think we will see more and more technologies that allow this type of payment. It will still be backed by a credit card behind the scenes, but the form factor of your rectangular plastic will shift to other form factors that are more convenient and seamless.

There are also “smart rings” that can perform biometric authentication of the wearer of the ring. You can load payment cards and transit system cards into the ring, for example. So, when you want to pay or take the train, you just tap your ring to the NFC-enabled reading device, and you’re done.

Convenience will drive innovation. Innovation will have to adapt to meet security standards and it will also drive new security standards to ensure that the emerging technologies are secure.

Innovation and Privacy

In order to have seamless payments, the system still needs some way to validate who you are. If you use a chip-and-PIN enabled card, you authenticate yourself by entering a PIN, which is a manual process. John noted that it’s far more seamless to use iris scans, but to do that, you need to surrender something of yours to the system so the system can identify that you are you.

Right now, the standards are focusing on protecting account data, but maybe in the future, there will be a merger of standards that focus on protecting account data and standards that protect biometric data.

People have several characteristics that identify them for their entire lifetime because they don’t change much, including fingerprints and irises. It’s difficult to say whether a fingerprint or an iris scan is the right choice for consumer authentication. At the end of the day, the payment system needs to authenticate you. If the system is using characteristics that cannot be changed, then it also needs additional inputs to make sure that the transaction is not fraudulent.

For example, payment authentication could be a combination of your fingerprint and the mobile device you’re using. If it is a known mobile device that belongs to you, the system could accept the transaction that was authenticated by your fingerprint plus additional information collected from your device, such as the fact that it belongs to you and there is no known malware on the device. If you were using your fingerprint on a new device, the system could identify that the fingerprints match, but recognize it’s a new device or the device might have some suspicious software on it, in which case the system will ask you to enter your PIN or to provide additional authentication. It will be a more elaborate system that takes numerous characteristics of the transaction and its environment into account before the transaction is processed.
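The decision logic in that example can be sketched as a small function. This is a simplified illustration rather than how any real payment system works; the three boolean signals and the outcome labels are assumptions chosen to mirror the paragraph above.

```python
from dataclasses import dataclass


@dataclass
class TransactionContext:
    """Signals gathered about one payment attempt (hypothetical fields)."""

    fingerprint_matches: bool
    device_known: bool
    malware_suspected: bool


def authentication_decision(ctx: TransactionContext) -> str:
    """Return 'approve', 'step_up', or 'decline' for a transaction."""
    if not ctx.fingerprint_matches:
        # The biometric factor failed, so nothing else can rescue the attempt.
        return "decline"
    if ctx.device_known and not ctx.malware_suspected:
        # Known, clean device plus a matching fingerprint.
        return "approve"
    # New or suspicious device: ask for a PIN or additional authentication.
    return "step_up"


if __name__ == "__main__":
    new_device = TransactionContext(
        fingerprint_matches=True, device_known=False, malware_suspected=False
    )
    print(authentication_decision(new_device))
```

A production system would weigh many more characteristics of the transaction and its environment, but the shape is the same: an unchangeable biometric is one input among several rather than the sole gate.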

Challenges of Making the Phone a Point of Sale (POS)

One area of focus for the PCI Council is mobile payment platforms. As John said, business owners want to be able to install an app on mobile devices and take payments through it, creating an instant point of sale. However, the fact that the phone is not controlled by an enterprise, and that people can install a variety of applications on their phones (some of which might be malware), puts tremendous risk on the entire payment processing system.

While this enables business owners to sell to more people, especially those who don’t have cash and only have credit cards or smart devices, it also creates an additional avenue for potential fraud.

John said the PCI Council is focused on a way to make mobile payment platforms more secure. As such, the Council has already published two standards.

- The Software-based PIN Entry on COTS (SPoC) standard enables solution providers to develop an app along with a small hardware dongle. The purpose of the hardware dongle is to read card information, while the phone becomes the point of sale and the device on which consumers enter their PIN to authenticate themselves.

- The second standard the PCI Council has released is Contactless Payments on COTS (CPoC™). In this case, it’s just an application that the merchant can download to their phone that would make sure the phone is reasonably secure by performing various attestations of the phone and application, and allow merchants to instantly transform their phone into a point of sale. In some emerging markets, there is no payment infrastructure that exists where you can walk into a bank and get a merchant account, or it may take a very long time. With the mobile payment technologies, you can basically become a merchant immediately.

As I have personally seen, having the ability to make financial transactions in parts of the world that don’t have a lot of infrastructure through mobile devices has dramatically changed people’s livelihood. And we need to make sure that it’s being done securely.

To listen to the full podcast, click here, or you can find Agent of Influence on Spotify, Apple Music, or wherever you listen to podcasts.

Cyber Defense Magazine: 3 Steps to Reimagine Your AppSec Program

On October 7, NetSPI Managing Director Nabil Hannan and Product Manager Jake Reynolds were featured in Cyber Defense Magazine:

With Continuous Integration/Continuous Deployment (CI/CD) increasingly becoming the backbone of the modern DevOps environment, it’s more important than ever for engineering and security teams to detect and address vulnerabilities early in the fast-paced software development life cycle (SDLC) process. This is particularly true at a time where the rate of deployment for telehealth software is growing exponentially, the usage of cloud-based software and solutions is high due to the shift to remote work, contact tracing software programs bring up privacy and security concerns, and software and applications are being used in nearly everything we do today. As such, there is an ever-increasing need for organizations to take another look at their application security (AppSec) strategies to ensure applications are not left vulnerable to cyberattacks.

Read the full article for three steps to get started – starting on page 65 of the digital magazine here.

The Evolution of Security Frameworks and Key Factors that Affect Software Development

In a recent episode of Agent of Influence, I talked with Cassio Goldschmidt, Head of Information Security at ServiceTitan, about the evolution of security frameworks used to develop software, including key factors that may affect one company’s approach to building software versus another. Cassio is an internationally recognized information security leader with a strong background in both product and program level security.

I wanted to share some of his insights in a blog post, but you can also listen to our interview here, on Spotify, Apple Music or wherever you listen to podcasts.

Key Considerations When Developing Software

As Goldschmidt noted, one of the first highly publicized security frameworks was the Microsoft SDL. Many security practitioners thought it was the way to develop software, a one-size-fits-all approach. But that is definitely not the case.

Goldschmidt said that when SAFECode (Software Assurance Forum for Excellence in Code), a not-for-profit, was created, it was a place to discuss how to develop secure code and what the development lifecycle should be, both among its member companies and at large. But different types of software and environments require different approaches, and will be affected by a variety of factors at each business, including:

- Type of Application: Whether you are developing an application that is internet facing or just internet connected, or software for ATMs, will influence the kind of defense mechanisms you need and how you should think about the code you’re developing.

- Compliance Rules: If your organization has to abide by specific compliance obligations, such as PCI, they will in some ways dictate what you have to do. Like it or not, you will need to follow some steps, including looking at the OWASP Top 10 and making sure you are free of any cross-site scripting or SQL injection flaws.

- The Platform: The architecture for phones and the security controls you have for memory management are very different from a PC, or what you have in the data center, where people will not be able to actually reverse engineer things. It’s something you have to take into consideration when you are deciding how you are going to review your code and the risk that it represents.

- Programming Language: A lot of software is still developed in C++ today. Depending on the language you use, you may not have built-in protection against cross-site scripting, so you have to make sure you are doing something to compensate for the flaws of the language.

- Risk Profile: Each business has its own risk profile and its own tolerance for the kinds of attacks it is willing to endure. For example, DDoS could be a bigger problem for some companies than for others, and depending on the type of business, a data breach might not matter as much for some companies as it does for others. If you’re in the TV streaming business and a single episode of Game of Thrones leaks, it likely won’t have a big impact, but if you’re in the movie business and one of your movies leaks, that will likely affect revenue for that movie.

- Budget: Microsoft, Google, and other companies with large budgets have employee positions that don’t exist anywhere else. For example, when Goldschmidt was at Symantec, they had a threat research lab, which is a luxury. Start-ups and many other companies might not have this and might need to use augmented security options.

- Company Culture: The maturity of the company’s culture also matters quite a bit. Security is not a one-time activity that you can do at a given moment, but something that ends up becoming part of your culture.
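To make the cross-site scripting and SQL injection points from the list above concrete, here is a minimal sketch of the two usual compensating measures, using only the Python standard library: encoding untrusted output before it is placed in HTML, and passing user input to the database as a bound parameter. The function names, the `users` table, and the sample values are assumptions made for this example.

```python
import html
import sqlite3


def render_comment(comment: str) -> str:
    """Escape untrusted text before embedding it in HTML output."""
    return "<p>" + html.escape(comment) + "</p>"


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a bound parameter instead of string concatenation."""
    cursor = conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    )
    return cursor.fetchone()


# In-memory database with one made-up row for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES (?)", ("alice",))

if __name__ == "__main__":
    print(render_comment("<script>alert(1)</script>"))
    print(find_user(conn, "alice"))
    # The injection attempt is treated as a literal name and matches nothing.
    print(find_user(conn, "alice' OR '1'='1"))
```

Many web frameworks apply this kind of encoding automatically in their templates. In a language or stack that does not, the escaping has to be done explicitly, which is the compensation the list describes.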

Today, there are a lot of tools and resources in the market, such as Agile Security by O’Reilly, that will tell you how to do things in a way that really fits the new models people are using to develop code.

Security Software Versus Software Security

Security software is the software used to defend your computer, such as antivirus, firewalls, IDS, and IPS. These are really important, but that doesn’t mean they are necessarily secure software or that they were actually developed with defensive programming in mind. Meanwhile, secure software is software developed to resist malicious attacks.

Goldschmidt said he often hears that people who make security software don’t necessarily make secure software. In his experience though, security software is so heavily scrutinized that it eventually becomes secure software. For example, antivirus software is a great target for hackers because if an attacker can get in and disable that antivirus, they can ultimately control the system. So, from his experience, security software does tend to become more secure, although it’s not necessarily true all the time.

One inherent benefit I’ve noticed for companies developing security software is that they’re in the business of security, so the engineers and developers they’re hiring are already very savvy when it comes to understanding security implications. Thus, they tend to focus on making sure at least some of the most common and basic issues are covered by default, and they’re not going to fall prey to basic issues.

If an individual doesn’t have this experience when they join a company developing security software, it becomes part of their exposure and experience since they are spending so much time learning about viruses, malware, vulnerabilities, and more. They inherently learn this as part of their day to day – it’s almost osmosis from being around other developers who are constantly thinking about it.

One of my mentors described the difference between security software and software security to me this way: Security software is software that’s going to protect you as the end user from getting breached. Software security is making sure that your developers are building the software so that it behaves even when an attacker is trying to make it misbehave.

Goldschmidt and I also spent time discussing the cyber security of the Brazilian elections. You can listen to the podcast here to learn more.

TechTarget: 3 common election security vulnerabilities pros should know

On October 1, NetSPI Managing Director Nabil Hannan was featured in TechTarget:

During the Black Hat 2020 virtual conference, keynote speaker Matt Blaze analyzed the security weaknesses in our current voting process and urged the infosec community – namely pentesters – and election commissions to work together. His point: Testers can play an invaluable role in securing the voting process as their methodology of exploring and identifying every possible option for exploitation and simulating crisis scenarios is the perfect complement to shore up possible vulnerabilities and security gaps.

Read the full article here.