Noah Dunn

Credentials and certifications earned include Certified Blockchain Security Professional and Certified Solidity Developer. He holds a master’s degree in Digital Forensics and Cyber Analysis.

Anti-Scraping Part 2: Implementing Protections

Published 2023-07-13

Continuing our series on Anti-Scraping techniques, this blog covers implementation of Anti-Scraping protections in a fake message board and examination of how scrapers can adapt to these changes.

While completely preventing scraping is likely impossible, implementing a defense in depth strategy can significantly increase the time and effort required to scrape an application. Give the first blog in this series a read, Anti-Scraping Part 1: Core Principles to Deter Scraping, and then continue on with how implementing these core principles affects scrapers' ability to exfiltrate data.

Hardening the Application

Multiple changes have been made to the Fake Message Board site to try and prevent scraping. The first major change is that rate limiting has been enforced throughout the application. Previously, the search bar had a “limit” parameter with no maximum enforced value which allowed all users of the application to be scraped in only a few requests. Now the “limit” parameter has a maximum enforced value of 100. All error messages have been converted to generic messages that do not leak information.

Not all the recommended changes have been applied to the application. Account creation has still not been locked down. Additionally, the /search endpoint still does not require authentication. As illustrated in “The Scraper’s Response” section, these gaps undermine most of the “fixes” made to the application.

Implementing Rate Limiting

There are many design decisions that need to be made when implementing rate limits. Here are some of the considerations:

- Should rate limits be specific to endpoints or constant across the application?

- How many requests should be allowed per unit of time? (ex: 100 requests/second)

- What are the consequences of reaching a rate limit?

Additionally, there is a distinction between logged-in and logged-out rate limits. Logged-in rate limits are applied to user sessions. When scrapers create fake accounts and use them for scraping, logged-in rate limits can suspend or block those accounts. If creating fake accounts is difficult, then this can significantly hinder a scraper’s progress. Logged-out rate limits are applied to endpoints where having an account isn’t required. From a defensive perspective, there are limited signals that can be used when a scraper is logged-out. The most common signal is an IP address. There are additional signals such as user-agent, cookies, additional HTTP headers, and even TCP fields. In the next sections we’ll review how the Fake Message Board application implemented both logged-in and logged-out rate limits.

Logged-in Rate Limits

In this case the logged-in rate limits are applied across the application. This probably isn’t the best decision for most organizations, but it is the easiest to implement. Each user is allowed to send 1000 requests/minute, 5000 requests/hour, and 10,000 requests/day. If a user violates any of those rate limits, then they receive a strike. If a user receives 3 strikes, then they are blocked from the platform. Implementing an unforgiving strategy like this is probably too strict for most applications.
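The strike policy described above can be sketched as a small sliding-window limiter. This is a minimal illustration and not the Fake Message Board's actual code: the class name `StrikeRateLimiter`, the in-memory storage, and the choice to clear a user's history after each strike are all assumptions made for the example. Only the three limits and the 3-strike threshold come from the text.

```python
import time
from collections import defaultdict, deque

# Limits from the text: (window in seconds, requests allowed within that window)
LIMITS = [(60, 1000), (3600, 5000), (86400, 10000)]
MAX_STRIKES = 3


class StrikeRateLimiter:
    """Sliding-window rate limiter with strikes, keyed by user ID."""

    def __init__(self, limits=LIMITS, max_strikes=MAX_STRIKES):
        self.limits = limits
        self.max_strikes = max_strikes
        self.history = defaultdict(deque)  # user_id -> timestamps of accepted requests
        self.strikes = defaultdict(int)    # user_id -> strikes received so far

    def allow(self, user_id, now=None):
        """Return True if the request may proceed, False if it is rejected."""
        now = time.time() if now is None else now
        if self.strikes[user_id] >= self.max_strikes:
            return False  # the user is blocked from the platform

        timestamps = self.history[user_id]
        longest_window = max(window for window, _ in self.limits)
        # Forget requests that are older than the longest window.
        while timestamps and timestamps[0] <= now - longest_window:
            timestamps.popleft()

        for window, allowed in self.limits:
            # Linear scan for clarity; a production limiter would keep counters.
            recent = sum(1 for t in timestamps if t > now - window)
            if recent >= allowed:
                self.strikes[user_id] += 1
                timestamps.clear()  # assumption: one burst earns exactly one strike
                return False

        timestamps.append(now)
        return True
```

Keying the limiter on a user ID rather than a session ID means that logging out and back in does not reset the counters. A real deployment would likely keep this state in a shared store so that every application server sees the same counts.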

Determining the number of requests allowed per unit of time is a very difficult problem. The last thing we want is to impact legitimate users of the application. Analyzing traffic logs to see how many requests the average user is sending will be critical to this process. When viewing the users sending the most traffic, it may be difficult to distinguish authentic traffic from users misusing the platform via automation. This will more than likely require manual investigations and extensive testing in pre-production environments.

It is also worth noting that bugs in applications can result in requests being repeatedly sent on the user’s behalf without their knowledge. Punishing these users with rate limits would be a huge mistake. If a user is sending a large number of requests to the same endpoint and the data returned isn’t changing, then it seems more likely that this is a bug in the application as opposed to scraping behavior.

Logged-out Rate Limits

In this case the logged-out rate limits are also applied across the application. Each IP address is allowed to send 1000 requests/minute, 5000 requests/hour, and 10,000 requests/day. If an IP address violates any of those rate limits, then it receives a strike. If the IP receives 3 strikes, then it’s blocked from the platform. This isn’t a realistic solution for multiple reasons, but the primary one is that some IP addresses are shared by many users, such as public WiFi IPs or university IPs. In those cases, the IPs would be blocked unfairly.

One of the main weaknesses of logged-out rate limits is that almost every signal can be controlled by the scraper. For example, the scraper can change their user agent with every request. If that signal is used in isolation, then you’ll just see a small number of requests from a bunch of different user agents. Similarly, a scraper can rotate their IP address. This is a devastating technique from a defensive perspective because our most reliable signal is the IP address. There are multiple open source libraries that make rotating IP addresses trivial. Implementing effective logged-out rate limits will probably require combining several of the signals mentioned above in sophisticated ways.
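As a rough illustration of combining signals, the sketch below tracks two patterns at once: one IP address presenting many different user agents, and one header "fingerprint" arriving from many IP addresses. The `fingerprint` function, the `SignalTracker` class, and both thresholds are hypothetical and chosen only for the example. Shared IPs and popular browsers will trip naive thresholds like these, so the result is better treated as a flag for review than as grounds for a block.

```python
from collections import defaultdict


def fingerprint(headers):
    """Header traits other than User-Agent that a scraper may forget to vary."""
    return (
        headers.get("Accept-Language", ""),
        headers.get("Accept-Encoding", ""),
        tuple(headers),  # header names in the order they were sent
    )


class SignalTracker:
    """Flags user-agent rotation on one IP and IP rotation behind one fingerprint."""

    def __init__(self, max_agents_per_ip=5, max_ips_per_fingerprint=50):
        # Both thresholds are illustrative assumptions, not recommendations.
        self.max_agents_per_ip = max_agents_per_ip
        self.max_ips_per_fingerprint = max_ips_per_fingerprint
        self.agents_by_ip = defaultdict(set)
        self.ips_by_fingerprint = defaultdict(set)

    def observe(self, ip, headers):
        """Record the signals carried by one logged-out request."""
        self.agents_by_ip[ip].add(headers.get("User-Agent", ""))
        self.ips_by_fingerprint[fingerprint(headers)].add(ip)

    def is_suspicious(self, ip, headers):
        """Return True if either rotation pattern exceeds its threshold."""
        many_agents = len(self.agents_by_ip[ip]) > self.max_agents_per_ip
        many_ips = (
            len(self.ips_by_fingerprint[fingerprint(headers)])
            > self.max_ips_per_fingerprint
        )
        return many_agents or many_ips
```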

Enforcing Data Limits

Ensuring that there’s a maximum limit to the amount of data that can be returned per response is a crucial piece in the fight against scraping. Previously, the search bar had a “limit” parameter which could return hundreds of thousands of users in one response. Now only up to 100 users can be returned per response. If more than 100 users are requested, then an error message is returned as shown below.

HTTP Request:

POST /search?limit=101 HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

{"input":"t"}

HTTP Response:

HTTP/1.0 400 Bad Request

[TRUNCATED]

{"error":"error"}
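The check behind the response above can be sketched as follows. This is a minimal, framework-free illustration under stated assumptions: `parse_limit`, `handle_search`, and the injected `search_fn` are hypothetical names, and the default of 8 is taken from the search bar's default described in Part 1. The maximum of 100 and the generic error body come from the text.

```python
MAX_LIMIT = 100      # maximum enforced value from the text
DEFAULT_LIMIT = 8    # the search bar's default, per Part 1
GENERIC_ERROR = {"error": "error"}


def parse_limit(raw_limit):
    """Return a validated limit, or None if the request should be rejected."""
    if raw_limit is None:
        return DEFAULT_LIMIT
    try:
        limit = int(raw_limit)
    except (TypeError, ValueError):
        return None
    return limit if 1 <= limit <= MAX_LIMIT else None


def handle_search(query_params, body, search_fn):
    """Return (status_code, json_body) for a search request.

    search_fn(term, limit) is a hypothetical stand-in for the real lookup.
    """
    limit = parse_limit(query_params.get("limit"))
    if limit is None:
        # Same generic body for every invalid limit, so nothing is leaked.
        return 400, GENERIC_ERROR
    return 200, search_fn(body.get("input", ""), limit)
```

Rejecting an oversized limit, rather than silently clamping it to 100, matches the behavior shown in the request above. Either choice enforces the data limit.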

Return Generic Error Messages

Previously the forgot password and create account workflows leaked information useful to scrapers via error messages. The forgot password workflow used to reveal whether the provided username matched an existing account and would return the email address if it did. Now it always returns the same message, “If the account exists an email has been sent.”

HTTP Request:

POST /forgotPassword HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=doesnotexist

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{"message":"if the account exists an email has been sent"}

The create account workflow used to reveal whether a specific username, email address, or phone number was already taken. Now it always returns the message, “If the account was created an email has been sent.”

HTTP Request:

POST /createAccount HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=vfoster166&email=test@test.com&phone=&password=[REDACTED]

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{"response":"if the account was created an email has been sent"}
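A handler that behaves this way can be sketched in a few lines. The function name `forgot_password`, the `accounts` mapping of usernames to email addresses, and the injected `send_email` callable are assumptions made for the example. The response body is the one shown above.

```python
GENERIC_MESSAGE = {"message": "if the account exists an email has been sent"}


def forgot_password(username, accounts, send_email):
    """Return the same (status, body) whether or not the username exists.

    accounts maps usernames to email addresses, and send_email is a callable
    that delivers the recovery email. Both are hypothetical stand-ins.
    """
    email = accounts.get(username)
    if email is not None:
        send_email(email)
    # Status code and body are identical on both paths.
    return 200, GENERIC_MESSAGE
```

Identical bodies are only part of the picture. If sending the email makes the valid-user path noticeably slower, response timing can still reveal which usernames exist, so queuing the email for background delivery is worth considering.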

The Scraper’s Response

Since the application has been updated, let’s review each piece of functionality and figure out how we can bypass the protections:

- Recommended Posts Functionality: Requires authentication, and rate limits your account after 1,000 requests.

- Search Bar: Does not require authentication, returns 100 users/response, and rate limits your IP address after 1,000 requests.

- User Profile Pages: Requires authentication and rate limits your account after 1,000 requests.

- Account Creation: No longer useful for leaking data, but fake accounts can be made easily.

- Forgot Password: No longer useful for leaking data.

Overall, the application has had multiple improvements since the previous scrape in Part 1. The introduction of rate limits forces us to adapt our techniques. For Logged-In scraping the tactic will be to rotate sessions. Since we’re able to easily make many fake accounts, we will send a small number of requests from each account and as a result never get rate limited. For Logged-out scraping the tactic will be to rotate IP addresses. By only sending a few requests from each IP address we will hopefully never get rate limited.

Logged-In Scraping

The goal is to extract all 500,000 users using either the recommended posts functionality or user profile pages. Since user profile pages return more data about the user (ID, name, username, email, birthday, phone number, posts, etc…) let’s focus on that endpoint. Assuming we only get one user per response, each of our fake accounts can send 999 requests/minute without being rate limited. If we make 500 fake accounts, then each account will only have to send ~999 requests in order to scrape every user on the platform. This means in only a couple minutes every user can be scraped and no rate limiting will be encountered.

Pseudo Scraper Code:

import itertools
import requests

fake_accounts = [f"session{i}" for i in range(1, 501)]  # placeholder session cookies for the 500 fake accounts
sessions = itertools.cycle(fake_accounts)  # rotate your session on every request

for user_id in range(1, 500_001):  # user IDs start at 1 and are sequential; go through all 500,000 users
    cookies = {"session_cookie": next(sessions)}
    user_data = requests.get(f"http://127.0.0.1:12345/{user_id}/profile/", cookies=cookies)

Logged-Out Scraping

Extracting all 500,000 users using the search functionality is not as easy as it was last time. Now only 100 users are returned per response. Since each IP address can send 999 requests/minute without being rate limited, each IP can scrape 99,900 users without being blocked. This means only a few IP addresses are required. Most IP rotation tools leverage tens of thousands of IPs so getting a few is not an issue.

Pseudo Scraper Code:

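users_scraped = 0
while users_scraped < 500_000:  # simplification: assumes every response contains only new users
    resp = requests.post("http://127.0.0.1:12345/search?limit=100", json={"input": random_search_string()}, proxies=next_proxy())  # both helpers are placeholders: a search-term generator and an IP rotation service
    users_scraped += len(resp.json())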
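The request budgets behind both scraping approaches can be checked with quick arithmetic. The sketch below uses only numbers from the text (500,000 users, 999 requests per minute as the "safe" rate, 100 users per search response). The function and key names are made up for the example, and the logged-out figures assume the best case in which every search response returns 100 users that have not been seen before.

```python
TOTAL_USERS = 500_000
SAFE_REQUESTS_PER_MINUTE = 999   # one below the 1000 requests/minute limit
USERS_PER_SEARCH_RESPONSE = 100  # maximum enforced "limit" value


def ceil_div(a, b):
    """Integer division that rounds up."""
    return -(-a // b)


def scrape_budget(fake_accounts=500):
    """Return the request counts implied by the limits described in the text."""
    users_per_ip_per_minute = SAFE_REQUESTS_PER_MINUTE * USERS_PER_SEARCH_RESPONSE
    return {
        # Logged-in: one profile per request, spread across the fake accounts.
        "requests_per_account": ceil_div(TOTAL_USERS, fake_accounts),
        "accounts_for_999_each": ceil_div(TOTAL_USERS, SAFE_REQUESTS_PER_MINUTE),
        # Logged-out: 100 users per search response.
        "search_requests": ceil_div(TOTAL_USERS, USERS_PER_SEARCH_RESPONSE),
        "users_per_ip_per_minute": users_per_ip_per_minute,
        "ips_for_one_minute": ceil_div(TOTAL_USERS, users_per_ip_per_minute),
    }


if __name__ == "__main__":
    for name, value in scrape_budget().items():
        print(f"{name}: {value:,}")
```

With exactly 500 accounts each account sends 1,000 requests, which is right at the per-minute allowance, while 501 accounts keep every account at 999 or fewer. On the logged-out side, 5,000 search requests cover all users in the best case, and six IP addresses are enough to finish within a single minute.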

Summary

With multiple protections in place, scraping all 500,000 fake users of the application in a few minutes is still pretty easy. As a quick recap, the five core principles of Anti-Scraping in our view are:

- Require Authentication

- Enforce Rate Limits

- Lock Down Account Creation

- Enforce Data Limits

- Return Generic Error Messages

As demonstrated in this blog, failing to implement one of these principles can completely undermine protections implemented in the others. Although this application enforced rate limits, data limits, and returned generic error messages, by not enforcing authentication and by allowing fake accounts to easily be created, the other protections barely hindered the scraper.

Additional Protections

There are several additional Anti-Scraping techniques that are worth bringing to your attention before wrapping up.

- When locking down account creation, implementing CAPTCHAs is a critical step in the right direction.

- Requiring the user to provide a phone number can be a gigantic annoyance to scrapers. If there’s a limit on the number of accounts per phone number, and virtual phone numbers are blocked, then it’s much more difficult to create fake accounts at scale.

- If user data is being returned in HTML, then adding randomness to the responses is another great deterrent. By changing the number and type of HTML tags around the user data, without affecting the UI, it becomes significantly harder to parse the data.

- On the rate limiting front, make sure to apply rate limits to the user as opposed to the user’s session. If rate limits are applied to the session, then a scraper can just log out and log back in which grants them a new session and thus bypasses the rate limit.

- For logged-out rate limits, blocking certain IP ranges such as Tor IPs or proxy/VPN providers may make a lot of sense, especially if the majority of the traffic is inauthentic. Organizations that own ranges of IP addresses are assigned autonomous system numbers (ASNs). There are several publicly available ASN -> IP range tools/APIs that may be very useful in these cases.

- Lastly, user IDs should not be generated sequentially. A lot of scrapes first require a list of user IDs. If they are hard to guess and there isn’t an easy way to collect a large list of them then this can also significantly slow down a scraper.
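As one example from the list above, checking a client IP against a set of blocked ranges can be sketched with Python's standard `ipaddress` module. The ranges below are reserved documentation networks used purely as placeholders. In practice the list would come from an ASN -> IP range lookup, and `is_blocked` is a hypothetical helper name.

```python
import ipaddress

# Placeholder ranges only: these are reserved documentation networks, standing
# in for ranges obtained from an ASN -> IP range lookup.
BLOCKED_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_blocked(ip_string, blocked_ranges=BLOCKED_RANGES):
    """Return True if the address falls inside any blocked network."""
    ip = ipaddress.ip_address(ip_string)
    return any(ip in network for network in blocked_ranges)
```

A linear scan is fine for a handful of ranges. Lists with many thousands of entries would probably call for a prefix tree or a lookup at the network edge instead.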

Conclusion

Hopefully some of the Anti-Scraping tactics and techniques shared in this series were useful to you. Although completely preventing scraping probably isn’t possible, implementing a defense in depth approach which follows our five core principles of Anti-Scraping will go a long way in deterring scrapers and wasting their time.

Anti-Scraping Part 1: Core Principles to Deter Scraping

Published 2023-06-29

Introduction and Motivations for Scraping

Scraping usually refers to the practice of utilizing automation to extract data from web applications. There are a multitude of motivations for scraping which range from hackers trying to amass a large amount of phone numbers and email addresses which they can sell, to law enforcement extracting pictures and user data from social media sites to assist in solving missing persons cases. Although there is a wide range of both well-intentioned and malicious reasons to scrape web applications, allowing vast amounts of user data to be extracted by third parties can result in regulatory fines, reputational damage, and harm to the individuals who had their data scraped.

Anti-Scraping refers to the set of tactics, techniques, and procedures intended to make scraping as difficult as possible. Although completely preventing scraping is probably not possible, requiring scrapers to spend an inordinate amount of time trying to bypass an application’s protections will most likely deter the average scraper.

Anti-Scraping Core Principles

In our view there are 5 core principles in Anti-Scraping:

- Require Authentication

- Enforce Rate Limits

- Lock Down Account Creation

- Enforce Data Limits

- Return Generic Error Messages

These principles may look simple in theory, but in practice they become increasingly difficult to apply at scale. Protecting an application on a single server with hundreds of users and a dozen endpoints is an approachable problem. However, dealing with multiple applications spread across global data centers with millions of concurrent users is an entirely different beast. Additionally, any of these principles taken too far can result in a hindrance to the user experience.

For example, enforcing rate limits sounds great on paper but exactly how many requests should the user be able to send per minute? If it’s too strict then it can result in a normal user being unable to use the application, which may be entirely unacceptable from a business perspective. Hopefully further diving into these topics can help illuminate both what solutions organizations can try to implement and what hurdles to expect along the way.

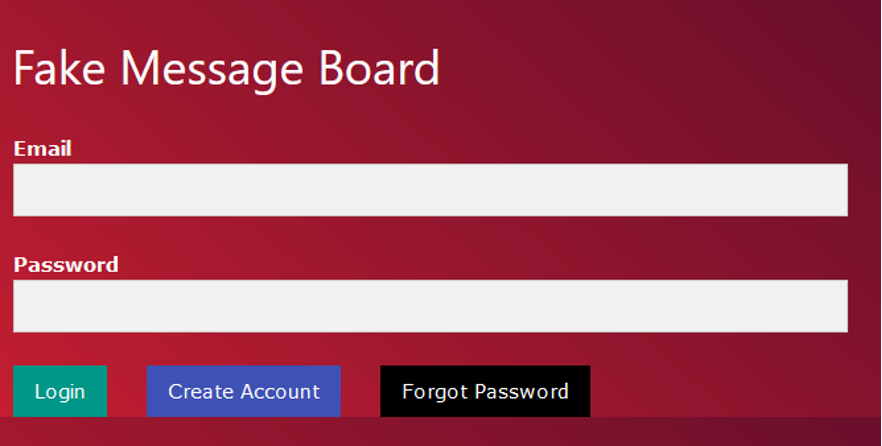

Fake Message Board

In this series of blogs, a fake message board site we developed will be utilized to demonstrate the back and forth between defenders hardening their application, and scrapers bypassing those protections. All the users and data shown in the application were randomly generated. The application has typical login, account creation, and forgotten password workflows.

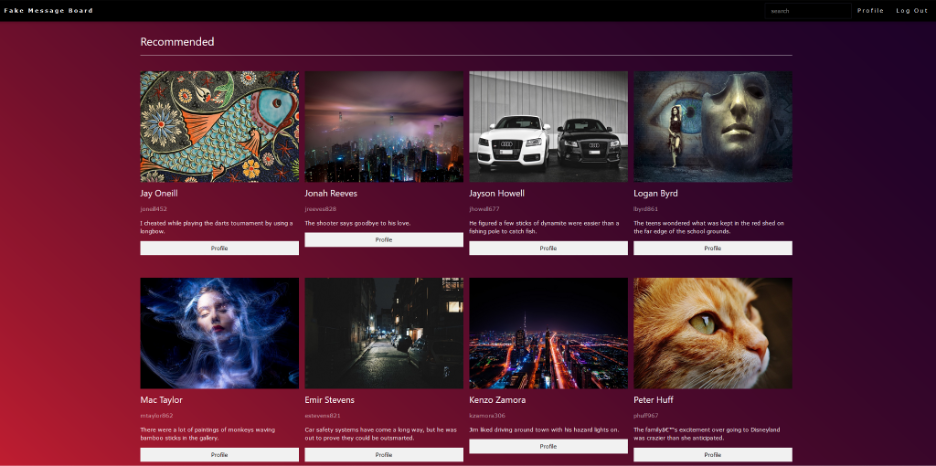

Once signed into the application the user is shown 8 recommended posts. There is a search bar in the top right which can be used to find specific users.

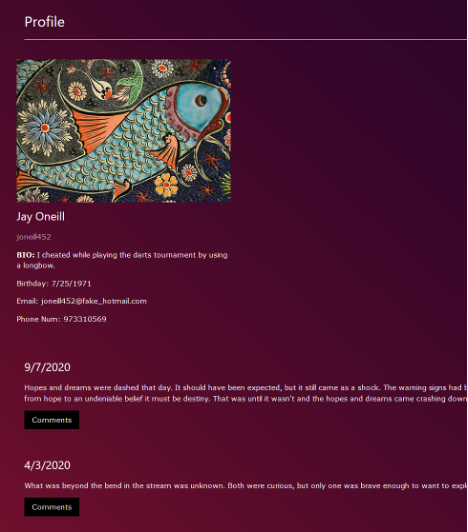

Additionally, each user has a profile page which returns information about the user (name, username, bio, birthday, email, phone number) and their posts (date, content, comments).

No attempts were made to restrict scraping. Let’s see how complicated it is to scrape all 500,000 fake users on the platform.

The Scraper’s Perspective

One of a scraper’s first steps will be to proxy the traffic for the web application by using a tool like Burp Suite. The goal is to find endpoints that return or leak user data. In this case there are a few different areas of the application to look at:

- Recommended Posts Functionality

- Search Bar

- User Profile Pages

- Account Creation

- Forgot Password

Recommended Posts Functionality

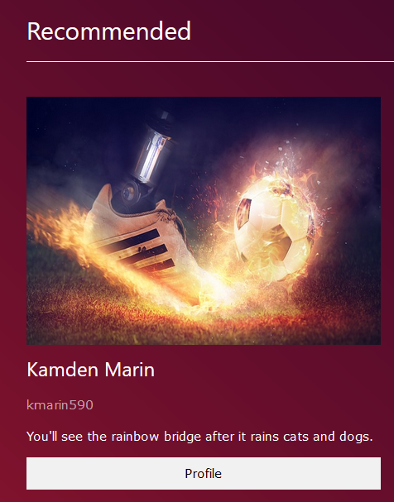

The recommended posts functionality returns 8 different posts every time the home page is refreshed. As shown below, the user data is embedded in the HTML.

HTTP Request:

GET /home HTTP/1.1

Host: 127.0.0.1:12345

Cookie: session_cookie=60[TRUNCATED]

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

<div class="w3-row w3-row-padding"><div class="w3-col l3 m6 w3-margin-bottom" style="padding-bottom:30px">

<img src="/images/fake_profile_pictures/158.jpg" alt="Profile Picture" style="width:100%;height:300px">

<h3>Kamden Marin</h3>

<p class="w3-opacity">kmarin590</p>

<p>You'll see the rainbow bridge after it rains cats and dogs.</p>

<p><a class="w3-button w3-light-grey w3-block" href="/81165/profile/">Profile</a></p>

</div>

[TRUNCATED]

As a scraper trying to figure out which endpoint to target, here are a few key questions we may ask ourselves:

- Is authentication required: Yes

- How much data is returned: 8 users/response

- What data is returned: User ID, name, username, bio

- Is the data easy to parse: Pretty easy, it’s just in the HTML
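To illustrate why embedded HTML counts as "pretty easy" to parse, the sketch below extracts the user fields from the sample response above using only Python's standard `html.parser`. The class and function names are made up for the example, and the parsing rules assume that the markup always follows the structure of the single card shown in the response.

```python
from html.parser import HTMLParser

SAMPLE_HTML = """<div class="w3-row w3-row-padding"><div class="w3-col l3 m6 w3-margin-bottom" style="padding-bottom:30px">
<img src="/images/fake_profile_pictures/158.jpg" alt="Profile Picture" style="width:100%;height:300px">
<h3>Kamden Marin</h3>
<p class="w3-opacity">kmarin590</p>
<p>You'll see the rainbow bridge after it rains cats and dogs.</p>
<p><a class="w3-button w3-light-grey w3-block" href="/81165/profile/">Profile</a></p>
</div>"""


class RecommendedPostParser(HTMLParser):
    """Collects name, username, bio and user ID from each recommended-post card."""

    def __init__(self):
        super().__init__()
        self.users = []
        self._current = None  # the card currently being read
        self._field = None    # which field the next text node belongs to

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h3":
            self._current = {}
            self._field = "name"
        elif tag == "p" and self._current is not None:
            if attrs.get("class") == "w3-opacity":
                self._field = "username"
            elif "username" in self._current and "bio" not in self._current:
                self._field = "bio"
        elif tag == "a" and self._current is not None:
            href = attrs.get("href") or ""
            if href.endswith("/profile/"):
                # "/81165/profile/" -> "81165"
                self._current["user_id"] = href.strip("/").split("/")[0]
                self.users.append(self._current)
                self._current = None

    def handle_data(self, data):
        text = data.strip()
        if text and self._field and self._current is not None:
            self._current[self._field] = text
            self._field = None


def parse_recommended(html):
    """Return a list of user dicts found in the given HTML."""
    parser = RecommendedPostParser()
    parser.feed(html)
    parser.close()
    return parser.users
```

Because the parser leans on fixed tag names and class values, it is exactly the kind of code that breaks when the number and type of tags around the user data change.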

Search Bar

The search bar by default returns 8 users related to the provided search term. As shown below, there are multiple interesting aspects to the /search endpoint.

HTTP Request:

POST /search?limit=8 HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

{"input":"t"}

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{

"0": [

"Valentin",

"Foster",

"vfoster166",

"7/13/1988",

"vfoster166@fake_yahoo.com",

"893183164"

],

"4": [

"Zion",

"Fuentes",

"zfuentes739",

"6/28/1985",

"zfuentes739@fake_gmail.com",

"905030314"

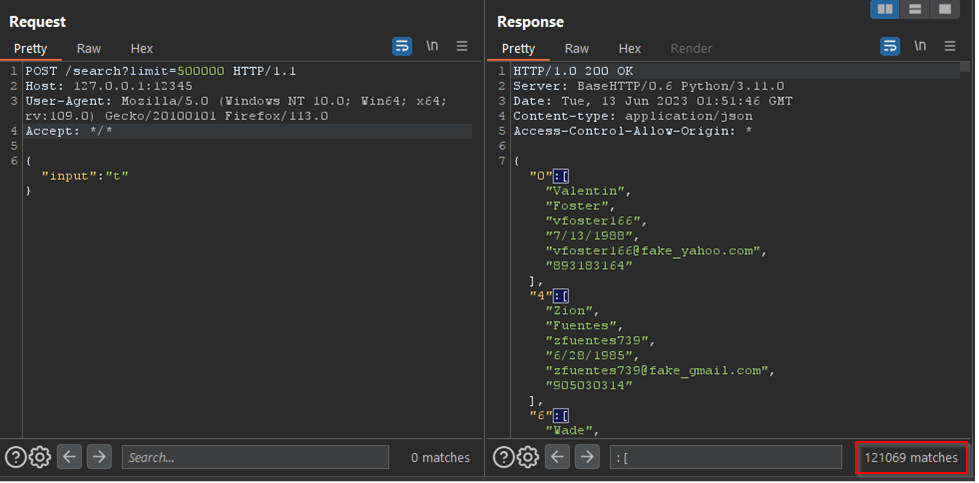

],[TRUNCATED]One of the first features to check is whether authentication is required. In this case the server still returns data even after removing the user’s session cookie. Additionally, any time there is a parameter which tells the server how much data to return that is potentially a great target for a scraper.

What happens if we increase the limit parameter from 8 to some higher value? Is there a maximum limit? As shown in the screenshot below, changing the limit parameter to 500,000 and searching for the value “t” results in 121,069 users being returned in a single response.

- Is authentication required: No

- How much data is returned: No max limit

- What data is returned: User ID, name, username, birthday, email, phone number

- Is the data easy to parse: Very easy, it’s just JSON

User Profile Pages

Visiting a user’s profile page returns information about the user, posts they have made, and comments on those posts.

HTTP Request:

GET /419101/profile/ HTTP/1.1

Host: 127.0.0.1:12345

Cookie: session_cookie=60[TRUNCATED]

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

<div class="w3-margin-bottom" style="padding-bottom:30px;width:25%">

<img src="/images/fake_profile_pictures/153.jpg" alt="Profile Picture" style="width:100%;height:300px">

<h3>Ashton Weeks</h3>

<p class="w3-opacity">aweeks950</p>

<p><b>BIO: </b>People keep telling me "orange" but I still prefer "pink".</p>

<p>Birthday: 3/25/1980</p>

<p>Email: aweeks950@fake_outlook.com</p>

<p>Phone Num: 381801397</p>

[TRUNCATED]

Since the user IDs are sequentially generated we can just start at user ID “1” and increment up until 500,000.

- Is authentication required: Yes

- How much data is returned: 1 targeted user and 3 commenters per post

- What data is returned: User ID, name, username, bio, birthday, email, phone num

- Is the data easy to parse: Pretty easy, it’s just in the HTML

Account Creation

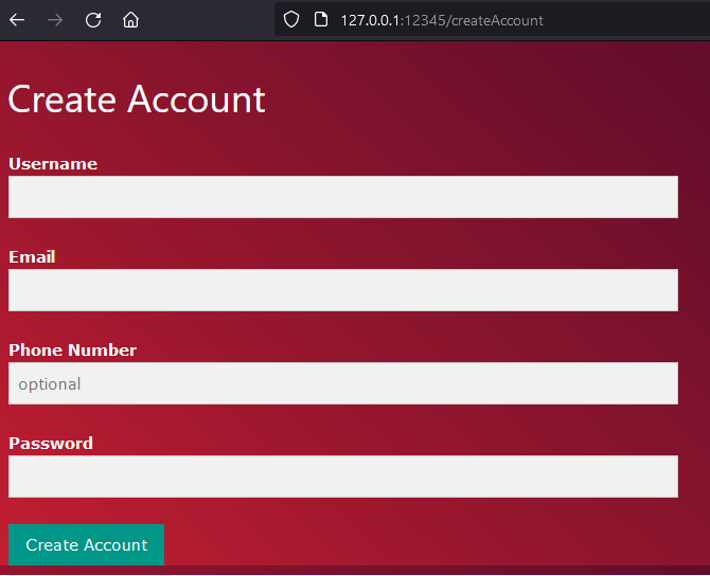

The account creation functionality requires a username, email, password, and optionally a phone number.

HTTP Request:

POST /createAccount HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=test&email=test@test.com&phone=&password=[REDACTED]

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{"response":"success"}

The server responds with a “success” message when given a new username/email/phone. What happens if a username/email/phone number is provided that is already in use by a user?

HTTP Request:

POST /createAccount HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=&email=&phone=893183164&password=[REDACTED]

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{"response":"phone number taken"}

In this case an account is already using the phone number “893183164” and the server leaks that information. Although this endpoint doesn’t return user data, it still leaks information. This can be utilized by a scraper to, for example, brute force all possible phone numbers and collect a list of all numbers used by users on the platform.

Additionally, since there appear to be no protections on the account creation workflow, we can create a ton of fake accounts which can then be used for future scrapes.

Forgot Password

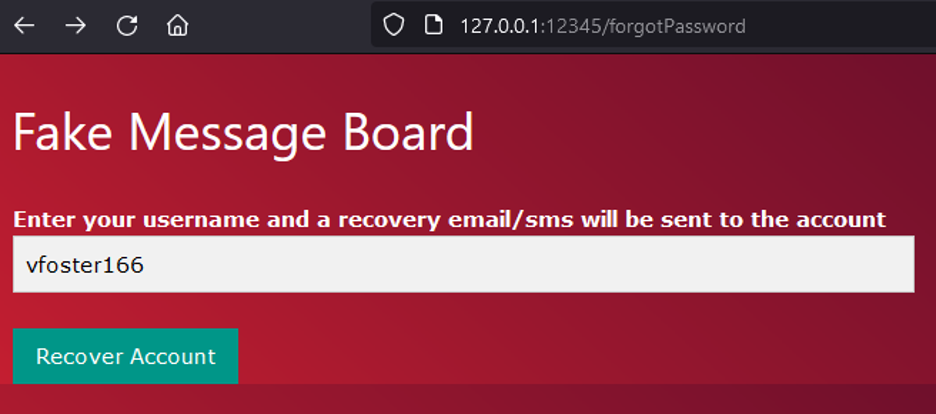

The forgot password functionality requires a username and sends a recovery email/sms if the account exists.

HTTP Request Valid User:

POST /forgotPassword HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=vfoster166

HTTP Response Valid User:

HTTP/1.0 200 OK

[TRUNCATED]

{"success":"email sent to vfoster166@fake_yahoo.com"}

Observe that if a valid username is provided then the server returns the email address of the account. This can be utilized by scrapers to collect email addresses by either brute forcing usernames or collecting usernames from elsewhere in the application. For reference, if an invalid username is provided then the server returns an error message as shown below.

HTTP Request Invalid User:

POST /forgotPassword HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=doesnotexist

HTTP Response Invalid User:

HTTP/1.0 200 OK

[TRUNCATED]

{"error":"Invalid Username"}

Conclusion

Let’s review how the Fake Message Board application is performing with respect to our 5 core anti-scraping principles.

- Require Authentication

- The /search endpoint is accessible logged-out

- Enforce Rate Limits

- Rate limiting is nonexistent throughout the application

- Lock Down Account Creation

- There are no protections in place

- Enforce Data Limits

- The /search endpoint accepts a “limit” parameter with no maximum enforced value

- Return Generic Error Messages

- The /createAccount and /forgotPassword endpoints both leak information through error messages

With no protections in place, scraping all 500,000 fake users of the application can be trivially done in minutes with only a few requests. In part two we’ll begin implementing anti-scraping protections into the application and examine how scrapers can adapt to those changes.

[post_title] => Anti-Scraping Part 1: Core Principles to Deter Scraping [post_excerpt] => Part one of our anti-scraping series covers five core anti-scraping principles and how they stack up against a fake message board. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => anti-scraping-part-1 [to_ping] => [pinged] => [post_modified] => 2023-08-08 14:42:09 [post_modified_gmt] => 2023-08-08 19:42:09 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=30458 [menu_order] => 92 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) ) [post_count] => 2 [current_post] => -1 [before_loop] => 1 [in_the_loop] => [post] => WP_Post Object ( [ID] => 30563 [post_author] => 154 [post_date] => 2023-07-13 09:00:00 [post_date_gmt] => 2023-07-13 14:00:00 [post_content] =>Continuing our series on Anti-Scraping techniques, this blog covers implementation of Anti-Scraping protections in a fake message board and examination of how scrapers can adapt to these changes.

While completely preventing scraping is likely impossible, implementing a defense in depth strategy can significantly increase the time and effort required to scrape an application. Give the first blog in this series a read, Anti-Scraping Part 1: Core Principles to Deter Scraping, and then continue on with how implementing these core principles affects scrapers' ability to exfiltrate data.

Hardening the Application

Multiple changes have been made to the Fake Message Board site to try and prevent scraping. The first major change is that rate limiting has been enforced throughout the application. Previously, the search bar had a “limit” parameter with no maximum enforced value which allowed all users of the application to be scraped in only a few requests. Now the “limit” parameter has a maximum enforced value of 100. All error messages have been converted to generic messages that do not leak information.

Not all the recommended changes have been applied to the application. Account creation has still not been locked down. Additionally, the /search endpoint still does not require authentication. As illustrated in “The Scraper’s Response” section, these gaps undermine most of the “fixes” made to the application.

Implementing Rate Limiting

There are many design decisions that need to be made when implementing rate limits. Here are some of the considerations:

- Should rate limits be specific to endpoints or constant across the application?

- How many requests should be allowed per unit of time? (ex: 100 requests/second)

- What are the consequences of reaching a rate limit?

Additionally, there is a distinction between logged-in and logged-out rate limits. Logged-in rate limits are applied to user sessions. When scrapers create fake accounts and use them for scraping, logged-in rate limits can suspend or block those accounts. If creating fake accounts is difficult, then this can significantly hinder a scraper’s progress. Logged-out rate limits are applied to endpoints where having an account isn’t required. From a defensive perspective, there are limited signals that can be used when a scraper is logged-out. The most common signal is an IP address. There are additional signals such as user-agent, cookies, additional HTTP headers, and even TCP fields. In the next sections we’ll review how the Fake Message Board application implemented both logged-in and logged-out rate limits.

Logged-in Rate Limits

In this case the logged-in rate limits are applied across the application. This probably isn’t the best decision for most organizations, but it is the easiest to implement. Each user is allowed to send 1000 requests/minute, 5000 requests/hour, and 10,000 requests/day. If a user violates any of those rate limits, then they receive a strike. If a user receives 3 strikes, then they are blocked from the platform. Implementing an unforgiving strategy like this is probably too strict for most applications.

Determining the number of requests allowed per unit of time is a very difficult problem. The last thing we want is to impact legitimate users of the application. Analyzing traffic logs to see how many requests the average user is sending will be critical to this process. When viewing the users sending the most traffic, it may be difficult to distinguish authentic traffic from user’s misusing the platform via automation. This will more than likely require manual investigations and extensive testing in pre-production environments.

It is also worth noting that bugs in applications can result in requests being repeatedly sent on the user’s behalf without their knowledge. Punishing these users with rate limits would be a huge mistake. If a user is sending a large number of requests to the same endpoint and the data returned isn’t changing, then it seems more likely that this is a bug in the application as opposed to scraping behavior.
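One rough way to express that heuristic, assuming each logged request has already been reduced to an (endpoint, response hash) pair. The function name and the 90% threshold are hypothetical and would need tuning against real traffic.

```python
from collections import Counter


def looks_like_client_bug(request_log, threshold=0.9):
    """request_log: list of (endpoint, response_hash) tuples for one user.

    Returns True when almost all requests hit the same endpoint and got
    the same data back, which points to a retry loop rather than scraping.
    """
    if not request_log:
        return False
    _, count = Counter(request_log).most_common(1)[0]
    return count / len(request_log) >= threshold
```

A scraper walking through profile pages produces many distinct pairs, so it falls below the threshold, while a stuck client repeating one call stays above it.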

Logged-out Rate Limits

In this case the logged-out rate limits are also applied across the application. Each IP address is allowed to send 1000 requests/minute, 5000 requests/hour, and 10,000 requests/day. If an IP address violates any of those rate limits, then it receives a strike. If the IP receives 3 strikes, then it’s blocked from the platform. This isn’t a realistic solution for multiple reasons, but the primary one is that some IP addresses are shared by many users, such as public WiFi IPs or university IPs. In those cases, the IPs would be blocked unfairly.

One of the main weaknesses of logged-out rate limits is that almost every signal can be controlled by the scraper. For example, the scraper can change their user agent with every request. If that signal is used in isolation, then you’ll just see a small number of requests from a bunch of different user agents. Similarly, a scraper can rotate their IP address. This is a devastating technique from a defensive perspective because our most reliable signal is the IP address. There are multiple open source libraries that make rotating IP addresses trivial. Implementing effective logged-out rate limits will probably require combining several of the signals mentioned above in sophisticated ways.
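As one small illustration of combining signals, the sketch below pairs the IP address with the user agent: a single IP that presents many different user agents within one time window looks more like a rotating scraper than a browser. The function name and the cutoff of 5 are assumptions, and shared IPs (public WiFi, universities) would need a higher cutoff or extra signals.

```python
from collections import defaultdict


def suspicious_ips(requests_seen, max_user_agents=5):
    """requests_seen: iterable of (ip, user_agent) pairs from one time window.

    Returns the set of IPs that used more than max_user_agents distinct
    user agents in that window.
    """
    agents_by_ip = defaultdict(set)
    for ip, user_agent in requests_seen:
        agents_by_ip[ip].add(user_agent)
    return {ip for ip, agents in agents_by_ip.items() if len(agents) > max_user_agents}
```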

Enforcing Data Limits

Ensuring that there’s a maximum limit to the amount of data that can be returned per response is a crucial piece in the fight against scraping. Previously, the search bar had a “limit” parameter which could return hundreds of thousands of users in one response. Now only up to 100 users can be returned per response. If more than 100 users are requested, then an error message is returned as shown below.
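Before the captured request and response, here is a minimal sketch of what such a server-side check might look like. The function name, the return shape, and the default used when no limit is supplied are assumptions; only the cap of 100 and the generic 400 error body come from the application's observed behavior.

```python
MAX_USERS_PER_RESPONSE = 100
GENERIC_ERROR = (400, {"error": "error"})


def parse_limit(raw_limit, default=MAX_USERS_PER_RESPONSE):
    """Validate the "limit" query parameter.

    Returns (limit, None) when the value is acceptable, or
    (None, (status, body)) when the request should be rejected.
    """
    if raw_limit is None:
        return default, None  # assumption: a missing limit falls back to the cap
    try:
        limit = int(raw_limit)
    except ValueError:
        return None, GENERIC_ERROR
    if limit < 1 or limit > MAX_USERS_PER_RESPONSE:
        return None, GENERIC_ERROR
    return limit, None
```

Rejecting an oversized limit outright, rather than silently capping it, matches the error response the application returns.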

HTTP Request:

POST /search?limit=101 HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

{"input":"t"}

HTTP Response:

HTTP/1.0 400 Bad Request

[TRUNCATED]

{"error":"error"}

Return Generic Error Messages

Previously the forgot password and create account workflows leaked information useful to scrapers via error messages. The forgot password workflow used to reveal whether the provided username matched an existing account and would return the email address if it did. Now it always returns the same message, “If the account exists an email has been sent.”
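The idea can be sketched as a handler that does its work conditionally but always answers the same way. The function signature, the accounts mapping, and the send_reset_email callback are hypothetical stand-ins for whatever the real application uses.

```python
GENERIC_MESSAGE = {"message": "if the account exists an email has been sent"}


def forgot_password(username, accounts, send_reset_email):
    """Send a reset email when the account exists, but never say so.

    accounts: mapping of username -> {"email": ...} (hypothetical store)
    send_reset_email: callable taking the email address (hypothetical)
    """
    account = accounts.get(username)
    if account is not None:
        send_reset_email(account["email"])
    # Same status and body whether or not the username matched.
    return 200, GENERIC_MESSAGE
```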

HTTP Request:

POST /forgotPassword HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=doesnotexist

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{"message":"if the account exists an email has been sent"}

The create account workflow used to reveal whether a specific username, email address, or phone number was already taken. Now it always returns the message, “If the account was created an email has been sent.”

HTTP Request:

POST /createAccount HTTP/1.1

Host: 127.0.0.1:12345

[TRUNCATED]

username=vfoster166&email=test@test.com&phone=&password=[REDACTED]

HTTP Response:

HTTP/1.0 200 OK

[TRUNCATED]

{"response":"if the account was created an email has been sent"}

The Scraper’s Response

Since the application has been updated, let’s review each piece of functionality and figure out how we can bypass the protections:

- Recommended Posts Functionality: Requires authentication, and rate limits your account after 1,000 requests.

- Search Bar: Does not require authentication, returns 100 users/response, and rate limits your IP address after 1,000 requests.

- User Profile Pages: Requires authentication and rate limits your account after 1,000 requests.

- Account Creation: No longer useful for leaking data, but fake accounts can be made easily.

- Forgot Password: No longer useful for leaking data.

Overall, the application has had multiple improvements since the previous scrape in Part 1. The introduction of rate limits forces us to adapt our techniques. For logged-in scraping the tactic will be to rotate sessions. Since we’re able to easily make many fake accounts, we will send a small number of requests from each account and as a result never get rate limited. For logged-out scraping the tactic will be to rotate IP addresses. By only sending a few requests from each IP address we will hopefully never get rate limited.

Logged-In Scraping

The goal is to extract all 500,000 users using either the recommended posts functionality or user profile pages. Since user profile pages return more data about the user (ID, name, username, email, birthday, phone number, posts, etc.) let’s focus on that endpoint. Assuming we only get one user per response, each of our fake accounts can send 999 requests/minute without being rate limited. If we make 500 fake accounts, then each account only has to send about 1,000 requests (500,000 / 500) in order to scrape every user on the platform. This means that in only a couple of minutes every user can be scraped and no rate limiting will be encountered.

Pseudo Scraper Code:

import itertools
import requests

fake_accounts = [session1, session2, ..., session500]  # session cookies for all the fake accounts
sessions = itertools.cycle(fake_accounts)  # rotate through the sessions round-robin

for user_id in range(1, 500_001):  # user IDs start at 1 and are sequential
    # send each request with the next fake account's session cookies
    user_data = requests.get("http://127.0.0.1:12345/profile/" + str(user_id), cookies=next(sessions))

Logged-Out Scraping

Extracting all 500,000 users using the search functionality is not as easy as it was last time. Now only 100 users are returned per response. Since each IP address can send 999 requests/minute without being rate limited, each IP can scrape 99,900 users without being blocked. This means only a few IP addresses are required. Most IP rotation tools leverage tens of thousands of IPs so getting a few is not an issue.
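The arithmetic behind this estimate, and the earlier logged-in one, can be checked with a few lines. The helper name is made up for illustration, and the logged-out figure optimistically assumes every search response returns 100 users that have not been seen before.

```python
import math

TOTAL_USERS = 500_000
SAFE_REQUESTS = 999  # one request under the 1,000 requests/minute limit


def identities_needed(users_per_response, requests_each=SAFE_REQUESTS):
    """Accounts or IPs needed if each one sends at most requests_each requests."""
    return math.ceil(TOTAL_USERS / (users_per_response * requests_each))


print(identities_needed(users_per_response=100))  # logged-out search: 6 IPs
print(identities_needed(users_per_response=1))    # logged-in profiles: 501 accounts
```

With exactly 500 accounts, each one would send 1,000 requests, which is why spreading the scrape over slightly more than a minute keeps every account under the per-minute limit.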

Pseudo Scraper Code:

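while users_scraped < 500_000:
    # random_search_string and rotating_proxies are placeholders; the proxy service changes the source IP
    user_data = requests.post("http://127.0.0.1:12345/search?limit=100", json={"input": random_search_string}, proxies=rotating_proxies)
    users_scraped += count_new_users(user_data)  # placeholder: count users not seen before

Summary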

Even with multiple protections in place, scraping all 500,000 fake users of the application in a few minutes is still pretty easy. As a quick recap, the five core principles of Anti-Scraping in our view are:

- Require Authentication

- Enforce Rate Limits

- Lock Down Account Creation

- Enforce Data Limits

- Return Generic Error Messages

As demonstrated in this blog, failing to implement one of these principles can completely undermine protections implemented in the others. Although this application enforced rate limits, data limits, and returned generic error messages, by not enforcing authentication and by allowing fake accounts to easily be created, the other protections barely hindered the scraper.

Additional Protections

There are several additional Anti-Scraping techniques that are worth bringing to your attention before wrapping up.

- When locking down account creation, implementing CAPTCHAs is a critical step in the right direction.

- Requiring the user to provide a phone number can be a gigantic annoyance to scrapers. If there’s a limit on the number of accounts per phone number, and virtual phone numbers are blocked, then it’s much more difficult to create fake accounts at scale.

- If user data is being returned in HTML, then adding randomness to the responses is another great deterrent. By changing the number and type of HTML tags around the user data, without affecting the UI, it becomes significantly harder to parse the data.

- On the rate limiting front, make sure to apply rate limits to the user as opposed to the user’s session. If rate limits are applied to the session, then a scraper can just log out and log back in which grants them a new session and thus bypasses the rate limit.

- For logged-out rate limits, blocking certain IP ranges, such as Tor exit nodes or proxy/VPN providers, may make a lot of sense, especially if the majority of the traffic is inauthentic. Organizations that own ranges of IP addresses are assigned autonomous system numbers (ASNs), and there are several publicly available ASN-to-IP-range tools and APIs that may be very useful in these cases.

- Lastly, user IDs should not be generated sequentially. A lot of scrapes first require a list of user IDs. If they are hard to guess and there isn’t an easy way to collect a large list of them then this can also significantly slow down a scraper.
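For the last point, a minimal sketch of generating IDs that cannot be enumerated by counting upward, assuming random version 4 UUIDs are acceptable as identifiers. The function name is hypothetical.

```python
import uuid


def new_user_id():
    """Return a random, non-sequential user ID as a string."""
    return str(uuid.uuid4())


print(new_user_id())
```

Unlike the sequential counter used in the scraper above, knowing one of these IDs gives no hint about any other, so a scraper first has to find some other way to collect them.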

Conclusion

Hopefully some of the Anti-Scraping tactics and techniques shared in this series were useful to you. Although completely preventing scraping probably isn’t possible, implementing a defense-in-depth approach that follows our five core principles of Anti-Scraping will go a long way toward deterring scrapers and wasting their time.

[post_title] => Anti-Scraping Part 2: Implementing Protections [post_excerpt] => Implementing anti-scraping principles can deter scrapers by making it too difficult for them to bypass an application’s protections. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => anti-scraping-part-2 [to_ping] => [pinged] => [post_modified] => 2024-04-02 09:10:48 [post_modified_gmt] => 2024-04-02 14:10:48 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=30563 [menu_order] => 89 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [comment_count] => 0 [current_comment] => -1 [found_posts] => 2 [max_num_pages] => 0 [max_num_comment_pages] => 0 [is_single] => [is_preview] => [is_page] => [is_archive] => [is_date] => [is_year] => [is_month] => [is_day] => [is_time] => [is_author] => [is_category] => [is_tag] => [is_tax] => [is_search] => [is_feed] => [is_comment_feed] => [is_trackback] => [is_home] => 1 [is_privacy_policy] => [is_404] => [is_embed] => [is_paged] => [is_admin] => [is_attachment] => [is_singular] => [is_robots] => [is_favicon] => [is_posts_page] => [is_post_type_archive] => [query_vars_hash:WP_Query:private] => 9c926954509fc34af84b8d33b88cc713 [query_vars_changed:WP_Query:private] => [thumbnails_cached] => [allow_query_attachment_by_filename:protected] => [stopwords:WP_Query:private] => [compat_fields:WP_Query:private] => Array ( [0] => query_vars_hash [1] => query_vars_changed ) [compat_methods:WP_Query:private] => Array ( [0] => init_query_flags [1] => parse_tax_query ) )