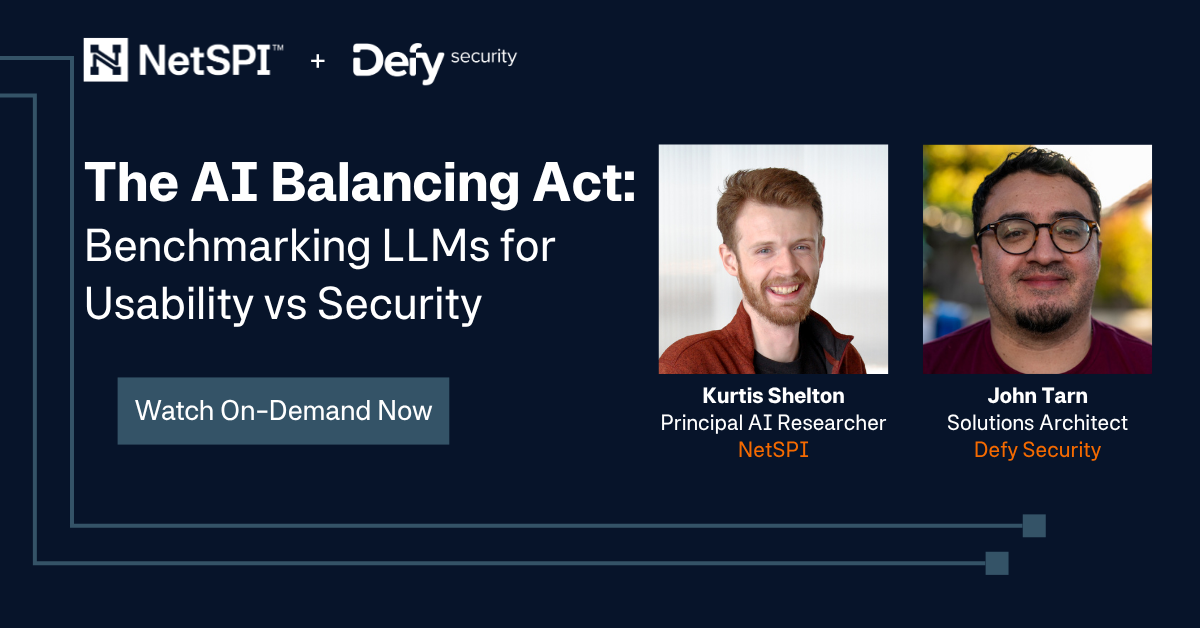

The AI Balancing Act: Benchmarking LLMs for Usability vs. Security

Security or usability? When it comes to large language models (LLMs), it's not always possible to have both. Watch on demand now to get our experts' tips on tackling this dilemma.