Steve Kerns

WP_Query Object

(

[posts] => Array

(

[0] => WP_Post Object

(

[ID] => 7654

[post_author] => 26

[post_date] => 2017-06-06 07:00:19

[post_date_gmt] => 2017-06-06 07:00:19

[post_content] => OWASP has just released the release candidate of its Top 10 most critical web application security risks. There are no major changes (Injection, for example, is still number one on the list), but two new risks were added:

- A7 – Insufficient Attack Protection

- A10 – Underprotected APIs

This blog discusses the first.

A7 – Insufficient Attack Protection

OWASP stated the reason for the addition as being:

For years, we’ve considered adding insufficient defenses against automated attacks. Based on the data call, we see that the majority of applications and APIs lack basic capabilities to detect, prevent, and respond to both manual and automated attacks. Application and API owners also need to be able to deploy patches quickly to protect against attacks.

An application must protect itself not just against invalid input; it must also detect and block attempts to exploit its security vulnerabilities. The application must try to detect and prevent these attempts, log them, and respond to them.

What are some examples of attacks that should be handled?

- Brute force attacks to guess user credentials

- Flooding users’ email accounts using email forms in the application

- Attempting to determine valid credit card numbers from stolen cards

- Denial of service by flooding the application with many requests

- XSS or SQL Injection attacks by automated tools

Prevention

The first step is to prevent these types of attacks. Consider building some preventive steps into the application. These include:

- Remove or limit the values of the data accessed using the application; can it be changed, masked, or removed?

- Create use (abuse) cases that simulate automated web attacks.

- Identify and restrict automated attacks by identifying automation techniques to determine if the requests are being made by a human or by an automated tool.

- Make sure the user is authenticated and authorized to use the application.

- Consider using CAPTCHA when high value functions are being performed.

- Set limits on how many transactions can be performed over a specified time; consider doing this by user or groups of users, devices, or IP address.

- Consider the use of web application firewalls that detect these types of attacks. Another alternative is using OWASP AppSensor or similar; it is built into the application to detect these types of attacks.

- Build conditions into your terms and conditions; require the user not to use automated tools when using the application.

- Network firewalls

- Load balancers

- Anti-DDoS systems

- Intrusion Detection System (IDS) and Intrusion Prevention System (IPS)

- Data Loss Prevention
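The "set limits on how many transactions can be performed over a specified time" item above can be sketched as a small sliding-window limiter. This is only an illustration, not something from the OWASP document: the class name, the key format (`"ip:1"`, a user ID, a device ID), and the limits are all assumptions you would replace with your own.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` actions per `window` seconds for each key.

    The key is whatever you choose to limit by (user, group, device,
    or IP address); the names here are illustrative only.
    """

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested
        self._events = defaultdict(deque)  # key -> timestamps of recent actions

    def allow(self, key):
        """Record an attempt for `key`; return True if it is within the limit."""
        now = self.clock()
        events = self._events[key]
        # Drop timestamps that have aged out of the window.
        while events and now - events[0] >= self.window:
            events.popleft()
        if len(events) >= self.limit:
            return False  # over the limit: the caller should block, warn, or log
        events.append(now)
        return True
```

A login handler could call `limiter.allow(client_ip)` before checking credentials and treat a `False` result as a suspicious event to log.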

Detection

An application must determine if activity is an attack or just suspicious. The response must be appropriate based on which of these is true.

- Could the user have made a mistake when entering the data?

- Is the process being followed for the application or is the user trying to jump past steps in the process?

- Does the user need a special tool or knowledge?

Response

The application can handle detected attacks, or even the suspicion of attacks, in a variety of ways. The first should be a warning to the user. This lets a normal user know that their activities are being monitored. It also warns a malicious user that certain events are being monitored, though it will probably not deter them. Based on further activity after the warning, the application could either log out or lock out the user. If a logout is performed, automated tools can be programmed to automatically re-authenticate the user. If lockout is chosen, all activity will stop. In any of these cases, a legitimate user may end up calling the help desk, so the application must log this type of activity and notify the application’s administrators. The log must be reviewed to determine whether the response was appropriate. Which action to perform depends on the sensitivity of the data within the application. A public website must be more forgiving to prevent overreaction to suspicious activities, whereas an application with highly sensitive data must respond quickly to suspicious activity.

Conclusion

The OWASP Top 10 2017 A7 – Insufficient Attack Protection requires the application to prevent, detect, and respond to attacks. This could affect other regulations such as PCI, which base their standards on the OWASP Top 10.

References

- OWASP Top 10 2017: https://github.com/OWASP/Top10/raw/master/2017/OWASP%20Top%2010%20-%202017%20RC1-English.pdf

- Insufficient Anti-automation: https://projects.webappsec.org/w/page/13246938/Insufficient%20Anti-automation

- OWASP Automated Threats to Web Applications: https://www.owasp.org/index.php/OWASP_Automated_Threats_to_Web_Applications

- OWASP AppSensor Project: https://www.owasp.org/index.php/OWASP_AppSensor_Project

- OWASP Automated Threat Handbook: https://www.owasp.org/images/3/33/Automated-threat-handbook.pdf

Licenses

There are many different open source licenses, some of them good (permissive) and some not so good. A "permissive" license is simply a non-copyleft open source license: one that guarantees the freedoms to use, modify, and redistribute, but that permits proprietary derivative works. As of last count, the Open Source Initiative (OSI) has 76 different licenses, some permissive and some not so permissive. There are also some that OSI does not recognize, such as the Beerware license. It says “As long as you retain this notice you can do whatever you want with this stuff. If we meet some day, and you think this stuff is worth it, you can buy me a beer in return. Poul-Henning Kamp”. This is considered a permissive license.

Copyleft is a copyright licensing scheme where an author surrenders some, but not all, rights under copyright law. Copyleft allows an author to impose some restrictions on those who want to engage in activities that would more usually be reserved by the copyright holder. "Weak copyleft" licenses are generally used for software libraries, to allow other software to link to the library and then be redistributed without the legal requirement for the work to be distributed under the library's copyleft license. Only changes to the weak-copylefted software itself become subject to the copyleft provisions of such a license, not changes to the software that links to it. This allows programs of any license to be compiled and linked against copylefted libraries such as glibc (the GNU project's implementation of the C standard library) and then redistributed without any re-licensing required.

Copyleft licenses (GPL, etc.) become an issue if the OSS source is actually pulled into your application. Developers can do this without anyone’s knowledge, and it could require you to release your source code, so that all of your intellectual property becomes open source under that license.

The licenses on the OSS you are using can place many or few restrictions on your software. Make sure you are aware of the license(s) that apply to each OSS component you are using, and have a lawyer review all of them. What if you do not comply with the license? I am not a lawyer, but I believe that if a company finds out you are using their software out of compliance with the license, you may end up with a lawsuit. In fact, the lawyer I was working with at a previous job was adamant that the company not use any copyleft software. He would not sign off on the software release unless it was free of copyleft software.

Security Vulnerabilities

As you are aware, all software has bugs, and from a security perspective the OSS you are using contains them as well. Over the last couple of weeks I was doing a web application penetration test and discovered that the software was using about 80 different open source libraries (JAR files). Among them were Apache Commons Collections (ACC) and Apache Standard Taglibs (AST). Each of these has security vulnerabilities that are considered high risk (CVSS score of 7.5 or above). For example, ACC is vulnerable to insecure deserialization of data, which may result in arbitrary code execution. If the application is using OSS that is out of date by many months or years, it may have undiscovered or unreported vulnerabilities. Older software tends to have security vulnerabilities that go undetected or unreported. Which vulnerabilities you allow in your software is up to your company policy, so you need to determine whether you will allow the release of software that is old or contains security vulnerabilities.

Solutions

You can do research on each OSS component you use. This means visiting the website for the OSS or opening up each JAR file and reviewing the license information. Make sure you track this because OSS can change licenses between releases. One version could be released under the aforementioned Beerware license and the next under a copyleft license. For security vulnerabilities, going to the vendor's website might give you some information, but also review the following:

- https://seclists.org

- https://www.cvedetails.com

- https://www.securityfocus.com

- https://www.osvdb.org/
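The "open up each JAR file and review the license information" step can be partly automated. The sketch below is a rough starting point, not a complete license scanner: it assumes a JAR may carry a `Bundle-License` header in its manifest (an OSGi convention that many, but not all, libraries follow) and that license text lives in files named like `LICENSE` or `NOTICE`. The function name is made up for this example.

```python
import zipfile


def jar_license_info(jar_path):
    """Return (manifest_license, license_file_names) for one JAR file.

    A JAR is a ZIP archive, so the standard zipfile module can read it.
    Manifest lines wrapped onto continuation lines are not rejoined here.
    """
    manifest_license = None
    license_files = []
    with zipfile.ZipFile(jar_path) as jar:
        names = jar.namelist()
        for name in names:
            base = name.rsplit("/", 1)[-1].upper()
            if base.startswith(("LICENSE", "LICENCE", "NOTICE", "COPYING")):
                license_files.append(name)
        if "META-INF/MANIFEST.MF" in names:
            manifest = jar.read("META-INF/MANIFEST.MF").decode("utf-8", "replace")
            for line in manifest.splitlines():
                # Bundle-License is optional; absence proves nothing.
                if line.lower().startswith("bundle-license:"):
                    manifest_license = line.split(":", 1)[1].strip()
    return manifest_license, license_files
```

Running this over every JAR in a release and saving the results per version gives you a record to compare when a library changes its license between releases. A lawyer still needs to review what it finds.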

- LLDB (https://lldb.llvm.org/)

- Debugserver (part of Xcode)

- Tcprelay.py (https://code.google.com/p/iphonetunnel-mac/source/browse/trunk/gui/tcprelay.py?r=5)

$ ./tcprelay.py -t 22:2222 1234:1234
Forwarding local port 2222 to remote port 22
Forwarding local port 1234 to remote port 1234
Incoming connection to 2222
Waiting for devices...
Connecting to device <MuxDevice: ID 17 ProdID 0x12a8 Serial '0ea150b00ba3deeacb42f399492b7990416a0c87' Location 0x14120000>
Connection established, relaying data
Incoming connection to 1234
Waiting for devices...
Connecting to device <MuxDevice: ID 17 ProdID 0x12a8 Serial '0ea150b00ba3deeacb42f399492b7990416a0c87' Location 0x14120000>
Connection established, relaying data

The command “tcprelay.py -t 22:2222 1234:1234” is redirecting two local ports to the device. The first one is used to SSH to the device over port 2222. The second one is the port the debugserver will be using. Then you will need to connect to the iOS device and start the debug server. (I am assuming you have already copied the software to the device. If not, you can use scp to copy the binary.)

$ ssh root@127.0.0.1 -p 2222
root@127.0.0.1's password:

Then, if the application is already running, verify its name using ‘ps aux | grep <appname>’ and connect to the application with debugserver (using the name of the application, not the PID):

root# ./debugserver *:1234 -a appname
debugserver-@(#)PROGRAM:debugserver PROJECT:debugserver-320.2.89 for arm64.
Attaching to process appname...
Listening to port 1234 for a connection from *...
Waiting for debugger instructions for process 0.

The command ‘./debugserver *:1234 -a appname’ is telling the software to start up on port 1234 and hook into the application named ‘appname’. It will take a little time, so be patient. On the Mac, start up LLDB and connect to the debugserver software running on the iOS device. Remember, we have relayed the device port 1234 that the debugserver is listening on to the local port 1234.

$ lldb
(lldb) process connect connect://127.0.0.1:1234
Process 2017 stopped
* thread #1: tid = 0x517f9, 0x380f54f0 libsystem_kernel.dylib mach_msg_trap + 20, queue = 'com.apple.main-thread', stop reason = signal SIGSTOP
frame #0: 0x380f54f0 libsystem_kernel.dylib mach_msg_trap + 20
libsystem_kernel.dylib mach_msg_trap:
-> 0x380f54f0 <+20>: pop {r4, r5, r6, r8}
0x380f54f4 <+24>: bx lr
libsystem_kernel.dylib mach_msg_overwrite_trap:
0x380f54f8 <+0>: mov r12, sp
0x380f54fc <+4>: push {r4, r5, r6, r8}

Now you can dump the information about the memory sections of the application.

(lldb) image dump sections appname
Sections for '/private/var/mobile/Containers/Bundle/Application/F3CFF345-71FC-47C4-B1FB-3DAC523C7627/appname.app/appname(0x0000000000047000)' (armv7):
SectID Type Load Address File Off. File Size Flags Section Name
---------- ---------------- --------------------------------------- ---------- ---------- ---------- ----------------------------
0x00000100 container [0x0000000000000000-0x0000000000004000)* 0x00000000 0x00000000 0x00000000 appname.__PAGEZERO
0x00000200 container [0x0000000000047000-0x00000000001af000) 0x00000000 0x00168000 0x00000000 appname.__TEXT
0x00000001 code [0x000000000004e6e8-0x000000000016d794) 0x000076e8 0x0011f0ac 0x80000400 appname.__TEXT.__text
0x00000002 code [0x000000000016d794-0x000000000016e5e0) 0x00126794 0x00000e4c 0x80000400 appname.__TEXT.__stub_helper
0x00000003 data-cstr [0x000000000016e5e0-0x0000000000189067) 0x001275e0 0x0001aa87 0x00000002 appname.__TEXT.__cstring
0x00000004 data-cstr [0x0000000000189067-0x00000000001a5017) 0x00142067 0x0001bfb0 0x00000002 appname.__TEXT.__objc_methname
0x00000005 data-cstr [0x00000000001a5017-0x00000000001a767a) 0x0015e017 0x00002663 0x00000002 appname.__TEXT.__objc_classname
0x00000006 data-cstr [0x00000000001a767a-0x00000000001abe0c) 0x0016067a 0x00004792 0x00000002 appname.__TEXT.__objc_methtype
0x00000007 regular [0x00000000001abe10-0x00000000001ac1b8) 0x00164e10 0x000003a8 0x00000000 appname.__TEXT.__const
0x00000008 regular [0x00000000001ac1b8-0x00000000001aeb20) 0x001651b8 0x00002968 0x00000000 appname.__TEXT.__gcc_except_tab
0x00000009 regular [0x00000000001aeb20-0x00000000001aeb46) 0x00167b20 0x00000026 0x00000000 appname.__TEXT.__ustring
0x0000000a code [0x00000000001aeb48-0x00000000001af000) 0x00167b48 0x000004b8 0x80000408 appname.__TEXT.__symbolstub1
0x00000300 container [0x00000000001af000-0x00000000001ef000) 0x00168000 0x00040000 0x00000000 appname.__DATA
0x0000000b data-ptrs [0x00000000001af000-0x00000000001af4b8) 0x00168000 0x000004b8 0x00000007 appname.__DATA.__lazy_symbol
0x0000000c data-ptrs [0x00000000001af4b8-0x00000000001af810) 0x001684b8 0x00000358 0x00000006 appname.__DATA.__nl_symbol_ptr
0x0000000d regular [0x00000000001af810-0x00000000001b2918) 0x00168810 0x00003108 0x00000000 appname.__DATA.__const
0x0000000e objc-cfstrings [0x00000000001b2918-0x00000000001ba8d8) 0x0016b918 0x00007fc0 0x00000000 appname.__DATA.__cfstring
0x0000000f data-ptrs [0x00000000001ba8d8-0x00000000001baf1c) 0x001738d8 0x00000644 0x10000000 appname.__DATA.__objc_classlist
0x00000010 regular [0x00000000001baf1c-0x00000000001baf4c) 0x00173f1c 0x00000030 0x10000000 appname.__DATA.__objc_nlclslist
0x00000011 regular [0x00000000001baf4c-0x00000000001bafa0) 0x00173f4c 0x00000054 0x10000000 appname.__DATA.__objc_catlist
0x00000012 regular [0x00000000001bafa0-0x00000000001bafa4) 0x00173fa0 0x00000004 0x10000000 appname.__DATA.__objc_nlcatlist
0x00000013 regular [0x00000000001bafa4-0x00000000001bb078) 0x00173fa4 0x000000d4 0x00000000 appname.__DATA.__objc_protolist
0x00000014 regular [0x00000000001bb078-0x00000000001bb080) 0x00174078 0x00000008 0x00000000 appname.__DATA.__objc_imageinfo
0x00000015 data-ptrs [0x00000000001bb080-0x00000000001e0d40) 0x00174080 0x00025cc0 0x00000000 appname.__DATA.__objc_const
0x00000016 data-cstr-ptr [0x00000000001e0d40-0x00000000001e4420) 0x00199d40 0x000036e0 0x10000005 appname.__DATA.__objc_selrefs
0x00000017 regular [0x00000000001e4420-0x00000000001e442c) 0x0019d420 0x0000000c 0x00000000 appname.__DATA.__objc_protorefs
0x00000018 data-ptrs [0x00000000001e442c-0x00000000001e4ab8) 0x0019d42c 0x0000068c 0x10000000 appname.__DATA.__objc_classrefs
0x00000019 data-ptrs [0x00000000001e4ab8-0x00000000001e4e48) 0x0019dab8 0x00000390 0x10000000 appname.__DATA.__objc_superrefs
0x0000001a regular [0x00000000001e4e48-0x00000000001e6184) 0x0019de48 0x0000133c 0x00000000 appname.__DATA.__objc_ivar
0x0000001b data-ptrs [0x00000000001e6184-0x00000000001ea02c) 0x0019f184 0x00003ea8 0x00000000 appname.__DATA.__objc_data
0x0000001c data [0x00000000001ea030-0x00000000001ed978) 0x001a3030 0x00003948 0x00000000 appname.__DATA.__data
0x0000001d zero-fill [0x00000000001ed980-0x00000000001edce0) 0x00000000 0x00000000 0x00000001 appname.__DATA.__bss
0x0000001e zero-fill [0x00000000001edce0-0x00000000001edce8) 0x00000000 0x00000000 0x00000001 appname.__DATA.__common
0x00000400 container [0x00000000001ef000-0x0000000000207000) 0x001a8000 0x00015bf0 0x00000000 appname.__LINKEDIT

The next step is to convert that output into LLDB commands to actually dump the data in those memory sections. You can probably skip the sections named zero-fill or code. For example, take the following output:

0x00000003 data-cstr [0x000000000016e5e0-0x0000000000189067) 0x001275e0 0x0001aa87 0x00000002 appname.__TEXT.__cstring

Into the LLDB command:

memory read --outfile ~/0x00000003data-cstr 0x000000000016e5e0 0x0000000000189067 --force

This command is telling LLDB to dump the memory from address 0x000000000016e5e0 to 0x0000000000189067 and put it into the file 0x00000003data-cstr.
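Converting every row by hand gets tedious, so here is a minimal sketch of a script that does the conversion described above. It assumes the `image dump sections` output has been saved as text with one section per line; the function name and the `out_dir` parameter are made up for this example. By default it skips the zero-fill and code rows. The container rows overlap the sections they contain, so you may want to add "container" to the skip list as well.

```python
import re

# Matches the start of one row of `image dump sections` output, e.g.
# 0x00000003 data-cstr [0x000000000016e5e0-0x0000000000189067) ...
ROW = re.compile(
    r"^(0x[0-9a-fA-F]+)\s+(\S+)\s+\[(0x[0-9a-fA-F]+)-(0x[0-9a-fA-F]+)\)"
)


def memory_read_commands(dump_text, skip_types=("zero-fill", "code"), out_dir="~"):
    """Turn section rows into LLDB `memory read` commands, one per section."""
    commands = []
    for line in dump_text.splitlines():
        match = ROW.match(line.strip())
        if not match:
            continue  # header, separator, or prose line
        sect_id, sect_type, start, end = match.groups()
        if sect_type in skip_types:
            continue
        # The output file is named after the section ID and type.
        outfile = f"{out_dir}/{sect_id}{sect_type}"
        commands.append(f"memory read --outfile {outfile} {start} {end} --force")
    return commands
```

Printing the returned list gives you the commands to paste into the LLDB session one at a time.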

(lldb) memory read --outfile ~/0x00000003data-cstr 0x000000000016e5e0 0x0000000000189067 --force

You will (or should) not see any output from this command other than the file being created. Once you have all of the files, search them using your favorite search tool or even a text editor. Search for sensitive data (e.g., credit card numbers, passwords, etc.). The files will contain information similar to the following:

0x0016e5e0: 3f 3d 26 2b 00 3a 2f 3d 2c 21 24 26 27 28 29 2a ?=&+.:/=,!$&'()*
0x0016e5f0: 2b 3b 5b 5d 40 23 3f 00 00 62 72 61 6e 64 4c 6f +;[]@#?..brandLo
0x0016e600: 67 6f 2e 70 6e 67 00 54 72 61 64 65 47 6f 74 68 go.png.TradeGoth
0x0016e610: 69 63 4c 54 2d 42 6f 6c 64 43 6f 6e 64 54 77 65 icLT-BoldCondTwe
0x0016e620: 6e 74 79 00 4c 6f 61 64 69 6e 67 2e 2e 2e 00 4c nty.Loading....L
0x0016e630: 6f 61 64 69 6e 67 00 76 31 32 40 3f 30 40 22 4e oading.v12@?0@"N
0x0016e640: 53 44 61 74 61 22 34 40 22 45 70 73 45 72 72 6f SData"4@"EpsErro
0x0016e650: 72 22 38 00 6c 6f 61 64 69 6e 67 50 61 67 65 54 r"8.loadingPageT
0x0016e660: 79 70 65 00 54 69 2c 4e 2c 56 5f 6c 6f 61 64 69 ype.Ti,N,V_loadi
0x0016e670: 6e 67 50 61 67 65 54 79 70 65 00 6f 76 65 72 76 ngPageType.overv
0x0016e680: 69 65 77 52 65 71 52 65 73 48 61 6e 64 6c 65 72 iewReqResHandler
0x0016e690: 00 54 40 22 45 70 73 4f 76 65 72 76 69 65 77 52 .T@"EpsOverviewR
0x0016e6a0: 65 71 52 65 73 48 61 6e 64 6c 65 72 22 2c 26 2c eqResHandler",&,
0x0016e6b0: 4e 2c 56 5f 6f 76 65 72 76 69 65 77 52 65 71 52 N,V_overviewReqR
0x0016e6c0: 65 73 48 61 6e 64 6c 65 72 00 41 50 49 43 61 6c esHandler.APICal

Have fun looking at the iOS application memory and use this process for only good intentions. As stated in the previously mentioned blog: This technique can be used to determine if the application is not removing sensitive information from memory once the instantiated classes are done with the data. All applications should de-allocate spaces in memory that deal with classes and methods that were used to handle sensitive information, otherwise you run the risk of the information sitting available in memory for an attacker to see.

[post_title] => Dumping Memory on iOS 8 [post_excerpt] => Back in January of 2015 NetSPI published a blog on extracting memory from an iOS device. Even though NetSPI provided a script to make... 
[post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => dumping-memory-on-ios-8 [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:05:28 [post_modified_gmt] => 2021-04-13 00:05:28 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=5970 [menu_order] => 662 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [3] => WP_Post Object ( [ID] => 1123 [post_author] => 26 [post_date] => 2014-03-27 07:00:27 [post_date_gmt] => 2014-03-27 07:00:27 [post_content] =>

On March 25, 2014, Microsoft released the source code for Microsoft Word for Windows 1.1a. They said they released it "to help future generations of technologists better understand the roots of personal computing."

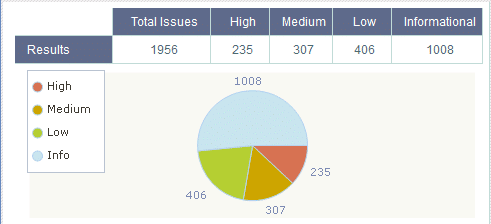

I thought it would be interesting to perform an automated code review on it using CheckMarx to see how it fared on security. The source consisted mainly of C++ code (376,545 lines of code) as well as code written in assembler. The assembler code was not scanned because CheckMarx, like other automated code scanners, does not support assembler. What came out of the tool was interesting.

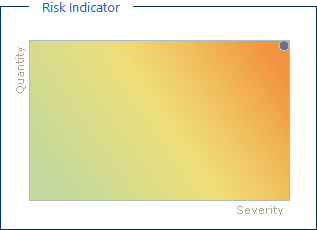

CheckMarx indicated that the risk in the code is:

The distribution of risk from Informational to High:

You have to remember that this code is from the 1980s. Many people did not have a concept of secure code and the development tools did not address security at all.

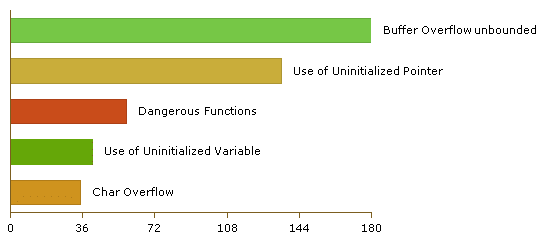

The top five vulnerabilities are as follows:

From the code that I looked at, most of the issues come from the use of unsafe functions. For example:

if (!strcmp(szClass, "BEGDATA"))
    strcpy(szNameSeg, "Data");
else
    strcpy(szNameSeg, szName);
nSegCur = nSeg;

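The function strcpy copies until it reaches the end of the source string, with no regard for the size of the destination. Bounded copies such as strncpy combat buffer overflows by requiring you to pass a maximum length (note that strncpy does not null-terminate the destination when the source is too long). The function strncpy was part of the C library by the time this code was written, but the code does not use it here. The code also contains 123 instances of the goto statement. For example: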

LError:
    cmdRet = cmdError;
    goto LRet;
}
pdod = PdodDoc(doc);

From the MSDN web site, Microsoft states, "It is good programming style to use the break, continue, and return statements instead of the goto statement whenever possible. However, because the break statement exits from only one level of a loop, you might have to use a goto statement to exit a deeply nested loop." The break, continue, and return statements were already part of the C language in the 1980s, so the use of goto here (for example, to jump to shared error-handling labels such as LError and LRet) was a style choice rather than a language limitation.

You can get a copy of the code for both MS Word and MS-DOS from here: https://www.computerhistory.org/press/ms-source-code.html. Just remember there are now better ways to write code.

Below is the complete list of issues found in the code:

| Vulnerability Type | Occurrences | Severity |
|---|---|---|
| Buffer Overflow unbounded | 180 | High |
| Buffer Overflow StrcpyStrcat | 22 | High |
| Format String Attack | 18 | High |
| Buffer Overflow OutOfBound | 12 | High |
| Buffer Overflow cpycat | 3 | High |
| Use of Uninitialized Pointer | 135 | Medium |
| Dangerous Functions | 58 | Medium |
| Use of Uninitialized Variable | 41 | Medium |
| Char Overflow | 35 | Medium |
| Stored Format String Attack | 19 | Medium |
| Stored Buffer Overflow cpycat | 11 | Medium |
| MemoryFree on StackVariable | 4 | Medium |
| Short Overflow | 2 | Medium |
| Integer Overflow | 1 | Medium |
| Memory Leak | 1 | Medium |
| NULL Pointer Dereference | 341 | Low |
| Potential Path Traversal | 24 | Low |
| Unchecked Array Index | 18 | Low |
| Unchecked Return Value | 11 | Low |
| Potential Off by One Error in Loops | 6 | Low |
| Use of Insufficiently Random Values | 3 | Low |
| Potential Precision Problem | 2 | Low |
| Size of Pointer Argument | 1 | Low |
| Methods Without ReturnType | 500 | Information |
| Unused Variable | 310 | Information |
| GOTO Statement | 132 | Information |
| Empty Methods | 9 | Information |
| Potential Off by One Error in Loops | 6 | Information |

This code is a good example of what not to do.

Programming languages and tools have evolved to make your application much more secure, but only if you teach your developers the concepts of secure coding.

[post_title] => The Way Back Machine - Microsoft Word for Windows 1.1a [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => the-way-back-machine-microsoft-word-for-windows-1-1a [to_ping] => [pinged] => [post_modified] => 2021-06-08 21:49:12 [post_modified_gmt] => 2021-06-08 21:49:12 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1123 [menu_order] => 720 [post_type] => post [post_mime_type] => [comment_count] => 3 [filter] => raw ) [4] => WP_Post Object ( [ID] => 1140 [post_author] => 26 [post_date] => 2013-11-14 07:00:44 [post_date_gmt] => 2013-11-14 07:00:44 [post_content] => The PCI Council has just released PA-DSS version 3.0. They have added new requirements, removed one, and changed a few. How this affects your application really depends on how you implemented security.

What's Been Added

Req. 3.4 Payment application must limit access to required functions/resources and enforce least privilege for built-in accounts:

- By default, all application/service accounts have access to only those functions/resources specifically needed for purpose of the application/service account.

- By default, all application/service accounts have minimum level of privilege assigned for each function/resource as needed for the application/service account.

Req. 5.1.5 – Payment application developers to verify integrity of source code during the development process

You need to make sure that any source control tool (e.g., Visual SourceSafe) is configured so that only the people who do development can make changes to the code. This does not preclude giving other users read access, but you need to minimize who has write access.

Req. 5.1.6 – Payment applications to be developed according to industry best practices for secure coding techniques.

You must develop the application with least privilege to ensure insecure assumptions are not introduced into the application. Use fail-safe defaults to prevent an attacker from obtaining sensitive information about an application failure that could then be used to create subsequent attacks. You must also ensure that security is applied to all accesses and inputs into the application to avoid an input channel being left open to compromise. This includes how sensitive data and the PAN are handled in memory; the PCI Council is trying to prevent capture of this data by screen scraping. Try to encrypt this data in memory and keep it there only for a short period of time.

Req. 5.2.10 - Broken authentication and session management

You need to make sure, in your web application, that:

- Any session cookies are marked as secure.

- The session ID is never passed in the URL, where it would be recorded in the web server logs.

- The web application must time out the session after a certain number of minutes. Once timed out, the user must re-authenticate to get access to the application.

- The session id must change when there is a change in permissions. For example, a session id is set when an anonymous user accesses the login page and after successful authentication, the session id must change to a different value.

- If a user logs out of the application, you need to delete the session on both the client and the server.
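To make the session checklist above concrete, here is a minimal sketch of a server-side session table. It is not a replacement for your web framework's session handling. The class name, the cookie name `session`, and the 15-minute default timeout are all assumptions chosen for illustration.

```python
import secrets
import time


class SessionStore:
    """In-memory session table illustrating the checklist above."""

    def __init__(self, timeout_seconds=900, clock=time.monotonic):
        self.timeout = timeout_seconds
        self.clock = clock  # injectable so the timeout can be tested
        self._sessions = {}  # session id -> {"user": ..., "last_seen": ...}

    def create(self, user=None):
        """Issue a new random session id (user=None means anonymous)."""
        sid = secrets.token_urlsafe(32)
        self._sessions[sid] = {"user": user, "last_seen": self.clock()}
        return sid

    def get(self, sid):
        """Return the session, or None if it is unknown or has timed out."""
        session = self._sessions.get(sid)
        if session is None:
            return None
        if self.clock() - session["last_seen"] > self.timeout:
            del self._sessions[sid]  # expired: the user must re-authenticate
            return None
        session["last_seen"] = self.clock()
        return session

    def login(self, old_sid, user):
        """Permissions changed: drop the old id and issue a different one."""
        self._sessions.pop(old_sid, None)
        return self.create(user)

    def logout(self, sid):
        """Delete the server-side session; also expire the client cookie."""
        self._sessions.pop(sid, None)


def session_cookie_header(sid):
    # Secure keeps the cookie off plain HTTP; the id travels in a cookie,
    # never in the URL.
    return f"Set-Cookie: session={sid}; Secure; HttpOnly; Path=/"


def expire_cookie_header():
    # Sent on logout so the client side of the session is deleted too.
    return "Set-Cookie: session=; Max-Age=0; Secure; HttpOnly; Path=/"
```

The point of `login` returning a new ID is that an ID handed out to an anonymous visitor on the login page can never be reused as an authenticated session.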

Req. 5.4 - Payment application vendors to incorporate versioning methodology for each payment application.

This is not a change in the way you write your code but a process change and, like so many other requirements, has to be documented both internally and in the Implementation Guide. Many companies already do this, but make sure you have a defined method of changing the version numbers.

- Details of how the elements of the version-numbering scheme are in accordance with requirements specified in the PA-DSS Program Guide.

- The format of the version-numbering scheme is specified and includes details of number of elements, separators, character set, etc. (e.g., 1.1.1.N, consisting of alphabetic, numeric, and/or alphanumeric characters).

- A definition of what each element represents in the version-numbering scheme (e.g., type of change, major, minor, or maintenance release, wildcard, etc.)

- Definition of elements that indicate use of wildcards (if used). For example, a version number of 1.1.x would cover specific versions 1.1.2 and 1.1.3, etc.

- If an internal version mapping to published versioning scheme is used, the versioning methodology must include mapping of internal versions to the external versions

- You must have a process in place to review application updates for conformity with the versioning methodology prior to release.
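The wildcard rule in the list above (a version number of 1.1.x covers 1.1.2 and 1.1.3) is easy to check mechanically as part of a release review. The helper below is a small sketch under the assumption that versions are dot-separated and that the wildcard element is the literal `x`. The PA-DSS Program Guide defines the real rules, and the function name is made up for this example.

```python
def version_matches(pattern, version, wildcard="x"):
    """Return True if `version` is covered by `pattern` (e.g. 1.1.x covers 1.1.2)."""
    pattern_parts = pattern.split(".")
    version_parts = version.split(".")
    # A different number of elements means a different scheme, so no match.
    if len(pattern_parts) != len(version_parts):
        return False
    return all(
        p == wildcard or p == v
        for p, v in zip(pattern_parts, version_parts)
    )
```

For example, `version_matches("1.1.x", "1.1.3")` is true, while `version_matches("1.1.x", "1.2.0")` is false because a non-wildcard element changed.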

Req. 5.5 - Risk assessment techniques (for example, application threat modeling) are used to identify potential application security design flaws and vulnerabilities during the software-development process. Risk assessment processes include the following:

- Coverage of all functions of the payment application, including but not limited to, security-impacting features and features that cross trust-boundaries.

- Assessment of application decision points, process flows, data flows, data storage, and trust boundaries.

- Identification of all areas within the payment application that interact with PAN and/or SAD or the cardholder data environment (CDE), as well as any process-oriented outcomes that could lead to the exposure of cardholder data.

- A list of potential threats and vulnerabilities resulting from cardholder data flow analyses and assign risk ratings (for example, high, medium, or low priority) to each.

- Implementation of appropriate corrections and countermeasures during the development process.

- Documentation of risk assessment results for management review and approval.

Req. 5.6 Software vendor must implement a process to document and authorize the final release of the application and any application updates. Documentation includes:

- Signature by an authorized party to formally approve release of the application or application update

- Confirmation that secure development processes were followed by the vendor.

Req. 7.3 - Include release notes for all application updates, including details and impact of the update, and how the version number was changed to reflect the application update.

Make sure you are publishing your release notes and that they include the customer impact.

Req. 10.2.2 - If vendors or integrators/resellers can access customers’ payment applications remotely, a unique authentication credential (such as a password/phrase) must be used for each customer environment.

This one is simple enough; your support people cannot use the same password to access different customers. Avoid the use of repeatable formulas to generate passwords that are easily guessed. These credentials become known over time and can be used by unauthorized individuals to compromise the vendor’s customers.

Req. 13.1.1 - Provides relevant information specific to the application for customers, resellers, and integrators to use.

The Implementation Guide must state the name and version of the software it is intended for. It must also include the application dependencies (e.g., SQL Server, PCCharge, etc.).

Req. 14.1 - Provide information security and PA-DSS training for vendor personnel with PA-DSS responsibility at least annually

These training materials are for your personnel involved in the development and support of your application. The materials must be about PA-DSS and information security.

Req. 14.2 - Assign roles and responsibilities to vendor personnel including the following:

- Overall accountability for meeting all the requirements in PA-DSS

- Keeping up-to-date with any changes in the PCI SSC PA-DSS Program Guide

- Ensuring secure coding practices are followed

- Ensuring integrators/resellers receive training and supporting materials

- Ensuring all vendor personnel with PA-DSS responsibilities, including developers, receive training

What's Been Removed

Req. 2.4 - If disk encryption is used (rather than file- or column-level database encryption), logical access must be managed independently of native operating system access control mechanisms (for example, by not using local user account databases). Decryption keys must not be tied to user accounts.

None of the PA vendors I worked with used disk encryption; they used file or table/column encryption instead. It makes sense to remove this requirement. In addition, disk encryption is only effective at preventing data loss due to physical theft, which is not a significant concern in the majority of datacenters.

What's Been Significantly Changed

Req. 3.3.2 - Use a strong, one-way cryptographic algorithm, based on approved standards to render all payment application passwords unreadable during storage. Each password must have a unique input variable that is concatenated with the password before the cryptographic algorithm is applied.
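As a rough illustration of what salted, one-way password storage can look like, here is a minimal Python sketch. It uses PBKDF2 from the standard library, which takes the salt as a separate input rather than literally concatenating it with the password; that is a substitution on my part, not something the requirement prescribes. The function names and the `ITERATIONS` value are assumptions, so check current guidance before choosing a work factor.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor, not taken from the requirement


def hash_password(password, salt=None):
    """Return (salt, digest); a fresh random salt is generated per password."""
    if salt is None:
        salt = os.urandom(16)  # unique per password; stored next to the digest
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

Because each password gets its own salt, two users with the same password end up with different stored values, and there is nothing to decrypt if the table is stolen.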

It appears that encrypting the password is no longer acceptable. In your application, you must use a strong, one-way cryptographic algorithm (hash) as well as a salt value. Review how your application stores passwords to make sure that you are using a hashing algorithm with a salt. The requirement does note that the salt does not have to be unpredictable or a secret.

Req. 4.2.5 - Use of, and changes to the application’s identification and authentication mechanisms (including but not limited to creation of new accounts, elevation of privileges, etc.), and all changes, additions, deletions to application accounts with root or administrative privileges.

Any changes to accounts in the application must be audited. [post_title] => PA-DSS 3.0 – What to Expect [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => pa-dss-3-0-what-to-expect [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:10 [post_modified_gmt] => 2021-04-13 00:06:10 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1140 [menu_order] => 735 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [5] => WP_Post Object ( [ID] => 1144 [post_author] => 26 [post_date] => 2013-10-03 07:00:38 [post_date_gmt] => 2013-10-03 07:00:38 [post_content] => I have been reading a few articles on outsourcing application development. Many of them have good information on what to look for and how to work with the companies doing the development. However, I have yet to see any of these articles talk about security and how to handle that in the outsourcing process. In any development process, either in-sourced or out-sourced, security needs to be considered. The developers need to be trained in secure coding techniques, and the architects have to have some training and experience on implementing security throughout the software development life cycle. In fact, these should never be excluded or glossed over. The development company you are considering needs to prove this and these requirements must be written into the contract. Have them show you the classes that these people have taken, and make sure they are up-to-date on the latest security vulnerabilities. You cannot have a developer take a course five years ago and consider their skills current. What about after the code is completed? Do your contracts specify a security review? Both an application penetration test and code review must be done before the release of the application. The contract must also specify that any security vulnerabilities must be mitigated. 
It must also specify that the test will repeat until all high and medium level vulnerabilities are mitigated. Also, have the security testing done by your own company or a third party. It does not matter where the developers are from; it could be India, China, or the United States, there are bad or malicious programmers out there and you must trust them but verify their work. [post_title] => Outsourcing application development – what is missing? [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => outsourcing-application-development-what-is-missing [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:10 [post_modified_gmt] => 2021-04-13 00:06:10 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1144 [menu_order] => 738 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [6] => WP_Post Object ( [ID] => 1165 [post_author] => 26 [post_date] => 2013-04-11 07:00:16 [post_date_gmt] => 2013-04-11 07:00:16 [post_content] => A question came up about a PCI audit that was performed for one of our customers. They just finished their PCI audit and passed. I am now working with them on a new software application and there is a vulnerability in their application that was ranked as a high. This was discovered on an application penetration test back in 2011 but was accepted by the company as a business risk; resulting in the vulnerability being marked closed because of this acceptance. The client wanted to include this same functionality within a new application, resulting in the new application containing the vulnerability. The QSA who performed their last PCI audit should not have passed them because this vulnerability is in violation of Requirement 6.5.6. 
The requirement states: Prevent common coding vulnerabilities in software development processes, to include all “High” vulnerabilities identified in the vulnerability identification process (as defined in PCI DSS Requirement 6.2). Please note, according to PCI Requirement 6.2, a CVSS score of 4 and above is considered to be a “High” risk vulnerability. Because of this vulnerability and because the company has not fixed it, they could be fined by their bank. Furthermore, this vulnerability could pose financial liability and reputation risk for the company. If customers find out about this vulnerability, they may question the company’s ability as a trusted vendor. So why did the previous QSA pass them? Without discussing this with the QSA, one can assume that since the issue was closed, it was fixed. You have to remember that when the auditor is performing the audit, they are presented with a lot of information. This is a lot like trying to drink from a fire hose. Things like this vulnerability could have been missed; it was one finding out of many or possibly the auditor assumed that since the finding was closed, that it had been remediated. Another reason may be the way an auditor interprets the PCI Requirements. This person may not have understood the requirement and made the wrong interpretation. In many cases, one auditor’s interpretation may be different from another auditor. It does not really matter now, why the company passed their audit, even though they did not fix the vulnerability. The issue now is that they need to fix it before moving forward. [post_title] => Why does one QSA pass me and another would not? 
[post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => why-does-one-qsa-pass-me-and-another-would-not [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:05:58 [post_modified_gmt] => 2021-04-13 00:05:58 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1165 [menu_order] => 762 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [7] => WP_Post Object ( [ID] => 1173 [post_author] => 26 [post_date] => 2013-02-26 07:00:41 [post_date_gmt] => 2013-02-26 07:00:41 [post_content] => We have worked with many companies that are following the letter of the law. The law being the PCI Council’s requirement (6.3.2) that all code must be reviewed prior to release. It states: 6.3.2 Review of custom code prior to release to production or customers in order to identify any potential coding vulnerability. Note: This requirement for code reviews applies to all custom code (both internal and public-facing), as part of the system development life cycle. Code reviews can be conducted by knowledgeable internal personnel or third parties. Web applications are also subject to additional controls, if they are public facing, to address ongoing threats and vulnerabilities after implementation, as defined at PCI DSS Requirement 6.6. NetSPI has reviewed and used a number of automated scanning tools. These tools include HP’s Fortify SCA, Ounce Labs (now part of IBM’s Appscan toolset), Veracode, and Checkmarx. These tools do a fine job for what they were built for, performing an automated scan of the source code. All of these tools meet the 6.3.2 requirements, but they simply are not enough. The tools are missing many of the problems that the manual review finds, such as authentication and authorization vulnerabilities, among others. In addition, many companies are providing software as a service (SAAS) solutions for code reviews. 
By using this service, a company meets the requirement. These services make it easy to do the code reviews; you upload the binaries and in a few days, you get a report with many findings. So now what do you do with this report? Many organizations throw the report back at the developers and say "fix it". The developers look at the overwhelming number of findings and start applying resources to fix them. What many companies have experienced is that these reports contain so many false positives that the developers just give up. Are you really meeting the requirement? Of course you are, but how many vulnerabilities are you missing? Based on what NetSPI has experienced, maybe half of them. [post_title] => Code Review – is automated testing enough? [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => code-review-is-automated-testing-enough [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:02 [post_modified_gmt] => 2021-04-13 00:06:02 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1173 [menu_order] => 769 [post_type] => post [post_mime_type] => [comment_count] => 1 [filter] => raw ) [8] => WP_Post Object ( [ID] => 1175 [post_author] => 26 [post_date] => 2013-01-23 07:00:41 [post_date_gmt] => 2013-01-23 07:00:41 [post_content] =>I was reading a few articles about how mobile devices, because of their popularity, are now the focus of malicious hackers. I thought this was interesting because many companies are developing applications for the mobile platforms and based on the information I have heard, they really do not have a formal process to test these applications for security. Back in March, NetSPI put on a webinar on how to test for security issues in a mobile application. NetSPI also gave this presentation at Secure360 and OWASP NY. 
I was hoping I would see other companies putting out information on doing this kind of testing and I have seen a few. However, there has not been enough emphasis on mobile application testing. Maybe I am not on the right mailing lists, but many lists contain articles on defending the device itself. I have seen much of the emphasis on MDMs. This is good, but it does not prevent the application from doing a poor job of protecting sensitive data. A couple of questions to ask yourself about securing a mobile application:

- Do you know if the developers, either internal or third party, have put a back door in the application?

- Do you know if your application is storing passwords or keys on its file system in the clear?

- How about someone putting a malicious application on the Google or Apple stores and this application starts collecting this information?

- How would your company's reputation be changed because of this, once it gets out to the press?

At a minimum, have the application tested by someone not involved in the development of the application; this can be internal personnel or an external company. At best, have the application and code reviewed for security flaws. What are your reasons for not doing this?

- We do not know how

- We do not have the manpower

- There is not enough time

These are just excuses. Learn the processes, call a company (such as NetSPI) to do the testing for you, but get it done and get it secured.

[post_title] => Mobile Application Testing - Where is it? [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => mobile-application-testing-where-is-it [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:05:31 [post_modified_gmt] => 2021-04-13 00:05:31 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1175 [menu_order] => 771 [post_type] => post [post_mime_type] => [comment_count] => 2 [filter] => raw ) [9] => WP_Post Object ( [ID] => 1176 [post_author] => 26 [post_date] => 2013-01-21 07:00:41 [post_date_gmt] => 2013-01-21 07:00:41 [post_content] => I just read an article about how Oracle Database suffers from "stealth password cracking vulnerability". This means someone trying to exploit this vulnerability can brute force your passwords and you would never know about it. Oracle fixed this vulnerability in the new version of the authentication protocol but decided not to patch the previous version. Therefore, everyone running Oracle 11G will need to upgrade. Upgrading is going to be an issue for many companies running Oracle 11G since either they cannot or will not upgrade for many reasons. Maybe it is time to rethink this policy in your organization. There is a paper published about the problems in the Oracle Authentication protocol, so your databases are possible being attacked right now. Because many companies do not upgrade, this vulnerability is going to be around for a long time. 
[post_title] => Oracle’s stealth password cracking vulnerability [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => oracles-stealth-password-cracking-vulnerability [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:05:52 [post_modified_gmt] => 2021-04-13 00:05:52 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1176 [menu_order] => 773 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [10] => WP_Post Object ( [ID] => 1135 [post_author] => 26 [post_date] => 2013-01-01 07:00:20 [post_date_gmt] => 2013-01-01 07:00:20 [post_content] => Now that we have come upon the new year, it is time to resolve to statically test (code review) and dynamically (penetration test) test your applications. You may be saying to yourself that we do not need to do one or both of these tests, but why? Applications are being attacked with a passion from all sides, including from the inside of your company. Individually, neither type of test can find all of the vulnerabilities in your applications, so by not doing both, there will be vulnerabilities you have missed. If you do have these tests done (one or both), make sure to fix the problems (vulnerabilities) that are discovered. Do not assume that they will not be taken advantage of at any time in the future. We have often heard "Oh, this application is only available internally, nothing will happen" or even "No one can take advantage of that vulnerability" or even better "We will just wait to fix it when we have time". How can you be sure that no one will find the vulnerability? NetSPI has some smart people, but the bad guys also have some smart people. If we can find the vulnerability, given enough time, someone else will also find it. When they do find them, what will they do with it? Steal your information, steal some money, or even worse, ruin your reputation. 
Will you ever have time to fix these vulnerabilities? These may be put on your list of fixes, but priorities change and marketing may put something on the list that just absolutely has to be added to the application; there goes your time to fix the problems. Now say after me, "I will have my applications code reviewed and pen tested this year." [post_title] => Happy New Year – Have you made your application testing resolution yet? [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => happy-new-year-have-you-made-your-application-testing-resolution-yet [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:05 [post_modified_gmt] => 2021-04-13 00:06:05 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1135 [menu_order] => 776 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [11] => WP_Post Object ( [ID] => 1183 [post_author] => 26 [post_date] => 2012-11-19 07:00:18 [post_date_gmt] => 2012-11-19 07:00:18 [post_content] => Physical artifacts are amazing little (okay sometimes big) things that give us insight into how earlier civilizations lived, worked, and played. These rediscovered relics provide such useful information that we wouldn't otherwise have about such time-frames and people. Virtual artifacts are rather similar, just less tangible. Virtual artifacts run the gamut from computer generated artwork, photographs of family, and other critical files denoting and cataloging our (virtual) lives. However, they also include forgotten or discarded files that were never deleted (of course the true digital archaeologist knows how to dig even deeper to get files not securely deleted). As such, virtual artifacts provide keen insight into a system and the system’s owner. Including such files that we probably would have preferred never to see the light of day again. 
So why should we concern ourselves with these little remnants in our organization’s computer systems? The obvious concern is that of hackers (both internal and external). Virtual artifacts can affect your compliance efforts even without hackers as part of the equation. Depending upon the quantity of information stored in the files (such as data dumps from databases, debug logs, etc.) you may face some potential breach notification issues with significant consequences. These may also undermine all the scoping efforts performed to date, specifically relating to PCI. If those files remain on a file server that is discovered during an assessment, your cardholder data just ballooned beyond the comfort level. During ISO reviews, these artifacts may be as helpful as a hostile witness to your (re)certification case. Alongside these are internal policy violations which may compromise sensitive internal information (employee information such as payroll, etc.). So how do we combat these virtual artifacts within our organization? In essence, where do we start to dig within our virtual landscape? As unfavorable as it may seem, you start at the system most likely to contain such files and just keep going. There are tools that can help automate this process. First think like an attacker; NetSPI’s Assessment Team does just that during penetration tests. They look for unprotected and residual data (the files that are just “left out there”); this includes sensitive data (PII, PHI, cardholder data, passwords, etc.) through generic file system searches. While not overly glamorous, sometimes the simplest method is the best. Then they scour multiple systems at once through spider or crawler tools, and even look at databases and their output. Speaking of, Scott Sutherland has a new blog post that includes finding potentially sensitive information within SQL databases. They find where programmers are leaving their specific output files, debug logs, etc. 
Sometimes the most nondescript system can have that file you don’t want to see the light of day. So how often should you be performing these internal reviews? It partly depends on your organization’s propensity to leave virtual golden idols lying around and how effective your defenses / controls are. If movies have taught us anything, it is that the truly daring individual can overcome most controls if the gains are substantial enough. The best defense is to have guidelines for employees (especially those in positions that generate, or even have the ability to generate, such files) to securely delete files no longer needed (i.e., don’t store the golden idols on pedestals where the sunlight gleams off them like a beacon). For a more realistic example, an application owner or custodian should ensure that their application’s logs that include sensitive information are properly secured behind active access controls, temporary logs are immediately deleted when no longer needed, and the passwords to the system are secured (encrypted), etc. Some may respond and say that the Data Loss Prevention (DLP) tool will catch these, so we are good to go. However, some organizations implement a DLP tool focusing on one aspect only (Network, Storage, or End-Point). Each of these components can be overcome through various means. Blowguns (Storage controls), weight-monitoring pedestals (End-Point controls), and giant boulders closing the opening (Network controls) can all be bypassed by careful and skilled virtual archaeologists. It’s not uncommon for a found stray file to compromise an organization’s compliance efforts. By reviewing your environment proactively you also help make the case that your organization has performed the necessary due diligence should an incident occur. But then the point is to find those files first, leaving nothing for the tomb raiders. 
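The generic file system search mentioned earlier in this post can be sketched roughly as follows. This is a simplified illustration and not the tooling NetSPI's team actually uses: it walks a directory tree, looks for 13 to 16 digit runs, and keeps only those that pass the Luhn check commonly used to validate card numbers. The function names are hypothetical, and a real review would also handle binary formats, archives, and numbers written with spaces or dashes.

```python
import os
import re

# 13 to 16 consecutive digits standing on their own; an assumed, simplified pattern.
CANDIDATE_RE = re.compile(r"\b\d{13,16}\b")


def luhn_ok(number):
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pan_candidates(root):
    """Return (path, line number) pairs for lines holding a Luhn-valid digit run."""
    hits = []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "r", encoding="utf-8", errors="ignore") as fh:
                    for lineno, line in enumerate(fh, 1):
                        if any(luhn_ok(m.group()) for m in CANDIDATE_RE.finditer(line)):
                            hits.append((path, lineno))
            except OSError:
                continue  # unreadable file: skip it rather than abort the scan
    return hits
```

The sketch records only the file path and line number, never the matched digits, so the scan output does not become another stray file full of sensitive data.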
[post_title] => Compliance Impact of Virtual Artifacts [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => compliance-impact-of-virtual-artifacts [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:03 [post_modified_gmt] => 2021-04-13 00:06:03 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1183 [menu_order] => 781 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [12] => WP_Post Object ( [ID] => 1195 [post_author] => 26 [post_date] => 2012-08-09 07:00:41 [post_date_gmt] => 2012-08-09 07:00:41 [post_content] => During PA-DSS audits, NetSPI is often asked about what training options payment application vendors have for developers. These questions are in reference to PA-DSS requirement 5.2.a. This requirement states: Obtain and review software development processes for payment applications (internal and external, and including web-administrative access to product). Verify the process includes training in secure coding techniques for developers, based on industry best practices and guidance. The PCI-Council is working with SANS for a set of courses that PA-DSS vendors can use. These courses include fundamental courses for developers and security staff as well as development language specific courses. There are also courses for senior level developers, tester and managers. An example of one of the courses is Secure Coding for PCI Compliance. This is a two-day course on the OWASP top ten issues and is for a developer with experience in one of the following languages: Perl, PHP, C, C++, Java or Ruby. If you are a payment application vendor needing to start of enhance your training, look at the SANS web site - https://www.sans.org/visatop10/. These should help you get through requirement 5.2.a. Please note, NetSPI is not associated with SANS in any way. 
[post_title] => PA-DSS vendors now have training options [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => pa-dss-vendors-now-have-training-options [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:10 [post_modified_gmt] => 2021-04-13 00:06:10 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1195 [menu_order] => 793 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [13] => WP_Post Object ( [ID] => 1196 [post_author] => 26 [post_date] => 2012-08-02 07:00:18 [post_date_gmt] => 2012-08-02 07:00:18 [post_content] => The PCI Council recently announced a new certification program called the Qualified Integrators and Resellers (QIR) Program. In my opinion this fills a gap that has existed for specific environments which typically reflects negatively on merchants or service providers that purchase off-the-shelf payment application solutions. Using a PA-DSS validated payment application is a requirement for merchants as is using it in a PCI-DSS compliant manner. However, the issue appears when resellers or integrators may not be fully aware of how their implementation plan and methods impact the merchant; the entity ultimately responsible for compliance. The issue then manifests during a QSA lead assessment when it is discovered that the system was not implemented properly per the Implementation Guide (segmentation efforts were negated, etc). As a QSA this is a hard conversation to have with my clients, especially since this usually means a non-compliant assessment and the merchant has to spend additional time or resources to resolve the issue. Now I understand that this certification program is not going to solve everything, but having integrators and resellers that are trained similar to PA-QSA’s and QSA’s just helps everyone involved in the process to be on the same playing field. 
This results with the merchants and service providers reaping the largest slice of Benefit Pie. Questions will come up whether this program will be worth it or if it is going to last since all indications lean towards this program being voluntary. While I get that the PCI Council’s official list of certified integrators and resellers may not be the first place the merchant or service providers go when selecting their next Point of Sale (POS) system (application features versus QIR certified reseller), they can insist that the POS vendor use QIR certified integrators, since in the end it is the merchant or service provider’s compliance status on the line. While still a little scarce since it has not been rolled out just yet, more information on the QIR Program can be found on the PCI Council’s QIR program site at https://www.pcisecuritystandards.org/training/qir_training.php The Council will also be having a webinar August 16 and again on August 29. Additional information can be found at the PCI Council’s Training Webinar page. [post_title] => Filling the Void - QIR Program [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => filling-the-void-qir-program [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:05 [post_modified_gmt] => 2021-04-13 00:06:05 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1196 [menu_order] => 794 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [14] => WP_Post Object ( [ID] => 1197 [post_author] => 26 [post_date] => 2012-07-30 07:00:18 [post_date_gmt] => 2012-07-30 07:00:18 [post_content] => Many organizations have Incident Response plans. They go through the testing and send people through training but when that incident happens and the alarms klaxons begin sounding up and down the hallways the response isn’t what the organization expected. 
This strikes a discordant tone since all audits (be they HIPAA, PCI, etc.) always come back clean as they pertain to Incident Response processes. I’d like to take a brief moment to point out a few pitfalls in going from “on paper” to application, but I’ll leave fixing them to you.

Training

Training is a serious investment for both the organization and the individual. Unless you employ a full-time Incident Response Team this level of training is akin to insurance. Until you need it, it doesn’t seem to really be needed, yet this doesn’t decrease the importance to your organization. Think of them as similar to a volunteer fire department: training is needed even though the volunteers have day jobs. And continued training is critical. Sending people to quality training versus having them read a book from Amazon is like sending that volunteer fire fighter to CPR class versus watching a YouTube video.

Testing

Tabletop testing is fine at first but you should strive to get past the tabletop as quickly as possible since this doesn’t simulate the climate of an actual incident. You want the individuals that went to training to use those tools they learned on, assuming they aren’t using them daily. When using just tabletop exercises you won’t know if everyone is really cut out for Incident Response. Most individuals will agree to participate, but when the rubber meets the road at 3am and the pressure is on to properly contain the incident, are they still willing and able to perform? It’s difficult to admit, but not everyone is cut out for Incident Response. I’m not saying you should get a full simulated environment, but the individuals that went to training should be testing those tools and their skills. Attaching a test scenario to a disaster recovery test is often the most effective and easiest way to minimize additional downtime to production systems. Some tests can be performed that won’t have any impact on the networked environment such as chain-of-custody process testing.

Lessons Learned

While this review often happens, it is sometimes conducted more as an accusation arena, with pointed fingers and tempers flaring. If needed, have a moderator, but keep to the task at hand: determining what happened, what the full impact was, what will prevent the event from happening again, and whether other systems are vulnerable. Write up an agenda of questions and keep people on task. But what’s really important is to follow up on the remediation work. One great way is to plug any remediation efforts into your Risk Management program; the only caveat is that you should keep everyone who attended the Lessons Learned event informed. If the Incident Response team is not involved in the remediation efforts (even if just informed), they may not be aware of configuration changes that are relevant to future Incident Responses. It’s important to practice the Lessons Learned process by running it against your tests as well. As you consider these suggestions, ask yourself: if that event were to occur today, how ready would you really be? [post_title] => Incident Response – When Expectations Go Astray [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => incident-response-when-expectations-go-astray [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:07 [post_modified_gmt] => 2021-04-13 00:06:07 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1197 [menu_order] => 795 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [15] => WP_Post Object ( [ID] => 1202 [post_author] => 26 [post_date] => 2012-06-28 07:00:18 [post_date_gmt] => 2012-06-28 07:00:18 [post_content] => Certain events remind us of the important things; holidays may remind us of loved ones or perhaps how dysfunctional families can be. 
When our favorite (I use this term loosely) word processor crashes, forever losing the most insightful blog document ever written, we realize we should have saved that document more frequently. When such an event happens to others, we can use it as a safe reminder for ourselves. Just like when Word crashes on the neighbor’s system, we can mumble, “should have saved more often…” whilst we hit the save button on the document that’s still titled Document1 on our own computer. In this same vein, let’s take a look at the recent password breaches that befell LinkedIn and eHarmony. Has your organization done anything in response? It may seem odd to ask what your organization did in regard to another’s incident, but this is a great opportunity for some security (re)awareness. Even if you don’t allow access to LinkedIn or eHarmony within your environment, this can be an excuse to engage your company’s employees, because odds are that many of them have an account on at least one of those two sites. The focus of the message shouldn’t be on strong passwords (complexity, maximum age, etc.), although those are still good topics. Password strength and associated requirements are most likely covered already in your annual training programs and policies (if they aren’t, they should be). Instead, discuss a topic that lets you reach the audience on a personal level and that will hopefully have positive benefits within the workplace. For this security awareness notice, center on the use of the same password across multiple locations/sites. The incidents at LinkedIn and eHarmony involve the compromise of the password hashes (the hashes were copied outside of the respective sites). This doesn’t mean that the passwords of all affected users have been recovered (yet), but the hashes can be cracked using brute force methods given enough time. 
Some have made light of the consequences of what can be done with their compromised LinkedIn accounts, but the true threat to users arises if they use the same credentials on multiple sites. To cross the boundary from personal use to the workplace: what if the credentials match those used within your organization? This is where we hope to raise awareness across the company to minimize this potential risk. The message should offer suggestions for using unique credentials on different sites/systems. While this may seem to invite weaker or easily guessed passwords (“eHarmony-password” versus “LinkedIn-password”), there are tools that make it easier for individuals to track their personal passwords while keeping them strong. Tools like KeePass and PasswordSafe are local apps (they can also be put on USB flash drives, but only mention USB flash drives if they are allowed within your environment). There is also a “cloud” service in LastPass. If you decide to mention such tools, it is critical to include a reminder to remember that master password! It’s often difficult to get people to actually pay attention to security alerts, but an event that has personal associations across departmental lines, roles, and levels is hard to pass up. Take advantage of this one while it’s still hot. Hopefully, getting individuals to use unique passwords on different sites will carry over to the passwords used within your organization as well! [post_title] => Passwords: Strength and Longevity vs. 
Uniqueness [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => passwords-strength-and-longevity-vs-uniqueness [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:05:52 [post_modified_gmt] => 2021-04-13 00:05:52 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1202 [menu_order] => 799 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [16] => WP_Post Object ( [ID] => 1208 [post_author] => 26 [post_date] => 2012-05-04 07:00:18 [post_date_gmt] => 2012-05-04 07:00:18 [post_content] => For those who aren’t keeping track, June 30, 2012 is a day to mark on your calendar. Not because of any special anniversaries or birthdays (although if yours does fall on that day, then congratulations!). June 30 is the day that we can add one more validation point to our compliance lists from the PCI Data Security Standard. The testing procedure for requirement 6.2 will transition the assignment of risk rankings to new vulnerabilities from optional to mandatory. And yes, this does impact those filling out a Self-Assessment Questionnaire (SAQ) as well, but only the SAQ D. Specifically, the requirement’s reporting detail reads: If risk ranking is assigned to new vulnerabilities, briefly describe the observed process for assigning a risk ranking, including how critical, highest risk vulnerabilities are ranked as “High”* (Note: the ranking of vulnerabilities is considered a best practice until June 30, 2012, after which it becomes a requirement.) * The reporting detail for “Observe process, action state” is not required until June 30, 2012 Personally, I think this is a good idea as it actually gets you thinking about the impacts of the vulnerabilities specific to your organization. It also allows you to downgrade the vendor-supplied criticality should you have existing controls in place that lessen the likelihood of the vulnerability being exploited. 
A common example is having to apply a patch to a web server on a very restricted network (full Access Control Lists, etc.) because the vendor rated it critical (the patch fixed an exploit for remote code execution). The critical rating is perfectly valid for public-facing websites, but the issue is not as severe for servers that don’t interact with the Internet. For those that don’t currently have an established risk assessment process in place (or those whose process could use some tweaking), the following blog posts might be helpful: “The Annual Struggle with Assess Risk” and “Measuring Security Risks Consistently.” Seems like we planned those other blogs, doesn’t it? [post_title] => The Choice is No Longer Yours - Changes to PCI [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => the-choice-is-no-longer-yours-changes-to-pci [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:15 [post_modified_gmt] => 2021-04-13 00:06:15 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1208 [menu_order] => 805 [post_type] => post [post_mime_type] => [comment_count] => 1 [filter] => raw ) [17] => WP_Post Object ( [ID] => 1212 [post_author] => 26 [post_date] => 2012-03-26 07:00:18 [post_date_gmt] => 2012-03-26 07:00:18 [post_content] => Let’s start with a little exercise. Rate the risk for the following events.

- Going 15 mph over the speed limit.

- Using a public wireless internet connection at the airport.

- Using a third party for payment services.

If you were to ask your neighbor how they would rate them, would their ratings be the same as yours? Go ahead and ask them; I’ll wait. For those not asking, do you think they would be the same? Probably not. Assigning a risk label to an event is too subjective. It’s based upon the person’s experience, profession, and situational awareness. How one person labels a risk most likely will not match how someone else labels it. This is mostly due to the lack of comparable impacts. Assigning impact consistently is manageable with guidance, which may include factors such as:

- Fiscal costs to replace/fix.

- Employee hours needed (will you have to outsource?)

- Damage to reputation (usually more for service providers)

- Harm to individuals (employees and/or patients)

Each of these factors and the threshold from one to the next is organization specific. $10,000 in replacement systems may be fairly significant for one company, while for another it may be the budget for the annual holiday party. Establishing the different thresholds for each of your risk layers will make this a repeatable process. It’s an easier process than most think; just go through the possibilities for each. If this would cost our organization $__________ it would be bad, $____________ is really bad, and $_______________ is “I’m packing up my office right now.” Just keep doing that for all of your impact decision factors. Creating a matrix will help you assign such risk impacts quickly and also ensure that the right people are involved in the process. That’s correct: assigning risks, the impact, and the likelihood shouldn’t be a one-person job; there are too many factors for one person to know. Healthcare is a great example. IT can determine how much it would cost to replace or fix a server, but IT most likely will not be able to properly gauge damage to the organization’s reputation or the potential harm to patients. Having more people with different roles also brings more situational awareness (i.e., threat likelihood) to the risk assignment process. They may be aware of additional controls that could lessen the chance of the risk being realized. The more situational awareness is raised, the more understanding and accuracy your company brings to assessing risks. For example, would the risks you assigned to the examples above change with the following?

- Going 15 mph over the speed limit in a school zone.

- Using a public wireless internet connection at the airport after Defcon.

- Using a third party for payment services that continues to suffer data breaches.

All of the aspects above increase the maturity level of the risk assignments used in Risk Management programs, audits, and everyday operations. They help everyone within the organization speak the same language and ensure that we compare apples to apples. When everyone is on the same page and knows how the risks are being assigned, there also tends to be less resistance to risk-reducing initiatives. This level of organizational “buy-in” is crucial for projects that have a large impact radius and cross many departmental boundaries. So how does this all start? The easiest way is to integrate this process into your Risk Management program and each Risk Assessment. Use the same process for your internal audits, and have external companies either use your process or provide enough information to allow your group to rate their findings again internally. Document the process and the various factors, and make sure all involved know what they are. This will lead to some interesting conversations, but stick to it! Having an established and consistent process turns the arbitrary into the meaningful.
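
The threshold idea from this post can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the factor names, the dollar and hour thresholds, the level labels, and the rule of rating an event by its worst factor are all hypothetical placeholders, and your organization would substitute the values and rules it agrees on.

```python
from bisect import bisect_right
from typing import Mapping

# Hypothetical impact levels, lowest to highest.
LEVELS = ["low", "bad", "really bad", "packing up my office"]

# Hypothetical, organization-specific thresholds for each impact factor.
# A value at or above the Nth threshold lands in level N.
THRESHOLDS = {
    "fiscal_cost_usd": [10_000, 100_000, 1_000_000],
    "employee_hours": [40, 400, 4_000],
}


def rate_factor(factor: str, value: float) -> int:
    """Return the index into LEVELS for a single impact factor."""
    return bisect_right(THRESHOLDS[factor], value)


def rate_impact(estimates: Mapping[str, float]) -> str:
    """Rate an event by its worst factor (an assumed aggregation rule)."""
    worst = max(rate_factor(factor, value) for factor, value in estimates.items())
    return LEVELS[worst]
```

Because the thresholds live in one shared table, two people rating the same estimates get the same label, which is the repeatability the process is after. Likelihood is left out here and would need its own agreed scale.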

[post_title] => Measuring Security Risks Consistently [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => measuring-security-risks-consistently [to_ping] => [pinged] => [post_modified] => 2021-04-13 00:06:08 [post_modified_gmt] => 2021-04-13 00:06:08 [post_content_filtered] => [post_parent] => 0 [guid] => https://netspiblogdev.wpengine.com/?p=1212 [menu_order] => 810 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [18] => WP_Post Object ( [ID] => 1214 [post_author] => 26 [post_date] => 2012-03-13 07:00:54 [post_date_gmt] => 2012-03-13 07:00:54 [post_content] =>Gettin’ Your Internal Security Assessor on…