Josh Weber

WP_Query Object

(

[query] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "47"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "47"

[compare] => LIKE

)

)

)

[query_vars] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "47"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "47"

[compare] => LIKE

)

)

[error] =>

[m] =>

[p] => 0

[post_parent] =>

[subpost] =>

[subpost_id] =>

[attachment] =>

[attachment_id] => 0

[name] =>

[pagename] =>

[page_id] => 0

[second] =>

[minute] =>

[hour] =>

[day] => 0

[monthnum] => 0

[year] => 0

[w] => 0

[category_name] =>

[tag] =>

[cat] =>

[tag_id] =>

[author] =>

[author_name] =>

[feed] =>

[tb] =>

[paged] => 0

[meta_key] =>

[meta_value] =>

[preview] =>

[s] =>

[sentence] =>

[title] =>

[fields] =>

[menu_order] =>

[embed] =>

[category__in] => Array

(

)

[category__not_in] => Array

(

)

[category__and] => Array

(

)

[post__in] => Array

(

)

[post__not_in] => Array

(

)

[post_name__in] => Array

(

)

[tag__in] => Array

(

)

[tag__not_in] => Array

(

)

[tag__and] => Array

(

)

[tag_slug__in] => Array

(

)

[tag_slug__and] => Array

(

)

[post_parent__in] => Array

(

)

[post_parent__not_in] => Array

(

)

[author__in] => Array

(

)

[author__not_in] => Array

(

)

[search_columns] => Array

(

)

[ignore_sticky_posts] =>

[suppress_filters] =>

[cache_results] => 1

[update_post_term_cache] => 1

[update_menu_item_cache] =>

[lazy_load_term_meta] => 1

[update_post_meta_cache] => 1

[nopaging] => 1

[comments_per_page] => 50

[no_found_rows] =>

[order] => DESC

)

[tax_query] => WP_Tax_Query Object

(

[queries] => Array

(

)

[relation] => AND

[table_aliases:protected] => Array

(

)

[queried_terms] => Array

(

)

[primary_table] => wp_posts

[primary_id_column] => ID

)

[meta_query] => WP_Meta_Query Object

(

[queries] => Array

(

[0] => Array

(

[key] => new_authors

[value] => "47"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "47"

[compare] => LIKE

)

[relation] => OR

)

[relation] => OR

[meta_table] => wp_postmeta

[meta_id_column] => post_id

[primary_table] => wp_posts

[primary_id_column] => ID

[table_aliases:protected] => Array

(

[0] => wp_postmeta

)

[clauses:protected] => Array

(

[wp_postmeta] => Array

(

[key] => new_authors

[value] => "47"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

[wp_postmeta-1] => Array

(

[key] => new_presenters

[value] => "47"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

)

[has_or_relation:protected] => 1

)

[date_query] =>

[request] => SELECT wp_posts.ID

FROM wp_posts INNER JOIN wp_postmeta ON ( wp_posts.ID = wp_postmeta.post_id )

WHERE 1=1 AND (

( wp_postmeta.meta_key = 'new_authors' AND wp_postmeta.meta_value LIKE '{ab7f7b09a688a62291ae411855957a9eb8387b6fe99fd3ac978b0602bcfb1e2c}\"47\"{ab7f7b09a688a62291ae411855957a9eb8387b6fe99fd3ac978b0602bcfb1e2c}' )

OR

( wp_postmeta.meta_key = 'new_presenters' AND wp_postmeta.meta_value LIKE '{ab7f7b09a688a62291ae411855957a9eb8387b6fe99fd3ac978b0602bcfb1e2c}\"47\"{ab7f7b09a688a62291ae411855957a9eb8387b6fe99fd3ac978b0602bcfb1e2c}' )

) AND wp_posts.post_type IN ('post', 'webinars') AND ((wp_posts.post_status = 'publish'))

GROUP BY wp_posts.ID

ORDER BY wp_posts.post_date DESC

[posts] => Array

(

[0] => WP_Post Object

(

[ID] => 10631

[post_author] => 47

[post_date] => 2019-07-09 07:00:58

[post_date_gmt] => 2019-07-09 07:00:58

[post_content] => For our client engagements, we are constantly searching for new methods of open source intelligence (OSINT) gathering. This post will specifically focus on targeting client contact collection from a site we have found to be very useful (zoominfo.com) and will describe some of the hurdles we needed to overcome to write automation around site scraping. We will also be demoing and publishing a simple script to hopefully help the community add to their OSINT arsenal.

Reasons for Gathering Employee Names

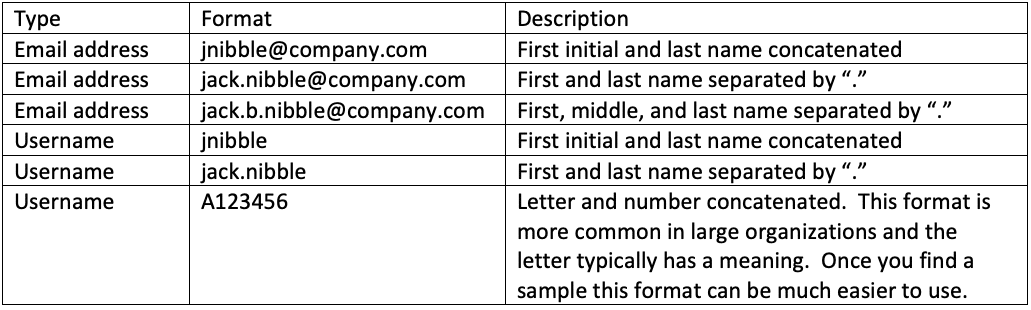

The benefits of employee name collection via OSINT are well-known within the security community. Several awesome automated scrapers already exist for popular sources (*cough* LinkedIn *cough*). A scraper is a common term used to describe a script or program that parses specific information from a webpage, often from an unauthenticated perspective. The employee names scraped (collected/parsed) from OSINT sources can trivially be converted into email addresses and/or usernames if one already knows the target organization's formats. Common formats are pretty intuitive, but below are a few examples.

Name example: Jack B. Nibble

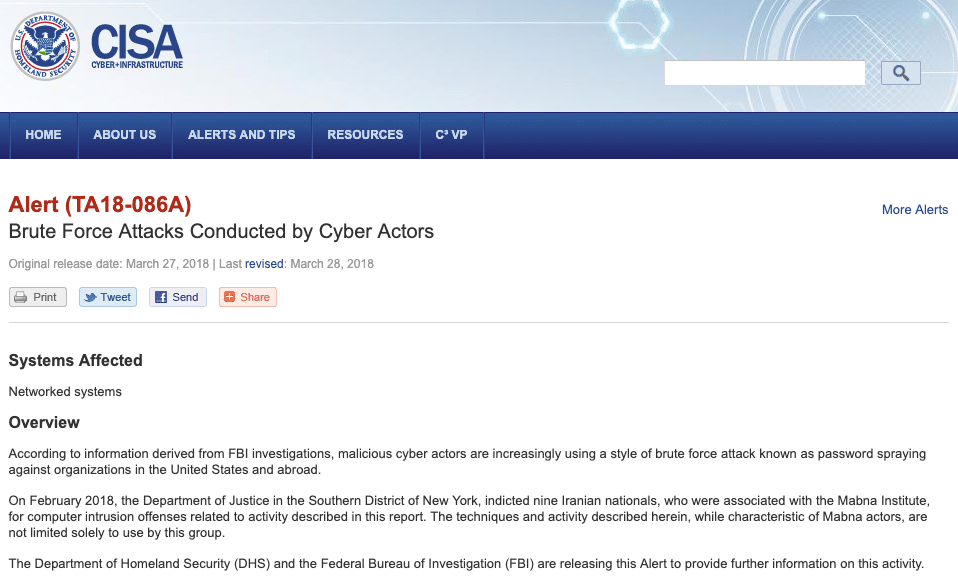
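As a rough illustration of that conversion, the sketch below turns a full name into a handful of candidate addresses. The format names and patterns are assumptions chosen for illustration (they are not taken from our script or from any particular organization), and example.com is a placeholder domain.

```python
# Hypothetical format templates; real organizations may use others.
FORMATS = {
    "first.last": "{first}.{last}",
    "flast": "{f}{last}",
    "firstl": "{first}{l}",
    "first_last": "{first}_{last}",
}


def candidate_emails(full_name, domain):
    """Return {format_name: address} for a name like 'Jack B. Nibble'."""
    # Lowercase each token and strip periods; middle tokens are ignored.
    parts = [p.strip(".").lower() for p in full_name.split()]
    parts = [p for p in parts if p]
    if len(parts) < 2:
        raise ValueError("need at least a first and last name")
    first, last = parts[0], parts[-1]
    fields = {"first": first, "last": last, "f": first[0], "l": last[0]}
    return {
        name: f"{template.format(**fields)}@{domain}"
        for name, template in FORMATS.items()
    }


if __name__ == "__main__":
    for name, address in candidate_emails("Jack B. Nibble", "example.com").items():
        print(f"{name:12} {address}")
```

Swapping the domain for a bare string with no `@` part would give username candidates in the same way.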

On the offensive side, a few of the most popular use cases for these collections of employee names, emails, and usernames are:

- Credential stuffing

- Email phishing

- Password spraying

Issues with Scraping

With the basic primer out of the way, let's talk about scraping in general as we lead into the actual script. The concepts discussed here will be specific to our example but can also be applied to similar scenarios. At their core, all web scrapers need to craft HTTP requests and parse responses for the desired information (Burp Intruder is great for ad-hoc scraping). All of the heavy lifting in our script will be done with Python3 and a few libraries. Some of the most common issues we've run into while scraping OSINT sources are:

- Throttling (temporary slow-downs based on request rate)

- Rate-limiting (temporary blocking based on request rate)

- Full-blown bans (permanent blocking based on request rate)
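To make the "parse responses" half of that description concrete, here is a minimal sketch that uses only the Python standard library. The `class="name"` attribute is a made-up placeholder rather than the real site's markup, so treat this as the shape of the idea and not as what our script does.

```python
from html.parser import HTMLParser


class NameParser(HTMLParser):
    """Collect text inside elements whose class attribute is 'name'.

    The class name is a hypothetical placeholder, not real site markup.
    """

    def __init__(self):
        super().__init__()
        self.names = []
        self._in_name = False

    def handle_starttag(self, tag, attrs):
        # Start capturing when an element carries the placeholder class.
        if dict(attrs).get("class") == "name":
            self._in_name = True

    def handle_endtag(self, tag):
        # Any closing tag ends the capture; good enough for flat markup.
        self._in_name = False

    def handle_data(self, data):
        if self._in_name and data.strip():
            self.names.append(data.strip())


def parse_names(html):
    """Return the list of names found in an HTML string."""
    parser = NameParser()
    parser.feed(html)
    return parser.names
```

A real page will almost certainly need different selectors, and a dedicated HTML library may be more comfortable for anything beyond flat markup.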

Dealing with Throttling and Rate-limiting

To move the script forward, we needed to overcome throttling and rate-limiting. During initial tests, we noticed that simple delays via sleep statements in our script would prevent Cloudflare's I'm Under Attack Mode (IUAM) from kicking in, but only for a short while. Eventually IUAM would take notice of us, so sleep statements alone would not scale well for most of our needs.

Our next thought was to use Tor and simply switch exit nodes each time we noticed a ban. The Tor Stem library was perfect for this, with built-in controls for identifying and programmatically switching exit nodes via Python. Unfortunately, after implementing this idea, we realized zoominfo.com would not accept connections from Tor exit nodes. Another simple option would have been to rotate through VPN services or even VPS instances, but again, this solution would not scale well for our purposes.

I had considered spinning up a headless browser that could handle the site's JavaScript. That way, Cloudflare (and hopefully any of our future targets) would only see a ‘normal’ browser interacting with their content, thus avoiding our throttling issues. While working to implement this idea, I was pointed instead to an awesome Python library called cloudscraper: https://pypi.org/project/cloudscraper/.

The cloudscraper library does all the heavy lifting of interacting with the JavaScript challenges and allows our scraper to continue while avoiding throttling. We are then left only with the issue of potential rate-limiting. To avoid this, our script also has built-in delays in an attempt to appease Cloudflare. We haven't come up with an exact science behind this, but it appears that a delay of sixty seconds every ten requests is enough to avoid rate-limiting, with short random delays sprinkled in between each request for good measure.

python3 zoominfo-scraper.py -z netspi-llc/36078304 -d netspi.com

[*] Requesting page 1

[+] Found! Parsing page 1

[*] Random sleep break to appease CloudFlare

[*] Requesting page 2

[+] Found! Parsing page 2

[*] Random sleep break to appease CloudFlare

[*] Requesting page 3

[+] Site returned status code: 410

[+] We seem to be at the end! Yay!

[+] Printing email address list

adolney@netspi.com

ajones@netspi.com

aleybourne@netspi.com

..output truncated..

[+] Found 49 names!
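The pacing described above can be sketched roughly as follows. This is not the published script: the function names, the 1 to 5 second range for the short delays, and the choice to stop on any non-200 status are all assumptions for illustration. The only third-party call used is `cloudscraper.create_scraper()`, which returns a requests-style session.

```python
import random
import time


def scrape_pages(fetch, parse, sleep=time.sleep, long_pause=60, every=10):
    """Request pages 1, 2, 3, ... until the site stops returning 200.

    fetch(page) must return (status_code, body); parse(body) returns a list.
    Both are supplied by the caller, so this loop knows nothing about the site.
    """
    results = []
    page = 1
    while True:
        status, body = fetch(page)
        if status != 200:
            # The sample run ended on a 410; here any non-200 ends the loop.
            break
        results.extend(parse(body))
        if page % every == 0:
            sleep(long_pause)            # long pause after every `every` requests
        else:
            sleep(random.uniform(1, 5))  # short random pause (range is a guess)
        page += 1
    return results


def cloudscraper_fetch(url_template):
    """Build a fetch() backed by cloudscraper (pip install cloudscraper)."""
    import cloudscraper  # imported lazily so the loop has no hard dependency

    session = cloudscraper.create_scraper()

    def fetch(page):
        response = session.get(url_template.format(page=page))
        return response.status_code, response.text

    return fetch
```

A call might look like `scrape_pages(cloudscraper_fetch("https://example.com/people?page={page}"), my_parser)`, where the URL is a placeholder and `my_parser` is whatever function extracts names from a page body. Passing `fetch` and `sleep` in as arguments keeps the timing logic easy to exercise without touching the network.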

Preventing Automated Scrapers

Vendors or website owners concerned about automated scraping of their content should consider placing any information they deem ‘sensitive’ behind authentication walls. The goal of Cloudflare’s IUAM is to prevent DDoS attacks (which we are vehemently not attempting), with the roadblocks it brings to automated scrapers being just an added bonus. Roadblocks such as this should not be considered a safeguard against automated scrapers that play by the rules.

Download zoominfo-scraper.py

This script is not intended to be all-encompassing for your OSINT gathering needs, but rather a minor piece that we have not had a chance to bolt on to a broader toolset. We are presenting it to the community because we have found a lot of value with it internally and hope to help further advance the security community. Feel free to integrate these concepts or even the script into your own toolsets. The script and full instructions for use can be found here: https://github.com/NetSPI/HTTPScrapers/tree/master/Zoominfo

[post_title] => Collecting Contacts from zoominfo.com

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => collecting-contacts-from-zoominfo-com

[to_ping] =>

[pinged] =>

[post_modified] => 2021-06-08 21:57:33

[post_modified_gmt] => 2021-06-08 21:57:33

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://netspiblogdev.wpengine.com/?p=10631

[menu_order] => 559

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

)

[post_count] => 1

[current_post] => -1

[before_loop] => 1

[in_the_loop] =>

[post] => WP_Post Object

(

[ID] => 10631

[post_author] => 47

[post_date] => 2019-07-09 07:00:58

[post_date_gmt] => 2019-07-09 07:00:58

[post_content] => For our client engagements, we are constantly searching for new methods of open source intelligence (OSINT) gathering. This post will specifically focus on targeting client contact collection from a site we have found to be very useful (zoominfo.com) and will describe some of the hurdles we needed to overcome to write automation around site scraping. We will also be demoing and publishing a simple script to hopefully help the community add to their OSINT arsenal.

Reasons for Gathering Employee Names

The benefits of employee name collection via OSINT are well-known within the security community. Several awesome automated scrapers already exist for popular sources (*cough* LinkedIn *cough*). A scraper is a common term used to describe a script or program that parses specific information from a webpage, often from an unauthenticated perspective. The employee names scraped (collected/parsed) from OSINT sources can trivially be converted into email addresses and/or usernames if one already knows the target organization's formats. Common formats are pretty intuitive, but below are a few examples.

Name example: Jack B. Nibble

On the offensive side, a few of the most popular use cases for these collections of employee names, emails, and usernames are:

- Credential stuffing

- Email phishing

- Password spraying

Issues with Scraping

With the basic primer out of the way, let's talk about scraping in general as we lead into the actual script. The concepts discussed here will be specific to our example but can also be applied to similar scenarios. At their core, all web scrapers need to craft HTTP requests and parse responses for the desired information (Burp Intruder is great for ad-hoc scraping). All of the heavy lifting in our script will be done with Python3 and a few libraries. Some of the most common issues we've run into while scraping OSINT sources are:

- Throttling (temporary slow-downs based on request rate)

- Rate-limiting (temporary blocking based on request rate)

- Full-blown bans (permanent blocking based on request rate)

Dealing with Throttling and Rate-limiting

To move the script forward, we needed to overcome throttling and rate-limiting. During initial tests, we noticed that simple delays via sleep statements in our script would prevent Cloudflare's I'm Under Attack Mode (IUAM) from kicking in, but only for a short while. Eventually IUAM would take notice of us, so sleep statements alone would not scale well for most of our needs.

Our next thought was to use Tor and simply switch exit nodes each time we noticed a ban. The Tor Stem library was perfect for this, with built-in controls for identifying and programmatically switching exit nodes via Python. Unfortunately, after implementing this idea, we realized zoominfo.com would not accept connections from Tor exit nodes. Another simple option would have been to rotate through VPN services or even VPS instances, but again, this solution would not scale well for our purposes.

I had considered spinning up a headless browser that could handle the site's JavaScript. That way, Cloudflare (and hopefully any of our future targets) would only see a ‘normal’ browser interacting with their content, thus avoiding our throttling issues. While working to implement this idea, I was pointed instead to an awesome Python library called cloudscraper: https://pypi.org/project/cloudscraper/.

The cloudscraper library does all the heavy lifting of interacting with the JavaScript challenges and allows our scraper to continue while avoiding throttling. We are then left only with the issue of potential rate-limiting. To avoid this, our script also has built-in delays in an attempt to appease Cloudflare. We haven't come up with an exact science behind this, but it appears that a delay of sixty seconds every ten requests is enough to avoid rate-limiting, with short random delays sprinkled in between each request for good measure.

python3 zoominfo-scraper.py -z netspi-llc/36078304 -d netspi.com

[*] Requesting page 1

[+] Found! Parsing page 1

[*] Random sleep break to appease CloudFlare

[*] Requesting page 2

[+] Found! Parsing page 2

[*] Random sleep break to appease CloudFlare

[*] Requesting page 3

[+] Site returned status code: 410

[+] We seem to be at the end! Yay!

[+] Printing email address list

adolney@netspi.com

ajones@netspi.com

aleybourne@netspi.com

..output truncated..

[+] Found 49 names!

Preventing Automated Scrapers

Vendors or website owners concerned about automated scraping of their content should consider placing any information they deem ‘sensitive’ behind authentication walls. The goal of Cloudflare’s IUAM is to prevent DDoS attacks (which we are vehemently not attempting), with the roadblocks it brings to automated scrapers being just an added bonus. Roadblocks such as this should not be considered a safeguard against automated scrapers that play by the rules.